Introduction

Snowflake Architecture: Revolutionizing Data Warehousing for Unmatched Scalability and Elasticity

Snowflake's architecture is setting new standards in data warehousing by decoupling the storage and compute layers. Because the two layers scale independently, the design offers a high degree of scalability and elasticity, meeting the growing demand for flexible and efficient data warehouse solutions.

At its core, Snowflake keeps ingested data in a single centralized storage layer that any number of compute clusters, called virtual warehouses, can query. Because of this separation, storage costs are managed independently of compute, and compute can be scaled up or down to match the workload, whether batch processing or real-time analytics.
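To make the separation concrete, the following Python sketch generates CREATE WAREHOUSE statements for two independent virtual warehouses, one for batch loading and one for BI dashboards, both of which would query the same stored data. The warehouse names, sizes, and timeouts are hypothetical; WAREHOUSE_SIZE, AUTO_SUSPEND, AUTO_RESUME, MIN_CLUSTER_COUNT, and MAX_CLUSTER_COUNT are Snowflake warehouse parameters, and the multi-cluster settings assume Enterprise Edition or higher.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WarehouseSpec:
    """Hypothetical compute settings for one workload; storage is not part of it."""
    name: str
    size: str                # e.g. 'XSMALL', 'MEDIUM', 'LARGE'
    auto_suspend_s: int      # seconds of inactivity before the warehouse suspends
    max_clusters: int = 1    # >1 enables multi-cluster scaling (Enterprise Edition)


def create_warehouse_sql(spec: WarehouseSpec) -> str:
    """Render a CREATE WAREHOUSE statement for one workload."""
    parts = [
        f"CREATE WAREHOUSE IF NOT EXISTS {spec.name}",
        f"WAREHOUSE_SIZE = '{spec.size}'",
        f"AUTO_SUSPEND = {spec.auto_suspend_s}",
        "AUTO_RESUME = TRUE",
    ]
    if spec.max_clusters > 1:
        parts += ["MIN_CLUSTER_COUNT = 1", f"MAX_CLUSTER_COUNT = {spec.max_clusters}"]
    return " ".join(parts)


# Two workloads, two warehouses, one copy of the data.
workloads = [
    WarehouseSpec("batch_wh", "LARGE", auto_suspend_s=60),
    WarehouseSpec("bi_wh", "SMALL", auto_suspend_s=300, max_clusters=3),
]
statements = [create_warehouse_sql(w) for w in workloads]
for stmt in statements:
    print(stmt + ";")
```

Because storage is not part of either definition, resizing one warehouse later (for example with ALTER WAREHOUSE ... SET WAREHOUSE_SIZE) affects neither the other warehouse nor the data.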

Snowflake also provides internal stages: storage locations inside Snowflake where files are uploaded before being loaded into tables. Staging separates file transfer from the load itself, which streamlines ingestion and helps preserve data integrity during a migration, since a failed load can be retried from the staged files. Structured and semi-structured files can be staged the same way.

The effectiveness of this architecture shows in Snowflake's growth: its quarterly revenue rose from $334.4 million to $734.2 million in two years, reflecting demand for platforms that can reliably handle the velocity, variety, and volume of modern data.

As industries pivot toward more data-intensive operations, the limitations of traditional data warehouses become apparent. Snowflake's customizable suite of APIs adds to its adaptability, serving a diverse range of applications and industries that demand real-time data processing and near-perfect uptime.

This shift toward Snowflake is less a passing trend than a fundamental transformation of the data industry: real-time access to data has become a necessity rather than a luxury for businesses that want to stay competitive and agile in a fast-paced market.


Getting Started with Snowflake

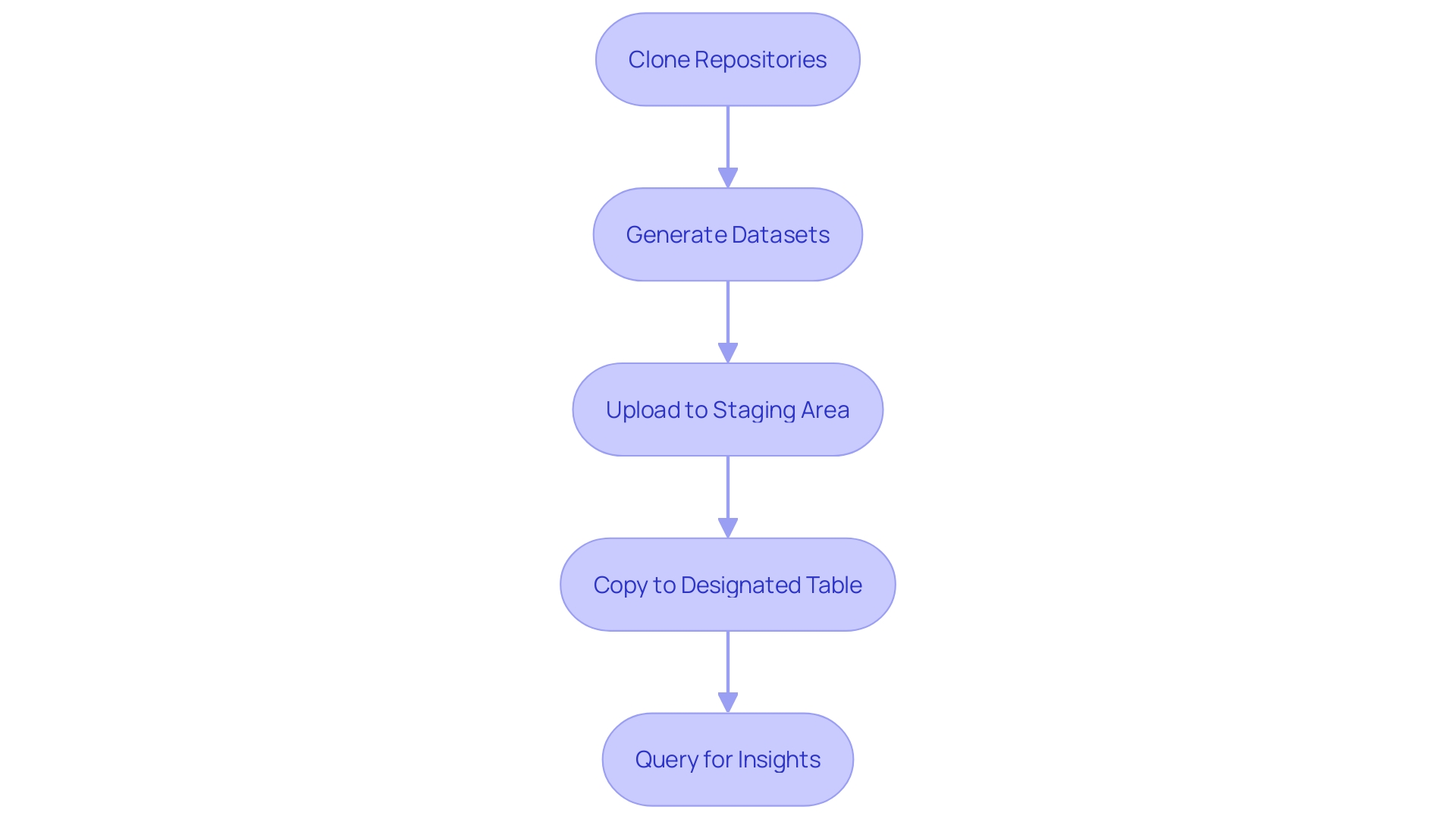

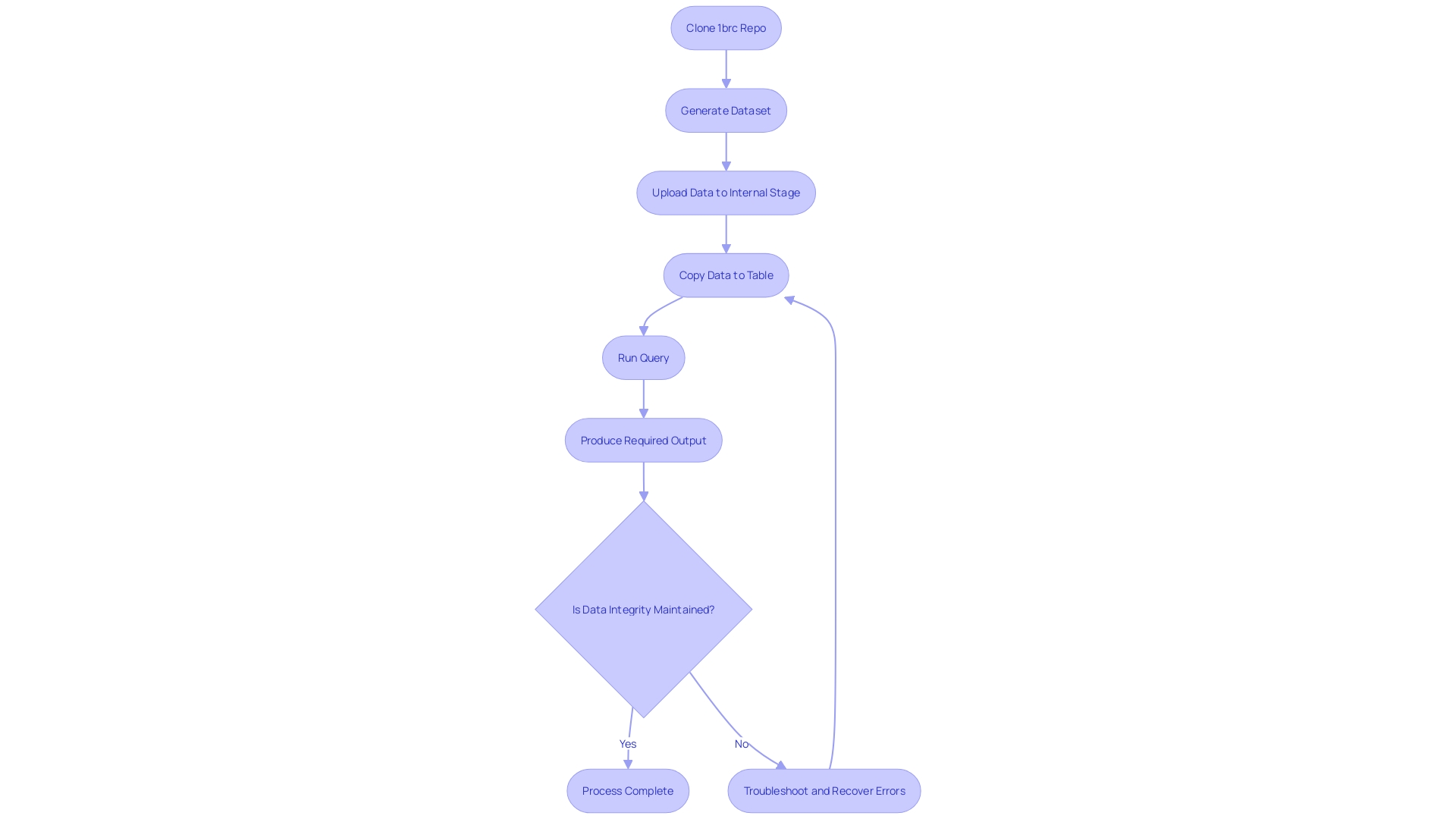

Getting started with Snowflake calls for a basic understanding of its components and a step-by-step approach to setting up your environment. A typical first exercise is to clone a sample repository, generate a dataset, and upload the files to an internal stage. The stage helps protect data integrity and simplifies error recovery: the uploaded files stay in the stage, so a load that fails can be rerun without uploading them again. Once the data is staged, you copy it into a target table, where it is ready for querying and analysis.
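The steps above can be sketched as the SQL a setup script might send. This minimal Python sketch only builds the statements; it assumes a target table that already matches the CSV's columns, and the file, stage, and table names are hypothetical. PUT has to be run through SnowSQL or a Snowflake driver rather than from a web worksheet.

```python
from pathlib import PurePosixPath


def stage_and_load_sql(local_file: str, stage: str, table: str) -> list[str]:
    """Build the SQL for the internal-stage workflow: create stage, upload, copy into table."""
    staged_name = PurePosixPath(local_file).name + ".gz"  # PUT gzips files when AUTO_COMPRESS=TRUE
    return [
        # A named internal stage: Snowflake manages the underlying storage.
        f"CREATE STAGE IF NOT EXISTS {stage}",
        # Upload the local file to the stage (via SnowSQL or a driver, not a web worksheet).
        f"PUT file://{local_file} @{stage} AUTO_COMPRESS=TRUE",
        # Load the staged file. If the load aborts, the file is still staged and can be reloaded.
        f"COPY INTO {table} FROM @{stage} FILES = ('{staged_name}') "
        "FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1) ON_ERROR = 'ABORT_STATEMENT'",
    ]


for stmt in stage_and_load_sql("/tmp/measurements.csv", "measurements_stage", "measurements"):
    print(stmt + ";")
```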

Snowflake's user interface covers common tasks, and its robust set of APIs lets teams build interfaces tailored to the specific needs of their own applications. As generative AI gains traction, a solid data strategy becomes unavoidable, and Snowflake's UI and API capabilities help put one into practice.

Snowflake's data loading is also built to handle complexity. In a migration from PostgreSQL, for example, Snowflake's loading features can be combined with open-source tools and custom scripts, using techniques such as parallel processing, efficient reading of Postgres's write-ahead log (WAL) to capture changes, data compression, and batch loading. These optimizations reduce compute, network, and storage costs while keeping the data flow efficient and consistent.
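As a minimal illustration of two of these techniques, batch loading and compression, the sketch below splits rows into fixed-size batches and gzip-compresses each batch as CSV, using a thread pool to process batches concurrently. It uses only the Python standard library; the batch size, worker count, and sample rows are hypothetical, and reading the Postgres WAL is not shown. Each resulting file could then be staged with PUT and loaded with COPY INTO.

```python
import csv
import gzip
import io
from concurrent.futures import ThreadPoolExecutor


def to_gzip_csv(rows: list[tuple]) -> bytes:
    """Serialize one batch of rows as CSV and gzip-compress it."""
    buf = io.StringIO()
    csv.writer(buf).writerows(rows)
    return gzip.compress(buf.getvalue().encode("utf-8"))


def split_batches(rows: list[tuple], size: int) -> list[list[tuple]]:
    """Cut the row list into consecutive batches of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def prepare_batches(rows: list[tuple], batch_size: int = 10_000, workers: int = 4) -> list[bytes]:
    """Compress batches concurrently; pool.map keeps the original batch order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(to_gzip_csv, split_batches(rows, batch_size)))


rows = [(i, f"sensor-{i % 5}", i * 0.5) for i in range(25_000)]
files = prepare_batches(rows)
raw_size = sum(len(",".join(map(str, r))) + 2 for r in rows)  # CSV bytes incl. "\r\n"
print(len(files), "files;", raw_size, "raw bytes ->", sum(map(len, files)), "compressed")
```

Larger batches mean fewer files to track; smaller ones make a failed load cheaper to retry. The right size is workload-specific and worth measuring.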

Logging into Snowflake

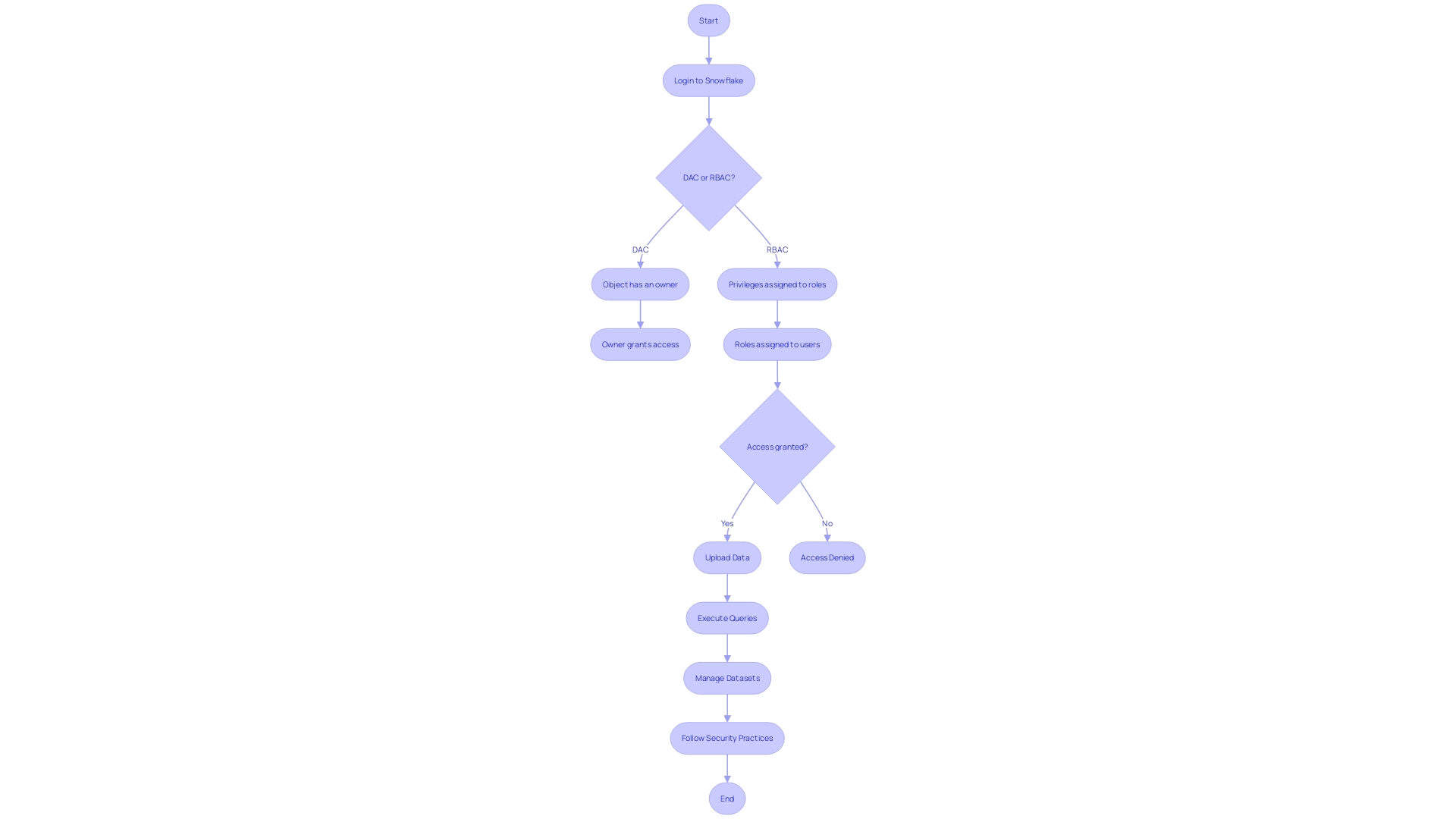

After setting up your account, the next step is logging in to your Snowflake environment. The login process is straightforward, and it is backed by strong security measures that protect the integrity and privacy of your data. Snowflake combines two access control models. Under discretionary access control (DAC), every object, such as a database or schema, has an owner (a role) that can grant access to it. Under role-based access control (RBAC), privileges on objects are granted to roles, and roles are granted to users or to other roles. The resulting role hierarchy gives fine-grained control over who can see or change data.
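The inheritance rule behind the role hierarchy can be modeled in a few lines of Python: a role holds its own privileges plus those of every role granted to it. This is a simplified sketch, not Snowflake's implementation; the role and object names are hypothetical, and the comments show the GRANT statements each call corresponds to.

```python
from collections import defaultdict


class RoleGraph:
    """Toy model of RBAC privilege inheritance through a role hierarchy."""

    def __init__(self):
        self.privileges = defaultdict(set)  # role -> {(privilege, object)}
        self.children = defaultdict(set)    # role -> roles granted to it

    def grant_privilege(self, privilege: str, obj: str, role: str) -> None:
        # Mirrors: GRANT <privilege> ON <obj> TO ROLE <role>
        self.privileges[role].add((privilege, obj))

    def grant_role(self, child: str, parent: str) -> None:
        # Mirrors: GRANT ROLE <child> TO ROLE <parent>; parent inherits child's privileges
        self.children[parent].add(child)

    def effective_privileges(self, role: str) -> set:
        """Walk the hierarchy below `role`, collecting every inherited privilege."""
        seen, stack, result = set(), [role], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue  # guards against revisiting a role
            seen.add(current)
            result |= self.privileges[current]
            stack.extend(self.children[current])
        return result


g = RoleGraph()
g.grant_privilege("USAGE", "DATABASE sales", "analyst")
g.grant_privilege("SELECT", "TABLE sales.public.orders", "analyst")
g.grant_privilege("INSERT", "TABLE sales.public.orders", "loader")
g.grant_role("analyst", "manager")  # GRANT ROLE analyst TO ROLE manager
print(sorted(g.effective_privileges("manager")))
```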

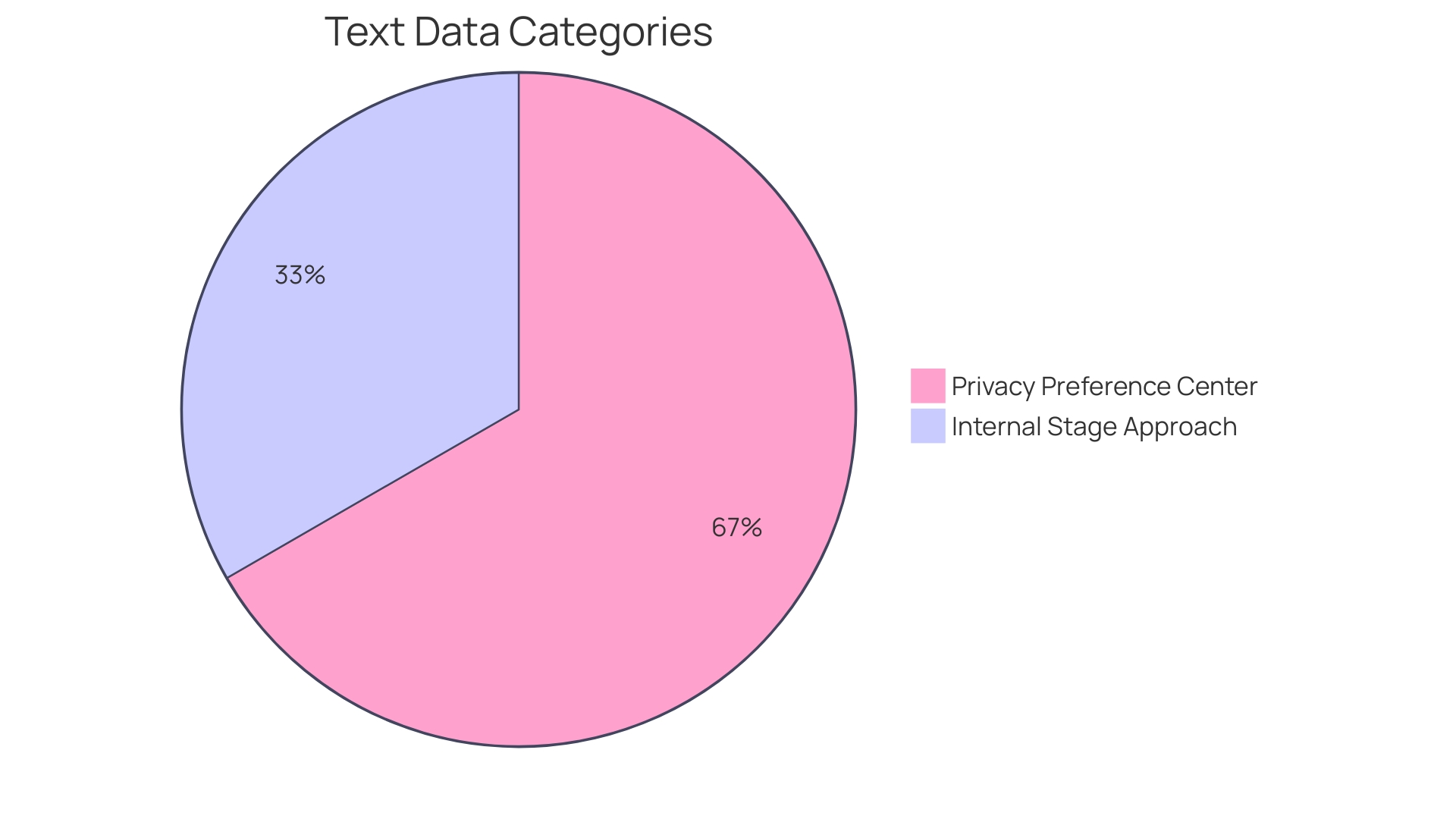

Once you are logged in, loading data starts with internal stages: clone a repository or otherwise obtain a dataset, upload it to a stage, and load it into a table from the web interface. From there, you can run queries and manage your datasets. Loading through a stage streamlines data handling and makes error recovery easier, because the staged files remain available if a load needs to be repeated.

Recent security breaches reported in the news make it more important than ever to follow best practices for securing your account. Key recommendations include enabling multi-factor authentication for human users and moving service users from password-based authentication to key-pair authentication or external OAuth, which reduces the risk of credential compromise and unauthorized access. Snowflake documents further security features and best practices in its white papers and guides.
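Key-pair authentication works by registering a public key on the user and keeping the private key with the client. The sketch below prepares the ALTER USER ... SET RSA_PUBLIC_KEY statement from a PEM file's contents, dropping the BEGIN/END delimiter lines, which the statement should not include. The user name is hypothetical and the key is a truncated placeholder; the OpenSSL commands in the comments are one way to generate a key pair.

```python
def rsa_public_key_sql(user: str, pem: str) -> str:
    """Turn a PEM public key into ALTER USER ... SET RSA_PUBLIC_KEY, without the PEM delimiters."""
    lines = (line.strip() for line in pem.strip().splitlines())
    body = "".join(line for line in lines if line and not line.startswith("-----"))
    return f"ALTER USER {user} SET RSA_PUBLIC_KEY='{body}'"


# The key pair itself can be generated with OpenSSL, for example:
#   openssl genrsa 2048 | openssl pkcs8 -topk8 -inform PEM -out rsa_key.p8 -nocrypt
#   openssl rsa -in rsa_key.p8 -pubout -out rsa_key.pub
# Keep rsa_key.p8 (the private key) out of version control.
# Placeholder PEM (truncated, not a real key):
pem = """-----BEGIN PUBLIC KEY-----
MIIBIjANBgkqhkiG9w0BAQEFAAOC
AQ8AMIIBCgKCAQEA
-----END PUBLIC KEY-----"""
print(rsa_public_key_sql("etl_service", pem))
```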

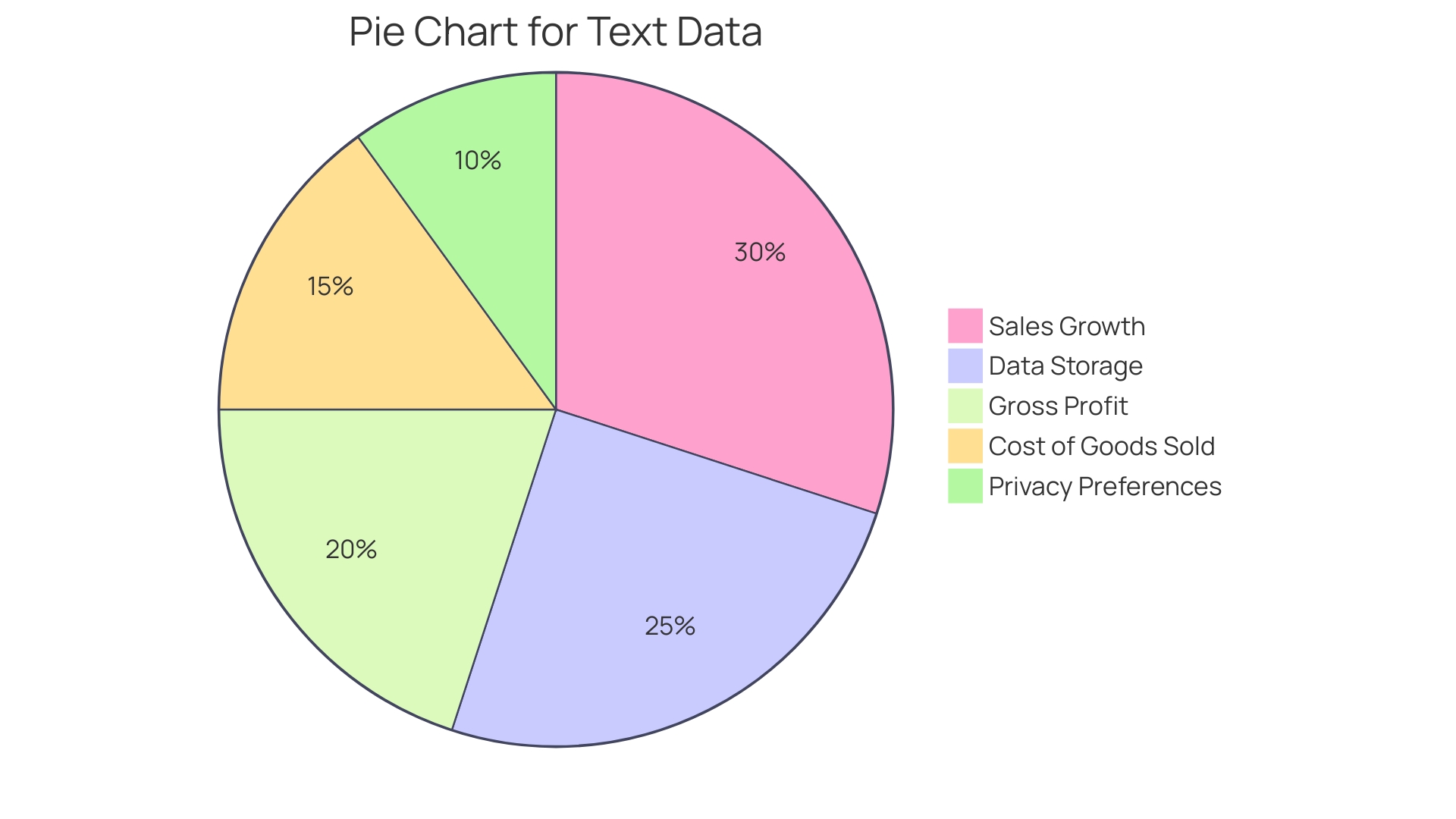


Overall, accessing and managing your Snowflake environment is designed to be user-friendly and secure. It is built to support the needs of organizations that prioritize data security and efficient access control.

Snowsight and SnowSQL Interfaces

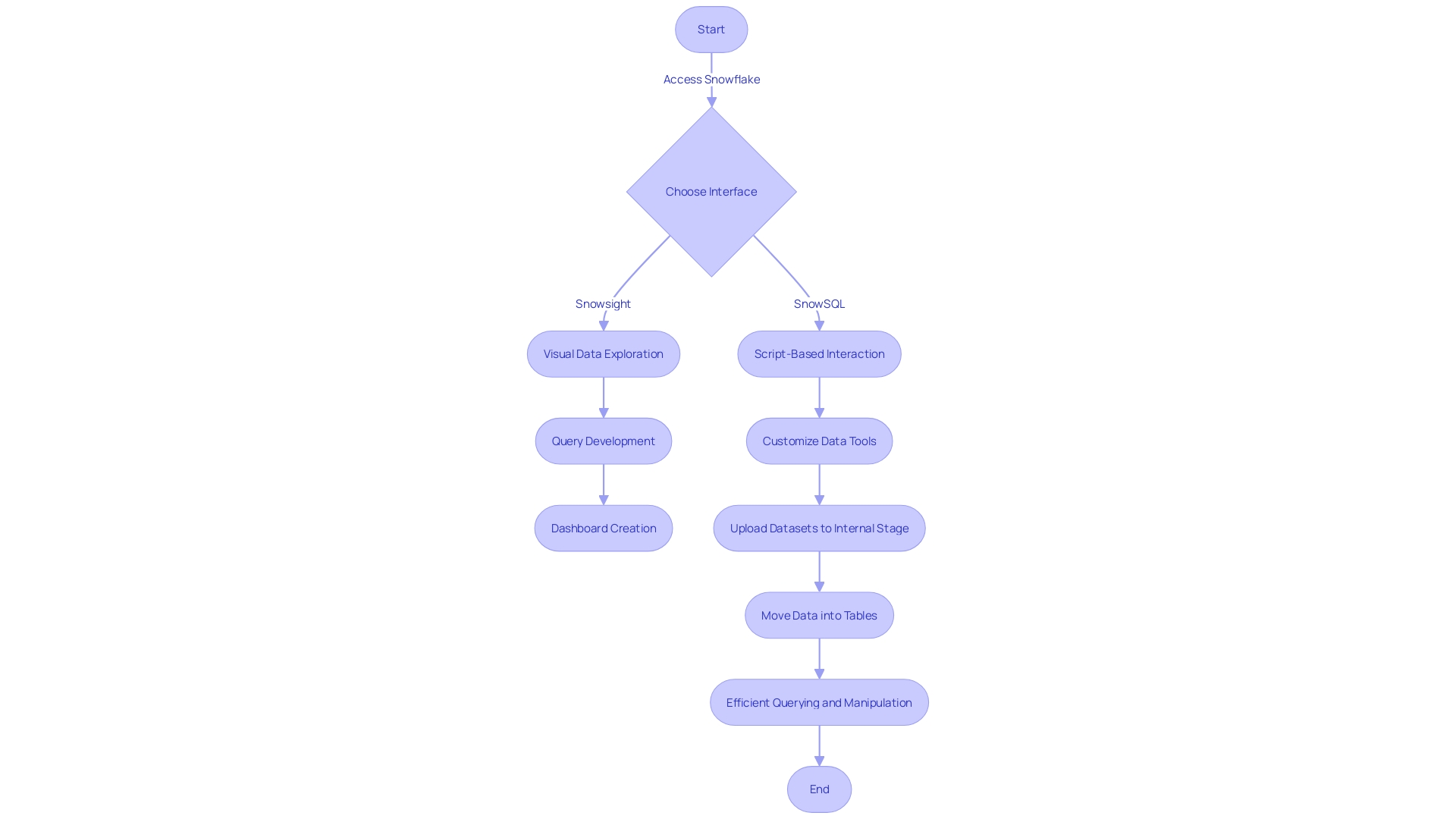

The Snowflake platform is equipped with two robust interfaces, Snowsight and SnowSQL, each designed to enhance the user experience and optimize data management. Snowsight, a web-based user interface, offers a visually intuitive environment for managing and analyzing your data. It provides a suite of tools for visual data exploration, query development, and dashboarding capabilities, making it an indispensable tool for data-driven decision-making processes. On the other hand, SnowSQL, a command-line client, allows for a more traditional, script-based interaction with Snowflake. It is particularly favored by users who require automation or prefer to integrate Snowflake operations into their existing scripts and workflows.
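For the script-based workflows SnowSQL is suited to, a pipeline step might invoke it non-interactively. This Python sketch builds such a command line and wraps it in subprocess.run; the connection name and script file are hypothetical, and it assumes a named connection has been defined in the SnowSQL configuration file. The -c, -f, and -o flags and the exit_on_error, friendly, and output_format options are SnowSQL's own.

```python
import shlex
import subprocess


def snowsql_command(connection: str, script: str) -> list[str]:
    """Build a non-interactive SnowSQL invocation for a scripted pipeline step."""
    return [
        "snowsql",
        "-c", connection,            # named connection defined in the SnowSQL config file
        "-f", script,                # execute the SQL statements in this file
        "-o", "exit_on_error=true",  # stop at the first failing statement
        "-o", "friendly=false",      # suppress the startup and exit messages
        "-o", "output_format=csv",   # machine-readable results for downstream tools
    ]


def run_snowsql(connection: str, script: str) -> str:
    """Run SnowSQL and return its stdout; raises CalledProcessError on a non-zero exit."""
    result = subprocess.run(
        snowsql_command(connection, script), check=True, capture_output=True, text=True
    )
    return result.stdout


print(shlex.join(snowsql_command("dev", "load_measurements.sql")))
```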

Snowflake's range of interfaces serves different user preferences and operational requirements. No single UI fits every use case, and Snowflake's APIs allow a high degree of personalization for targeted applications. This flexibility matters to organizations that want to tailor their data tools to their own business goals.

In practice, the internal stage approach exemplifies Snowflake's adaptability. Users can upload datasets to an internal stage and seamlessly move data into tables, allowing for efficient querying and data manipulation. This process underscores Snowflake's capacity to handle complex data operations, which is invaluable for tasks such as time-series forecasting where historical data is leveraged to predict future trends.

Moreover, recent changes in the data technology landscape, such as Snowplow's licensing updates, show why organizations need to stay agile and informed about their data tooling options. A customizable platform such as Snowflake gives businesses a strategic advantage in keeping flexibility and control over their data infrastructure.

Creating Databases, Schemas, and Tables

Working with Snowflake starts with its data structuring elements: databases, schemas, and tables. A database contains schemas, and a schema contains tables and other objects such as views, so every table has a fully qualified name of the form database.schema.table. To build a data foundation, you upload the dataset to an internal stage and then transfer it into a table, where it can be queried for actionable insights. This movement is more than a simple transfer: it has to preserve data integrity, allow recovery from errors, and keep the data consistent.
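The three-level hierarchy can be made concrete with the DDL that creates it. This Python sketch simply assembles the statements using fully qualified names; the database, schema, table, and column names are hypothetical.

```python
def create_hierarchy_sql(database: str, schema: str, table: str,
                         columns: list[tuple[str, str]]) -> list[str]:
    """Build DDL for database -> schema -> table, using fully qualified names."""
    column_defs = ", ".join(f"{name} {sql_type}" for name, sql_type in columns)
    return [
        f"CREATE DATABASE IF NOT EXISTS {database}",
        f"CREATE SCHEMA IF NOT EXISTS {database}.{schema}",
        f"CREATE TABLE IF NOT EXISTS {database}.{schema}.{table} ({column_defs})",
    ]


ddl = create_hierarchy_sql(
    "weather", "raw", "measurements",
    [("station", "VARCHAR"), ("measured_at", "TIMESTAMP_NTZ"), ("temperature_c", "NUMBER(5, 2)")],
)
print(";\n".join(ddl) + ";")
```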

In the internal stage approach, for example, you clone a repository, generate the dataset, upload it to an internal stage, and then copy it into a table, where queries produce the outputs you need. Underneath this workflow, a database is a system built for fast, efficient, and reliable access to structured information, and each table exists both as a logical object that users query and as physical data in storage.

Getting the most out of Snowflake also means applying the core principles of data modeling to turn raw, sprawling data into a structure that supports analysis. Hands-on practice with different modeling techniques helps in building an effective cloud data warehouse. The structure of the project itself matters too: setting up an organized folder structure from the start, such as the one the dbt init command generates for a dbt project, is a wise first step.

Beyond setup, schema design deserves the same care as a product being prepared for launch. Decisions about data collection, ingestion, and downstream access determine whether the database can handle its workload without production problems. A key practice is to minimize backfills and updates, particularly for append-only workloads such as time-series data.
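For append-only time-series data, one common way to avoid updates and backfills is to load only rows newer than a high-water mark, the latest timestamp already loaded. The Python sketch below assumes each source row carries a timestamp and that late-arriving rows older than the watermark do not occur (they would be skipped); the data is hypothetical.

```python
from datetime import datetime


def rows_to_append(rows, watermark):
    """Keep only rows newer than the watermark and return the advanced watermark.

    rows: iterable of (timestamp, value) tuples from the source.
    watermark: latest timestamp already loaded into the target table.
    """
    new_rows = sorted(r for r in rows if r[0] > watermark)
    new_watermark = new_rows[-1][0] if new_rows else watermark
    return new_rows, new_watermark


source = [
    (datetime(2024, 1, 1, 0), 10.0),
    (datetime(2024, 1, 1, 1), 11.5),
    (datetime(2024, 1, 1, 2), 12.0),
]
batch, wm = rows_to_append(source, watermark=datetime(2024, 1, 1, 0))
print(len(batch), "new rows; next watermark:", wm)
```

Existing rows are never rewritten; each run appends only the new slice, and rerunning with the advanced watermark returns nothing.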

The conversation around database schema design isn't complete without addressing the scalability challenges. For instance, partitioning large PostgreSQL databases is a complex decision that depends heavily on the specific use case. The guiding principle is to test rigorously to determine the optimal partition size for your scenario.

As Snowflake's popularity grows, shown by its quarterly revenue rising from $334.4 million to $734.2 million over two years, designing efficient, scalable database structures becomes even more important. The task is a balancing act: optimizing compute, network, and warehouse costs while accepting the necessary trade-offs. Following these guidelines helps you build a Snowflake database that is powerful, cost-effective, and tailored to your organization's data needs.

Using Worksheets for Queries and DML/DDL Operations

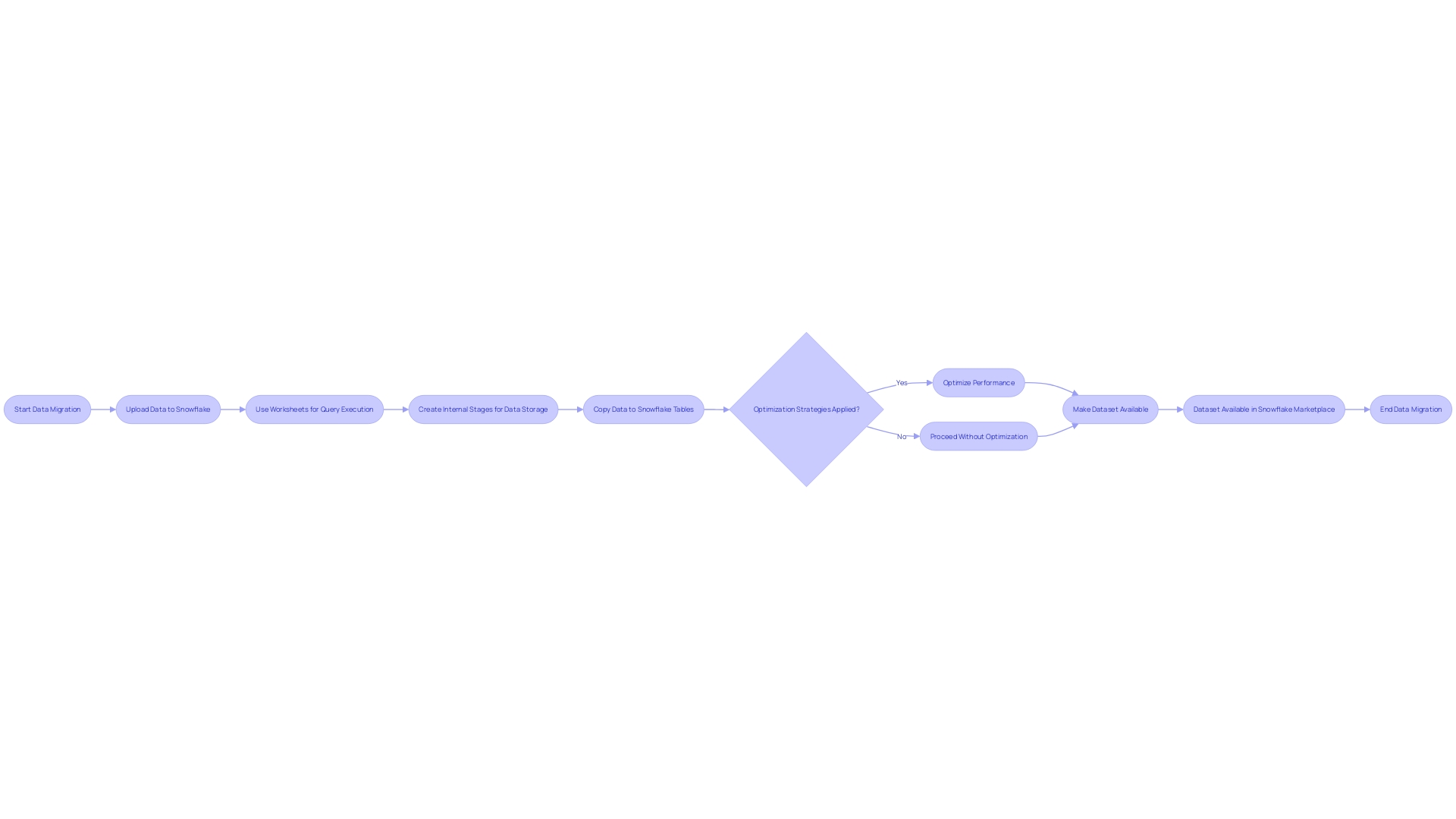

Snowflake's web interface includes worksheets, where you write and run SQL: Data Manipulation Language (DML) statements that change data, and Data Definition Language (DDL) statements that define or alter objects. Worksheets support the whole path from uploaded data to transformed data. The internal stage approach to data migration is a practical example: after cloning a repository and generating a dataset, users upload the data to an internal stage, copy it into a table, and then run queries against it. This workflow shows how Snowflake's features help keep data consistent and intact throughout a migration.
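To show the difference between DDL and DML statements in a worksheet-style script, the sketch below runs one with Python's built-in sqlite3 module. SQLite stands in for Snowflake only so the example runs locally: the statements are standard SQL, but Snowflake's dialect and types differ, and the table is hypothetical.

```python
import sqlite3

# A worksheet-style script mixing DDL (structure) and DML (data) statements.
WORKSHEET = """
-- DDL: define a table
CREATE TABLE measurements (station TEXT, reading REAL);
-- DML: insert and modify rows
INSERT INTO measurements VALUES ('A', 20.5), ('A', 22.5), ('B', 18.0);
UPDATE measurements SET reading = 19.0 WHERE station = 'B';
-- DDL: evolve the schema; existing rows receive the default value
ALTER TABLE measurements ADD COLUMN unit TEXT DEFAULT 'C';
"""

conn = sqlite3.connect(":memory:")
conn.executescript(WORKSHEET)  # runs every statement in order
rows = conn.execute(
    "SELECT station, AVG(reading), MAX(unit) FROM measurements GROUP BY station ORDER BY station"
).fetchall()
print(rows)
```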

Snowflake's data loading capabilities, combined with open-source tools and custom scripts, let users handle large and complex datasets. Optimization plays a central role in reducing compute, network, and warehouse costs; useful techniques include parallel processing, efficient reading of the Postgres write-ahead log (WAL), data compression, and incremental batch loading. Snowflake Marketplace adds access to a wide range of datasets, which makes it possible to build realistic, complex data models without exposing customer data. Understanding and combining these tools helps users optimize query performance and carry out data transformations and schema changes precisely and efficiently.

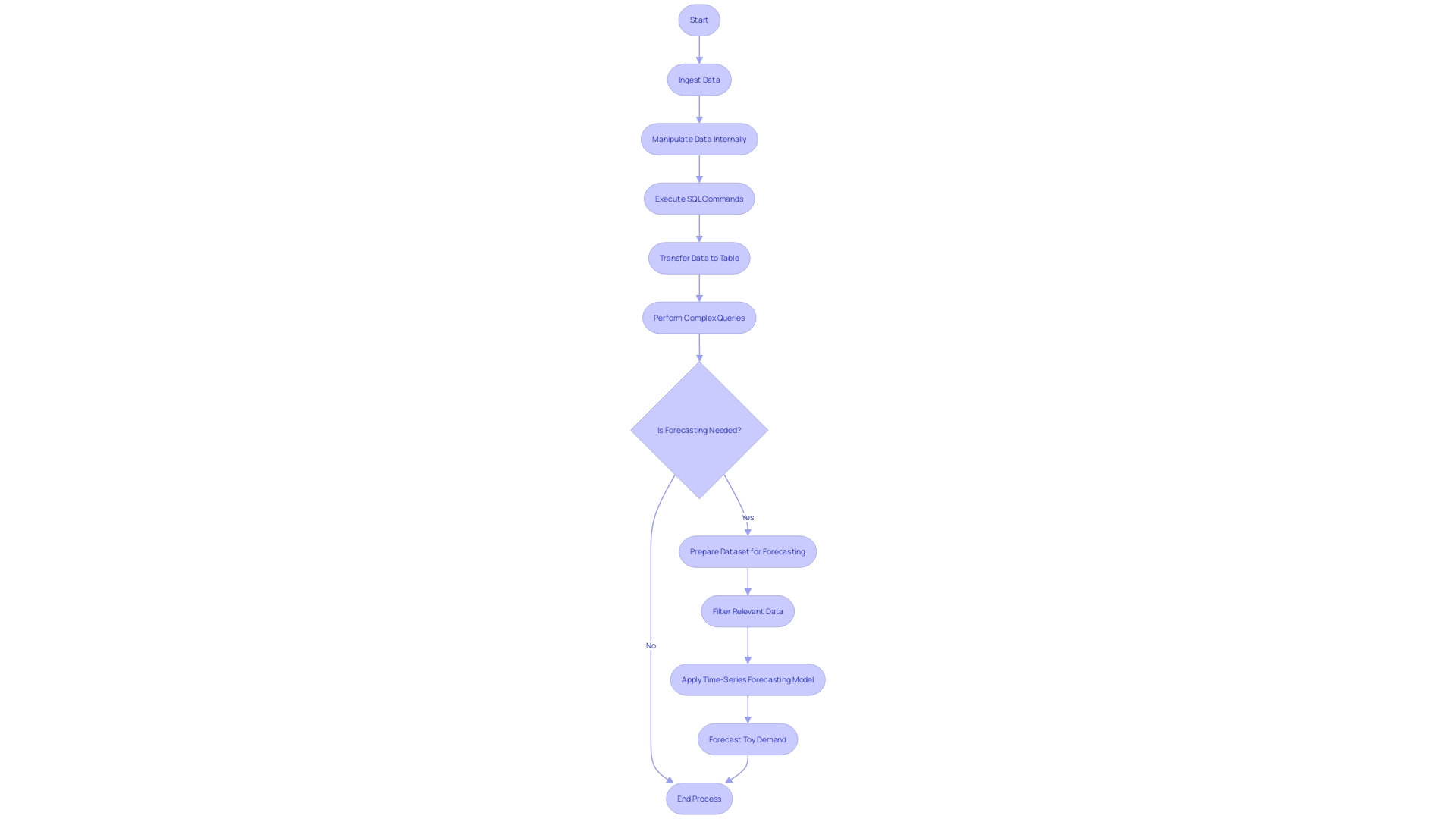

Sample Data Sets and Querying

Mastering the art of querying in Snowflake requires practical experience, and there's no better way to get started than by diving into real-world data scenarios. Explore the process of ingesting and manipulating data within Snowflake through the internal stage approach, which begins by uploading your dataset into an internal stage. Once the data is in place, you'll have the opportunity to execute SQL commands to transfer the data into a table for analysis. This hands-on approach is exemplified by the workflow that transitions measurement files into a table, setting the stage for complex query operations to generate valuable outputs.

Consider a case where forecasting is crucial, such as predicting future toy demand based on historical keyword searches. This task involves preparing a dataset with specific columns like timestamps and search frequencies. By creating a view that filters data relevant to 'toys,' you can apply a time-series forecasting model to anticipate future trends. Such exercises will deepen your understanding of Snowflake's capacity for data analysis and predictive modeling.
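A minimal sketch of the toy-forecasting exercise, assuming a hypothetical keyword_searches table with search_date, keyword, and search_count columns. The SQL string shows the kind of filtering view described above; the Python function applies the same filter locally, and a least-squares linear trend stands in for a real time-series model. It is not Snowflake's built-in forecasting, and seasonal demand such as holiday toy sales would need a model that captures seasonality.

```python
from datetime import date

# Hypothetical view definition of the kind described above (not executed here).
TOY_VIEW_SQL = """
CREATE OR REPLACE VIEW toy_searches AS
SELECT search_date, search_count
FROM keyword_searches
WHERE keyword = 'toys'
"""


def toy_series(rows):
    """Local equivalent of the view: keep 'toys' rows, ordered by date."""
    return [count for day, keyword, count in sorted(rows) if keyword == "toys"]


def linear_forecast(values, horizon):
    """Fit y = a + b*t by least squares (needs >= 2 points) and extrapolate."""
    n = len(values)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return [intercept + slope * (n + h) for h in range(horizon)]


rows = [
    (date(2024, 1, 1), "toys", 100),
    (date(2024, 1, 1), "games", 40),
    (date(2024, 1, 2), "toys", 110),
    (date(2024, 1, 3), "toys", 120),
    (date(2024, 1, 4), "toys", 130),
]
series = toy_series(rows)
forecast = linear_forecast(series, horizon=2)
print(series, "->", forecast)
```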

Snowflake's querying environment also handles a very large volume of work, some 4 billion queries a day, so you'll encounter a wide variety of use cases, from routine reporting to giving AI systems SQL access to enterprise data. That range shows Snowflake's versatility and scalability.

Through these practical examples, you'll not only become proficient in Snowflake's query language but also gain insights into how Snowflake can be leveraged for sophisticated data management and analysis tasks. Whether you're dealing with structured or unstructured data, Snowflake provides a powerful platform for unlocking the full potential of your data assets.

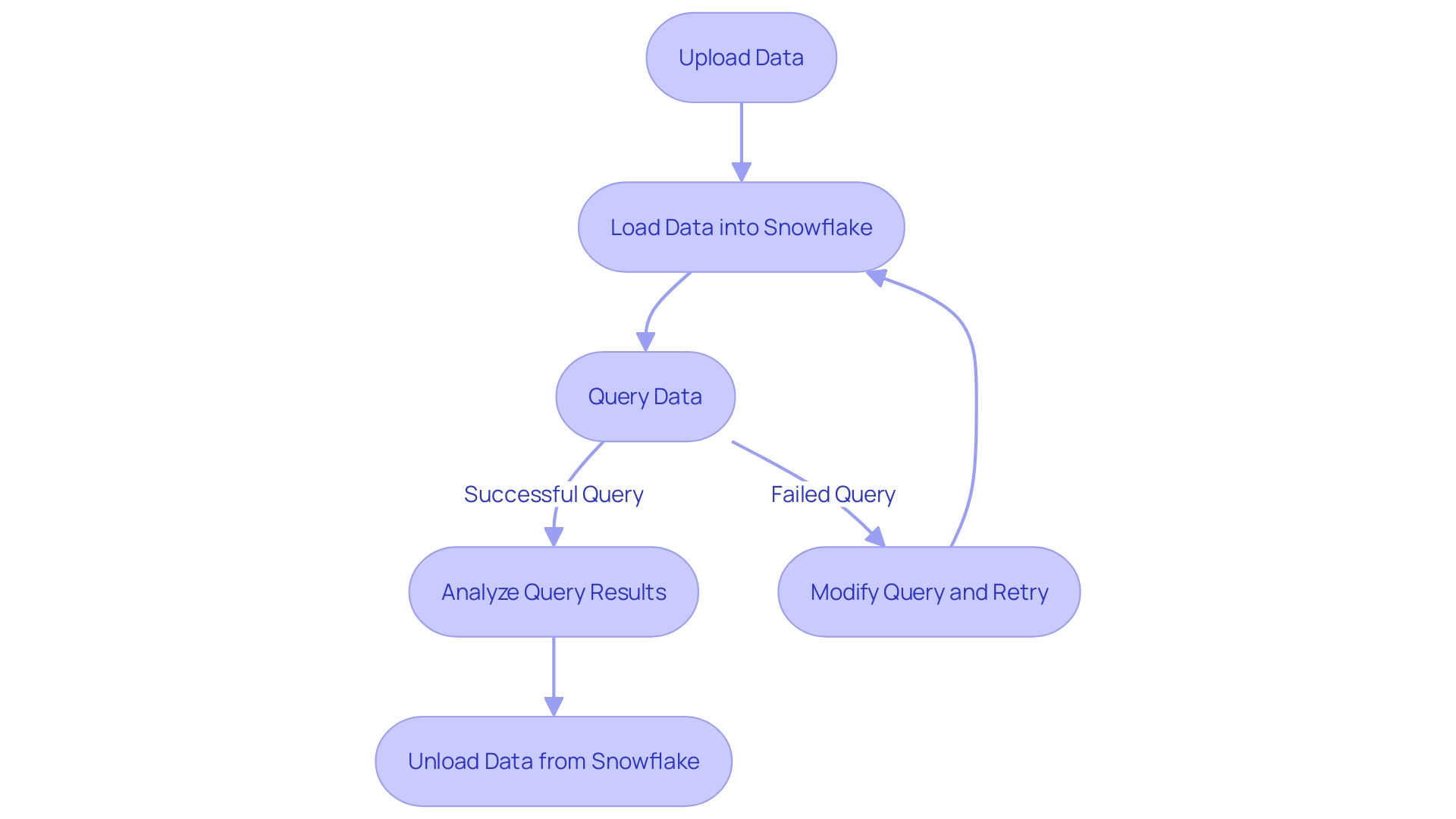

Data Loading and Unloading

Efficient data handling is fundamental to getting the most from Snowflake's data warehousing capabilities, and data can be loaded in several ways depending on scale and latency needs. Bulk loading with COPY INTO suits large volumes of files; continuous loading with Snowpipe (or Snowpipe Streaming for row-level streams) suits steady, near-real-time input; and external tables let you query data where it sits in cloud storage without moving it into Snowflake.
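The three loading paths can be compared through the statements that set them up. This Python sketch returns one illustrative statement per method; the table and stage names are hypothetical. The pipe and external-table variants assume an external stage on cloud storage (AUTO_INGEST also needs event notifications configured), and Snowpipe Streaming, which is driven by a client SDK rather than SQL, is not shown.

```python
def load_sql(method: str, table: str, stage: str) -> str:
    """Return an illustrative statement for each loading method named in the text."""
    if method == "bulk":
        # One-off or scheduled batch load of files already in a stage.
        return f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1)"
    if method == "continuous":
        # Snowpipe: runs the COPY as new files land (assumes an external stage
        # with cloud event notifications configured).
        return (f"CREATE PIPE {table}_pipe AUTO_INGEST = TRUE AS "
                f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1)")
    if method == "external":
        # Query files in place on cloud storage; LOCATION must be an external stage.
        return (f"CREATE EXTERNAL TABLE {table}_ext WITH LOCATION = @{stage} "
                "FILE_FORMAT = (TYPE = PARQUET)")
    raise ValueError(f"unknown method: {method}")


for m in ("bulk", "continuous", "external"):
    print(load_sql(m, "events", "events_stage") + ";")
```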

With an internal stage, for example, data is first uploaded and then copied into a table for querying, a sequence often drawn as a flow diagram from upload to query output. In practice, modern data architectures also need to integrate data lakes with warehouses. Data lakes hold raw and semi-processed data, often organized with open table formats such as Apache Hudi, Delta Lake, or Apache Iceberg, while data warehouses store processed data ready for analysis. Keeping the two synchronized, especially with large datasets, requires an efficient process that keeps both sides up to date.

Unloading moves data in the opposite direction: from Snowflake tables to files in a stage or in external storage. It must be handled with care, because exported data may fall under privacy regulations and your organization's data-handling policies. Whether loading or unloading, Snowflake provides options for handling data securely, efficiently, and in line with an organization's data management strategy.
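Unloading can respect privacy requirements by exporting only the columns that are safe to share. This Python sketch builds a COPY INTO statement that writes query results to a stage as Parquet, followed by a GET that downloads them; the table, column, stage, and path names are hypothetical, and which columns count as sensitive is an assumption you would take from your own policies.

```python
def unload_sql(table: str, columns: list[str], sensitive: set[str],
               stage: str, prefix: str) -> list[str]:
    """Build statements that export a table to an internal stage and download it,
    leaving out columns flagged as sensitive."""
    exported = [c for c in columns if c not in sensitive]
    if not exported:
        raise ValueError("nothing left to export")
    select = f"SELECT {', '.join(exported)} FROM {table}"
    return [
        # Write query results to the stage as Parquet files under the given prefix.
        f"COPY INTO @{stage}/{prefix}/ FROM ({select}) FILE_FORMAT = (TYPE = PARQUET) HEADER = TRUE",
        # Download the unloaded files (GET runs from SnowSQL or a driver).
        f"GET @{stage}/{prefix}/ file:///tmp/{prefix}/",
    ]


for stmt in unload_sql("orders", ["order_id", "customer_email", "amount", "order_date"],
                       {"customer_email"}, "export_stage", "orders_2024"):
    print(stmt + ";")
```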

Zero Copy Cloning and Data Management

Snowflake's Zero Copy Cloning quickly creates lightweight copies of tables, schemas, or databases. A clone initially shares the original's underlying storage instead of duplicating it, and new storage is consumed only for data that later changes in either the clone or the source. This saves space and keeps storage costs from growing with every copy, which matters for businesses where data storage and query processing make up a significant part of the operational budget.

Zero Copy Cloning operates by referencing the original data stored in Snowflake, rather than creating redundant copies, which streamlines the process of data analysis. For instance, Skai's omnichannel advertising platform leveraged this feature to enhance their system's performance. Despite an influx of data and additional features straining their UI, Zero Copy Cloning enabled them to manage their resources more effectively, avoiding the frustration of a slowing system.
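The referencing idea can be modeled as copy-on-write. Snowflake stores table data in immutable micro-partitions, and a clone starts as a metadata copy pointing at the same partitions; changes to either object write new partitions. The Python sketch below is a toy model of that behavior, not Snowflake's implementation. The corresponding Snowflake statement would be along the lines of CREATE TABLE orders_dev CLONE orders, with hypothetical table names.

```python
class Table:
    """Toy model of a table as a list of references to immutable storage partitions."""

    def __init__(self, storage: dict, partition_ids: list[int]):
        self.storage = storage                  # shared: partition_id -> rows
        self.partition_ids = list(partition_ids)

    def clone(self) -> "Table":
        # Like CREATE TABLE ... CLONE: copy the references, not the data.
        return Table(self.storage, self.partition_ids)

    def update_partition(self, index: int, new_rows: list) -> None:
        # Partitions are immutable, so a change writes a new partition and repoints
        # only this table; the other table keeps the original partition.
        new_id = max(self.storage) + 1
        self.storage[new_id] = new_rows
        self.partition_ids[index] = new_id


storage = {0: ["a", "b"], 1: ["c", "d"]}
prod = Table(storage, [0, 1])
dev = prod.clone()
print("after clone:", len(storage), "partitions stored")   # 2: nothing copied
dev.update_partition(1, ["c", "D"])
print("after update:", len(storage), "partitions stored")  # 3: only the changed one
```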

Cloning also contrasts with traditional ETL (extract-transform-load) processes, which are often used to duplicate data for development or testing and carry high error risks and additional costs. Because an entire database or schema can be cloned without physically moving data, teams can create such copies directly in Snowflake.

Cloning is especially useful before complex, resource-intensive, or time-sensitive operations. A migration or heavy transformation can be run against a clone first; if it fails, the clone is dropped and production data is untouched, which keeps the migration consistent and makes error recovery manageable. Because nothing is copied up front, cloning also avoids the compute, network, and storage cost of duplicating data, a cost-effective approach for organizations managing large datasets.

As the data platform market evolves, with significant players like Databricks and Microsoft emphasizing the importance of efficient data management, Snowflake's Zero Copy Cloning remains a critical asset. It exemplifies Snowflake's commitment to providing a flexible, high-performance data warehouse that adapts to the changing needs of businesses and the industry at large.

Key Features and Benefits of Snowflake

Snowflake offers a suite of capabilities suited to modern data-driven enterprises. Its architecture scales with data growth, and because different workloads can run on separate virtual warehouses, many users can run diverse queries concurrently without competing for the same compute. Snowflake also reduces manual tuning: data is divided into micro-partitions automatically, there are no indexes to maintain, and multi-cluster warehouses (Enterprise Edition and above) can add or remove clusters as concurrency changes, lowering operational overhead.

Moreover, Snowflake's native support for semi-structured data, such as JSON, Avro, XML, and Parquet, allows organizations to ingest and analyze a wide array of data without the need for complex transformations. This is critical as businesses increasingly rely on various data formats to gain insights and drive decision-making.
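Semi-structured data in Snowflake is typically stored in a VARIANT column and unnested with LATERAL FLATTEN. The comment below shows such a query against a hypothetical orders table with a VARIANT column named raw; the Python function reproduces the same one-row-per-array-element result locally, so the shape of the output can be checked without an account.

```python
import json

# Snowflake SQL this mirrors (hypothetical table `orders`, VARIANT column `raw`):
#   SELECT raw:customer.name::STRING AS customer,
#          item.value:sku::STRING    AS sku,
#          item.value:qty::NUMBER    AS qty
#   FROM orders, LATERAL FLATTEN(input => raw:items) item;


def flatten_items(documents: list[str]) -> list[tuple[str, str, int]]:
    """Emit one row per element of each document's `items` array."""
    rows = []
    for doc in documents:
        record = json.loads(doc)
        for item in record.get("items", []):  # documents without items yield no rows
            rows.append((record["customer"]["name"], item["sku"], item["qty"]))
    return rows


docs = [
    '{"customer": {"name": "Ada"}, "items": [{"sku": "T-1", "qty": 2}, {"sku": "T-7", "qty": 1}]}',
    '{"customer": {"name": "Lin"}, "items": [{"sku": "T-1", "qty": 5}]}',
]
for row in flatten_items(docs):
    print(row)
```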

In practice, the Internal Stage Approach demonstrates Snowflake's efficiency in data handling. After cloning a repository and generating a dataset, the data is uploaded into an internal stage and then copied into a table for querying. This streamlined process exemplifies Snowflake's ease of use in managing data workflows.

Furthermore, Snowflake's robust approach to access control combines discretionary and role-based access control models, enhancing data security. It ensures that only authorized users and roles have access to sensitive data, which is paramount in today's environment where data privacy is highly scrutinized.

In real-world applications, companies like Lex Machina, which processes extensive legal records, have turned to Snowflake to overcome challenges presented by managing large database clusters. The switch to Snowflake has allowed them to efficiently manage their databases and focus on building their product rather than spending time on database scripting operations.

As organizations seek to implement hybrid search strategies, combining semantic and traditional search, Snowflake provides valuable options. This adaptability to contemporary search methodologies further establishes its position as a comprehensive solution for data warehousing needs.

Performance and Query Optimization

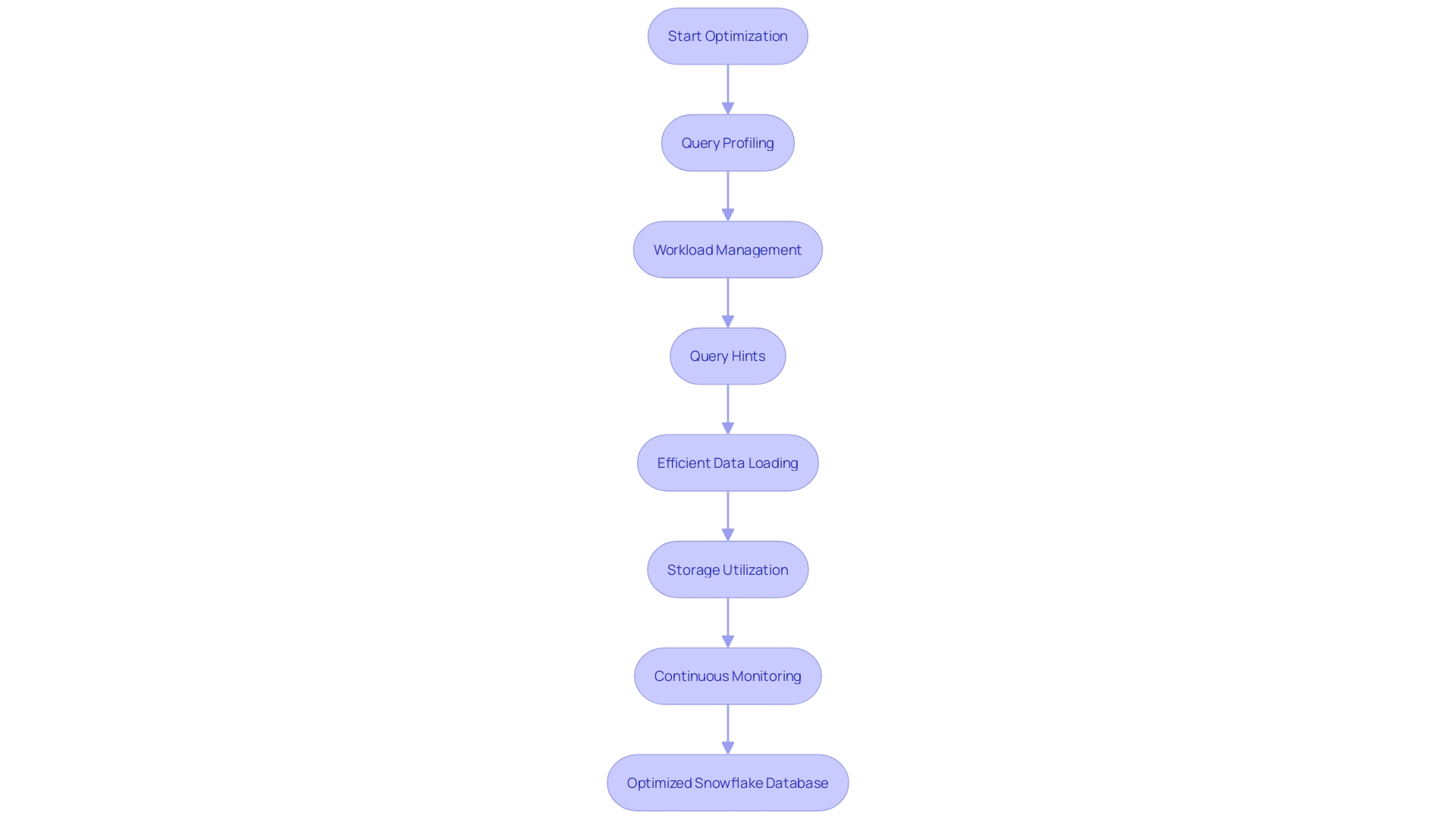

Snowflake Database, with its innovative architecture, provides numerous features to enhance query performance and data management. To optimize performance in Snowflake, it's vital to employ techniques such as query profiling and workload management effectively. Query profiling involves analyzing execution plans and refining queries based on the data's specific characteristics, ensuring a streamlined execution path for each query.

Unlike some databases, Snowflake does not support optimizer hints, so queries cannot be steered that way. Instead, plans can be improved by rewriting queries, filtering as early as possible so that Snowflake can prune micro-partitions, and defining clustering keys on very large tables, all of which can reduce resource use and improve response times.

Workload management is another critical aspect, especially with large datasets. For instance, joining large tables once and materializing the result in a table can save considerable time and compute, because later queries read the precomputed result instead of re-running the complex join. Repeated reads also benefit from caching: Snowflake can return results for an identical query from its result cache when the underlying data has not changed, and warehouses cache recently read data locally.
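The pre-staging pattern can be sketched with CREATE TABLE ... AS SELECT, which Snowflake also supports. Snowflake materialized views cannot contain joins, so a table built this way (and refreshed when the sources change) is one option. SQLite stands in for Snowflake so the example runs locally; the tables and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER, product_id INTEGER, qty INTEGER);
CREATE TABLE products (product_id INTEGER, category TEXT);
INSERT INTO orders VALUES (1, 10, 2), (2, 11, 1), (3, 10, 4);
INSERT INTO products VALUES (10, 'toys'), (11, 'books');

-- Pay for the join and aggregation once...
CREATE TABLE qty_by_category AS
SELECT p.category, SUM(o.qty) AS total_qty
FROM orders o JOIN products p ON o.product_id = p.product_id
GROUP BY p.category;
""")

# ...then serve repeated reads from the small precomputed table.
rows = conn.execute("SELECT category, total_qty FROM qty_by_category ORDER BY category").fetchall()
print(rows)
```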

Efficient data loading and storage utilization are also paramount. By using Snowflake's internal staging approach, data can be securely uploaded and copied into tables. This method not only streamlines data movement but ensures that the data is readily available for querying, minimizing delays in data processing.

Compute cost is a concrete example of Snowflake's performance and cost controls. Warehouses consume credits only while running, and settings such as auto-suspend and auto-resume, combined with monitoring of warehouse usage, let organizations minimize idle time and reduce compute costs, which can make up a substantial share of total spend.
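Because Snowflake bills warehouses per second (with a 60-second minimum each time a warehouse resumes) at a credit rate that doubles with each size step, idle time before auto-suspend adds up. The Python sketch below estimates credits for repeated short bursts of work under two AUTO_SUSPEND settings. The rates are those for standard warehouses; the workload figures are hypothetical, and the model assumes the warehouse stays idle for the full auto-suspend interval after each burst.

```python
# Credits per hour for standard warehouses, by size.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def burst_credits(size: str, active_s: int, auto_suspend_s: int, bursts: int) -> float:
    """Estimate credits for `bursts` separate bursts of work.

    Simplifying assumptions: the warehouse resumes for each burst, runs for
    `active_s` seconds, then sits idle for the full `auto_suspend_s` before
    suspending. Billing is per second with a 60-second minimum per resume.
    """
    billed_s = max(active_s + auto_suspend_s, 60)
    return CREDITS_PER_HOUR[size] * billed_s * bursts / 3600


for suspend in (600, 60):
    print(f"AUTO_SUSPEND={suspend}s:", burst_credits("MEDIUM", 120, suspend, bursts=10), "credits")
```

In this hypothetical workload, most of the spend under the longer timeout is idle time, which is why short auto-suspend intervals suit bursty workloads.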

Continuous monitoring and iterative refinement are essential for maintaining optimal performance. As one expert puts it, "Efficient SQL query optimization is pivotal for ensuring high-performing database systems. Implementing these techniques demands a deep understanding of database structure, query execution plans, and the specific characteristics of the data being handled." By adopting such strategies, Snowflake users can achieve a responsive and scalable database environment tailored to their specific needs.

Security and Regulatory Compliance

Snowflake provides robust security measures to safeguard sensitive data, including encryption, strict access controls, and auditing capabilities. Its security framework is designed to preserve data confidentiality and integrity at a time when data breaches are common and costly: according to IBM Security's Cost of a Data Breach Report 2023, conducted by the Ponemon Institute, the average cost of a breach reached a record USD 4.45 million. Snowflake's access control, which combines Discretionary Access Control (DAC) and Role-based Access Control (RBAC), is central here. Privileges on data objects are granted only to specific roles, and roles only to authorized users, which minimizes the risk of unauthorized access.

Global compliance is another critical factor for companies operating across borders. Snowflake helps organizations meet diverse regulatory requirements such as GDPR and HIPAA, covering data privacy laws from different regions. This is essential for cloud-first SaaS companies like Skyflow, which must navigate global privacy regulations that dictate specific data handling and storage practices. With Snowflake, companies can pursue their expansion strategies knowing their data warehouse supports compliance with the complex body of international data protection law.

Recent incidents in the news, such as unauthorized access to a former Snowflake employee's demo account, show why these measures matter. The account was not protected by multi-factor authentication; according to Snowflake, it contained no sensitive data. Incidents like this reinforce the importance of multiple layers of security, including encryption and strong authentication, to protect against potential threats.
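Checks like these can be automated. The Python sketch below flags users who can sign in with a password but lack a stronger factor: service users with any password, and human users without MFA. The UserAuth fields and user names are hypothetical; in practice you would populate them from your account's user metadata.

```python
from dataclasses import dataclass


@dataclass
class UserAuth:
    """Hypothetical per-user authentication summary (fields are illustrative)."""
    name: str
    is_service: bool
    has_password: bool
    has_mfa: bool


def weak_auth_users(users: list[UserAuth]) -> list[str]:
    """Flag users who can sign in with a password but lack a stronger factor."""
    flagged = []
    for u in users:
        if u.is_service and u.has_password:
            flagged.append(u.name)  # service users should use key pairs or OAuth instead
        elif not u.is_service and u.has_password and not u.has_mfa:
            flagged.append(u.name)  # human users with password-only sign-in
    return flagged


users = [
    UserAuth("alice", is_service=False, has_password=True, has_mfa=True),
    UserAuth("legacy_etl", is_service=True, has_password=True, has_mfa=False),
    UserAuth("etl_service", is_service=True, has_password=False, has_mfa=False),
    UserAuth("bob", is_service=False, has_password=True, has_mfa=False),
]
print(weak_auth_users(users))
```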

Advanced Features and Use Cases

Snowflake's feature set addresses complex data warehousing needs with capabilities such as data sharing, materialized views, and Time Travel, which lets you query or restore data as it existed at an earlier point within a retention period. The platform also supports newer patterns such as hybrid search, which combines keyword search, precise for exact terms, with semantic search, which finds results that match a query's meaning even without shared words. This approach supports complex search scenarios while keeping performance and cost under control.
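One common way to merge keyword and semantic result lists is reciprocal rank fusion (RRF), which scores each document by summing 1 / (k + rank) over the lists it appears in. The Python sketch below is a generic illustration of that technique, not a description of how any Snowflake feature combines results; k = 60 is a commonly used default, and the document IDs are hypothetical.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into one ranking."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["d1", "d2", "d3"]   # e.g. exact-term matches
semantic_hits = ["d3", "d1", "d4"]  # e.g. nearest neighbours by embedding
print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
```

Documents that rank well in both lists, such as d1 here, rise to the top, while a document found by only one method still appears further down.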

Predictive analysis of market demand is another case in point, such as forecasting toy demand from historical keyword searches. Using Snowflake's processing capabilities and SQL query engine, users can build forecasts from time-series data, giving businesses insight that helps them stay ahead of market trends.

Snowflake's flexible architecture, including an extensive set of APIs for customization, supports the development of tailored data applications. As a fully managed service, the platform simplifies building and running enterprise-level data solutions. Its internal staging and data storage practices are designed to balance storage costs against query processing needs. Together, these features make Snowflake a scalable, user-centric data warehousing solution that adapts to a wide range of business requirements.

Conclusion

Snowflake's architecture revolutionizes data warehousing with unmatched scalability and elasticity. Its decoupled storage and compute layers allow for independent management of resources. The internal staging area ensures data integrity and consistent performance.

Snowflake's substantial growth reflects the increasing demand for flexible and efficient data warehouse solutions.

Getting started with Snowflake involves a step-by-step setup process and efficient data loading techniques. Robust security measures safeguard data privacy. Snowflake's interfaces, Snowsight and SnowSQL, cater to various user preferences.

Navigating Snowflake's database architecture requires understanding databases, schemas, and tables. Organized folder structures and data modeling techniques improve efficiency, and worksheets provide a place to run queries and DML and DDL statements.

Mastering querying in Snowflake involves practical experience with real-world data scenarios. Snowflake provides a powerful platform for unlocking the full potential of structured and unstructured data assets.

Efficient data loading and unloading methods cater to different data management needs. Snowflake's internal staging approach ensures secure data upload. Zero Copy Cloning enables lightweight data clones without duplicating storage, reducing costs.

Snowflake's key features, such as scalability, concurrency, and native support for semi-structured data, make it a powerful solution for modern enterprises. Robust security measures and global compliance support data confidentiality and integrity. Advanced features like data sharing and materialized views enhance data strategies.

In conclusion, Snowflake's architecture and capabilities make it a leading choice for data warehousing. Its features and adaptability support efficient data management and analysis, and it reflects a fundamental transformation in the data industry, where real-time access to data is essential for businesses to remain competitive.