Introduction

Kubernetes Services give cloud-native applications a stable way to reach Pods that are constantly being created, rescheduled, and replaced. That stability is what makes communication between the components of a microservices architecture reliable.

This article covers what a Service is, what it is for, and the main Service types, along with their role in load balancing and service discovery. It also covers best practices and troubleshooting strategies that help teams keep Services secure and resource-efficient.

As Kubernetes adoption grows across industries, these concepts are worth mastering for any organization that runs workloads on it.

Understanding Kubernetes Services: Definition and Purpose

In Kubernetes, a Service is an abstraction that defines a logical set of Pods and a policy for accessing them. It provides a stable endpoint for a group of Pods whose membership changes as the application scales or is updated. The purpose of a Service is to keep communication between the components of a system uninterrupted even as the underlying Pods are replaced.

This is particularly important in microservices architectures, where components are deployed and updated independently. According to SlashData’s report, Kubernetes has a user base of over 5.6 million developers, representing 31% of all backend developers, which underscores both its widespread adoption and the importance of managing Services well. Tools such as Fairwinds Insights additionally help platform engineers rightsize resource allocations so that compute resources are used efficiently.

Security matters just as much. As cyberattacks grow more complex, continued investment in security platforms reflects the need for strong security measures in container deployments, especially as recent reports reveal that 28% of organizations have over 90% of workloads running with insecure capabilities. As Kubernetes and its abstractions continued to mature into 2024, Services remained an essential building block of cloud-native applications.
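To make the definition above concrete, here is a minimal Python sketch of how a Service's label selector picks out the Pods it fronts. The Pod names, labels, and helper functions are hypothetical and exist only for illustration; in a real cluster the control plane performs this matching and keeps the Service's endpoints up to date.

```python
from typing import Dict, List


def service_manifest(name: str, selector: Dict[str, str],
                     port: int, target_port: int) -> dict:
    """Build a minimal Service manifest (the type defaults to ClusterIP)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": selector,
            "ports": [{"protocol": "TCP", "port": port,
                       "targetPort": target_port}],
        },
    }


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A Pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(service: dict, pods: List[dict]) -> List[str]:
    """Return the names of the Pods this Service would route to."""
    selector = service["spec"]["selector"]
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


if __name__ == "__main__":
    # Hypothetical Pods; names and labels are assumptions for this example.
    pods = [
        {"name": "web-1", "labels": {"app": "web", "version": "v1"}},
        {"name": "db-1", "labels": {"app": "db"}},
        {"name": "web-2", "labels": {"app": "web", "version": "v2"}},
    ]
    web = service_manifest("web", {"app": "web"}, port=80, target_port=8080)
    print(select_pods(web, pods))
```

Because the selector matches on labels rather than on Pod names or IPs, Pods can come and go without clients of the Service noticing.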

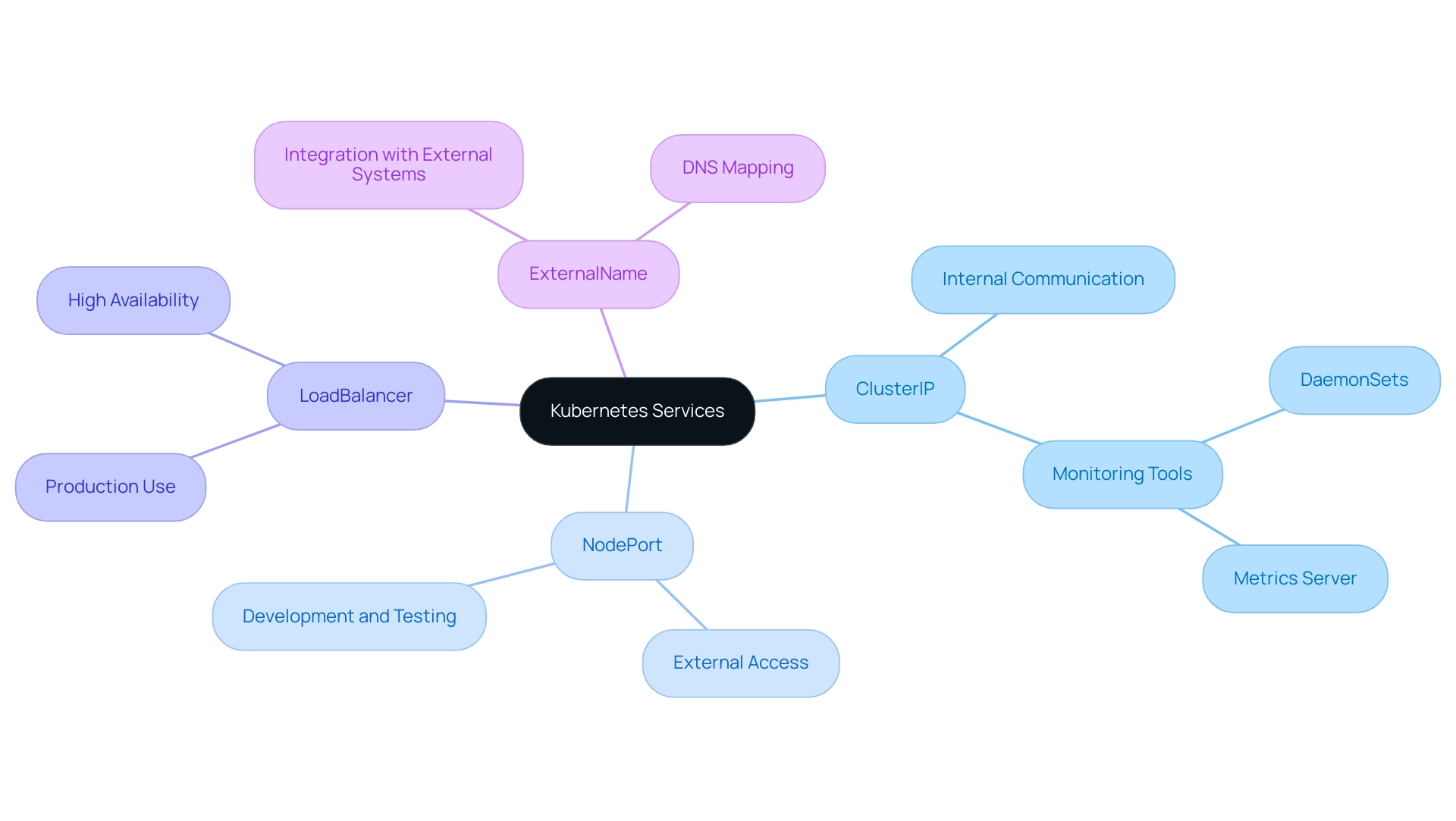

Exploring Different Types of Kubernetes Services: ClusterIP, NodePort, LoadBalancer, and More

Kubernetes offers several Service types, each tailored to a specific operational need. Knowing when to use each one is crucial for effective resource management and deployment strategies.

- ClusterIP: This is the default type. It exposes the Service on a cluster-internal IP that is reachable only from within the cluster. It is well suited to internal communication because workloads can talk to each other without being exposed to external traffic. Monitoring the health and performance of these workloads is essential, and Kubernetes metrics can be collected with node-level agents deployed as a DaemonSet or with the Metrics Server.

- NodePort: This type exposes the Service on each Node's IP at a static port. External traffic can then reach the application at any Node's IP and that port, which makes NodePort convenient for development and testing environments where quick access matters.

- LoadBalancer: Designed for production scenarios, this type provisions an external load balancer (typically from the cloud provider) that exposes the Service externally and gives clients a single, stable IP address. It helps manage incoming traffic and maintain availability during peak loads.

CloudBees' collaboration in this area demonstrates its confidence in the technology's potential and its essential role in container orchestration going forward.

- ExternalName: This type maps a Service to the DNS name given in its externalName field, so the cluster's DNS returns a CNAME record for that name instead of routing to Pods. Applications in the cluster can then reach external systems through an ordinary Service name.
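The four types above differ mainly in a few fields of the Service spec. The following Python sketch builds one manifest per type and prints it as JSON. The application names, ports, and external hostname are assumptions made for the example, and the node port check uses the default 30000-32767 range, which a cluster administrator can change.

```python
import json
from typing import Dict, Optional

SERVICE_TYPES = ("ClusterIP", "NodePort", "LoadBalancer", "ExternalName")


def build_service(name: str, service_type: str = "ClusterIP", *,
                  selector: Optional[Dict[str, str]] = None,
                  port: int = 80, target_port: int = 8080,
                  node_port: Optional[int] = None,
                  external_name: Optional[str] = None) -> dict:
    """Return a Service manifest for one of the four Service types."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unknown Service type: {service_type}")

    spec: dict = {"type": service_type}
    if service_type == "ExternalName":
        # ExternalName maps the Service to a DNS name; it has no selector.
        if not external_name:
            raise ValueError("ExternalName Services need an external DNS name")
        spec["externalName"] = external_name
    else:
        spec["selector"] = selector or {"app": name}
        port_entry = {"protocol": "TCP", "port": port,
                      "targetPort": target_port}
        if node_port is not None:
            # This sketch only sets an explicit nodePort on NodePort Services.
            if service_type != "NodePort":
                raise ValueError("node_port is only used with type NodePort here")
            if not 30000 <= node_port <= 32767:
                raise ValueError("node_port outside the default 30000-32767 range")
            port_entry["nodePort"] = node_port
        spec["ports"] = [port_entry]

    return {"apiVersion": "v1", "kind": "Service",
            "metadata": {"name": name}, "spec": spec}


if __name__ == "__main__":
    # Hypothetical applications and hostnames, for illustration only.
    examples = [
        build_service("orders"),                              # ClusterIP
        build_service("web-dev", "NodePort", node_port=30080),
        build_service("web", "LoadBalancer"),
        build_service("billing-db", "ExternalName",
                      external_name="db.example.com"),
    ]
    for manifest in examples:
        print(json.dumps(manifest, indent=2))
```

Each printed document could be saved to a file and applied with kubectl, which accepts JSON manifests as well as YAML.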

As of 2024, Kubernetes adoption continues to expand, with Brazil reporting 1,573 users. This reflects a wider trend of growing adoption that organizations should weigh when deciding on their operational strategy and which Service types to use. The rise of DevSecOps likewise highlights how much cooperation among development, operations, and security teams matters when managing Kubernetes.

Understanding how to manage Services, and how team collaboration affects that work, helps organizations make informed choices that align with their operational goals.

Using Kubernetes Services to Expose Applications and Manage Service Discovery

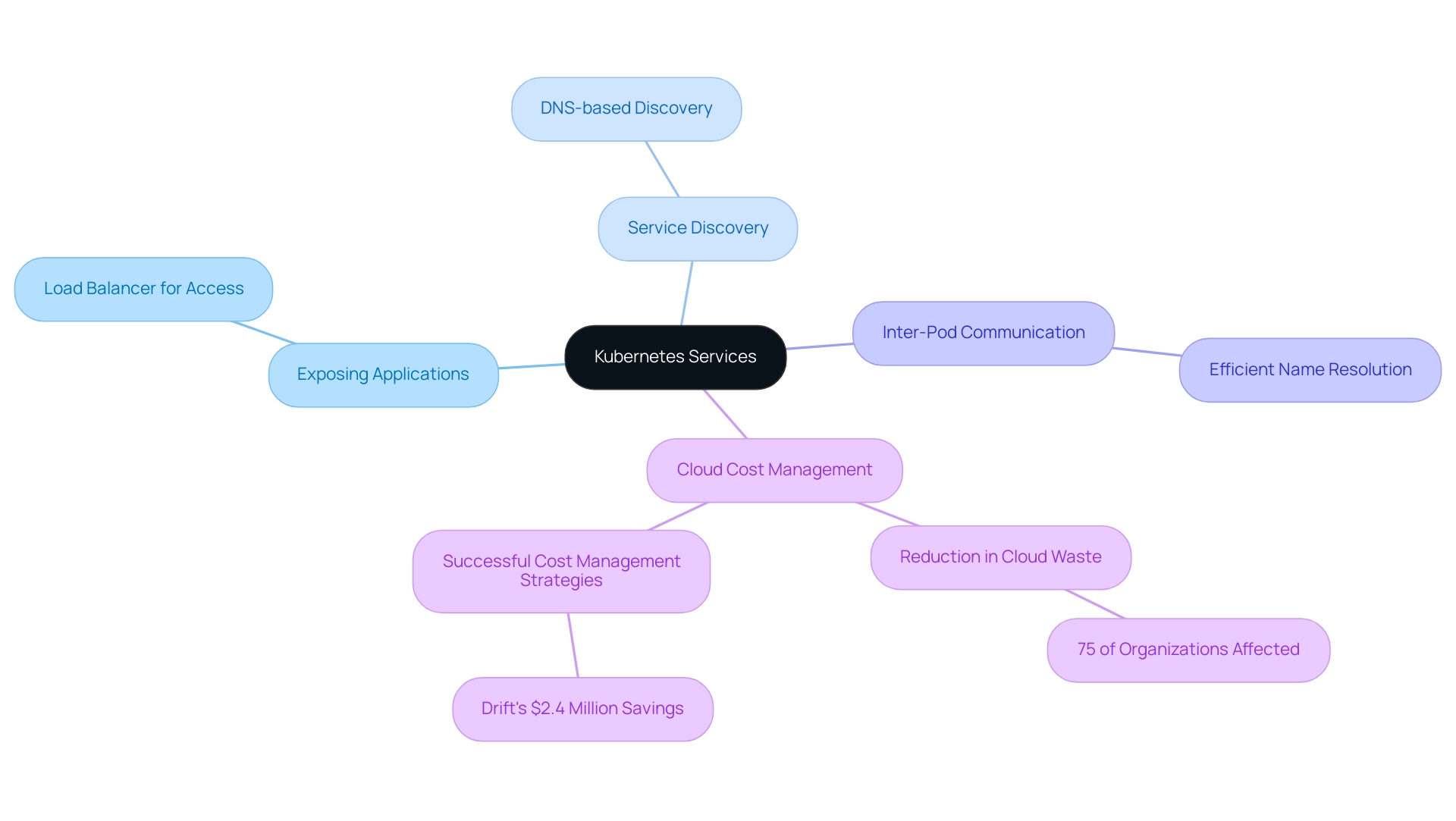

Services are how Kubernetes exposes applications to external clients and supports service discovery within a cluster. To expose an application, administrators define a Service whose selector targets the Pods running that application. For example, a LoadBalancer Service makes a web application reachable over the internet at a stable IP address.

Kubernetes also simplifies communication between Pods through built-in service discovery. With DNS-based service discovery, Pods resolve a Service name to its IP address instead of tracking individual Pod IPs, which streamlines communication within the cluster. This capability is increasingly relevant as organizations seek to optimize their cloud infrastructure; recent statistics indicate that 75% of organizations are grappling with increased cloud waste.

Furthermore, with Google Cloud's IaaS offering growing by 63.7% from 2020 to 2021 and the overall IaaS market anticipated to expand by over 30%, the significance of Kubernetes in managing cloud resources becomes even more evident. Companies like Drift have shown that effective cloud cost management can yield significant savings: Drift lowered its annual cloud expenses by $2.4 million. The cloud also plays a central role in broader strategy, as noted by Veeam, which found that half of surveyed businesses view the cloud as vital to their data protection frameworks.

The trend of organizations lowering cloud expenses, as documented in several 'Cloud Cost Management Success Stories,' further underlines the value of managing Kubernetes resources deliberately.
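The DNS-based service discovery described earlier in this section can be sketched in a few lines of Python. The name format below follows the usual `<service>.<namespace>.svc.<cluster-domain>` pattern; `cluster.local` is the common default cluster domain, and the `web` Service and `shop` namespace are hypothetical. The lookup only returns addresses when it runs inside a Pod in a cluster where that Service exists.

```python
import socket
from typing import List


def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(hostname: str, port: int) -> List[str]:
    """Return the sorted, unique IPs for a hostname, or [] if it does not resolve."""
    try:
        infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Hypothetical Service "web" in namespace "shop".
    name = service_dns_name("web", namespace="shop")
    print(name)
    print(resolve(name, 80))
```

Within the same namespace a Pod can usually use the short name (here, `web`), because the Pod's DNS search path fills in the rest.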

Ensuring Load Balancing and High Availability with Kubernetes Services

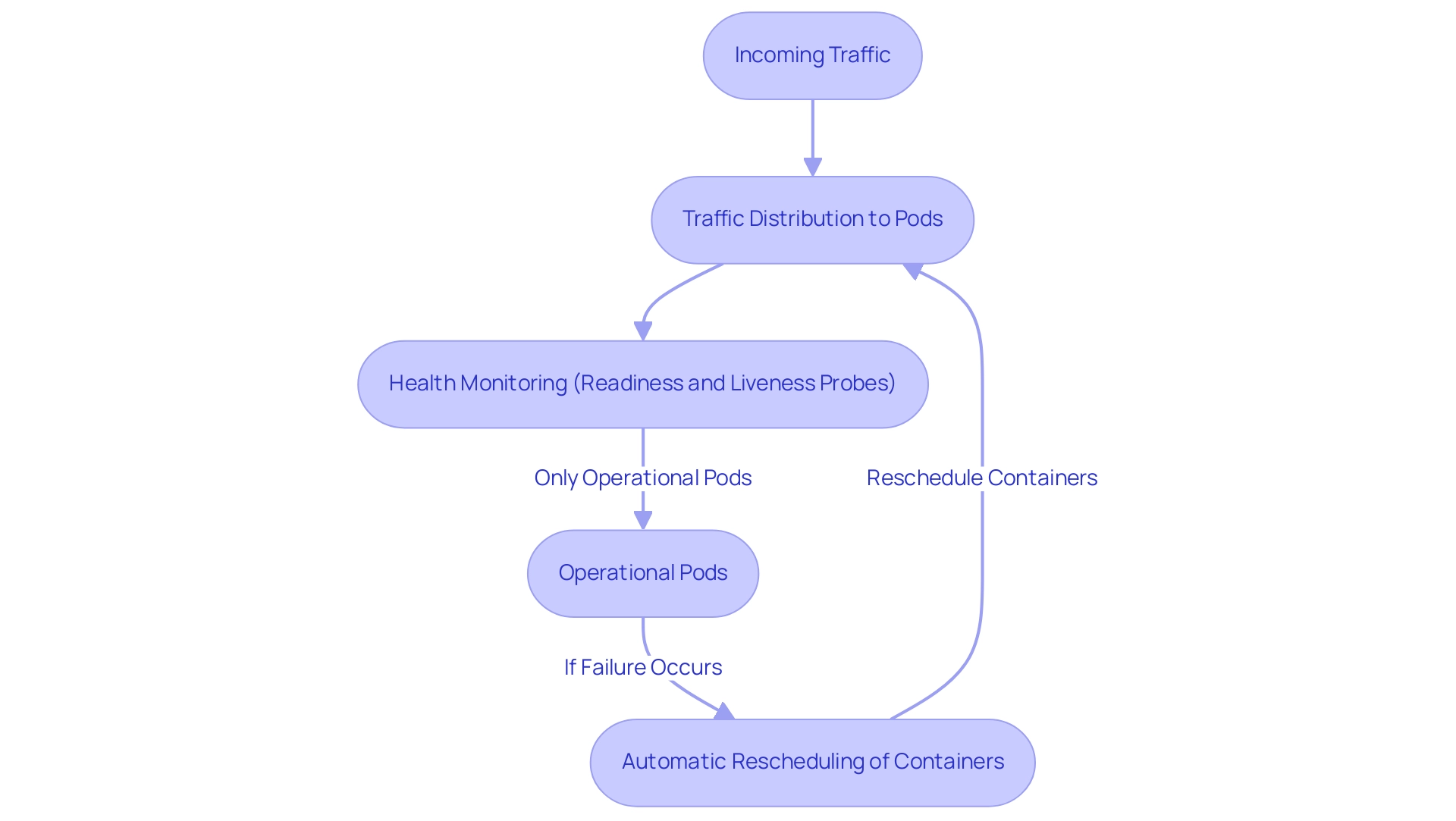

Kubernetes Services provide built-in load balancing by distributing incoming traffic across the Pods that back a Service. This distribution reduces the risk of any single Pod becoming overwhelmed, which improves application performance. For high availability, Kubernetes automatically replaces failed Pods that are managed by a controller such as a Deployment, preserving continuous access.

Readiness and liveness probes play a crucial role in monitoring Pod health. A readiness probe controls whether a Pod receives traffic from a Service, and a liveness probe restarts a container that has stopped responding. Together they ensure that traffic is routed only to operational Pods, which is vital for reliability and performance during peak loads or failure scenarios. A real-world example is an e-commerce platform that used Kubernetes for load balancing during holiday sales.

This use of Kubernetes enabled the platform to achieve high availability, scalability, and efficient resource utilization, handling significant traffic spikes while keeping the user experience uninterrupted. As Avital Tamir, DevOps Lead at Lemonade, states, "Every once in a while there comes a tool that turns what used to be months of labor into a single line of code. Groundcover is a revolution in observability."

This highlights how much the right observability tooling can simplify operating workloads on Kubernetes. Furthermore, in the Kubernetes networking model, agents on a node can communicate with all Pods on that node, which is relevant when monitoring and managing Service performance. Note also that NetworkPolicy rules take effect only if the cluster uses a network plugin that supports NetworkPolicy enforcement.
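The interplay between readiness and load balancing discussed in this section can be illustrated with a small simulation. This is a sketch under stated assumptions, not how kube-proxy is implemented: the Pod names are hypothetical, and plain round-robin stands in for whatever selection strategy the cluster's proxy mode actually uses.

```python
from collections import Counter
from dataclasses import dataclass
from itertools import cycle
from typing import Dict, List


@dataclass
class Pod:
    name: str
    ready: bool  # outcome of the Pod's readiness probe


def ready_endpoints(pods: List[Pod]) -> List[str]:
    """Only Pods that pass their readiness probe are eligible for traffic."""
    return [pod.name for pod in pods if pod.ready]


def distribute(pods: List[Pod], request_count: int) -> Dict[str, int]:
    """Spread requests over ready Pods using round-robin (illustrative only)."""
    endpoints = ready_endpoints(pods)
    if not endpoints:
        raise RuntimeError("no ready endpoints behind this Service")
    counts: Counter = Counter()
    for _, name in zip(range(request_count), cycle(endpoints)):
        counts[name] += 1
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical Pods: web-b is failing its readiness probe.
    pods = [Pod("web-a", True), Pod("web-b", False), Pod("web-c", True)]
    print(distribute(pods, 10))
```

The Pod that fails its readiness probe receives no requests, while the remaining Pods share the load evenly.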

As Kubernetes continues to evolve, its load balancing features are expected to keep improving in 2024, in line with growing demands for high availability and performance in cloud-native applications.

Best Practices and Troubleshooting for Kubernetes Services

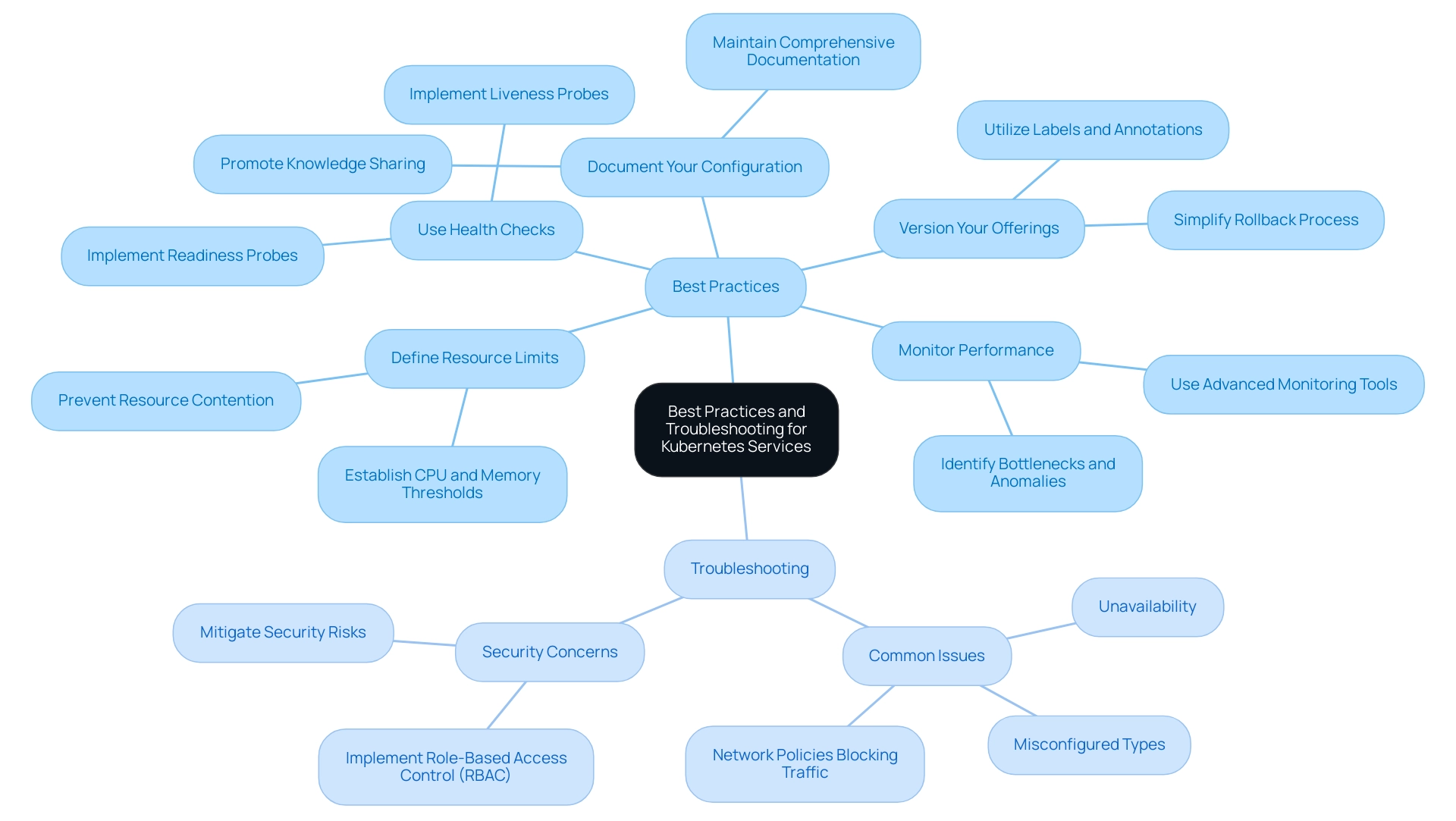

To get the most out of Kubernetes Services, implement the following best practices:

- Define Resource Limits: Establish clear CPU and memory thresholds for containers to prevent resource contention, ensuring that no single application can monopolize cluster resources, which can lead to performance degradation.

- Use Health Checks: Implement readiness and liveness probes to actively monitor Pod health. This practice ensures that traffic is solely directed to Pods that are fully functional, thereby improving reliability and user experience.

- Version Your Services: Use labels and annotations to version your Services and the workloads behind them, which simplifies rollback after a failed deployment. This practice is vital for maintaining stability in a dynamic environment.

- Monitor Performance: Utilize advanced monitoring tools to continuously track performance and swiftly identify bottlenecks or anomalies. An effective monitoring strategy can lead to proactive adjustments, improving overall efficiency.

- Document Your Configuration: Maintain comprehensive documentation of setup configurations. This resource is invaluable for resolving issues and integrating new team members, promoting a culture of knowledge sharing within the organization.
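Several of the practices above (resource limits, health checks, and version labels) end up as fields in a Pod template. The Python sketch below assembles such a template and prints it as JSON. The image name, the `/healthz` path, and all CPU, memory, and timing values are placeholder assumptions that would need tuning for a real workload.

```python
import json


def http_probe(path: str, port: int, *, initial_delay: int, period: int) -> dict:
    """Build an HTTP GET probe definition."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
    }


def pod_template(name: str, image: str, port: int, version: str, *,
                 cpu_request: str = "100m", cpu_limit: str = "500m",
                 memory_request: str = "128Mi", memory_limit: str = "256Mi",
                 health_path: str = "/healthz") -> dict:
    """Return a Pod template with limits, probes, and a version label."""
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Requests guide scheduling; limits cap what the container may use.
        "resources": {
            "requests": {"cpu": cpu_request, "memory": memory_request},
            "limits": {"cpu": cpu_limit, "memory": memory_limit},
        },
        # Readiness gates Service traffic; liveness triggers a container restart.
        "readinessProbe": http_probe(health_path, port,
                                     initial_delay=2, period=5),
        "livenessProbe": http_probe(health_path, port,
                                    initial_delay=10, period=10),
    }
    return {
        # The version label makes it easy to see which release is running.
        "metadata": {"labels": {"app": name, "version": version}},
        "spec": {"containers": [container]},
    }


if __name__ == "__main__":
    # Hypothetical image and version, for illustration only.
    template = pod_template("web", "example/web:1.4.2", 8080, "v1")
    print(json.dumps(template, indent=2))
```

A template like this would sit under `spec.template` of a Deployment, with a Service selecting on the `app` label.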

Common problems when troubleshooting include Service unavailability, misconfigured Service types, and network policies that unintentionally block traffic. Addressing them requires a systematic approach: use Kubernetes logs and events to diagnose problems, verify that Service configurations (selectors, ports, and types) are accurate, and run connectivity tests between Pods. Security also remains a significant concern for Kubernetes users; according to the 2022 Red Hat Kubernetes Adoption, Security, and Market Trends Report, 94% of organizations experienced at least one security incident related to Kubernetes in the past year.
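A connectivity test of the kind mentioned above can be as simple as attempting a TCP connection from inside one Pod to a Service or to another Pod. The following Python sketch does exactly that; the target names and ports are hypothetical, and a successful connection only shows that the port is reachable, not that the application behind it is healthy.

```python
import socket
from typing import Dict, List, Tuple


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


def check_targets(targets: List[Tuple[str, int]],
                  timeout: float = 2.0) -> Dict[str, bool]:
    """Check several host/port pairs and report reachability for each."""
    return {f"{host}:{port}": can_connect(host, port, timeout)
            for host, port in targets}


if __name__ == "__main__":
    # Hypothetical Service names; run this from inside a Pod in the cluster.
    targets = [
        ("web.shop.svc.cluster.local", 80),
        ("orders.shop.svc.cluster.local", 8080),
    ]
    for target, reachable in check_targets(targets).items():
        print(f"{target}: {'reachable' if reachable else 'unreachable'}")
```

If a Service is unreachable while its Pods respond directly, the Service's selector, its ports, or a network policy are the likely places to look next.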

This statistic underscores the importance of Role-Based Access Control (RBAC), which mitigates risk by controlling user permissions and access to resources within the cluster. Organizations that have implemented RBAC have reported a marked reduction in unauthorized access incidents, significantly enhancing their security posture. On the cost side, tools like CloudZero let users merge containerized and non-containerized costs, track costs per customer, project, and feature, and receive alerts to prevent overspending on their Kubernetes budget, a perspective on cost management that is especially useful for Chief Technology Officers.
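As one illustration of the RBAC point above, the Python sketch below builds a namespaced Role that grants read-only access to Services and their endpoints, plus a RoleBinding that assigns it to a user. The namespace, role name, and user name are hypothetical; real policies should follow an organization's own least-privilege review.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def read_only_services_role(namespace: str, name: str = "service-reader") -> dict:
    """Role that can read, but not modify, Services and Endpoints."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group
            "resources": ["services", "endpoints"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """RoleBinding that grants the named Role to a single user."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-{user}"},
        "subjects": [{"kind": "User", "name": user,
                      "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name,
                    "apiGroup": RBAC_API_GROUP},
    }


if __name__ == "__main__":
    # Hypothetical namespace and user, for illustration only.
    role = read_only_services_role("shop")
    binding = bind_role_to_user("shop", role["metadata"]["name"], "jane")
    print(json.dumps(role, indent=2))
    print(json.dumps(binding, indent=2))
```

Because the Role omits verbs such as create, update, and delete, a user bound to it can inspect Services in that namespace but cannot change them.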

Conclusion

Kubernetes Services are integral to the successful deployment and management of cloud-native applications, serving as a vital mechanism for facilitating communication among Pods and enhancing application performance. By understanding the different types of services—ClusterIP, NodePort, LoadBalancer, and ExternalName—organizations can tailor their Kubernetes strategies to meet specific operational needs. Each service type plays a unique role, whether it be securing internal communications or managing external traffic, thus underlining the importance of selecting the right type for the intended use case.

Service discovery and load balancing are equally important. By allowing seamless interaction between applications and efficient traffic distribution, Kubernetes ensures high availability and reliability, which are essential in today’s dynamic cloud environments. Best practices such as defining resource limits, implementing health checks, and maintaining thorough documentation further strengthen Kubernetes Services, enabling teams to navigate the complexities of microservices architectures with confidence.

As organizations increasingly adopt Kubernetes, mastering these service management principles will be crucial for optimizing operational capabilities and enhancing security. The continuous evolution of Kubernetes not only addresses the growing demands for efficient resource utilization but also reflects the broader trend of digital transformation across industries. By prioritizing effective service management, organizations can unlock the full potential of their cloud-native applications, ensuring they remain competitive in an ever-evolving technological landscape.