Introduction

DevOps metrics play a crucial role in software development and delivery, acting as a compass to navigate the complexities of the process. These metrics not only track progress but also shed light on areas that require attention, enabling teams to refine their processes for optimal performance. By prioritizing developer experience and leveraging qualitative research methodologies, organizations can capture meaningful data on productivity and satisfaction.

Surveys and direct engagement with developers can uncover insights into the barriers impeding their work, leading to strategies for improvement. In this article, we will explore the different categories of DevOps metrics, the significance of each category, and how organizations can collect and analyze these metrics effectively. Additionally, we will discuss best practices for implementing DevOps metrics and showcase case studies that demonstrate the transformative impact of these metrics on continuous improvement.

Why DevOps Metrics Matter

DevOps metrics act as a guide for navigating the intricacies of software development and delivery. They not only track progress but also highlight areas that require attention, enabling teams to refine their processes. By pairing these metrics with qualitative research and prioritizing developer experience, organizations can capture meaningful data on productivity and satisfaction. Surveys, when used effectively, can uncover the barriers impeding developers and inform strategies for improvement.

Incorporating developer feedback is a fundamental step toward enhancing the tools and processes they rely on. Engaging directly with developers can produce actionable tasks for improving software delivery, ultimately increasing productivity. As reported by industry leaders, such initiatives have produced substantial backlogs of enhancements aimed at improving developer satisfaction.

The DevOps model represents a paradigm shift, advocating for continuous improvement of application development and release. Automation is central to this approach, streamlining the development lifecycle, reducing overhead, and speeding up delivery. Practices like continuous integration, continuous delivery, infrastructure as code, and comprehensive monitoring are pivotal in aligning technological efforts with business objectives.

Evaluating the success of software from the users' perspective relies heavily on customer-facing measures, such as customer satisfaction and Customer Lifetime Value (CLV). These measures offer insight into customer engagement and the long-term value of the customer relationship.

The Developer Experience Lab's research underscores the importance of focusing on sustainable developer experiences rather than solely on productivity. High performers in software delivery are recognized for balancing speed with reliability, as shown by indicators such as change lead time, deployment frequency, change failure rate, and failed deployment recovery time.

The integration of AI in development tools is gaining momentum, promising to enhance performance measures and outcomes. As the adoption of AI-powered tools increases, their influence on the industry and the continuous improvement of software capabilities will become more pronounced.

Understanding DORA Metrics

The DevOps Research and Assessment (DORA) metrics, established by the DORA team at Google, serve as a common reference for organizations that want to improve software development and operations. They benchmark key aspects of software delivery and operational health. Among these, Deployment Frequency and Lead Time for Changes stand out: the first shows how often code reaches production, and the second shows how long a change takes to get from commit to deployment.

These measurements guide organizations in refining their DevOps strategies and also help maintain software quality. That matters a great deal in sectors such as banking, where M&T Bank, an institution with more than a century and a half of service, has embarked on a digital transformation journey. Amid strict regulatory demands and the need for secure, high-quality code, DORA metrics give banks like M&T a lens through which to assess and improve their development practices, with attention to maintainability, performance, and compliance.

Industry research underscores the connection between delivery performance and broader organizational health. Elite performers, who balance throughput with stability, exemplify this link: they report better team performance and employee well-being and set a benchmark for others. The evidence points to continuous improvement, with regular assessment of outcomes at all organizational levels. With qualitative insights supporting these quantitative measures, teams can use data effectively and treat developer experience as a priority alongside output, while AI tools begin to shape the next stage of evolution in this domain.

Key DevOps Metrics Categories

A successful continuous delivery strategy is about not only the speed of deployment but also the quality and reliability of releases. To measure the effectiveness of DevOps practices, several categories of metrics offer insight into different parts of the development lifecycle. Deployment metrics, for instance, can include deployment frequency and the time taken to recover from failed deployments, which are central to understanding the throughput and stability of software changes. Quality metrics, meanwhile, include the change failure rate, which shows how often deployments introduce critical issues.

Operational metrics reflect the observability of a system, which is the ability to assess its current state from outputs such as logs, metrics, and traces. Business metrics link technical performance to commercial results, while team metrics concentrate on the productivity and well-being of the development team.

Recent developments in DevOps tools and practices, such as Microsoft's Radius project, aim to abstract complexities in Kubernetes and promote multi-cloud strategies. This effort has the potential to change the way developers and operators specify and manage resources, affecting deployment and operational measurements.

Moreover, accurate and trustworthy data is paramount. For example, the CVSS (Common Vulnerability Scoring System) has introduced new metrics for more detailed vulnerability assessment, which can help improve quality measures. Insights from the DORA (DevOps Research and Assessment) report emphasize the correlation between software delivery performance and overall organizational health, urging regular measurement of outcomes for continuous improvement.

In the field of AI, the growing adoption of AI-driven tools is expected to have a significant impact on performance measurement in software development. These tools promise to improve developer productivity and, as a result, the metrics linked to team performance.

To sum up, DevOps metrics span several categories, each providing distinct insights that, when monitored and used effectively, can advance an organization's technological vision and innovation while keeping it aligned with business goals.

Deployment Metrics

Deployment metrics indicate how effective and successful software release procedures are. Key indicators such as deployment frequency, change failure rate, mean time to recover, and change lead time are crucial in evaluating how swiftly and reliably code moves from commit to deployment. Organizations use these metrics not only to identify and remove bottlenecks but also to improve the overall deployment pipeline.
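As a rough illustration of how the four indicators named above could be computed, the following Python sketch summarizes a small list of deployment records. The `Deployment` record shape, its field names, and the `deployment_metrics` helper are assumptions made for this example, not part of any particular tool; a real pipeline would pull equivalent timestamps from its CI/CD and incident systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did it cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def hours(total: timedelta) -> float:
    """Convert a timedelta to hours."""
    return total.total_seconds() / 3600


def deployment_metrics(deploys: list[Deployment], window_days: int) -> dict:
    """Summarize the four indicators over an observation window."""
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    failures = [d for d in deploys if d.failed]
    recoveries = [d.restored_time - d.deploy_time
                  for d in failures if d.restored_time is not None]
    return {
        "deployment_frequency_per_day": len(deploys) / window_days,
        "mean_lead_time_hours": hours(sum(lead_times, timedelta())) / len(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        # None when no failed deployment has a recorded restore time.
        "mean_time_to_recover_hours": (
            hours(sum(recoveries, timedelta())) / len(recoveries)
            if recoveries else None
        ),
    }


# Sample data: four deployments in one week, one of which failed.
start = datetime(2024, 1, 1, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=4)),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=8),
               failed=True, restored_time=start + timedelta(days=1, hours=10)),
    Deployment(start + timedelta(days=2), start + timedelta(days=2, hours=6)),
    Deployment(start + timedelta(days=3), start + timedelta(days=3, hours=2)),
]
summary = deployment_metrics(sample, window_days=7)
print(summary)
```

With this sample data the summary reports a mean lead time of 5 hours, a change failure rate of 0.25, and a mean time to recover of 2 hours. Means are used here for brevity; medians or percentiles may be more robust when a few slow changes dominate.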

Recent research highlights the value of deployment metrics in predicting organizational performance, team performance, and even employee well-being. High-performing entities, identified as 'Elite performers', exhibit exceptional throughput and stability, often marked by frequent deployments and rapid recovery from failures. These outcomes are achieved through regular measurement and continuous refinement of software delivery processes.

Moreover, AI tools have shown promising potential for improving these performance measures. As the technology advances, its incorporation into development and operations is expected to keep improving deployment metrics. It's imperative that organizations apply these insights pragmatically, tailoring improvements to their unique contexts and challenges.

Lead-Time Metrics

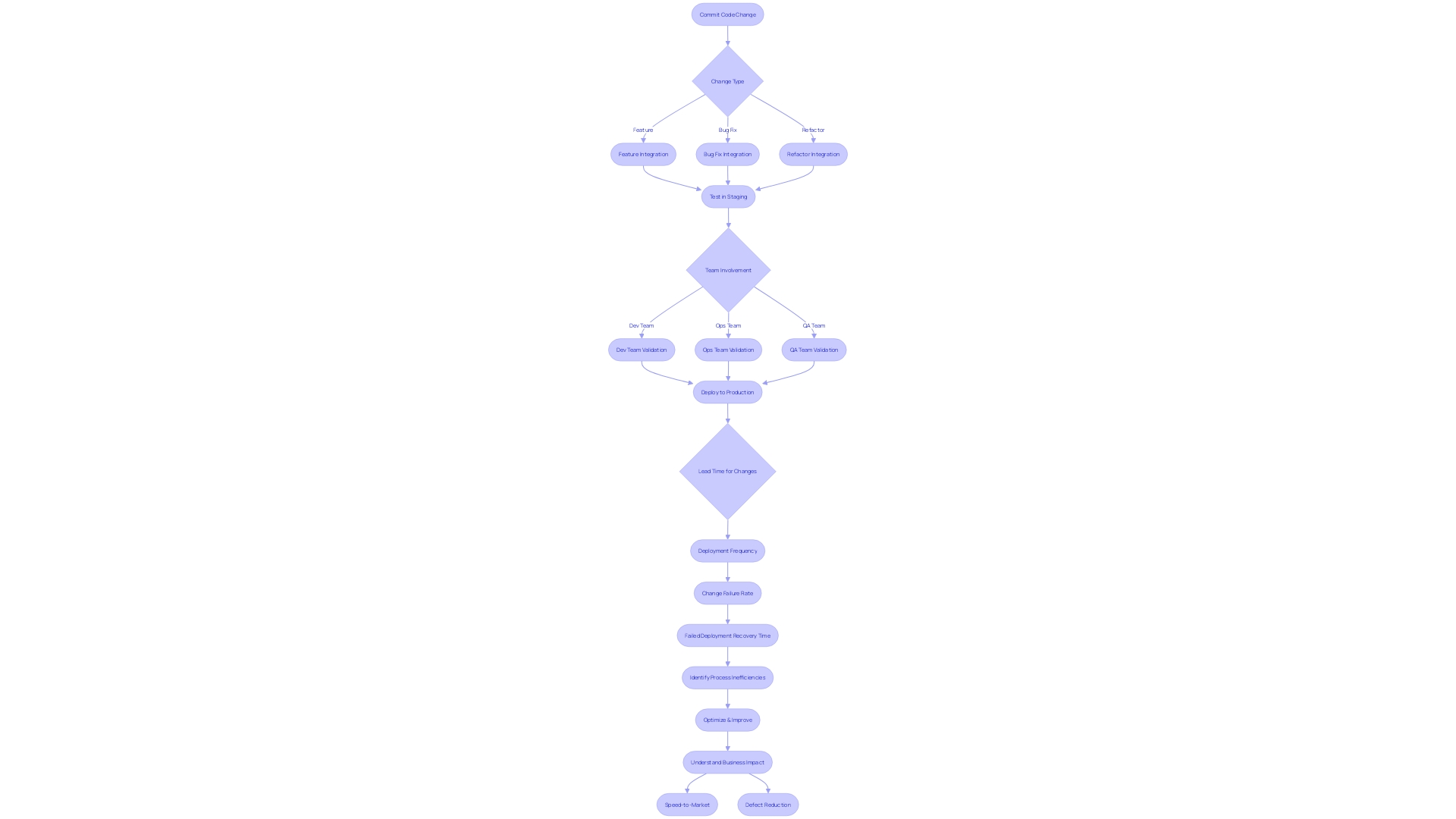

Metrics such as lead time for changes are pivotal in understanding the velocity of a development pipeline from commit to deployment. They track how long it takes for code changes to reach a production environment, and they can be broken down by change type or by team. Examining lead-time metrics helps identify process inefficiencies and makes optimization a continuous practice rather than a one-off goal.

The research by Markus Borg presented in the 2022 Code Red paper underscores the business impact of code quality on speed-to-market and defect reduction. Empirical data, such as how the maximum time to implement a ticket varies with code quality, can make the business advantages of a robust codebase much clearer.

In the face of financial constraints and the emergence of transformative technologies, capturing, monitoring, and using these measurements becomes a foundation for improving developer efficiency and project management, a discipline that has evolved significantly from the Scientific Management of the early 20th century to modern methodologies.
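The breakdown of lead time by change type or team mentioned above can be sketched in a few lines of Python. The record layout, the team names, and the `median_lead_time_by` helper are hypothetical and chosen only for illustration; the median is used because a few unusually slow changes can distort a mean.

```python
from collections import defaultdict
from statistics import median

# Hypothetical records: (change_type, team, lead_time_hours)
changes = [
    ("feature", "payments", 30.0),
    ("feature", "search", 50.0),
    ("bugfix", "payments", 4.0),
    ("bugfix", "search", 6.0),
    ("bugfix", "payments", 8.0),
]


def median_lead_time_by(records, key_index):
    """Group lead times by the field at key_index and return the median per group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key_index]].append(record[2])
    return {key: median(values) for key, values in groups.items()}


by_type = median_lead_time_by(changes, 0)  # group by change type
by_team = median_lead_time_by(changes, 1)  # group by team
print(by_type)
print(by_team)
```

In this sample, features take a median of 40 hours against 6 hours for bug fixes, which is the kind of gap that would prompt a closer look at how larger changes are reviewed and released.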

Quality Metrics

To ensure the development and delivery of high-quality applications, it is essential to establish a strong set of quality metrics. These are not just figures; they are a way of quantitatively evaluating the reliability and stability of the software. Key indicators include the defect escape rate, which measures how often defects slip through testing phases; mean time to detect, which gauges how quickly issues are identified; and mean time to resolve, which tracks the average time taken to fix defects.
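To make the three indicators above concrete, here is a minimal Python sketch. It assumes one common definition of defect escape rate (defects found in production divided by all defects found) and a hypothetical `Defect` record with three timestamps; teams may define and record these differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Defect:
    """One defect with its key timestamps (hypothetical record shape)."""
    introduced: datetime
    detected: datetime
    resolved: datetime
    found_in_production: bool  # True if it slipped past testing


def defect_escape_rate(defects):
    """Share of defects that were found in production rather than in testing."""
    return sum(d.found_in_production for d in defects) / len(defects)


def mean_time_to_detect_hours(defects):
    """Average time from introduction to detection, in hours."""
    return mean((d.detected - d.introduced).total_seconds() for d in defects) / 3600


def mean_time_to_resolve_hours(defects):
    """Average time from detection to resolution, in hours."""
    return mean((d.resolved - d.detected).total_seconds() for d in defects) / 3600


base = datetime(2024, 5, 1, 8, 0)
defects = [
    Defect(base, base + timedelta(hours=2), base + timedelta(hours=3), False),
    Defect(base, base + timedelta(hours=4), base + timedelta(hours=7), False),
    Defect(base, base + timedelta(hours=6), base + timedelta(hours=8), False),
    Defect(base, base + timedelta(hours=12), base + timedelta(hours=22), True),
]

print(defect_escape_rate(defects))          # 0.25
print(mean_time_to_detect_hours(defects))   # 6.0
print(mean_time_to_resolve_hours(defects))  # 4.0
```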

The importance of these metrics is highlighted by academic research, like that conducted by Paul Ralph and his team, who stress the value of mature evaluation methods in software engineering. Moreover, a study highlighted in the 'Code Red' paper by Markus Borg et al. draws a direct correlation between code quality and critical business outcomes, including market responsiveness and defect rates, reinforcing the business value of a well-maintained codebase.

An examination of the past 15 years of the World Quality Report reveals an evolution in the quality engineering and testing domain, offering insight into how companies have used quality metrics to optimize their processes. Related industry research from DORA emphasizes the importance of continuous improvement and measurement in achieving high delivery performance, as indicated by its four performance levels, including the elite performers who excel in both throughput and stability.

As industries continue to adopt AI, machine learning (ML) models are becoming increasingly common in application testing and quality assurance. These models, which can be evaluated using metrics tailored to specific tasks and data sets, are starting to change how software quality is approached, from bug detection to predictive analytics.

Through the lens of Google's research on developer productivity, a 'theory of quality' emerges, elucidating four distinct types of quality that interact with each other. This theory serves as a foundation for understanding how quality measures can be leveraged to enhance developer satisfaction and productivity.

In summary, through careful monitoring and analysis of quality metrics, organizations can identify areas for process improvement, enhancing the overall quality and reliability of their applications, and, ultimately, delivering value to the business and its customers.

Operations Metrics

In the realm of software development, operational efficiency is paramount. Metrics such as mean time between failures (MTBF), mean time to restore (MTTR), and system availability are not just indicators of performance but are crucial for the continuous provision of value. For instance, TBC Bank, a leading institution in Georgia, has embraced the mission to expedite time-to-market for digital products. By optimizing their operations metrics, they aim to provide an exceptional banking experience for both customers and staff.
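The three operational indicators named above are related by simple arithmetic, sketched below in Python. The function names and the sample figures are illustrative assumptions; the formulas are the conventional ones, in which availability is the share of time a system is up, or equivalently MTBF / (MTBF + MTTR).

```python
def mtbf_hours(total_uptime_hours: float, failure_count: int) -> float:
    """Mean time between failures: operating time divided by number of failures."""
    return total_uptime_hours / failure_count


def mttr_hours(total_downtime_hours: float, failure_count: int) -> float:
    """Mean time to restore: downtime divided by number of failures."""
    return total_downtime_hours / failure_count


def availability(mtbf: float, mttr: float) -> float:
    """Fraction of time the system is available."""
    return mtbf / (mtbf + mttr)


# Illustrative figures: a 1000-hour period with 5 outages totalling 10 hours.
period_hours = 1000.0
downtime_hours = 10.0
failures = 5

mtbf = mtbf_hours(period_hours - downtime_hours, failures)  # 198.0 hours
mttr = mttr_hours(downtime_hours, failures)                 # 2.0 hours
print(f"MTBF={mtbf:.1f}h MTTR={mttr:.1f}h availability={availability(mtbf, mttr):.2%}")
```

The same availability figure can be reached by dividing uptime by the total period (990 / 1000), which is a useful cross-check when the inputs come from different systems.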

In today's competitive landscape, a 25% average increase in developer productivity, as reported by Turing's AI-accelerated development study, highlights the transformative power of generative AI tools in software project delivery. This aligns with the industry's overall surge in data-related professions and underscores the need for enterprises to adapt to this rapid evolution.

Furthermore, the utilization of Latent Generative Models (LGMs) for analyzing time series data exemplifies the innovative approaches in forecasting business trends and operational performance. LGMs, by capturing the joint distribution between variables, offer rich insights that are essential for strategic decision-making.

In synergy with these technological advancements, the Top 10 Software Development Tools for 2024 have been identified, focusing on features such as issue-tracking, sprint management, automation, and collaboration. Choosing the right development tools is crucial, as they significantly impact the success or failure of a project. Today's market is replete with a plethora of tools, each designed to meet specific needs, and selecting the one that aligns with your team’s technological requirements and preferences is a critical endeavor.

The history of productivity management, with its roots in Frederick Taylor's Scientific Management theory, has evolved to include methodologies like CPM and PERT. These methodologies have influenced the structuring of work and project management across various sectors.

Lastly, the relationship between code quality and business impact, as demonstrated in the Code Red research paper, is undeniable. High code quality directly correlates with faster speed-to-market and a reduced number of defects, making the case for investment in tools and practices that ensure a healthy codebase.

Business Metrics

Business metrics connect technology efforts to business objectives such as revenue growth, customer satisfaction, and faster time to market. For institutions like M&T Bank, with a heritage of over a century and a half, the digital transformation of the banking industry underscores the urgency of adopting agile development practices to meet rising consumer expectations and stringent regulatory demands. A crucial element in this transition is the implementation of Clean Code standards, which support the performance and maintainability of the systems behind vital banking operations.

Tracking business metrics is not just about understanding the direct effect on financial results; it is also about understanding how software development practices contribute to a strong developer experience (DevEx), which is vital for sustained productivity. Insights from the Developer Experience Lab and initiatives like the research by Microsoft and GitHub during the pandemic highlight the shift from simply maximizing developer output to enhancing the developer environment.

Quantitative indicators, like the ones proposed by DevOps Research and Assessment (DORA), offer a structure for measuring the effectiveness of software delivery. Key performance indicators include deployment frequency, change lead time, change failure rate, and recovery time from failed deployments. Elite performance levels, as identified by DORA, demonstrate that top-tier organizations maintain a balance of throughput and stability, setting a benchmark for continuous improvement in software delivery.

The incorporation of AI tools, as indicated by recent survey data, is still in its early stages, yet it already shows promise in enhancing performance measurements vital to businesses. As these technologies become more prevalent, their influence on DevOps practices and, subsequently, on business measurements, is anticipated to expand.

Team Metrics

Performance and collaboration metrics are crucial in optimizing the software development lifecycle. Key performance indicators such as team velocity, cycle time, and employee satisfaction offer insight into how well team dynamics and processes are working.

The historical evolution of productivity management, from Frederick Taylor's Scientific Management to project management methodologies like CPM and PERT, underscores the significance of structured workflows and labor processes in enhancing industrial efficiency. This evolution has paved the way for a more sophisticated approach to managing projects across various sectors, including IT and services.

By emphasizing qualitative metrics, organizations can capture and use data to bolster developer productivity, which is particularly pertinent in the current climate of fiscal prudence and rapid technological advancement. For example, the 'Code Red' study emphasizes the tangible business benefits of high code quality, finding that a strong codebase can speed up market release and reduce defects. Furthermore, engaging developers in conversations about their experiences can yield actionable insights to refine software delivery and boost productivity. By adopting a data-driven approach to measuring team performance, companies can foster an environment conducive to continuous improvement and innovation.
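As a small illustration of the velocity and cycle-time indicators mentioned above, the sketch below computes both from hand-written sample data. The helper names, the use of story points, and the start and finish dates are assumptions for this example; issue trackers expose this information in their own formats.

```python
from datetime import date
from statistics import mean


def average_velocity(points_per_sprint):
    """Average story points completed per sprint."""
    return mean(points_per_sprint)


def cycle_times_days(work_items):
    """Days from start of work to completion for each (started, finished) pair."""
    return [(finished - started).days for started, finished in work_items]


# Hypothetical data: three sprints and three completed work items.
completed_points = [21, 18, 24]
work_items = [
    (date(2024, 3, 1), date(2024, 3, 4)),
    (date(2024, 3, 2), date(2024, 3, 7)),
    (date(2024, 3, 5), date(2024, 3, 9)),
]

velocity = average_velocity(completed_points)
cycle_times = cycle_times_days(work_items)
print(velocity, cycle_times, mean(cycle_times))
```

Numbers like these are most useful as a team's own trend over time; comparing raw velocity across teams is generally unreliable because point scales differ.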

How to Collect and Analyze DevOps Metrics

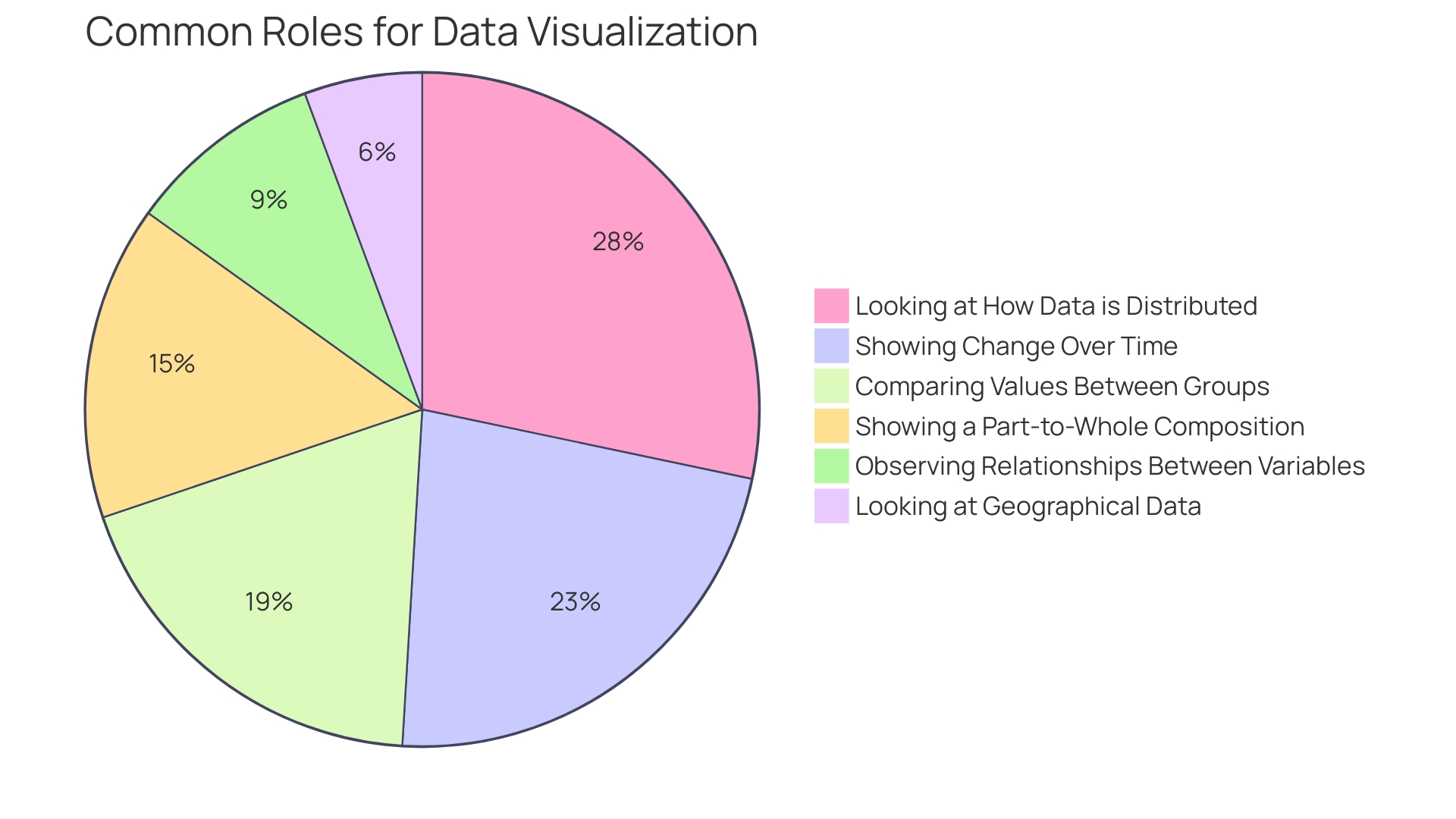

Putting DevOps metrics to effective use requires a well-defined approach. Organizations must pinpoint the precise metrics they wish to monitor, identify the sources of data, and decide how that data will be aggregated. After collection, the data must be analyzed rigorously to extract actionable insights. This often involves data visualization tools, dashboards, and robust statistical methods.

For example, telemetry, the practice of collecting diverse signals such as logs, traces, and operational metadata, is the starting point for understanding how systems behave. A strategic plan for telemetry includes identifying which signals to capture and determining how to capture them. Once telemetry is in place, organizations can move from simply reacting to issues to proactively managing and improving their workflow efficiency, security, and predictability.
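One lightweight way to start capturing such signals is to emit structured events as JSON lines that a log pipeline can later parse. The sketch below uses only the Python standard library; the event name, field names, logger name, and the `make_event` helper are hypothetical choices for illustration, not a prescribed schema.

```python
import json
import logging
import sys
from datetime import datetime, timezone


def make_event(name: str, **fields) -> str:
    """Serialize one telemetry event as a single JSON line."""
    payload = {
        "event": name,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        **fields,
    }
    return json.dumps(payload, sort_keys=True)


# Hypothetical logger name; events go to standard output in this sketch.
logger = logging.getLogger("delivery.telemetry")
logger.setLevel(logging.INFO)
logger.addHandler(logging.StreamHandler(sys.stdout))

logger.info(make_event(
    "deployment_finished",
    service="checkout",
    status="success",
    duration_seconds=312,
))
```

Deciding on a small, stable set of event names and fields up front is what makes later aggregation into metrics such as deployment frequency straightforward.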

Moreover, tools like Apache DevLake, currently incubating at The Apache Software Foundation, and the Splunk App for Data Science and Deep Learning, which recently updated to version 5.1.1, exemplify the tooling that supports these efforts. With predictive analytics capabilities, such tools can help organizations keep pace with requirements like the T+1 settlement directive, which requires U.S. securities trades to settle within one business day.

The core of DevOps is to promote ongoing improvement in application development and delivery. This is achieved through automation tools designed to streamline the software development lifecycle and bridge the gap between development, operations, and quality assurance. Practices such as continuous integration, continuous delivery, and infrastructure as code are instrumental in this process, reducing overhead and shortening time to market.

In summary, the careful gathering and examination of performance measurements is not just an operational duty but a strategic effort that, when carried out with accuracy and the appropriate tools, can greatly improve an organization's ability to accomplish its business goals.

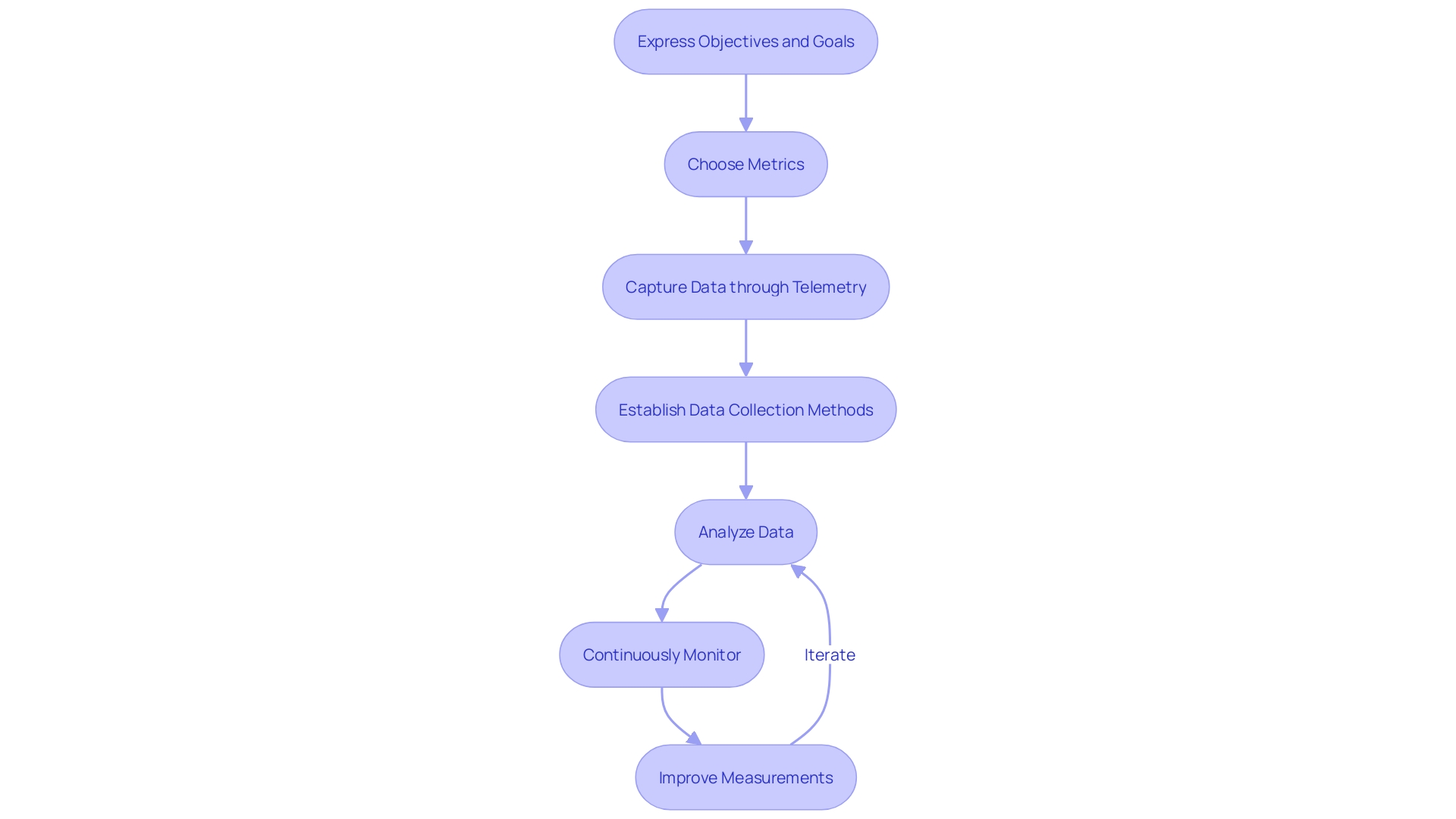

Best Practices for Implementing DevOps Metrics

When implementing a measurement system for development and operations, it is crucial to follow a structured approach to get meaningful outcomes. Start by defining the objectives and goals that the DevOps metrics are meant to support. Metrics should be chosen carefully so that they offer valuable insights and align with your strategic targets. Telemetry, the process of gathering signals like logs, traces, and operational metadata, is essential for capturing the data these metrics need. It is imperative to plan what to capture and how, so that you have a clear view of system operations.

To make the metrics dependable, establish strong data collection methods. This could involve integrating tools such as the Splunk App for Data Science and Deep Learning, which is updated regularly with new features and fixes. It is also essential to verify the accuracy of collected data, because accurate and reliable information is the basis for meaningful measurement in software development and operations.

Analyzing the data transforms it into actionable insights. Visual representations and reports should be clear and concise to facilitate informed decision-making. Keep in mind that DevOps metrics are not static; they need continuous monitoring and refinement. By consistently monitoring these metrics, organizations can pinpoint areas for improvement and enhance their measurement and analysis processes.
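Continuous monitoring can begin with something as simple as comparing the latest value of a metric against its recent history. The following Python sketch flags a value that sits more than a chosen number of standard deviations from the historical mean. The function name, the threshold of 2.0, and the weekly lead-time figures are assumptions for illustration; this is a basic heuristic, not a substitute for proper statistical process control.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, latest, threshold=2.0):
    """Return True if latest is more than threshold standard deviations from the mean of history."""
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any different value counts as a deviation.
        return latest != history[0]
    return abs(latest - mean(history)) / spread > threshold


# Hypothetical weekly median lead times, in hours.
weekly_lead_time = [20, 22, 19, 21, 20]

print(deviates_from_baseline(weekly_lead_time, 30))  # True: well outside the usual range
print(deviates_from_baseline(weekly_lead_time, 21))  # False: within normal variation
```

A flagged value is a prompt to investigate rather than a verdict, since a single unusual week may reflect a holiday or a large planned release.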

In this context, the rise of DevOps has marked a crucial shift toward continuous improvement in how applications are built. Automation tools for continuous integration, continuous delivery, infrastructure as code, and configuration management have connected development, operations, and quality assurance. Automating these parts of the development lifecycle not only streamlines processes but also aligns them more closely with business objectives, resulting in reduced overhead and faster market release.

Finally, it's worth noting that developer experience (DevEx) has emerged as a vital aspect, with a focus on creating an optimal environment for developers rather than merely increasing output. Insights from the Developer Experience Lab, a collaboration between Microsoft and GitHub, highlight that improving developers' work environments can lead to better productivity outcomes without the adverse effects of burnout or reduced retention.

Case Studies: Using DevOps Metrics for Continuous Improvement

DevOps methodologies, when implemented successfully, can have a transformative effect on development and delivery processes within organizations. By embracing qualitative measurements, companies can meaningfully track and enhance developer productivity, a pivotal concern in the current era of fiscal tightening and technological innovation. The Developer Experience Lab's research highlights the significance of optimizing the developer experience (DevEx) to ensure sustainable gains in productivity, rather than solely focusing on output. These insights are reinforced by the return of the 'Elite' performance level in the latest industry analysis, which recognizes organizations that achieve both high throughput and stability in software delivery.

To put these findings in context, consider the measures used to assess software delivery performance: change lead time, deployment frequency, change failure rate, and failed deployment recovery time. These metrics not only indicate an organization's technical capability but also predict team performance and employee well-being. Elite performers stand out by excelling in these areas, demonstrating the feasibility and benefits of a well-implemented DevOps strategy.

Moreover, the integration of AI in development tools is on the rise, with a majority of surveyed professionals already incorporating some form of AI into their workflows. While full-scale adoption may be on the horizon, the current focus is on continuous improvement. Organizations are encouraged to regularly measure outcomes at all levels to foster high performance, as per the insights from DORA's research.

These advancements and findings are crucial in a landscape where developer productivity is a critical concern. By utilizing surveys and other tools to identify and alleviate bottlenecks, organizations can improve the developer experience, leading to enhanced performance and well-being. This strategy can be seen as a shift from traditional outcome-focused approaches to a more holistic view that prioritizes sustainable development practices.

Conclusion

In conclusion, DevOps metrics are crucial for software development and delivery. By prioritizing developer experience and leveraging qualitative research, organizations can capture meaningful data on productivity and satisfaction. Different categories of metrics, such as deployment, quality, operations, business, and team metrics, provide insights into various aspects of the development process.

Implementing DevOps metrics requires a structured approach. Organizations must choose metrics aligned with their goals and establish reliable data collection methods. Analyzing the data and transforming it into actionable insights is essential for continuous improvement.

Automation practices such as continuous integration and continuous delivery streamline processes and align them with business objectives.

Case studies highlight the transformative impact of DevOps metrics. By optimizing the developer experience and focusing on sustainable productivity, organizations can achieve high performance and employee well-being. The integration of AI in development tools promises to enhance performance measures and outcomes.

In summary, DevOps metrics enable organizations to navigate software development effectively. By continuously monitoring and refining these metrics, they drive continuous improvement, align technological efforts with business goals, and deliver value to both customers and the organization.