Introduction

In the rapidly evolving landscape of data management, AWS Glue stands out as a comprehensive solution for organizations seeking to streamline their Extract, Transform, Load (ETL) processes. As a fully managed service, AWS Glue offers a suite of powerful tools designed to simplify data integration, enhance operational efficiency, and provide valuable insights. This article delves into the essential components of AWS Glue, exploring how its Data Catalog, Crawlers, and ETL Jobs contribute to effective data management.

Furthermore, it examines best practices for achieving operational excellence in AWS Glue data pipelines, underscoring the importance of automation, robust error-handling, and real-time monitoring. By leveraging AWS Glue's capabilities, organizations can not only optimize their data workflows but also drive innovation and maintain a competitive edge in their respective industries.

Key Components of AWS Glue

AWS Glue is a fully managed ETL (Extract, Transform, Load) service designed to simplify and speed up data integration for analytics. At its core, AWS Glue combines several components that work together to deliver a smooth data management experience.

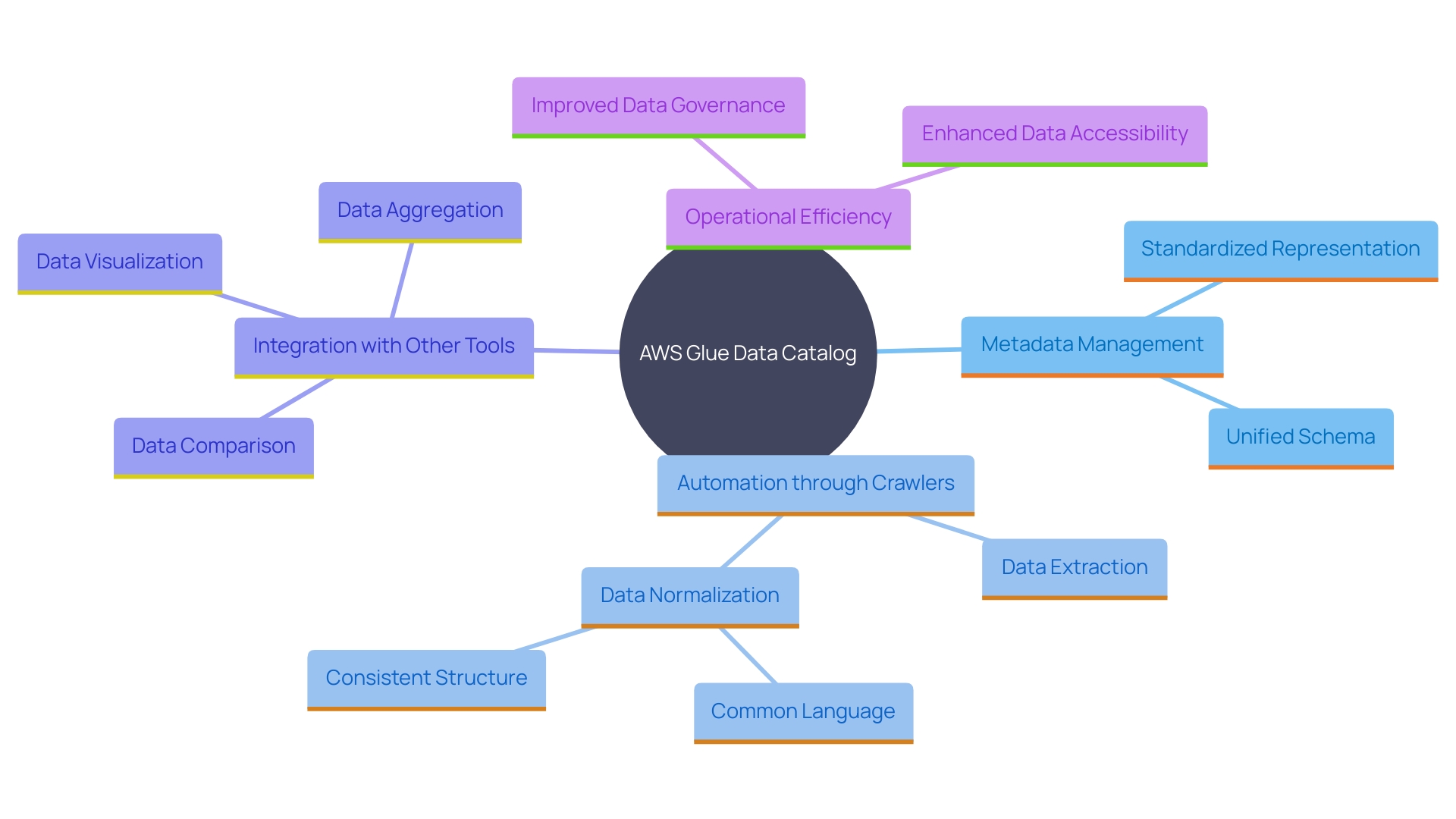

The Data Catalog serves as a centralized metadata repository, allowing users to store, retrieve, and manage metadata effectively. This feature is especially useful for organizations migrating on-premises data stores to the AWS Cloud, because it supports validating and comparing migrated content to confirm accuracy and completeness.

Crawlers automate the discovery of data sources, making it simple to identify and catalog content across environments. This automation reduces manual effort and improves the reliability of data discovery.
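As a rough illustration of how a crawler might be defined, the sketch below builds the keyword arguments for the Glue `CreateCrawler` API as a plain dictionary. The crawler name, IAM role ARN, database name, S3 path, and schedule are all hypothetical placeholders, and the helper `build_crawler_request` is an illustrative name rather than part of any AWS library.

```python
def build_crawler_request(name, role_arn, database, s3_path, schedule=None):
    """Build keyword arguments for the Glue CreateCrawler API."""
    request = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        # Update changed table definitions in place; only log deleted objects.
        "SchemaChangePolicy": {
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            "DeleteBehavior": "LOG",
        },
    }
    if schedule:
        # Glue schedules use a cron(...) expression, evaluated in UTC.
        request["Schedule"] = schedule
    return request


# All values below are hypothetical placeholders.
request = build_crawler_request(
    name="sales-raw-crawler",
    role_arn="arn:aws:iam::123456789012:role/GlueCrawlerRole",
    database="sales_raw",
    s3_path="s3://example-bucket/sales/raw/",
    schedule="cron(0 1 * * ? *)",
)
print(request["Targets"])

# With AWS credentials configured, the request could be submitted with boto3:
#   import boto3
#   boto3.client("glue").create_crawler(**request)
```

Keeping the request as data makes it easy to review or unit-test the crawler definition before anything is created in an AWS account.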

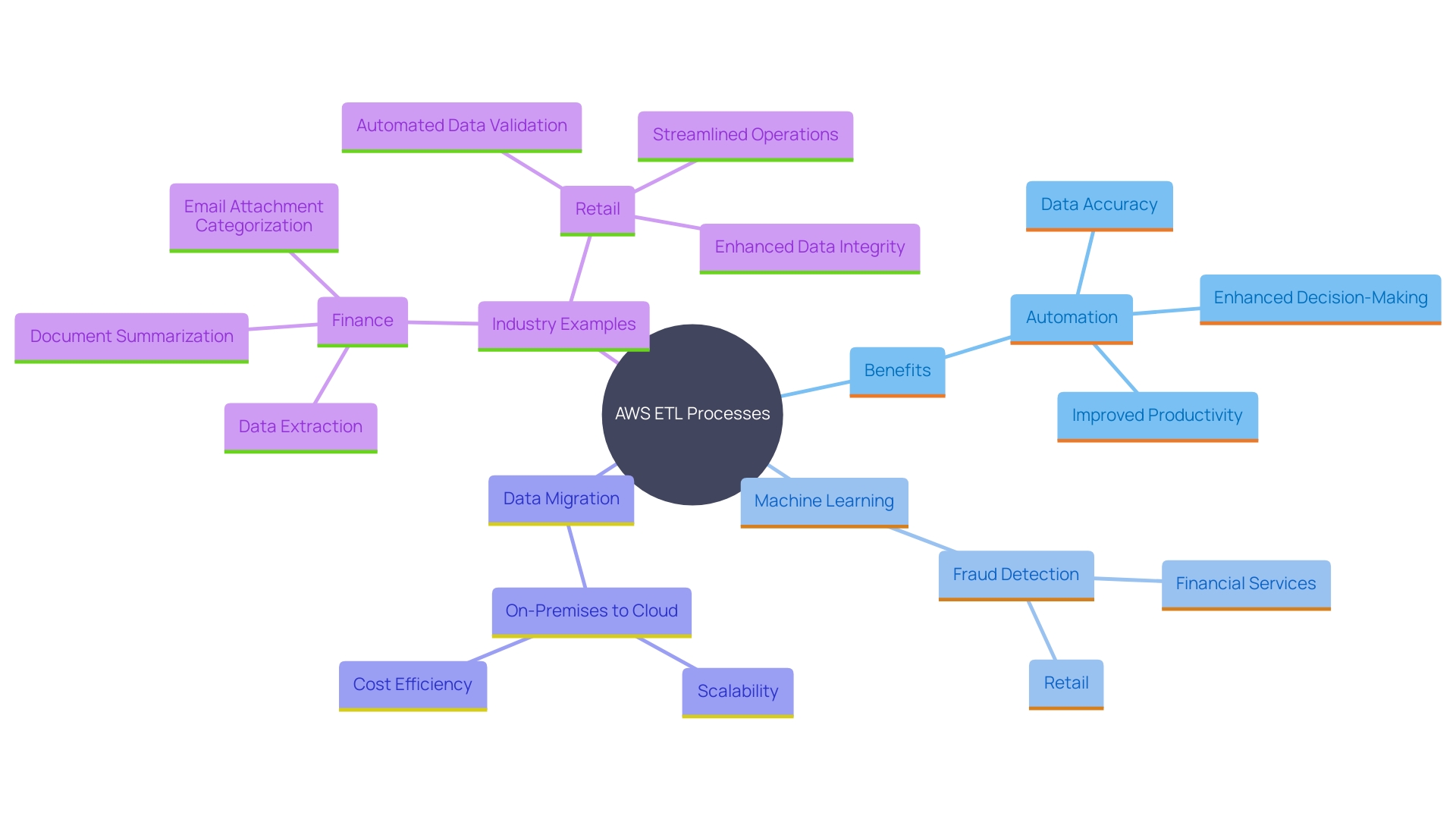

Glue Jobs allow users to execute ETL processes with minimal effort. These jobs can be customized to fit specific business needs, transforming data and loading it into target destinations. For instance, in the retail industry, ETL automation can consolidate sales data, manage inventory levels, and provide real-time insights into sales performance and stock availability.

Glue Triggers provide a robust mechanism for starting jobs on a schedule or in response to events. They ensure that ETL jobs run at planned times or in reaction to specific events, improving the efficiency and responsiveness of workflows.
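The two trigger styles described above could be sketched as request dictionaries for the Glue `CreateTrigger` API. This is a minimal sketch: the trigger and job names are hypothetical, and the helper functions `scheduled_trigger` and `conditional_trigger` are illustrative names, not AWS-provided functions.

```python
def scheduled_trigger(name, job_name, cron_expression):
    """Keyword arguments for a trigger that starts a job on a cron schedule."""
    return {
        "Name": name,
        "Type": "SCHEDULED",
        "Schedule": f"cron({cron_expression})",
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def conditional_trigger(name, upstream_job, downstream_job):
    """Keyword arguments for a trigger that fires after another job succeeds."""
    return {
        "Name": name,
        "Type": "CONDITIONAL",
        "Predicate": {
            "Conditions": [
                {
                    "LogicalOperator": "EQUALS",
                    "JobName": upstream_job,
                    "State": "SUCCEEDED",
                }
            ]
        },
        "Actions": [{"JobName": downstream_job}],
        "StartOnCreation": True,
    }


# Hypothetical names: run the extract job nightly at 02:00 UTC,
# then start the load job only once the extract job has succeeded.
nightly = scheduled_trigger("nightly-extract", "extract-sales", "0 2 * * ? *")
chained = conditional_trigger("load-after-extract", "extract-sales", "load-sales")
print(nightly["Schedule"])

# With AWS credentials configured, each request could be submitted with boto3:
#   import boto3
#   boto3.client("glue").create_trigger(**nightly)
```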

Using AWS Glue's range of tools, organizations can tap into the potential of their data to foster innovation, improve operations, and gain valuable insights without the challenges of conventional data management systems.

Leveraging AWS Glue Data Catalog and Crawlers for Efficient Data Management

The AWS Glue Data Catalog offers a persistent metadata repository for managing data assets across various sources. It lets users organize, manage, and query their data efficiently, which improves governance and accessibility. Automated crawlers scan data sources and update the Data Catalog with detailed schema information. This automation makes it easier to manage large data lakes and keeps the catalog an accurate representation of the underlying data. Businesses migrating their data to the AWS Cloud can use the Data Catalog to verify data after the transfer, an essential step in preventing project failures. Combined with tools like Amazon EMR and Apache Griffin, it offers a configuration-based approach to validating large datasets, thereby streamlining data management processes. As shown by GoDaddy's effort to improve batch processing tasks, the AWS Glue Data Catalog plays a crucial role in boosting operational efficiency and generating business insights through its organized and automated data management capabilities.
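To make the post-migration check more concrete, here is a minimal sketch that reads a table's columns from the Data Catalog and compares them with the source schema. It is a simplified stand-in and not the Amazon EMR and Apache Griffin setup mentioned above. The function names, database name, and table name are hypothetical, and `FakeGlueClient` only imitates the shape of a boto3 `get_table` response so the example runs without AWS access.

```python
def catalog_columns(glue_client, database, table):
    """Return {column name: type} for a table registered in the Data Catalog."""
    response = glue_client.get_table(DatabaseName=database, Name=table)
    # Partition keys live under Table["PartitionKeys"] and are not included here.
    columns = response["Table"]["StorageDescriptor"]["Columns"]
    return {column["Name"]: column["Type"] for column in columns}


def compare_schemas(source_columns, target_columns):
    """List columns that are missing or have a different type in the target."""
    issues = []
    for name, source_type in sorted(source_columns.items()):
        if name not in target_columns:
            issues.append(f"missing column: {name}")
        elif target_columns[name] != source_type:
            issues.append(
                f"type mismatch on {name}: {source_type} != {target_columns[name]}"
            )
    return issues


class FakeGlueClient:
    """Stand-in for boto3.client('glue'), used only for this illustration."""

    def get_table(self, DatabaseName, Name):
        return {
            "Table": {
                "StorageDescriptor": {
                    "Columns": [
                        {"Name": "order_id", "Type": "bigint"},
                        {"Name": "amount", "Type": "string"},
                    ]
                }
            }
        }


# Hypothetical source schema captured from the on-premises system.
source = {"order_id": "bigint", "amount": "double", "order_date": "date"}
migrated = catalog_columns(FakeGlueClient(), "sales_migrated", "orders")
issues = compare_schemas(source, migrated)
for issue in issues:
    print(issue)
```

In a real migration, a schema check like this would typically be paired with row counts or checksums on the data itself, since matching metadata alone does not prove that the content was copied correctly.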

Streamlining ETL Processes for Efficient Data Engineering

Using AWS Glue for ETL processes streamlines data workflows and reduces manual intervention, enabling engineers to focus on data quality and governance. The built-in transformations and job scheduling features help automate complex, distributed applications and improve productivity. For example, financial institutions can use ETL automation to extract transaction data from various sources, apply machine learning for fraud detection, and load the results into real-time monitoring systems, supporting early fraud detection and reducing errors.

Moreover, organizations moving on-premises data stores to the AWS Cloud can benefit from Glue by validating large datasets post-migration, using tools like Amazon EMR and Apache Griffin. This approach supports data accuracy and project success without the time-consuming development of custom solutions.

Implementing efficient data models and optimizing data flows can significantly enhance performance and reduce latency. This is evident in retail firms that merge data from various point-of-sale systems, ensuring uniform formats and immediate inventory insights, thereby improving sales performance and decision-making.

Bosch's creation of a lean, evolutionary data platform for efficient green energy production exemplifies the potential of automated ETL workflows. By focusing on sustainability and leveraging deep engineering expertise, Bosch has created solutions that not only meet rising electricity demands but also contribute to a greener future. The evolution of ETL processes towards automation highlights how necessary these advancements are for modern data transformation.

Best Practices for Operational Excellence in AWS Glue Data Pipelines

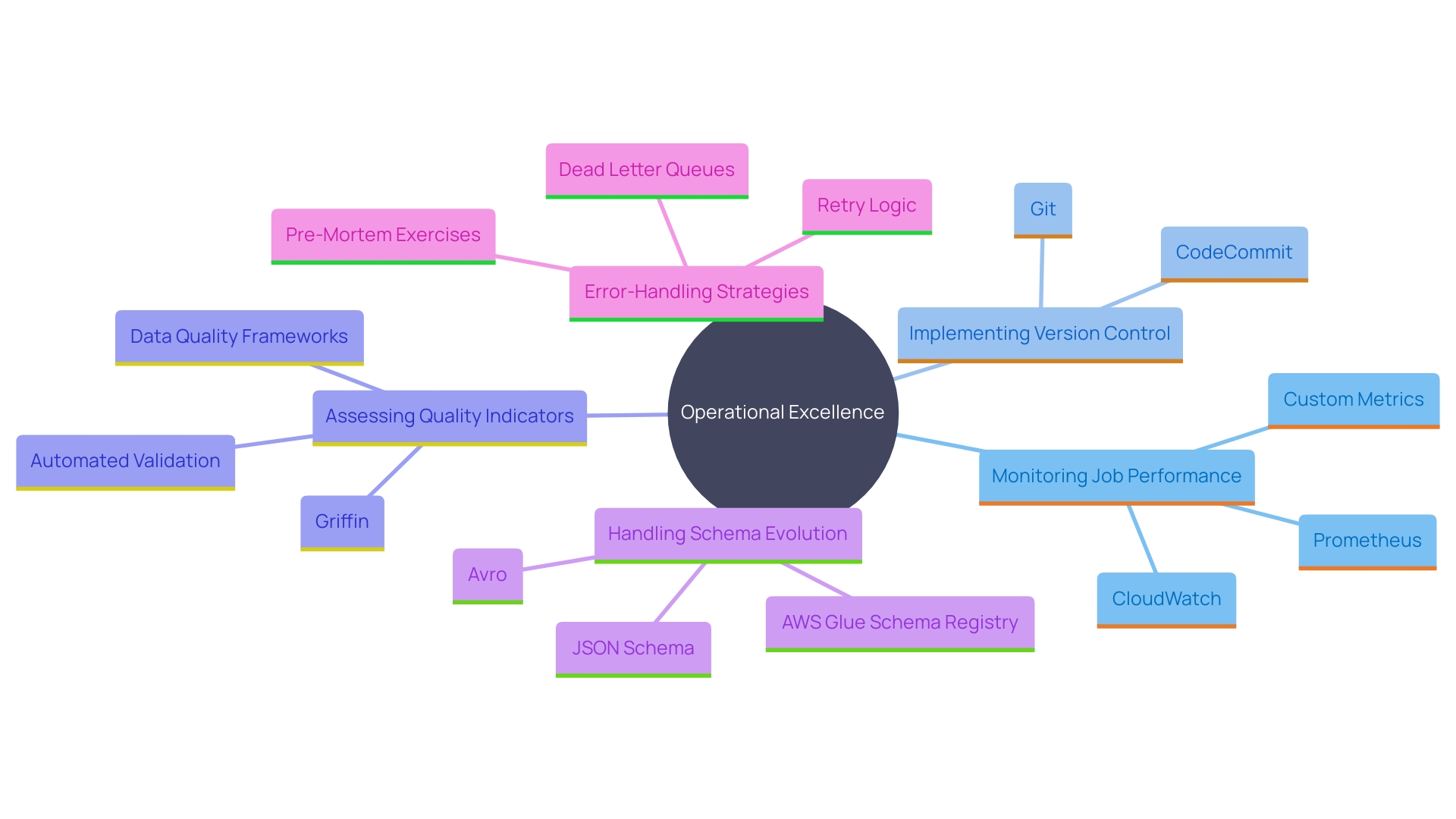

To achieve operational excellence in AWS Glue data pipelines, organizations should adopt several best practices. Monitoring job performance through Amazon CloudWatch is critical, as it provides real-time insight into job executions and helps identify potential bottlenecks. Implementing version control for ETL scripts ensures that changes can be tracked and reverted if necessary, promoting a stable and reliable development environment. Regularly assessing data quality indicators is also crucial; tools such as Apache Griffin can automate validation processes, comparing large datasets to ensure accuracy and integrity.
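Alongside CloudWatch dashboards and alarms, a pipeline can also check job outcomes programmatically. The sketch below polls the Glue `GetJobRun` API until a run reaches a terminal state. It is an illustrative complement to CloudWatch, not a CloudWatch integration. The function name, job name, run ID, and the `FakeGlueClient` stand-in are all hypothetical; in practice the client would be `boto3.client("glue")`.

```python
import time

# Job run states after which Glue will not change the run's status again.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def wait_for_job_run(glue_client, job_name, run_id,
                     poll_seconds=30, max_polls=120, sleep=time.sleep):
    """Poll a Glue job run and return its final state."""
    for _ in range(max_polls):
        response = glue_client.get_job_run(JobName=job_name, RunId=run_id)
        state = response["JobRun"]["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
    raise TimeoutError(f"{job_name} run {run_id} did not finish in time")


class FakeGlueClient:
    """Stand-in for boto3.client('glue') that replays a fixed list of states."""

    def __init__(self, states):
        self._states = iter(states)

    def get_job_run(self, JobName, RunId):
        return {"JobRun": {"JobRunState": next(self._states)}}


# Hypothetical job name and run ID; sleeping is disabled for the demo.
client = FakeGlueClient(["STARTING", "RUNNING", "SUCCEEDED"])
final_state = wait_for_job_run(
    client, "nightly-sales-etl", "jr_example", sleep=lambda seconds: None
)
print(final_state)
```

Passing the client and the sleep function as parameters keeps the polling logic testable without AWS credentials or real waiting.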

Additionally, designing pipelines that can handle schema evolution is vital for maintaining flexibility and adaptability in dynamic environments. Incorporating robust error-handling strategies, such as setting up standardized notifications and a comprehensive operational event management process, can significantly enhance the resilience and maintainability of the pipelines. This approach not only streamlines the handling of data discrepancies but also ensures that operational events are efficiently managed from notification to resolution.
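One possible way to combine schema-evolution handling with standardized notifications is sketched below. It classifies a schema change as additive or breaking and builds an event payload with the same fields every time. The classification rules, field names, severity levels, and pipeline and table names are assumptions made for illustration, not an AWS Glue feature.

```python
def classify_schema_change(old_columns, new_columns):
    """Return 'none', 'additive', or 'breaking' for two {name: type} mappings."""
    removed = set(old_columns) - set(new_columns)
    retyped = {
        name
        for name in set(old_columns) & set(new_columns)
        if old_columns[name] != new_columns[name]
    }
    if removed or retyped:
        # Dropped or retyped columns can break downstream readers.
        return "breaking"
    if set(new_columns) - set(old_columns):
        # New columns are usually safe for readers that select by name.
        return "additive"
    return "none"


def build_event(pipeline, table, change):
    """Build a notification payload with the same fields for every event."""
    severity = {"none": "INFO", "additive": "WARNING", "breaking": "CRITICAL"}[change]
    return {
        "pipeline": pipeline,
        "table": table,
        "change": change,
        "severity": severity,
        "action": "halt_and_page" if change == "breaking" else "continue",
    }


# Hypothetical schemas before and after an upstream change.
old = {"order_id": "bigint", "amount": "double"}
new = {"order_id": "bigint", "amount": "double", "currency": "string"}
event = build_event("nightly-sales-etl", "orders", classify_schema_change(old, new))
print(event)
```

A payload like this could then be published to whatever notification channel the team uses, so that every operational event arrives in a consistent shape from notification through to resolution.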

Conclusion

The exploration of AWS Glue reveals its essential role in modern data management through its key components, such as the Data Catalog, Crawlers, and ETL Jobs. These features collectively simplify the complexities of data integration, enabling organizations to efficiently manage and analyze their data assets. The centralized Data Catalog enhances metadata management, while automated Crawlers facilitate the discovery and organization of data, ultimately leading to improved data governance and accessibility.

Streamlining ETL processes with AWS Glue not only reduces manual interventions but also allows data engineers to focus on ensuring data quality and governance. By automating complex workflows, organizations can leverage real-time insights and enhance decision-making capabilities. The integration of AWS Glue with other tools further strengthens its utility, particularly for enterprises transitioning to the AWS Cloud.

This automation is exemplified in various industries, from retail to finance, where organizations utilize AWS Glue to gain valuable insights and drive operational efficiency.

Adopting best practices in AWS Glue data pipelines is crucial for achieving operational excellence. Continuous monitoring, version control, and robust error-handling strategies are instrumental in maintaining the resilience and reliability of data workflows. By designing adaptable pipelines that can accommodate schema evolution and implementing automated validation processes, organizations can ensure data integrity and enhance their overall data management capabilities.

Embracing these practices positions businesses to harness the full potential of their data, driving innovation and maintaining a competitive edge in an ever-evolving landscape.