Introduction

Kubernetes has emerged as a cornerstone in modern software architecture, revolutionizing the way applications are deployed, managed, and scaled. At the heart of this powerful orchestration platform lie two fundamental components: Services and Deployments. While both play essential roles, their functions and applications differ significantly.

Services ensure stable and reliable communication between Pods, acting as the backbone for networking within a Kubernetes cluster, while Deployments focus on managing the lifecycle of these Pods, enabling seamless updates and scaling strategies. Understanding the interplay between these elements is vital for organizations aiming to leverage Kubernetes effectively. This article delves into the intricacies of Kubernetes Services and Deployments, exploring their unique functionalities, best practices for implementation, and real-world scenarios that illustrate their impact on application performance and reliability.

Through this comprehensive examination, the importance of mastering both components becomes clear, equipping technology leaders and developers with the knowledge necessary to enhance their cloud-native strategies.

Understanding Kubernetes Services

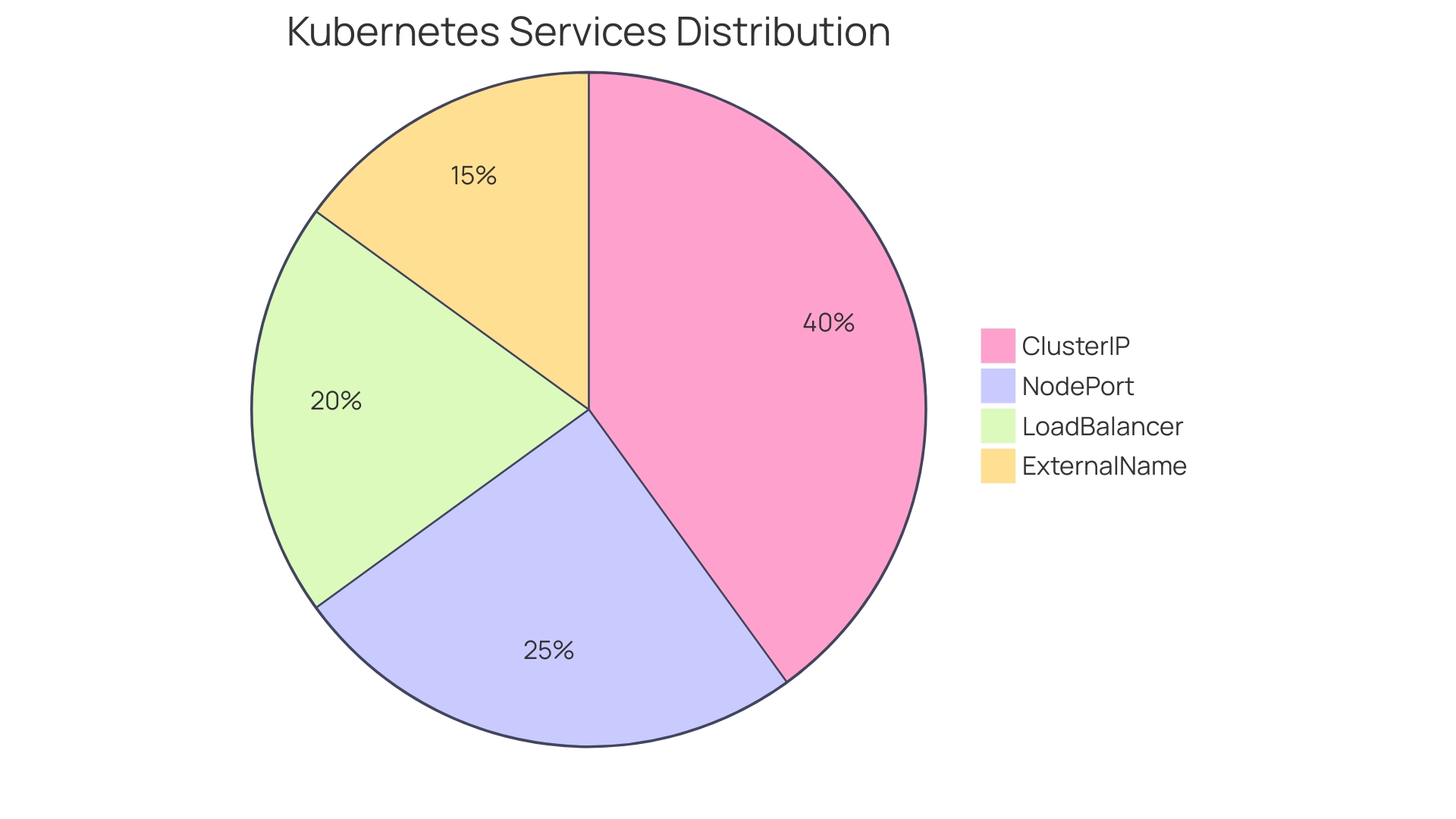

Kubernetes Services provide dependable access to a set of Pods within a cluster, acting as stable endpoints for network communication among software components. This abstraction lets developers focus on application logic rather than on the changing IP addresses of individual Pods. There are several types of Services, including ClusterIP, NodePort, LoadBalancer, and ExternalName, each tailored to specific traffic routing and accessibility needs.

ClusterIP is the default Service type, providing internal access to Pods while remaining unreachable from outside the cluster. In contrast, NodePort exposes the Service on each Node's IP at a static port, making it accessible externally. LoadBalancer integrates with a cloud provider's load balancer to distribute external traffic across Pods, while ExternalName maps a Service to an external DNS name, which allows aliasing of services that live outside the cluster.
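The four Service types described above can be sketched as manifests built in Python. This is a minimal illustration rather than a definitive setup: the helper `make_service`, the Service names, the `app: web` label, the port numbers, and `billing.example.com` are all assumptions made for the example.

```python
import json


def make_service(name, service_type, app_label=None, port=80,
                 target_port=8080, node_port=None, external_name=None):
    """Build a Service manifest as a plain dict (hypothetical helper)."""
    spec = {"type": service_type}
    if service_type == "ExternalName":
        # ExternalName points at a DNS name and does not select Pods.
        spec["externalName"] = external_name
    else:
        # The other types route traffic to Pods matching the selector.
        spec["selector"] = {"app": app_label}
        port_entry = {"port": port, "targetPort": target_port}
        if service_type == "NodePort" and node_port is not None:
            # Static port opened on every Node (default range 30000-32767).
            port_entry["nodePort"] = node_port
        spec["ports"] = [port_entry]
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": spec,
    }


# One example per Service type; every name here is illustrative.
services = [
    make_service("web-internal", "ClusterIP", app_label="web"),
    make_service("web-nodeport", "NodePort", app_label="web", node_port=30080),
    make_service("web-public", "LoadBalancer", app_label="web"),
    make_service("billing-api", "ExternalName",
                 external_name="billing.example.com"),
]

for svc in services:
    print(json.dumps(svc, indent=2))
```

The printed JSON can be saved to a file and passed to `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.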

By leveraging these Service types, organizations can create a resilient architecture that adapts to the ephemeral nature of Pods, which can be terminated or replaced at any time. This adaptability is crucial in managing high availability and scalability, especially as applications grow in complexity. A recent survey indicated that 88% of respondents use containers in their development and production environments, with 71% specifically using an orchestration tool, underscoring the significance of orchestration in modern software development practices.

Furthermore, organizations such as Chess.com demonstrate the effectiveness of container orchestration in practice. With a user base exceeding 150 million and over ten million chess games hosted daily, Chess.com has adopted a strong container orchestration system to manage its growing demand. James Kelty, Head of Infrastructure at Chess.com, emphasizes that their IT framework integrates public cloud and on-premises solutions to optimize accessibility for users worldwide. This strategic deployment enables Chess.com to maintain high performance and reliability, crucial for sustaining user engagement in a competitive landscape.

In summary, Kubernetes Services are essential for maintaining application stability and scalability, so that developers can focus on delivering high-quality software without being bogged down by the complexities of infrastructure management.

Understanding Kubernetes Deployments

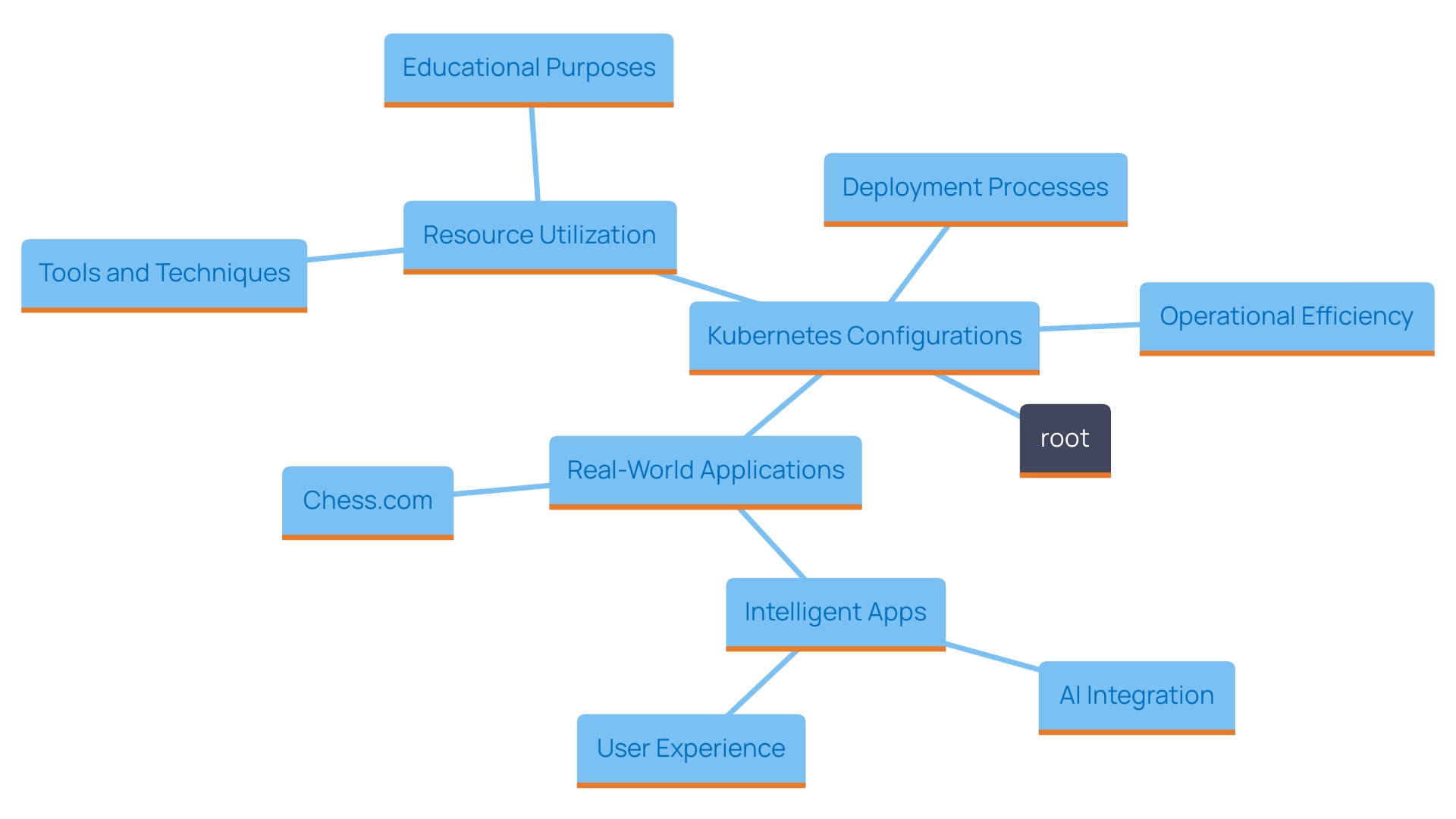

Kubernetes Deployments play a crucial role in managing the rollout and scaling of Pods, ensuring that the desired state of a system is consistently maintained. This abstraction enables controlled rollouts and rollbacks, so software can be updated without incurring downtime. By specifying parameters such as the number of replicas, the update strategy, and health checks, a Deployment provides a robust framework for managing the application lifecycle.

A key aspect of Kubernetes Deployments is their ability to enhance operational efficiency, particularly in large-scale environments. The Deployment controller manages a set of identical Pods, ensuring that the specified number is consistently running. When a Deployment is created, it specifies the container image and the desired number of replicas. Kubernetes then creates and replaces Pods automatically to keep the actual state in line with that specification.
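As a rough illustration of the parameters mentioned above (container image, replica count, update strategy, and health check), the following sketch builds a Deployment manifest as a Python dict. The helper `make_deployment`, the image `registry.example.com/web:1.0`, the port, and the `/healthz` probe path are assumptions chosen for the example.

```python
import json


def make_deployment(name, image, replicas=3, container_port=8080):
    """Build a Deployment manifest as a plain dict (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired number of identical Pods.
            "replicas": replicas,
            # Must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            # Replace Pods gradually instead of all at once.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                        # Health check: a Pod receives traffic only
                        # once this probe succeeds.
                        "readinessProbe": {
                            "httpGet": {"path": "/healthz",
                                        "port": container_port},
                            "periodSeconds": 10,
                        },
                    }],
                },
            },
        },
    }


deployment = make_deployment("web", "registry.example.com/web:1.0")
print(json.dumps(deployment, indent=2))
```

Changing the `image` value and re-applying the manifest is what triggers a new rollout; the replica count and strategy stay declarative.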

The growing adoption of cloud-native solutions underlines the importance of technologies such as container orchestration. According to a recent survey conducted by the Linux Foundation, organizations are increasingly investing in container orchestration, with significant interest in optimizing deployment processes. This trend reflects a broader shift towards enhancing operational agility and minimizing toil, meaning tasks that distract developers from core activities such as writing code.

For instance, Chess.com, a prominent platform with more than 150 million users, utilizes container orchestration to support its vast infrastructure. James Kelty, the Head of Infrastructure at Chess.com, emphasizes that a stable IT infrastructure, encompassing both public cloud and on-premises solutions, is vital for delivering a seamless user experience. "By providing a digital version of chess, the company has been able to reach a more global audience," he noted, highlighting the platform's role in scaling infrastructure to meet user demands efficiently.

In summary, Kubernetes Deployments not only simplify Pod management but also lead to considerable improvements in application reliability and scalability, making them a vital element in the contemporary technology landscape.

Key Differences Between Kubernetes Services and Deployments

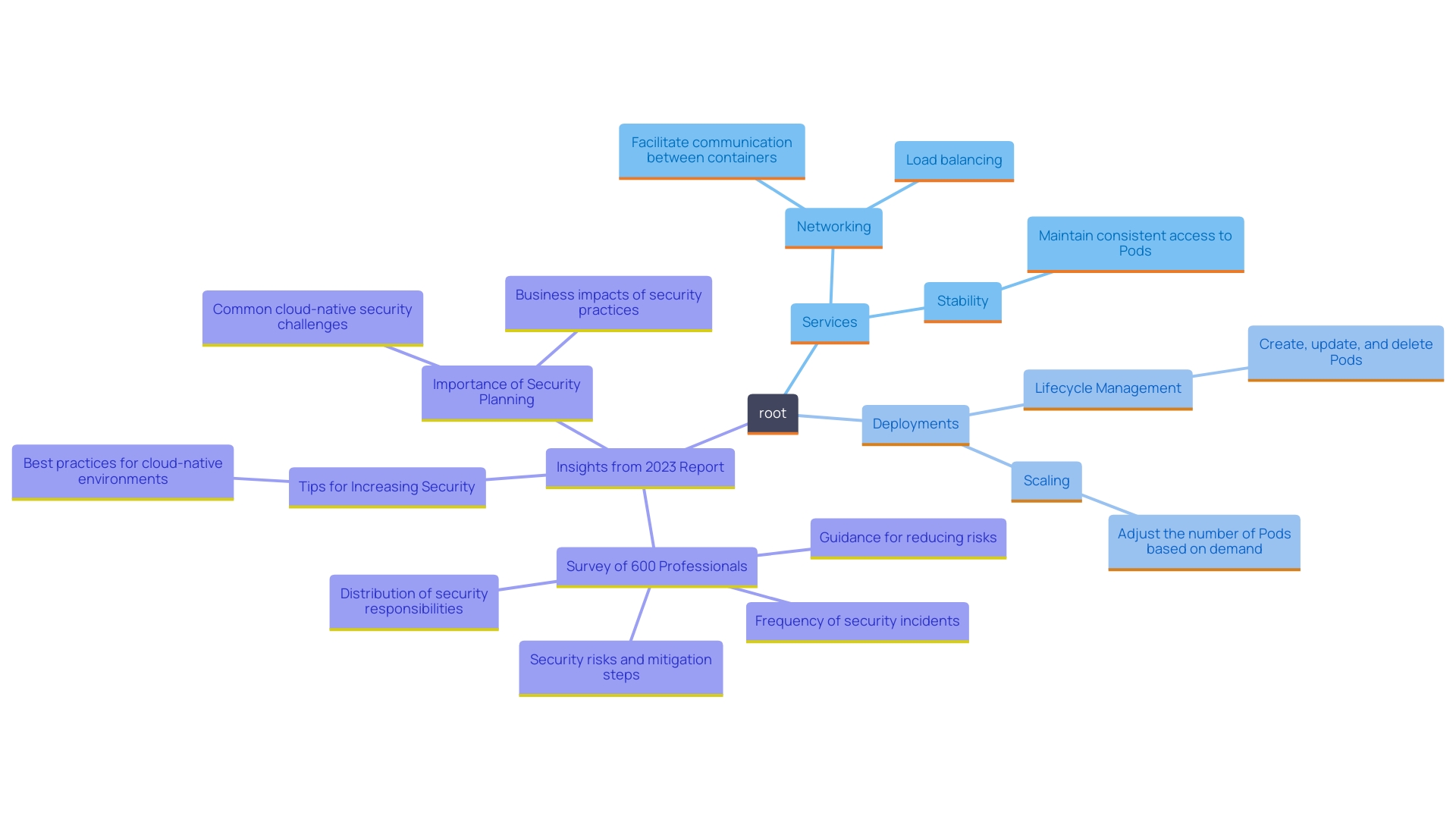

Within the Kubernetes ecosystem, the roles of Services and Deployments are fundamentally distinct yet complementary. Kubernetes Services specialize in networking, facilitating both internal and external communication for Pods. They create a stable interface that abstracts the underlying Pods, which matters because Pods are ephemeral and can be terminated unexpectedly. A Service selects its Pods by label and keeps a consistent IP address, directing traffic to Pods that are ready while avoiding those that are down.
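The routing behavior described above can be approximated with a small simulation. This is a toy model and not how Kubernetes is implemented internally: the function `select_endpoints`, the Pod records, and the IP addresses are all made up for illustration.

```python
def select_endpoints(selector, pods):
    """Return the IPs of Pods that match every selector label and are ready."""
    return [
        pod["ip"]
        for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(key) == value
                for key, value in selector.items())
    ]


# Hypothetical cluster state: two web Pods (one not ready) and one db Pod.
pods = [
    {"name": "web-1", "ip": "10.0.0.4", "labels": {"app": "web"}, "ready": True},
    {"name": "web-2", "ip": "10.0.0.5", "labels": {"app": "web"}, "ready": False},
    {"name": "db-1", "ip": "10.0.0.9", "labels": {"app": "db"}, "ready": True},
]

endpoints = select_endpoints({"app": "web"}, pods)
print(endpoints)  # only the ready web Pod remains
```

Clients keep using the Service's stable address; only the list of backing endpoints changes as Pods come and go.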

Conversely, Deployments concentrate on overseeing the lifecycle and state of Pods. They are responsible for scaling applications and ensuring that the desired number of Pods is always running. When updates are required, Deployments facilitate a smooth transition to new software versions, thereby maintaining operational integrity. This duality allows organizations to use Kubernetes not only for connectivity but also for robust application management.

The significance of grasping these ideas is highlighted by the 2023 Kubernetes in the Enterprise Report, which shows that 88% of participants use containers in their development and production settings, with 71% using a container orchestration platform. This widespread adoption highlights the need for developers and technology leaders to understand these components in order to use cluster resources effectively. As Angelos Kolaitis, a senior software engineer at Canonical, stated regarding the latest Kubernetes release, the focus is on simplifying complexity while ensuring reliable workload management. This shift emphasizes the critical role of Services and Deployments in delivering dependable production services.

Scenarios for Using Kubernetes Services

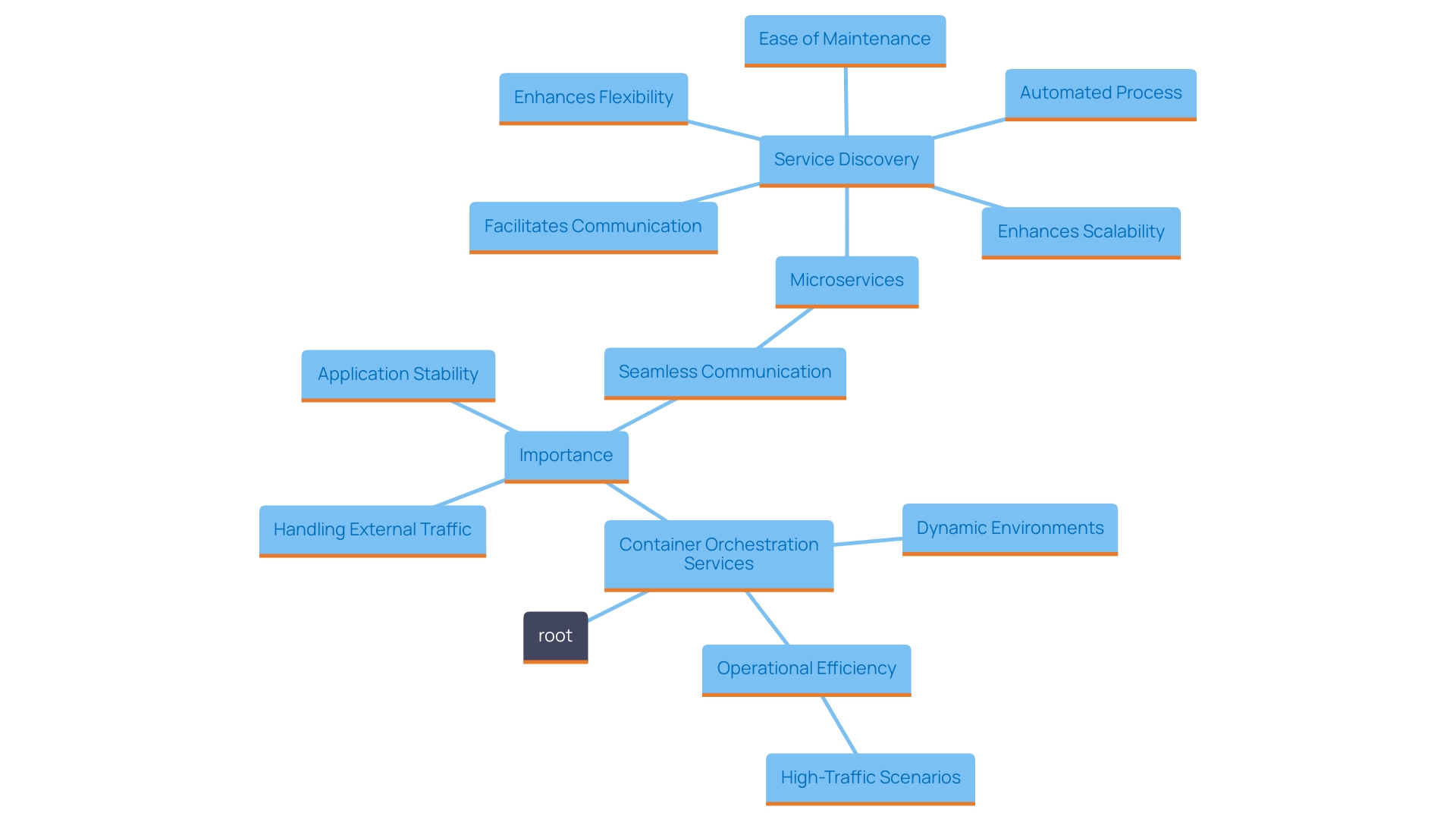

Kubernetes Services establish the stable network endpoints that applications depend on. They become indispensable in microservice architectures, where reliable communication between services is vital. For example, when microservices interact, a Kubernetes Service lets them communicate without tracking the changing IP addresses of individual Pods. Because Pods are created and destroyed frequently, stable communication would be hard to maintain without the abstraction provided by Services.

Moreover, Services are pivotal when programs need to be accessible externally. A LoadBalancer Service type, for instance, is specifically designed to handle incoming external traffic, directing it to the appropriate Pods behind the scenes. This is especially advantageous for high-traffic online services, such as those run by the Dunelm Group plc, which experiences over 400 million sessions each year through its digital platform. By utilizing Services, organizations can ensure that their systems remain responsive and reliable even as user demand fluctuates.

In addition, Kubernetes Services facilitate service discovery, allowing Pods to dynamically locate and connect to one another. This feature is vital for preserving the operational efficiency of microservices, particularly as systems expand. As mentioned in a recent report, companies increasingly adopt microservices on Kubernetes for their software architectures, enhancing flexibility and scalability while reducing the risk of downtime. The ability to decouple service endpoints from the underlying infrastructure illustrates how well the platform handles intricate systems.
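Service discovery in Kubernetes is typically DNS-based: a Service is reachable inside the cluster under a name of the form `<service>.<namespace>.svc.<cluster-domain>`. The sketch below only assembles that name; the helper `service_dns_name` and the `cart` and `shop` names are hypothetical, and `cluster.local` is the common default cluster domain, which an individual cluster may override.

```python
def service_dns_name(service, namespace="default",
                     cluster_domain="cluster.local"):
    """Build the in-cluster DNS name under which a Service is resolvable."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# A Pod in any namespace could reach the cart Service through this name.
cart_host = service_dns_name("cart", namespace="shop")
print(cart_host)  # cart.shop.svc.cluster.local
```

Pods in the same namespace can usually shorten this to just the Service name, which is why application code rarely needs to hard-code Pod IP addresses.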

Scenarios for Using Kubernetes Deployments

Kubernetes Deployments play a crucial role in managing software updates and scaling. They are especially beneficial when introducing a new version of an application, allowing controlled rollouts that reduce downtime and keep users uninterrupted. For example, a Deployment specifies a container image along with the desired number of replicas. Kubernetes then runs the required number of instances, preserving service availability even during changes.
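How a rolling update keeps the service available can be made concrete with the two `RollingUpdate` settings, `maxSurge` and `maxUnavailable`. The sketch below computes the resulting Pod-count bounds under the documented rounding rules (percentages round up for `maxSurge` and down for `maxUnavailable`, and both default to 25%). The function name `rolling_update_bounds` is an assumption for illustration.

```python
def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return the Pod-count limits Kubernetes respects during a rollout."""

    def resolve(value, round_up):
        # Values may be absolute numbers or percentages of `replicas`.
        if isinstance(value, str) and value.endswith("%"):
            scaled = int(value[:-1]) * replicas
            # Integer arithmetic avoids floating-point rounding surprises.
            return -(-scaled // 100) if round_up else scaled // 100
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return {
        # Old and new Pods together never exceed this count.
        "max_total_pods": replicas + surge,
        # At least this many Pods stay available throughout.
        "min_available_pods": replicas - unavailable,
    }


bounds = rolling_update_bounds(10)
print(bounds)
```

For 10 replicas with the defaults, at most 13 Pods exist at once and at least 8 remain available, which is why users generally see no interruption during the rollout.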

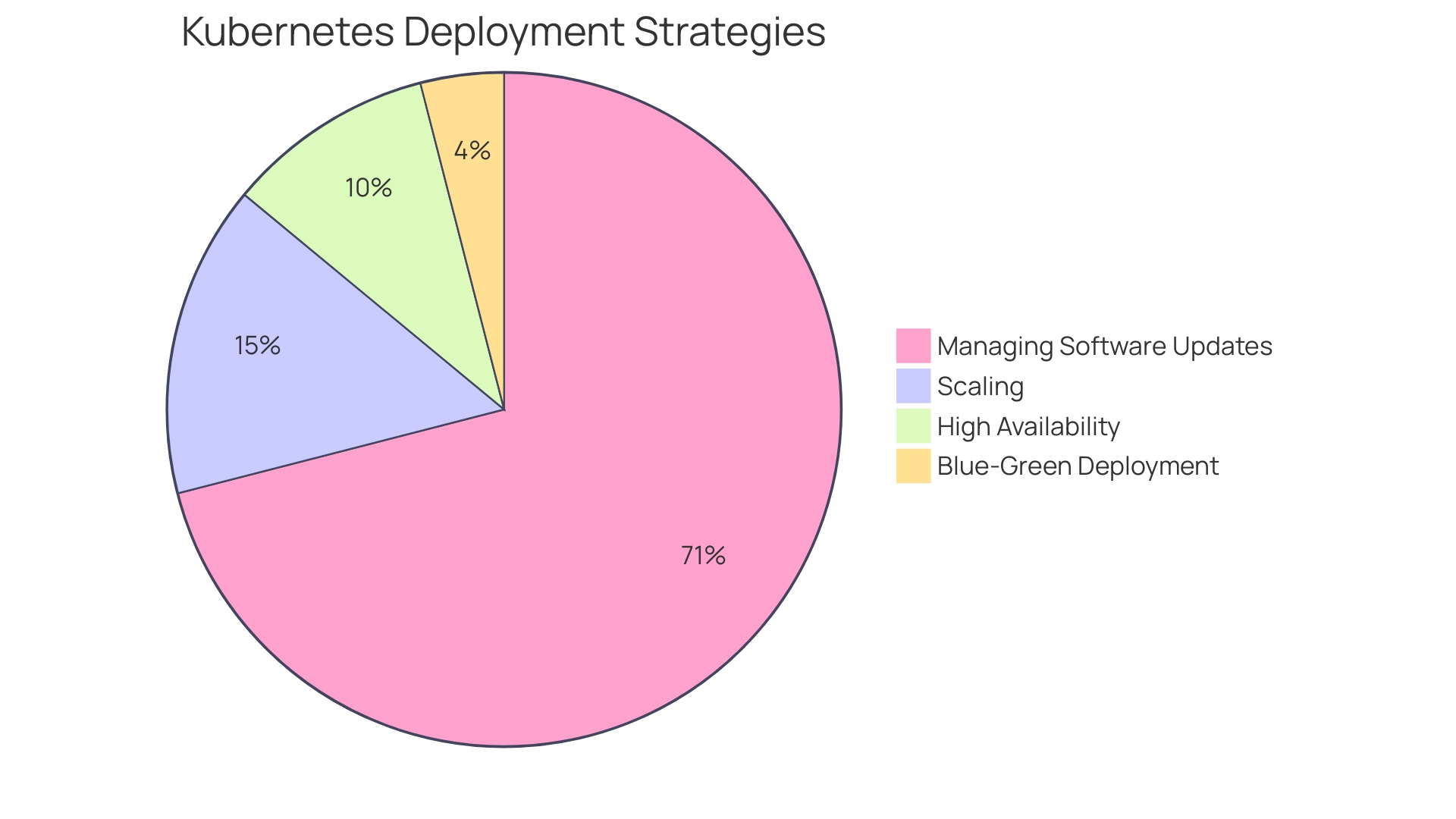

Where applications require auto-scaling, the number of Pods in a Deployment can be adjusted dynamically in response to real-time traffic, for example by a Horizontal Pod Autoscaler that changes the Deployment's replica count. This flexibility is crucial in maintaining optimal performance and resource utilization. As emphasized in the 2023 Kubernetes in the Enterprise Report, a notable 71% of organizations using Kubernetes are employing it for such deployment strategies, underscoring its significance in modern software management.
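The scaling decision itself follows a simple ratio. The Horizontal Pod Autoscaler documentation gives the core formula as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that formula and clamps the result to configured limits; it deliberately leaves out details such as the tolerance band and stabilization windows, and the function name `desired_replica_count` is hypothetical.

```python
import math


def desired_replica_count(current_replicas, current_metric, target_metric,
                          min_replicas=1, max_replicas=10):
    """Simplified Horizontal Pod Autoscaler calculation."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never scale outside the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, raw))


# 4 Pods averaging 90% CPU against a 60% target -> scale out.
scale_out = desired_replica_count(4, current_metric=90, target_metric=60)
# 4 Pods averaging 30% CPU against a 60% target -> scale in.
scale_in = desired_replica_count(4, current_metric=30, target_metric=60)
print(scale_out, scale_in)
```

In the first case the result is 6 replicas and in the second it is 2; the autoscaler writes that number into the Deployment, and the Deployment controller does the rest.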

Moreover, Deployments are essential for ensuring high availability of software. They continuously monitor the specified number of Pods, automatically replacing any that fail, thereby maintaining the system's operational integrity. This feature is particularly useful in environments where zero downtime is critical, such as during updates or maintenance.
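The self-healing behavior described above is a reconciliation loop: compare the desired state with the observed state and correct the difference. The following toy pass shows the idea only; real controllers watch the API server rather than a Python list, and the names `reconcile` and `web-N` are invented for this sketch.

```python
def reconcile(desired, running_pods, next_id):
    """One reconciliation pass: drop failed Pods, then top up to `desired`.

    Returns the new Pod list and the next unused Pod id.
    """
    # Failed Pods are discarded rather than repaired.
    pods = [pod for pod in running_pods if pod["healthy"]]
    # Create replacements until the desired count is reached.
    while len(pods) < desired:
        pods.append({"name": f"web-{next_id}", "healthy": True})
        next_id += 1
    # If there are too many Pods, keep only the desired number.
    return pods[:desired], next_id


observed = [
    {"name": "web-0", "healthy": True},
    {"name": "web-1", "healthy": False},  # this Pod has crashed
    {"name": "web-2", "healthy": True},
]

pods, next_id = reconcile(3, observed, next_id=3)
print([pod["name"] for pod in pods])
```

After the pass, the crashed `web-1` is gone and a fresh `web-3` has taken its place, so three healthy Pods are running again.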

An effective strategy to enhance this process is the Blue-Green Deployment model. This approach involves maintaining two identical environments, labeled blue and green, allowing teams to switch between versions without disrupting user experience. By ensuring continuous availability, organizations can significantly reduce the risks associated with software releases.
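One common way to implement the blue-green switch on Kubernetes is to run two Deployments whose Pods differ by a label and to point a single Service's selector at one of them. The sketch below models only the selector change; the `version` label key, the Service name, and the helper `switch_traffic` are assumptions for the example and not the only way to do blue-green releases.

```python
import copy


def switch_traffic(service, color):
    """Return a copy of the Service whose selector targets `color` Pods."""
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["version"] = color
    return updated


# The Service currently sends all traffic to the blue environment.
live_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web", "version": "blue"},
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

# After the green Deployment passes its checks, flip the selector.
green_service = switch_traffic(live_service, "green")
print(green_service["spec"]["selector"])
```

Rolling back is the same operation in reverse: switching the selector to `blue` again routes traffic to the previous version, which is still running.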

As organizations develop and embrace GitOps principles, which emphasize declarative and version-controlled infrastructure management, the advantages of container orchestration become even more evident. This methodology allows for precise control over software states, facilitating easy audits, rollbacks, and reproducibility, which are invaluable in complex, cloud-native environments.

Combining Kubernetes Services and Deployments

Using Kubernetes Services and Deployments together offers a powerful approach for running cloud-native software. Deployments ensure that the required number of Pods is consistently available, providing stability and reliability to applications. Services, in turn, act as a stable interface for accessing these Pods, facilitating communication with and between them.
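What ties the two objects together is labels: the Service's selector has to match the labels on the Pods created from the Deployment's template. A minimal check of that link could look like this, assuming hypothetical manifests named `web` and an invented helper `service_targets_deployment`.

```python
def service_targets_deployment(service, deployment):
    """True if the Service selector matches the Deployment's Pod labels."""
    selector = service["spec"].get("selector", {})
    pod_labels = deployment["spec"]["template"]["metadata"]["labels"]
    # An empty selector selects nothing in this simplified model.
    return bool(selector) and all(
        pod_labels.get(key) == value for key, value in selector.items()
    )


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [
                {"name": "web", "image": "registry.example.com/web:1.0"},
            ]},
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

linked = service_targets_deployment(service, deployment)
print(linked)
```

A mismatch between the selector and the Pod labels is a frequent cause of a Service that exists but has no endpoints, so a check like this can be useful in a CI step.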

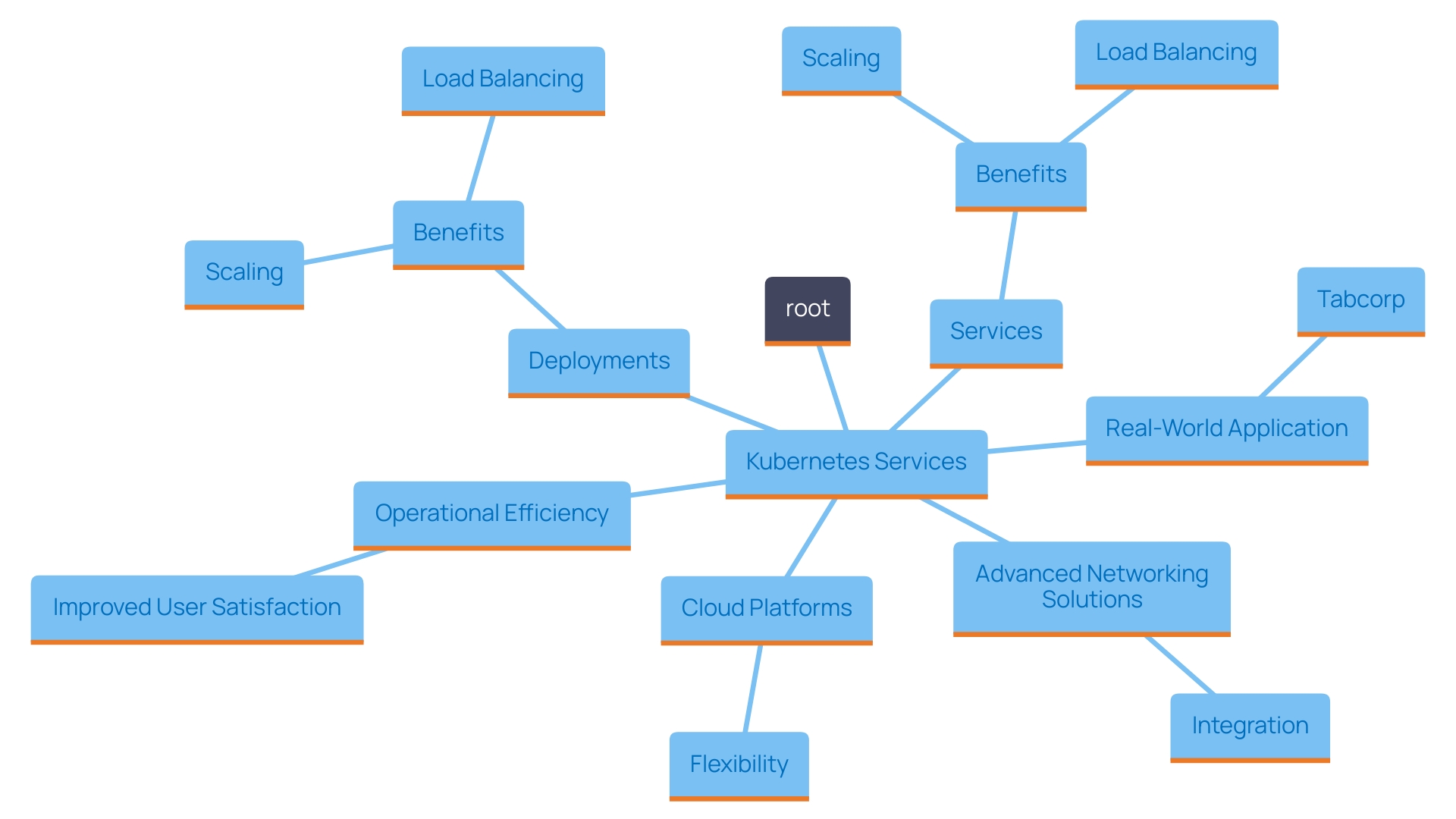

This combination not only enhances scaling capabilities but also optimizes load balancing and streamlines updates. In fact, a recent report highlighted that organizations adopting cloud-native approaches, such as those leveraging Kubernetes, experience significant improvements in operational efficiency and user satisfaction. The capability to execute programs across different cloud platforms, such as AWS, Google Cloud, and Microsoft Azure, offers unmatched flexibility. This adaptability is particularly crucial in today's fast-paced digital landscape where the demand for scalable applications continues to rise.

In a real-world case, Tabcorp, the largest provider of wagering and gaming products in Australia, faced challenges with a fragmented development environment, deploying multiple services directly to hardware. This inconsistency hampered their development lifecycle. By combining their container orchestration system with their existing infrastructure, they streamlined their deployment processes, resulting in a more coherent and efficient environment.

As the industry expands, combining container orchestration with advanced networking solutions such as VMware NSX further enhances this synergy. This integration not only simplifies the management of cloud-native solutions but also improves security and flexibility, allowing organizations to react promptly to shifting market demands. By using both Services and Deployments effectively, organizations can greatly improve their software robustness and user satisfaction.

Best Practices for Choosing Between Services and Deployments

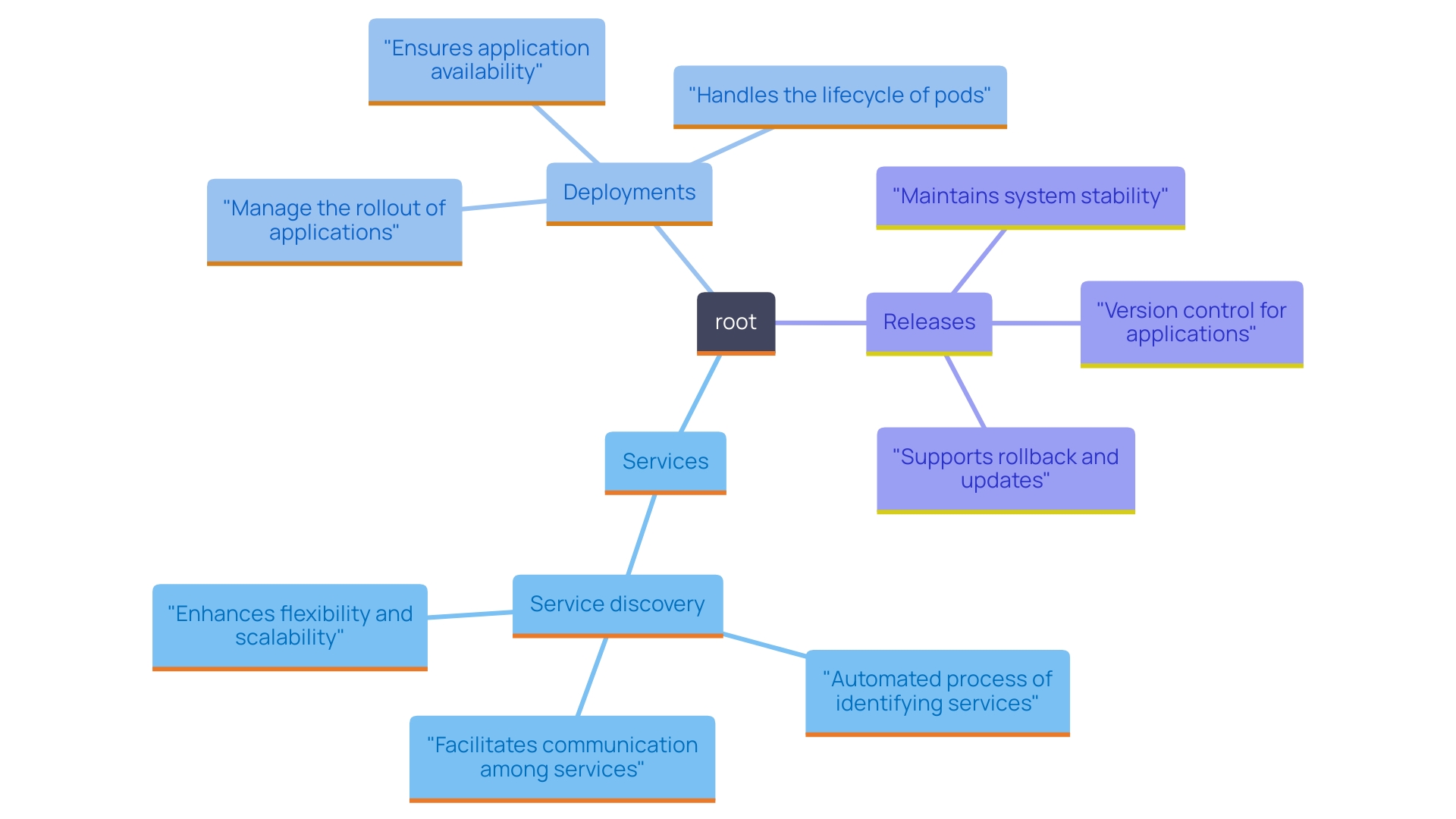

Understanding the differences between Services and Deployments in Kubernetes is crucial for managing software well and building a resilient architecture. Services act as the networking layer, handling communication and exposure of applications, while Deployments concentrate on managing the application lifecycle, including scaling and updates.

In a microservices architecture, where systems are decomposed into smaller, independent services that collaborate via APIs, Services become particularly vital. They facilitate communication among Pods by maintaining a consistent IP address, even though the Pods themselves are ephemeral and can be created or destroyed at any time. This ensures that your software remains resilient and accessible, as the Service dynamically routes traffic to healthy Pods. For instance, in an e-commerce platform, services like Catalog, Cart, and Payment can scale and update independently without disrupting the overall functionality.

Deployments, on the other hand, play a critical role in managing updates and maintaining application availability. When you create a Deployment, you specify parameters such as the container image and the desired number of replicas. Kubernetes creates the required Pods and ensures that the specified number stays running. As a result, Deployments enable a more streamlined and automated approach to rollouts and rollbacks, enhancing the agility of your development process. As pointed out by industry experts, 'A deployment controller manages a set of identical Pods that constitute your software.'

By using Services for communication and Deployments for lifecycle management, organizations can achieve a more efficient and scalable application architecture, in keeping with current trends in software development. As the landscape evolves, this understanding will not only promote better architectural decisions but also align with the principles of continuous delivery and service autonomy that underpin modern software engineering.

Conclusion

Kubernetes Services and Deployments are integral components that together enhance the management and scalability of cloud-native applications. Services provide a stable networking interface, ensuring reliable communication between Pods, while Deployments facilitate effective lifecycle management, allowing for seamless updates and scaling. The interplay between these elements is crucial for organizations aiming to optimize their application performance and reliability.

Understanding the distinct roles of Services and Deployments empowers technology leaders and developers to make informed architectural decisions. Services offer the necessary abstraction to maintain communication in dynamic environments, while Deployments ensure that applications remain available and resilient during updates. This duality not only simplifies operational complexities but also enhances overall user experience.

As the adoption of Kubernetes continues to rise, mastering these components becomes essential for organizations seeking to leverage modern software practices. By integrating Services and Deployments effectively, businesses can achieve higher operational efficiency, scalability, and reliability, all of which contribute to sustained success in an increasingly competitive digital landscape.