Introduction

AWS EKS Fargate lets you run Kubernetes workloads without provisioning or managing the underlying servers. This article is a step-by-step guide to deploying applications on AWS EKS Fargate: it starts with the prerequisites, then walks through creating and configuring an EKS cluster, setting up kubectl, creating a Fargate profile, and deploying a sample application.

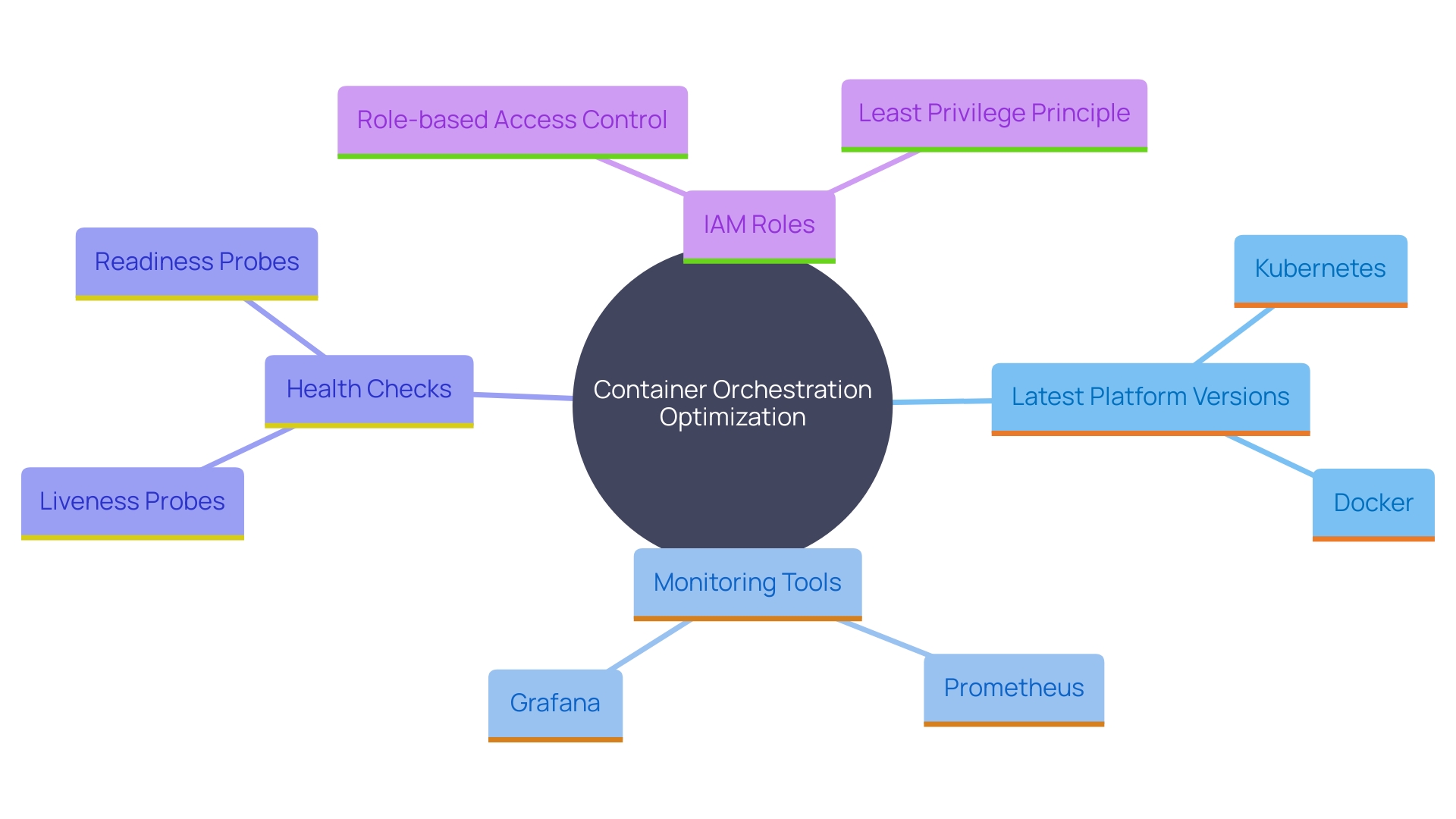

Additionally, the article delves into best practices for deploying applications on EKS Fargate, highlighting the importance of leveraging the latest Kubernetes versions, implementing robust monitoring tools, integrating health checks, and utilizing IAM roles for secure permissions management. It also addresses common troubleshooting techniques to resolve deployment issues, ensuring a smooth and efficient operational experience.

By following this guide, users will gain the expertise needed to optimize their use of AWS EKS Fargate, ensuring their applications are both scalable and resilient in a serverless environment.

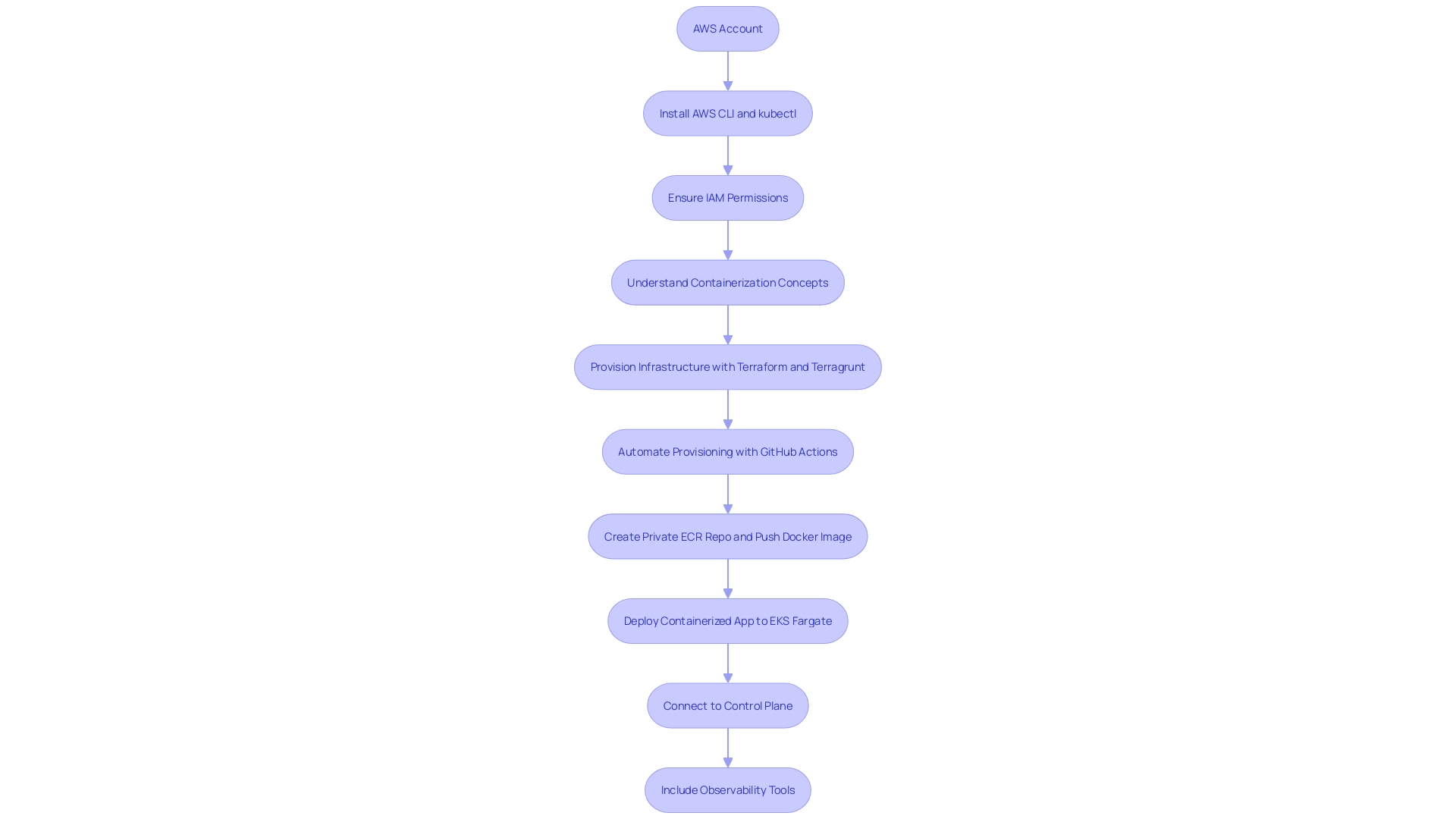

Prerequisites for Deploying on AWS EKS Fargate

Before deploying applications on AWS EKS Fargate, it is crucial to ensure you have the following prerequisites in place:

- An AWS account with the appropriate permissions to create EKS clusters and related resources.

- AWS CLI installed and configured with access to your AWS account.

- kubectl installed for managing Kubernetes clusters.

- IAM permissions to manage EKS and Fargate resources.

- Familiarity with containerization concepts and Docker.
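The tool prerequisites above can be checked from a terminal before going further. The following is a minimal sketch; the helper name check_tools is hypothetical and not part of any AWS tooling.

```shell
# Report which of the given command-line tools are missing from PATH.
# Prints one line per missing tool and returns non-zero if any are absent.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# aws and kubectl are the two command-line tools named in the list above.
if check_tools aws kubectl; then
  echo "All required tools found."
else
  echo "Install the tools listed above before continuing."
fi

# To confirm that the AWS CLI is configured with working credentials, run:
#   aws sts get-caller-identity
```

The check only confirms that the binaries exist; it does not verify that your IAM permissions are sufficient to create EKS or Fargate resources.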

Setting up an EKS environment combines several open-source tools and AWS services, so it requires knowledge of both AWS and Kubernetes. Each node in the cluster connects to the control plane to fetch information about the Kubernetes resources, such as pods, secrets, and volumes, that it should run on the host machine. Every EKS cluster needs at least one worker node to run the user's containers; with Fargate, AWS provisions that compute for you rather than leaving you to manage EC2 instances.

It also helps to have observability tools in place to gather data from your EKS cluster and the surrounding AWS infrastructure. This data helps you recognize performance bottlenecks, spot overallocated resources, and understand where your containerized applications are running.

Kubernetes and EKS have matured significantly over the years, and many standard practices have developed across the industry. Adhering to these practices keeps your clusters structured according to established conventions and reduces the risk of configuration problems.

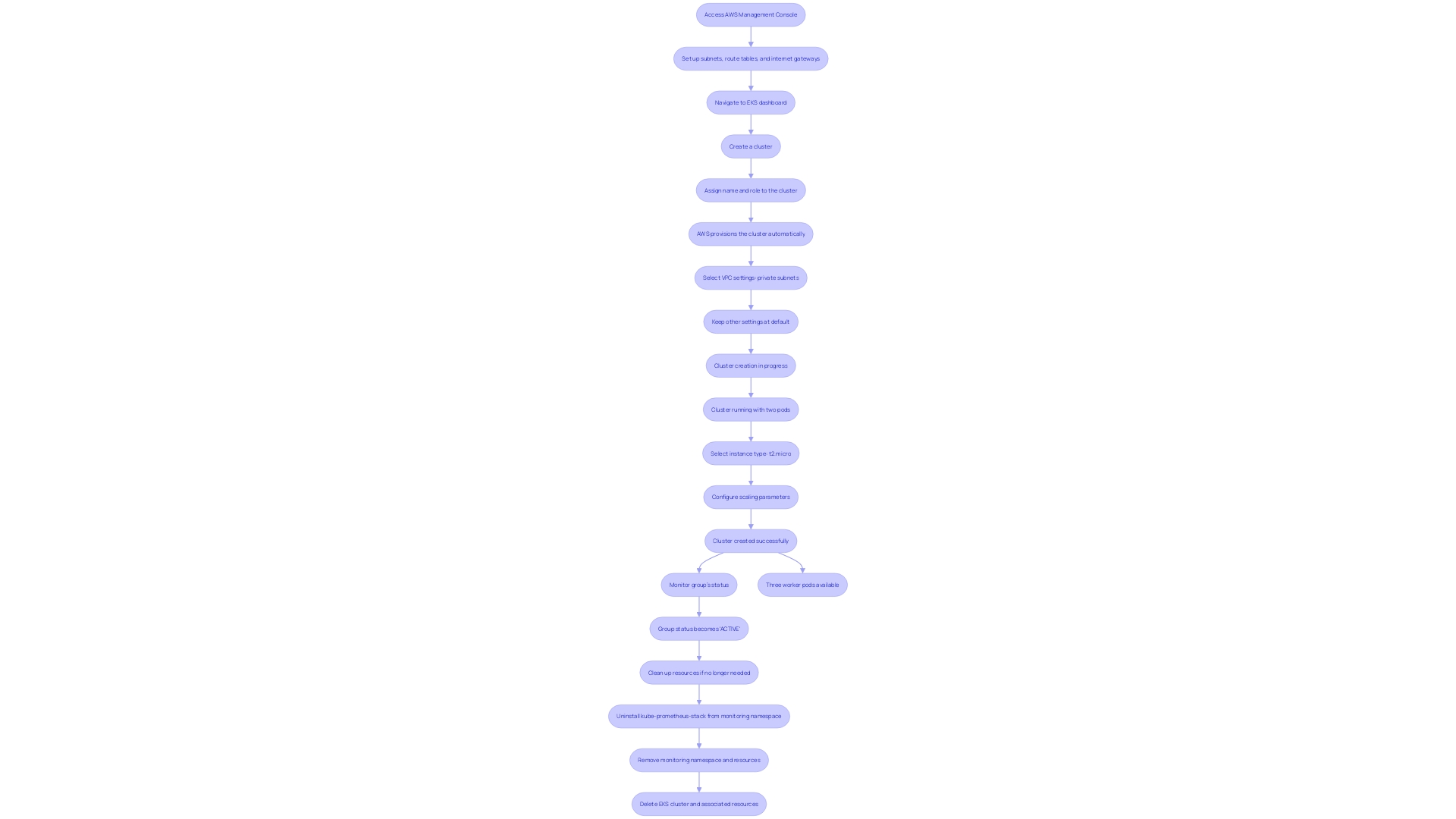

Step 1: Create an EKS Cluster with Fargate

1. Open the AWS Management Console and go to the EKS service.

2. Select the 'Create cluster' button to start the process.

3. Fill in the cluster settings, including the cluster name, Kubernetes version, and VPC configuration. For instance, choosing private subnets can enhance security but may require specific subnet configurations.

4. In the 'Cluster configuration' section, opt for 'Fargate' as your compute type. Fargate provides a serverless compute engine that runs containers without requiring you to manage the underlying infrastructure, which avoids the complexity of EC2 instance sizing and upfront expenses.

5. Review all the settings and create the cluster.

6. Monitor the status of the cluster until it changes to 'ACTIVE', which indicates that the cluster is fully operational.

Managed services like Fargate can streamline your operations while keeping costs aligned with your specific needs.
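The status check in the last step can also be done from the command line instead of watching the console. The sketch below polls a status command until it prints ACTIVE; the function name wait_for_active and the attempt and delay values are illustrative assumptions, while aws eks describe-cluster is the AWS CLI call that reports the cluster status.

```shell
# Poll a status command until it prints ACTIVE, or give up after a number of attempts.
# Usage: wait_for_active <max_attempts> <delay_seconds> <command...>
wait_for_active() {
  max_attempts=$1
  delay=$2
  shift 2
  attempt=0
  while [ "$attempt" -lt "$max_attempts" ]; do
    # Treat a failing status command as "not ready yet".
    status=$("$@" 2>/dev/null) || status=""
    if [ "$status" = "ACTIVE" ]; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 1
}

# Example call (needs AWS credentials; replace the placeholders first):
#   wait_for_active 60 30 aws eks describe-cluster --region <region> \
#     --name <cluster_name> --query cluster.status --output text
```

The AWS CLI also ships a built-in waiter, aws eks wait cluster-active --name <cluster_name>, which may be simpler if you do not need control over the polling interval.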

Step 2: Configure kubectl for EKS Cluster

- Begin by installing the AWS IAM Authenticator if it is not already installed on your machine. This tool authenticates your AWS credentials with Kubernetes. Recent versions of the AWS CLI can issue the same tokens through aws eks get-token, so a separate authenticator is often unnecessary.

- Update your kubeconfig file to access your Amazon EKS cluster. Run the command:

aws eks --region <region> update-kubeconfig --name <cluster_name>

This command configures your kubeconfig to communicate with your EKS cluster. If you leave out the --kubeconfig option, the entry is written to ~/.kube/config, which can lead to conflicts if you work with several clusters. To manage multiple configurations, create a directory such as ~/.kube/configs and set the KUBECONFIG environment variable to the desired file path, for example:

export KUBECONFIG=${HOME}/.kube/configs/demo-eks-kubeconfig

- Verify your setup by running:

kubectl get svc

This command checks whether you can reach your cluster. If you see an error showing that kubectl is trying to connect to a Kubernetes cluster on your local machine (for example, a refused connection to localhost:8080), make sure that your KUBECONFIG variable points to the correct configuration file.
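When you work with several clusters, the per-cluster kubeconfig layout described above can be wrapped in a small helper. This is a sketch under assumptions: the function name use_kubeconfig and the cluster name demo-eks are hypothetical, and the file naming follows the demo-eks-kubeconfig example from this step.

```shell
# Directory holding one kubeconfig file per cluster.
KUBECONFIG_DIR="${HOME}/.kube/configs"

# Point kubectl at the kubeconfig file for one named cluster.
use_kubeconfig() {
  mkdir -p "$KUBECONFIG_DIR"
  export KUBECONFIG="${KUBECONFIG_DIR}/$1-kubeconfig"
  echo "KUBECONFIG=${KUBECONFIG}"
}

use_kubeconfig demo-eks

# Then write the cluster's credentials into that file and test access:
#   aws eks --region <region> update-kubeconfig --name <cluster_name> --kubeconfig "$KUBECONFIG"
#   kubectl get svc
```

Because KUBECONFIG is exported only in the current shell, each terminal session can target a different cluster without touching ~/.kube/config.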

Step 3: Create a Fargate Profile

- Open your EKS cluster in the console and navigate to the 'Fargate profiles' tab. A Fargate profile lets you run your containerized workloads without managing the underlying infrastructure, because Fargate assigns the resources your application needs.

- Select 'Create Fargate profile'. This starts the creation of a profile that lets you run your pods without managing EC2 instances, so you pay only for the computing resources you use.

- Provide a name for the profile and choose the namespace for your application. Fargate runs the pods that match the namespaces or labels specified in the profile.

- Optionally, add selectors to determine which pods should run on Fargate. Selectors let you optimize resource allocation and cost by targeting the pods that benefit most from Fargate.

- Review your settings and create the profile. Once the profile is created, EKS schedules matching pods onto Fargate, so you can focus on your application rather than the underlying infrastructure.
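The same profile can be created with the AWS CLI instead of the console. The sketch below writes a request file for aws eks create-fargate-profile; every value in it (cluster name, profile name, account ID, role name, subnet IDs, namespace, and label) is a placeholder chosen for illustration, not something defined earlier in this guide.

```shell
# Write a request file for `aws eks create-fargate-profile`.
# All names, the account ID, and the subnet IDs below are placeholders.
cat > fargate-profile.json <<'EOF'
{
  "clusterName": "demo-eks",
  "fargateProfileName": "demo-profile",
  "podExecutionRoleArn": "arn:aws:iam::111122223333:role/demo-fargate-pod-execution-role",
  "subnets": ["subnet-PLACEHOLDER1", "subnet-PLACEHOLDER2"],
  "selectors": [
    { "namespace": "demo", "labels": { "compute": "fargate" } }
  ]
}
EOF
echo "Wrote fargate-profile.json"

# After replacing the placeholders, submit it with:
#   aws eks create-fargate-profile --cli-input-json file://fargate-profile.json
```

With this selector, only pods in the demo namespace that also carry the label compute=fargate are scheduled onto Fargate. Dropping the labels entry would match every pod in the namespace.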

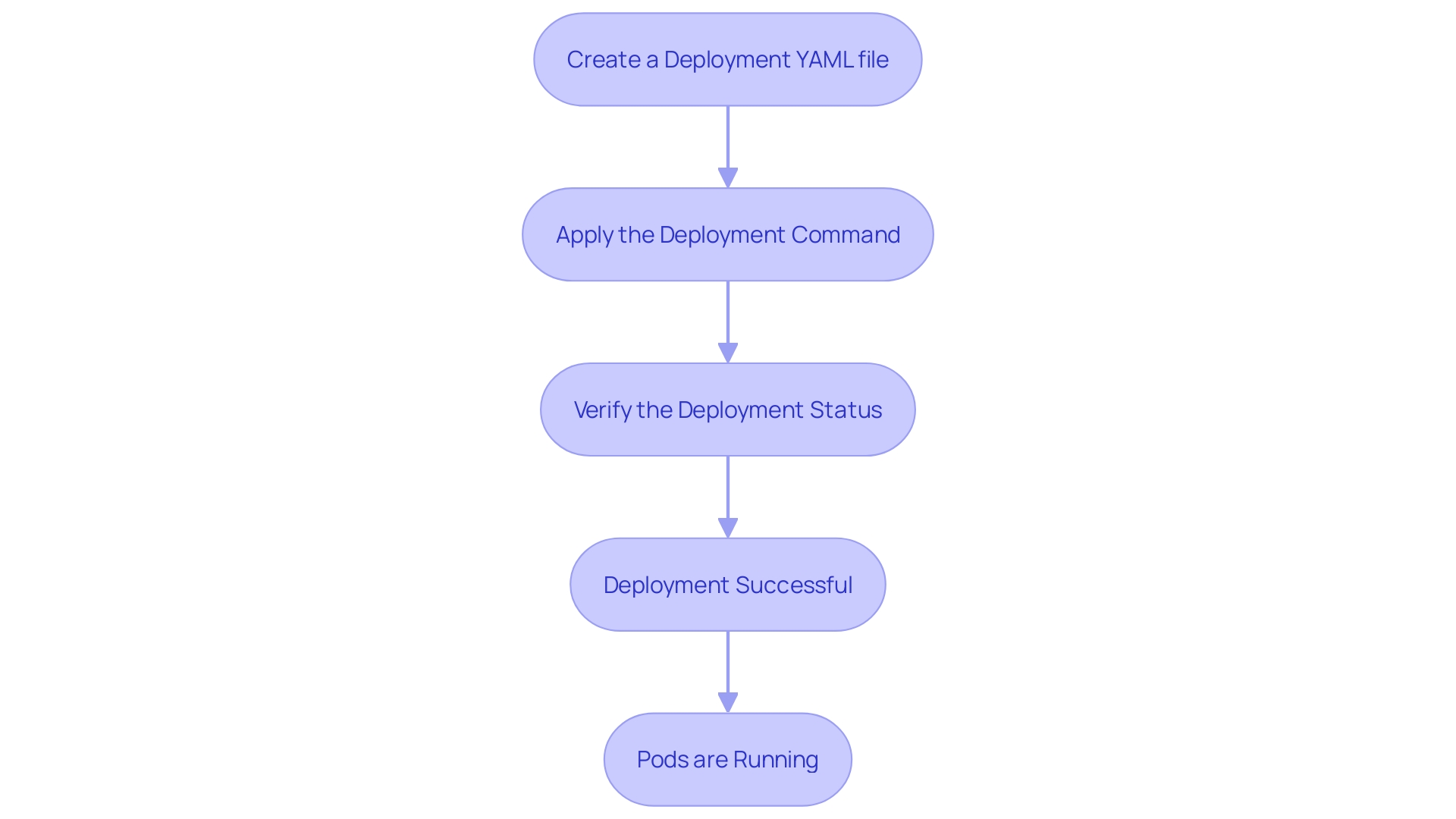

Step 4: Deploy a Sample Application on EKS Fargate

To deploy your application using Kubernetes, follow these steps:

- Start by creating a deployment YAML file for your application. In this file, specify the container image and the necessary configuration. Defining the application this way keeps it consistent and reproducible, which simplifies deployment and scaling.

- Once your YAML file is ready, apply the deployment using the command:

kubectl apply -f <deployment_file.yaml>

This command submits the configuration in your YAML file to the cluster and starts the deployment.

- Verify that your deployment was successful by running:

kubectl get deployments -n <namespace>

This command lists the deployments in the specified namespace, so you can check their status and confirm that everything is running as expected.

By following these steps, you use Kubernetes' capabilities for automated deployment, scaling, and management. Its built-in mechanisms for auto-scaling and health checks help maintain performance and resilience by adapting to varying workloads and reducing downtime.
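As an illustration of what such a deployment file might contain, the sketch below writes a minimal manifest to deployment.yaml. The names sample-app, demo, and web, the nginx image, and the resource values are all assumptions made for this example; the namespace must match one selected by your Fargate profile.

```shell
# Write a minimal Deployment manifest. All names and values are illustrative.
cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
  namespace: demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
        - name: web
          image: nginx:stable
          ports:
            - containerPort: 80
          # Fargate sizes the compute for each pod from its resource requests.
          resources:
            requests:
              cpu: 250m
              memory: 512Mi
EOF
echo "Wrote deployment.yaml"

# Create the namespace if needed, then apply and check the deployment:
#   kubectl create namespace demo
#   kubectl apply -f deployment.yaml
#   kubectl get deployments -n demo
```

Setting explicit resource requests is a deliberate choice here: without them, the pod still runs, but you give up control over how much CPU and memory Fargate provisions for it.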

Step 5: Verify and Manage Deployments on EKS Fargate

- To check the state of your deployment's pods, run:

kubectl get pods -n <namespace>

This command lists all pods running in the specified namespace, including their current state and any potential issues.

- To access the logs of a specific pod, use:

kubectl logs <pod_name> -n <namespace>

Logs are crucial for troubleshooting and for monitoring your application's behavior, because they give you insight into the pod's operational history.

- To scale your application, use:

kubectl scale deployment <deployment_name> --replicas=<number> -n <namespace>

Scaling adjusts the number of pod replicas to meet varying workload demands. This command sets the replica count manually; for automatic scaling, Kubernetes' Horizontal Pod Autoscaler adjusts the number of replicas based on real-time metrics such as CPU and memory usage, or custom metrics like requests per second.
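Automatic scaling of this kind is configured with a HorizontalPodAutoscaler resource. The sketch below is one possible configuration, assuming a Deployment named sample-app in a namespace named demo (both hypothetical) and a metrics source such as the Kubernetes Metrics Server installed in the cluster. The replica bounds and the 60% CPU target are arbitrary example values.

```shell
# Write a HorizontalPodAutoscaler manifest that scales on average CPU utilization.
# The target Deployment name, namespace, and thresholds are illustrative.
cat > hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: sample-app
  namespace: demo
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
  minReplicas: 2
  maxReplicas: 6
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 60
EOF
echo "Wrote hpa.yaml"

# Apply it and watch the autoscaler's view of the workload:
#   kubectl apply -f hpa.yaml
#   kubectl get hpa -n demo
```

CPU utilization is measured relative to the pod's CPU request, so the target Deployment needs resource requests set for this autoscaler to work.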

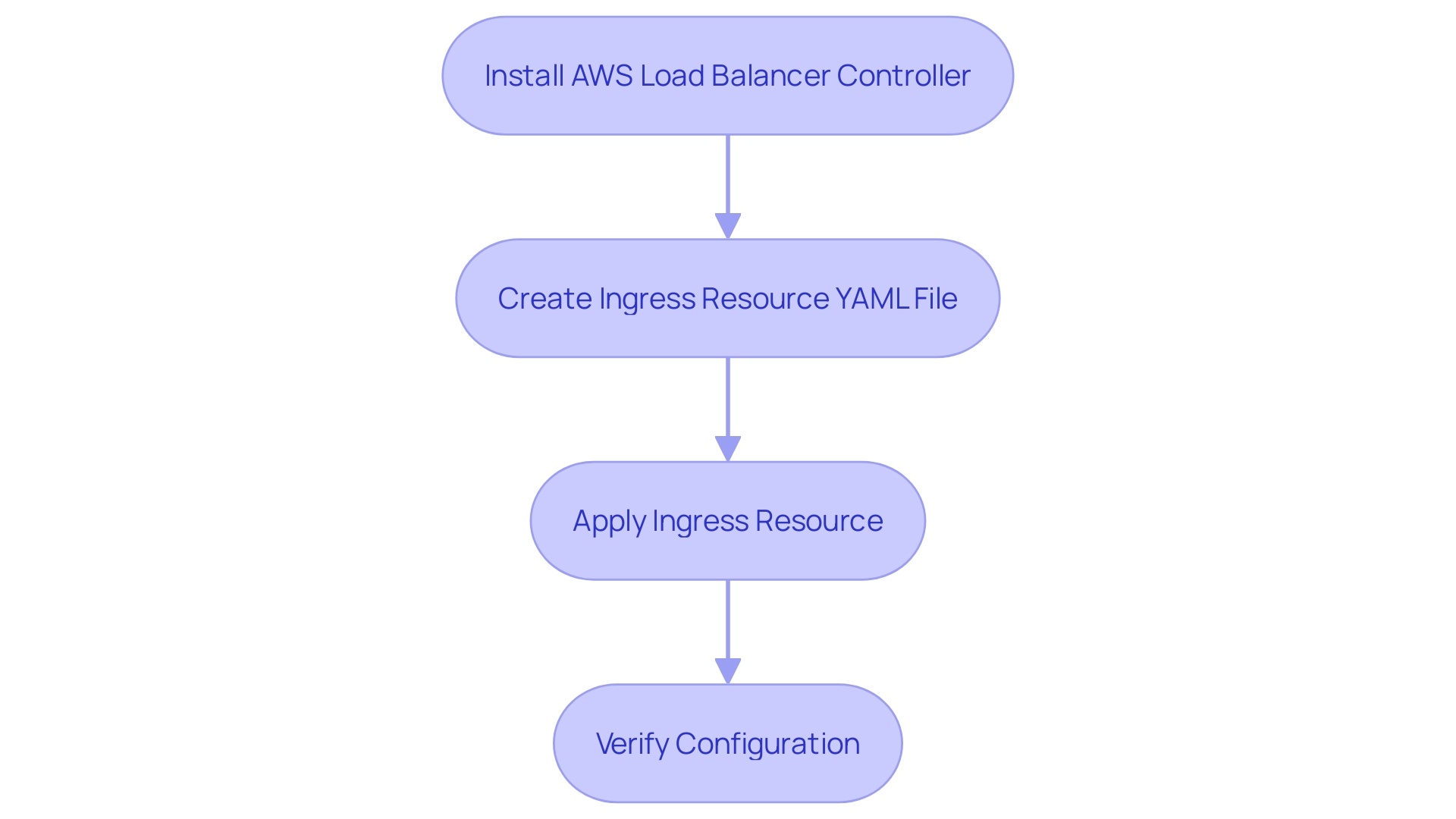

Configuring Ingress with AWS EKS Fargate

To seamlessly manage ingress traffic within your Kubernetes cluster, follow these steps:

- Install the AWS Load Balancer Controller: Begin by installing the AWS Load Balancer Controller in your cluster. This controller automates the provisioning, management, and configuration of Elastic Load Balancing (ELB) load balancers for your Kubernetes resources.

- Create an Ingress Resource YAML File: Define your application's routing rules in an ingress resource YAML file. This file specifies how traffic should be directed to the services within your cluster.

- Apply the Ingress Resource: Deploy your ingress resource with the command:

kubectl apply -f <ingress_file.yaml>

This step puts your routing rules into effect and prompts the controller to configure the load balancer.

- Verify the Ingress Configuration: After applying the ingress resource, verify that the configuration matches your deployment requirements. You can access your application through the load balancer to confirm that traffic is being routed correctly.

Implementing these steps not only simplifies the routing configuration but also leverages the power of the AWS Load Balancer Controller to manage complex application deployments efficiently. This approach is especially beneficial for organizations operating multiple Amazon EKS clusters and dealing with diverse microservices architectures.
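As a sketch of what the ingress file might look like, the example below writes a Service and an Ingress to ingress.yaml. It assumes pods labeled app: sample-app listening on port 80 in a namespace named demo (all hypothetical), and an installed AWS Load Balancer Controller that handles the alb ingress class. The ip target type is used because Fargate pods are registered with the load balancer by IP address rather than by EC2 instance.

```shell
# Write a Service and an Ingress for a hypothetical sample-app workload.
cat > ingress.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: sample-app
  namespace: demo
spec:
  selector:
    app: sample-app
  ports:
    - port: 80
      targetPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-app
  namespace: demo
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: sample-app
                port:
                  number: 80
EOF
echo "Wrote ingress.yaml"

# Apply it, then look for the load balancer hostname in the ADDRESS column:
#   kubectl apply -f ingress.yaml
#   kubectl get ingress sample-app -n demo
```

The internet-facing scheme exposes the application publicly; for internal-only access, the scheme annotation can be set to internal instead.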

Best Practices for Deploying Applications on EKS Fargate

- Use the most recent stable Kubernetes version that EKS supports to get current features and essential security updates. Staying up to date reduces exposure to known vulnerabilities.

- Implement comprehensive monitoring tools such as Amazon Managed Service for Prometheus and Amazon Managed Grafana to gain insights into resource utilization. This helps in identifying performance bottlenecks and optimizing costs by avoiding overallocated resources.

- Integrate health checks and readiness probes to ensure that your applications are running smoothly and can handle traffic effectively. This proactive approach minimizes downtime and improves reliability.

- Use IAM roles for service accounts to manage permissions securely. By restricting access according to the principle of least privilege, you strengthen the security of your Kubernetes environment and protect sensitive data.
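Two of these practices, probes and IAM roles for service accounts, translate directly into manifests. The sketch below writes a ServiceAccount annotated with an IAM role and a patch that adds probes to an existing Deployment. The names sample-app, demo, and web, the role ARN, and the probe timings are all placeholders, and the IAM role itself must already exist with a trust policy for the cluster's OIDC provider.

```shell
# ServiceAccount bound to an IAM role (the ARN is a placeholder).
cat > serviceaccount.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: sample-app
  namespace: demo
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/demo-sample-app-role
EOF

# Patch that attaches the service account and adds probes to the container named "web".
cat > probes-patch.yaml <<'EOF'
spec:
  template:
    spec:
      serviceAccountName: sample-app
      containers:
        - name: web
          readinessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 15
            periodSeconds: 20
EOF
echo "Wrote serviceaccount.yaml and probes-patch.yaml"

# Apply both to a Deployment named sample-app:
#   kubectl apply -f serviceaccount.yaml
#   kubectl patch deployment sample-app -n demo --patch-file probes-patch.yaml
```

The readiness probe controls when a pod starts receiving traffic, while the liveness probe restarts a container that has stopped responding, so the two are usually given different delays.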

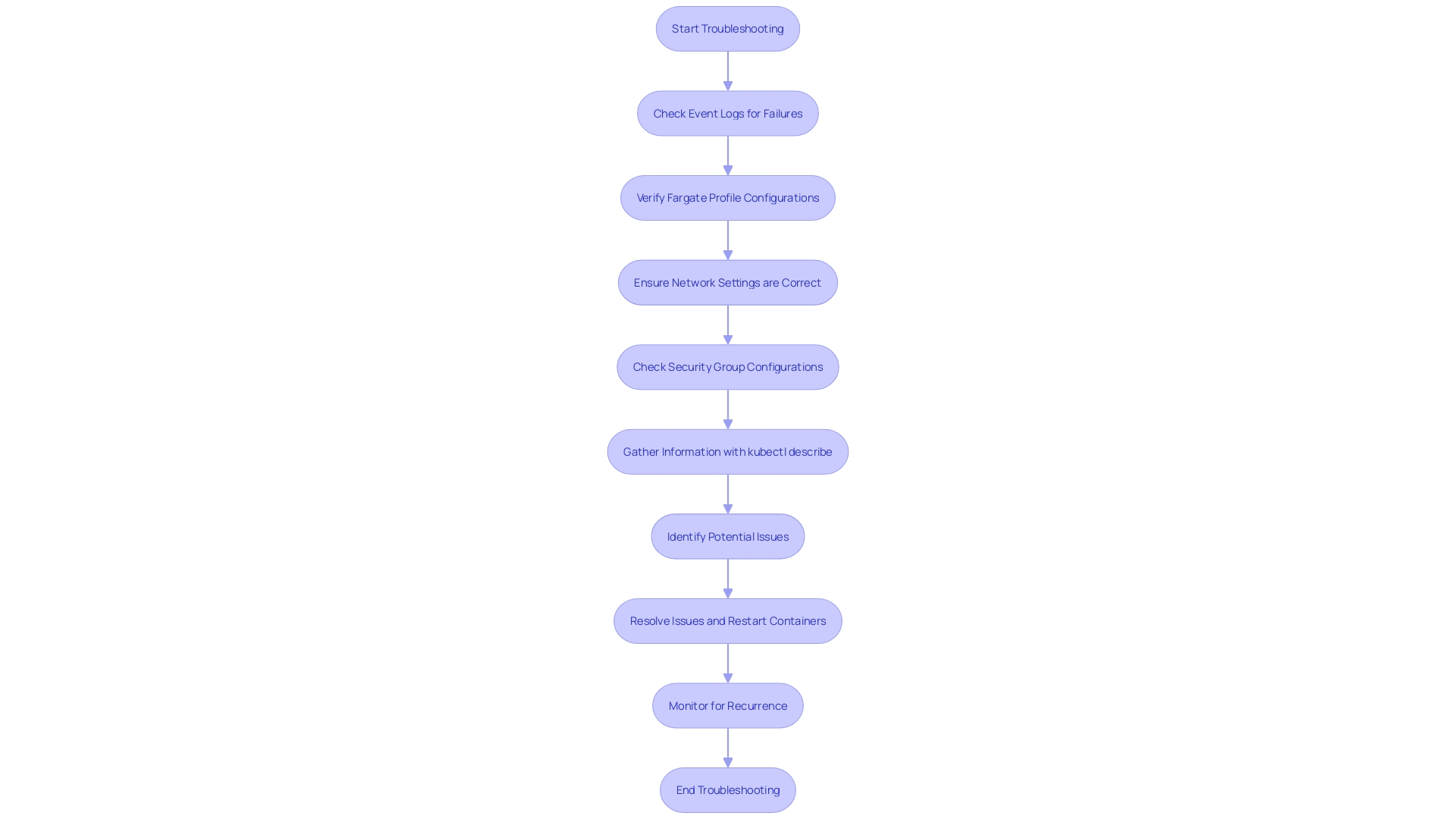

Troubleshooting Common Issues with EKS Fargate Deployments

- If pods fail to start, check their events using:

kubectl describe pod <pod_name> -n <namespace>

This command fetches detailed information about the pod's status, including events that might have caused the failure.

- Ensure your Fargate profile matches the namespace and selectors of your deployment. Pods that match no profile are not scheduled onto Fargate, and a correctly configured profile ensures the right allocation of computing resources, so you pay only for what you use.

- Verify network settings and security group configurations for proper access. In our analysis, security-related topics comprised 12.3% of overall discussions in Kubernetes forums, highlighting the importance of maintaining secure configurations. Proper network settings and security groups are crucial for ensuring that your pods can communicate as expected and remain secure.
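The checks above can be gathered into one helper for a first pass at a failing pod. This is a sketch rather than a complete diagnostic tool; the function name diagnose_pod is hypothetical, and it uses only standard kubectl subcommands.

```shell
# Collect first-pass diagnostics for a pod that will not start.
# Usage: diagnose_pod <pod_name> <namespace>
diagnose_pod() {
  pod=$1
  namespace=$2
  # Pod status and events (image pull errors, scheduling problems, probe failures).
  kubectl describe pod "$pod" -n "$namespace"
  # All events in the namespace, oldest first.
  kubectl get events -n "$namespace" --sort-by=.metadata.creationTimestamp
  # Logs from the previous container instance, if it crashed and restarted.
  kubectl logs "$pod" -n "$namespace" --previous || true
}

# Example call:
#   diagnose_pod <pod_name> <namespace>
# To compare the pod's namespace and labels against your Fargate profiles:
#   aws eks list-fargate-profiles --cluster-name <cluster_name>
```

A pod that stays in Pending with no scheduling events is often a sign that its namespace or labels do not match any Fargate profile selector.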

Conclusion

Deploying applications on AWS EKS Fargate offers organizations a powerful and efficient method for managing containerized workloads in a serverless environment. The outlined prerequisites emphasize the importance of having a well-configured AWS account, familiarity with Kubernetes concepts, and essential tools like AWS CLI and kubectl. Establishing a solid foundation is crucial for ensuring a smooth deployment process.

The step-by-step guide provided covers the creation and configuration of an EKS cluster, the setup of kubectl, and the deployment of applications. Each step is designed to guide users through the complexities of managing Kubernetes resources effectively. Furthermore, the integration of Fargate simplifies infrastructure management, allowing organizations to focus on application development without the burden of server maintenance.

Best practices highlighted throughout the article reinforce the importance of using the latest Kubernetes versions, implementing robust monitoring solutions, and ensuring secure permissions management through IAM roles. Additionally, troubleshooting techniques are essential for quickly addressing common issues, ensuring that deployments remain stable and efficient.

By adhering to these guidelines and best practices, organizations can optimize their use of AWS EKS Fargate, achieving scalability and resilience in their applications. This comprehensive approach not only enhances operational efficiency but also positions organizations to leverage the full potential of cloud-native technologies.