Introduction

In the rapidly evolving landscape of cloud computing, Kubernetes has emerged as a pivotal player, particularly within the Amazon Web Services (AWS) ecosystem. As organizations increasingly embrace microservices architectures to enhance scalability and resilience, understanding the role of Kubernetes becomes essential for harnessing its full potential.

This article delves into the intricacies of deploying and managing Kubernetes on AWS, from the foundational steps of setting up a cluster to exploring deployment options such as Amazon EKS versus manual configurations. It also highlights best practices for effective management and troubleshooting strategies to address common challenges.

With Kubernetes projected to play a significant role in the future of cloud technologies, this comprehensive guide aims to equip organizations with the knowledge necessary to thrive in a cloud-native environment.

Understanding Kubernetes and Its Role in AWS

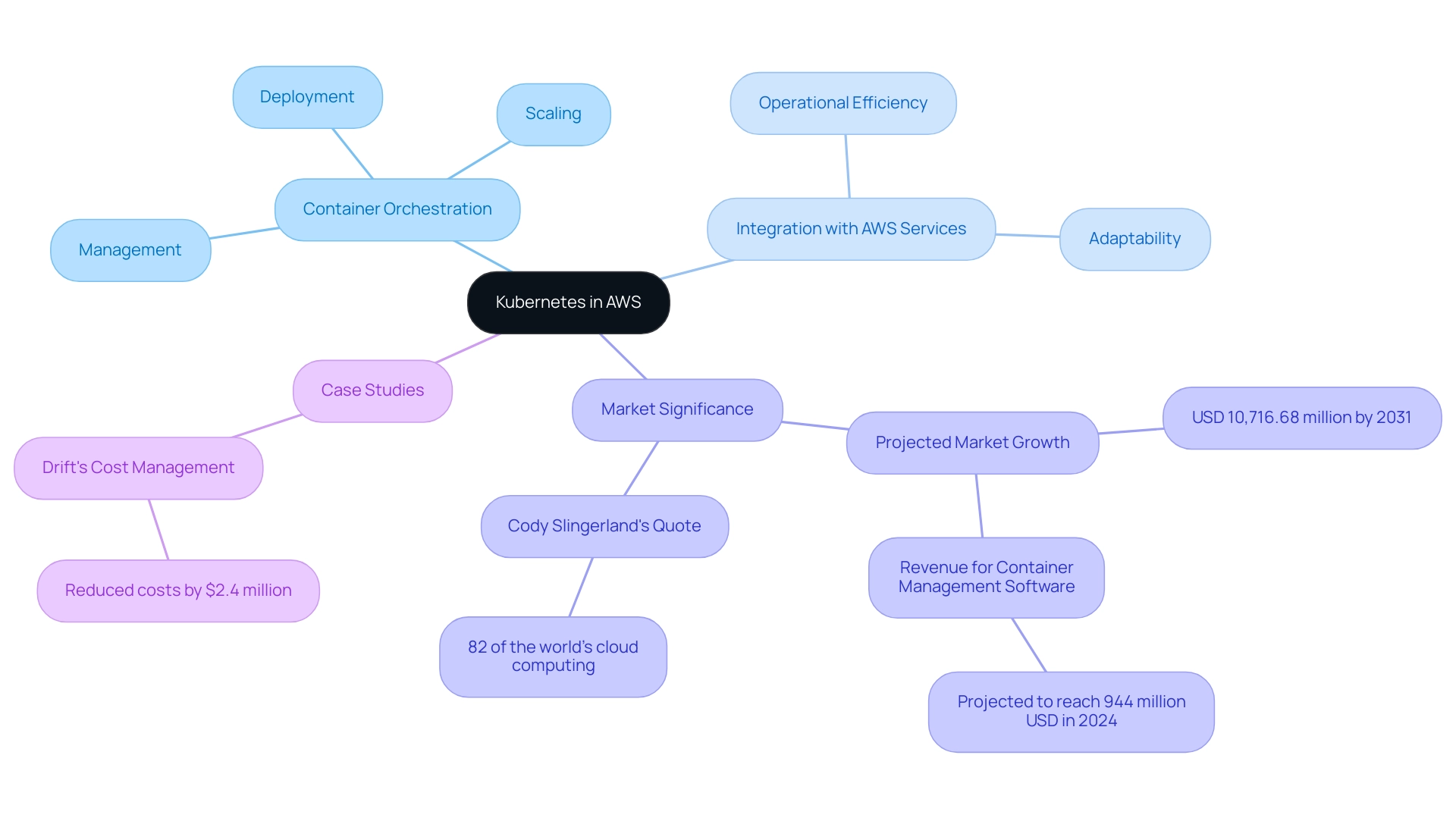

Kubernetes, commonly abbreviated K8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. On AWS, it is a powerful tool for running microservices architectures, enabling developers to deploy and scale applications efficiently in a cloud-native environment. Its integration with AWS services improves operational efficiency and adaptability, both essential for contemporary businesses.

Kubernetes is also engineered for high availability and fault tolerance, making it a preferred choice for organizations aiming to drive innovation and growth in the cloud. With the market for containers and related security expected to reach USD 10,716.68 million by 2031, the significance of Kubernetes as an orchestration platform on AWS becomes increasingly clear. Cody Slingerland observes that, together, two major areas represent 82% of the global cloud computing sector, underscoring the technology's importance in this vast market.

Additionally, case studies such as Drift's successful implementation of container orchestration demonstrate its effectiveness in cloud cost management, with the organization reducing costs by $2.4 million, showcasing real-world applications of this powerful orchestration tool.

Setting Up Your Kubernetes Cluster on AWS: Initial Steps

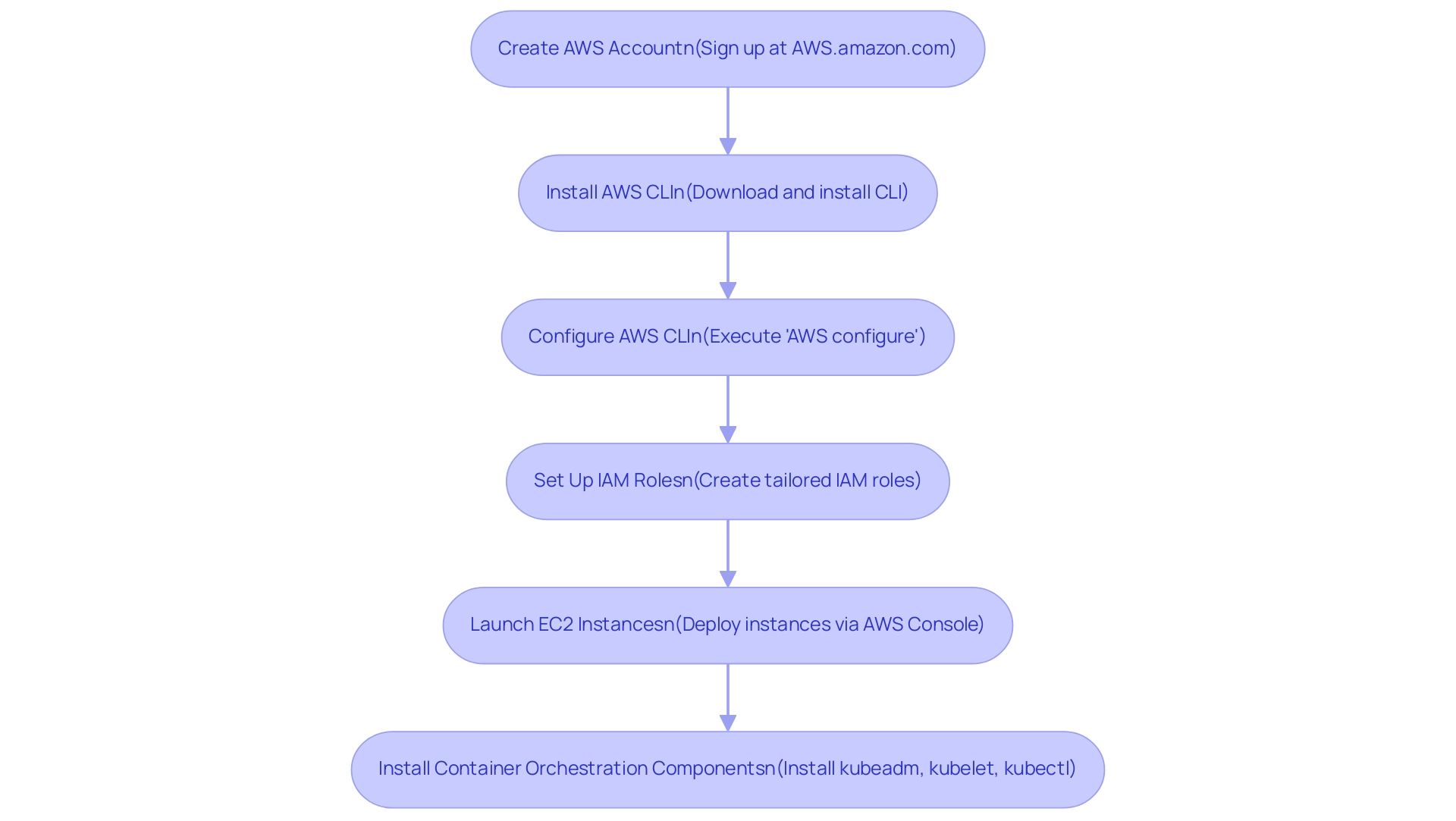

To establish your Kubernetes cluster on AWS, follow these foundational steps:

- Create an AWS Account: If you do not already have an AWS account, sign up at aws.amazon.com.

- Install AWS CLI: Download and install the AWS Command Line Interface (CLI), enabling management of AWS services directly from your terminal.

- Configure AWS CLI: Run `aws configure` and provide your AWS Access Key ID, Secret Access Key, preferred region, and output format to set up your CLI environment.

- Set Up IAM Roles: Create IAM roles tailored for your Kubernetes nodes, ensuring secure interactions with other AWS services.

- Launch EC2 Instances: Use the AWS Management Console to launch the EC2 instances that will serve as your Kubernetes nodes. Select an instance type that matches your application's requirements.

- Install Kubernetes Components: SSH into the EC2 instances and install the core Kubernetes components (`kubeadm`, `kubelet`, and `kubectl`) using your preferred package manager.
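The account, role, and instance steps above can be sketched with the AWS CLI instead of the console. This is a minimal sketch under stated assumptions, not a definitive procedure: the region, the role name `k8s-node-role`, and the instance type `t3.medium` are hypothetical defaults, and the AMI ID and key pair name are values you supply yourself. The role is created with a trust policy only, so any permissions your nodes need still have to be attached separately.

```shell
#!/bin/sh
# Sketch of the initial AWS setup steps using the AWS CLI.
# Every default below is an illustrative assumption, not a recommendation.
REGION="${REGION:-us-east-1}"
ROLE_NAME="${ROLE_NAME:-k8s-node-role}"
INSTANCE_TYPE="${INSTANCE_TYPE:-t3.medium}"

# Print a trust policy that lets EC2 instances assume the node role.
node_trust_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

# Set CLI defaults and confirm that the configured credentials work.
configure_cli() {
  aws configure set region "$REGION"
  aws configure set output json
  aws sts get-caller-identity
}

# Create the node role plus the instance profile EC2 needs to attach it.
create_node_role() {
  policy_file="$(mktemp)"
  node_trust_policy > "$policy_file"
  aws iam create-role --role-name "$ROLE_NAME" \
    --assume-role-policy-document "file://$policy_file"
  aws iam create-instance-profile --instance-profile-name "$ROLE_NAME"
  aws iam add-role-to-instance-profile \
    --instance-profile-name "$ROLE_NAME" --role-name "$ROLE_NAME"
  rm -f "$policy_file"
}

# Launch one node. $1 = AMI ID, $2 = EC2 key pair name (both yours to supply).
launch_node() {
  aws ec2 run-instances --region "$REGION" \
    --image-id "$1" --instance-type "$INSTANCE_TYPE" \
    --key-name "$2" --iam-instance-profile "Name=$ROLE_NAME" --count 1
}
```

The script only defines functions, so nothing runs until you call them, for example `configure_cli && create_node_role` followed by `launch_node <ami-id> <key-pair-name>` with your own values.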

These preparatory steps lay the groundwork for deploying a Kubernetes cluster in your AWS environment. As cloud computing continues to be acknowledged as a contemporary data protection approach, an insight echoed by half of surveyed businesses, this foundational setup positions your organization for scalable and efficient operations. Furthermore, with the platform's value projected to reach USD 9.69 billion by 2031, embracing these technologies will not only enhance your infrastructure but also support informed decision-making and innovation.

This is further exemplified by AWS's pivotal role in digital transformation, where it provides access to advanced cloud technologies that empower businesses to derive actionable insights from data.

Exploring Deployment Options: EKS vs. Manual Setup

When deploying Kubernetes on AWS, companies generally face a crucial decision between using Amazon EKS (Elastic Kubernetes Service) and opting for a manual setup.

- Amazon EKS: This managed service streamlines running Kubernetes by operating the control plane for you. EKS offers automated updates, scaling, and improved security measures, allowing development teams to concentrate on application deployment rather than infrastructure management. For comparison, Azure Kubernetes Service (AKS), a comparable managed offering, reports uptime of 99.95% for clusters that use Azure Availability Zones and 99.9% for those that do not. Organizations looking for a quick, scalable, and secure deployment option may find EKS particularly appealing.

- Manual Setup: In contrast, a manual setup involves configuring Kubernetes directly on EC2 instances. While this approach offers greater control and customization, it demands more technical expertise and ongoing management. Organizations with distinct needs or those looking to reduce expenses may find it attractive; however, it presents considerable challenges, especially regarding security and updates. For instance, case studies indicate that managed EKS nodes allow one-click updates, whereas self-managed nodes leave users fully responsible for security and maintenance. This distinction highlights the operational burden associated with each option. Adolfo Delorenzo summarizes these considerations: 'We hope the above summary helps you choose which service is best for your group.' Delorenzo's remark is a reminder to weigh these factors carefully.

Ultimately, the choice between EKS and manual setup hinges on an organization’s specific needs, technical capabilities, and budgetary constraints.
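To make the contrast concrete, the sketch below places the two paths side by side. It rests on assumptions the article does not state: the managed path uses `eksctl`, a separate command-line tool for EKS, and the cluster name, region, node type, node count, and pod network CIDR are hypothetical values.

```shell
#!/bin/sh
# Sketch contrasting the two deployment paths. All defaults are assumptions.
CLUSTER_NAME="${CLUSTER_NAME:-demo-cluster}"
REGION="${REGION:-us-east-1}"
POD_CIDR="${POD_CIDR:-10.244.0.0/16}"

# Option 1: Amazon EKS. AWS operates the control plane; eksctl also
# creates a managed node group of two workers.
create_eks_cluster() {
  eksctl create cluster \
    --name "$CLUSTER_NAME" --region "$REGION" \
    --nodegroup-name "${CLUSTER_NAME}-nodes" \
    --node-type t3.medium --nodes 2 --managed
}

# Option 2: manual setup. Run this on the EC2 instance chosen as the
# control-plane node, after kubeadm, kubelet, and kubectl are installed.
init_manual_control_plane() {
  sudo kubeadm init --pod-network-cidr "$POD_CIDR"
  # kubeadm then prints a `kubeadm join ...` command to run on each worker.
  # A pod network add-on must also be installed before nodes become Ready.
}
```

The difference in operational burden shows even at this scale: the EKS path is a single call, while the manual path leaves the network add-on, worker joins, upgrades, and patching to you.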

Best Practices for Managing Kubernetes on AWS

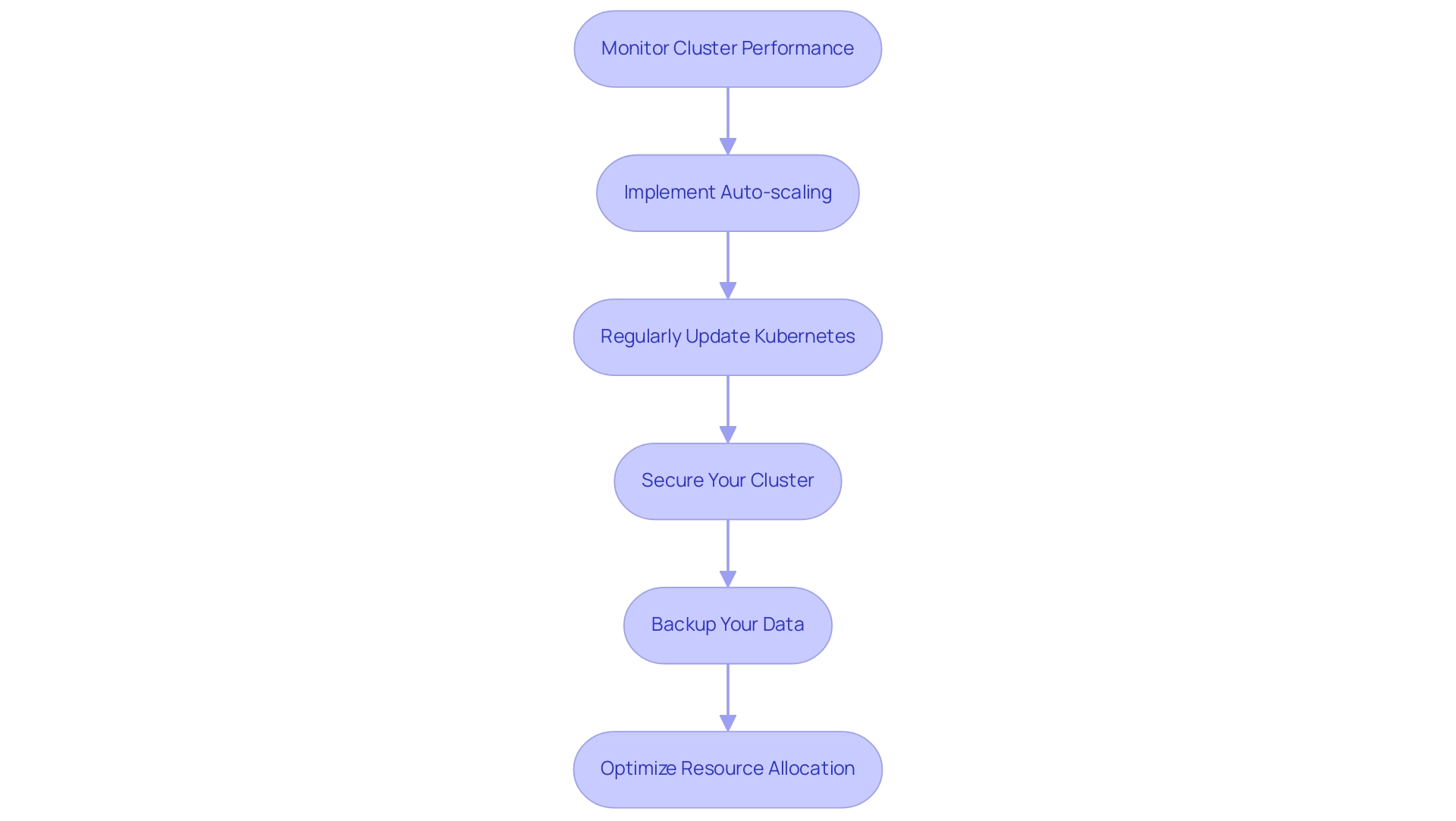

Effectively managing your Kubernetes environment on AWS requires adherence to several best practices designed to optimize performance and security:

- Monitor Cluster Performance: Use Amazon CloudWatch together with cluster metrics to gain insight into the health and performance of your cluster. This enables proactive identification of issues before they escalate.

- Implement Auto-scaling: Configure the Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler to adjust resources dynamically based on real-time demand. The HPA scales the number of pods in response to observed CPU utilization, while the Cluster Autoscaler adds or removes nodes as pod demand changes. One case study showed that an HPA configured to scale pods on CPU usage significantly improved resource allocation and management.

- Regularly Update Kubernetes: Keeping your Kubernetes version current is essential. Regular updates not only introduce the latest features but also improve security by providing critical patches that shield your environment from vulnerabilities.

- Secure Your Cluster: Implementing role-based access control (RBAC) and network policies is crucial for enhancing security. These measures restrict access to sensitive resources and ensure that only authorized personnel can interact with the cluster.

- Backup Your Data: Implement a robust backup strategy for your Kubernetes configurations and persistent volumes. Regular backups are vital to prevent data loss and ensure quick recovery in case of an incident.

- Optimize Resource Allocation: Define resource requests and limits for your pods to ensure efficient use of your cluster's resources. Setting appropriate limits, such as 1Gi for memory and 500m for CPU, helps prevent resource contention and maintain overall system stability. As one DevOps engineer noted, 'Our biggest issue is how complex it is to the layperson. It slowed us down dramatically. We could have been at least twice as far as we are now.' This highlights the value of clear resource management practices.
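The auto-scaling and resource allocation practices can be sketched as two small manifest generators. This is an illustration under assumptions: the limits (500m CPU, 1Gi memory) come from the text above, while the requests, replica counts, and the 70% CPU target in the usage example are hypothetical. The HPA computes CPU utilization relative to each pod's CPU request, and it needs a metrics source such as metrics-server running in the cluster.

```shell
#!/bin/sh
# Sketch: print manifests for a Deployment with resource requests and
# limits, and for an HPA that scales it on CPU utilization.

# $1 = application name, $2 = container image
deployment_manifest() {
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $1
spec:
  replicas: 2
  selector:
    matchLabels:
      app: $1
  template:
    metadata:
      labels:
        app: $1
    spec:
      containers:
        - name: $1
          image: $2
          resources:
            requests:   # assumed values; the HPA measures CPU against these
              cpu: 250m
              memory: 512Mi
            limits:     # limits taken from the example in the text
              cpu: 500m
              memory: 1Gi
EOF
}

# $1 = deployment name, $2 = min replicas, $3 = max replicas,
# $4 = target average CPU utilization in percent
hpa_manifest() {
  cat <<EOF
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: $1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: $1
  minReplicas: $2
  maxReplicas: $3
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: $4
EOF
}
```

A typical use is to pipe the output to the cluster, for example `deployment_manifest web nginx:1.27 | kubectl apply -f -` followed by `hpa_manifest web 2 10 70 | kubectl apply -f -`, where the name, image, and numbers are placeholders.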

By following these best practices, you can greatly improve the reliability and efficiency of your Kubernetes deployments on AWS, ultimately resulting in a more robust and responsive infrastructure.
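For the security practice in particular, a read-only RBAC role and a default-deny network policy are common starting points. The sketch below assumes a hypothetical namespace `demo` and a role named `pod-reader`. Whether the network policy is actually enforced depends on the network plugin your cluster runs.

```shell
#!/bin/sh
# Sketch: least-privilege access and a default-deny ingress policy.
# The namespace and role name are illustrative assumptions.
NAMESPACE="${NAMESPACE:-demo}"

# Grant a user read-only access to pods in the namespace. $1 = user name.
grant_pod_read_access() {
  kubectl create role pod-reader \
    --verb=get,list,watch --resource=pods -n "$NAMESPACE"
  kubectl create rolebinding "pod-reader-$1" \
    --role=pod-reader --user="$1" -n "$NAMESPACE"
}

# Ask the API server what the user may do. $1 = user name.
check_access() {
  kubectl auth can-i list pods --as "$1" -n "$NAMESPACE"
  kubectl auth can-i delete pods --as "$1" -n "$NAMESPACE"
}

# Print a policy that blocks all ingress traffic to pods in the namespace
# until more specific policies allow it.
default_deny_ingress() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: $NAMESPACE
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF
}
```

After `grant_pod_read_access <user>`, running `check_access <user>` is a quick way to confirm the binding grants reads but not deletes, and `default_deny_ingress | kubectl apply -f -` applies the policy.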

Troubleshooting Common Kubernetes Issues on AWS

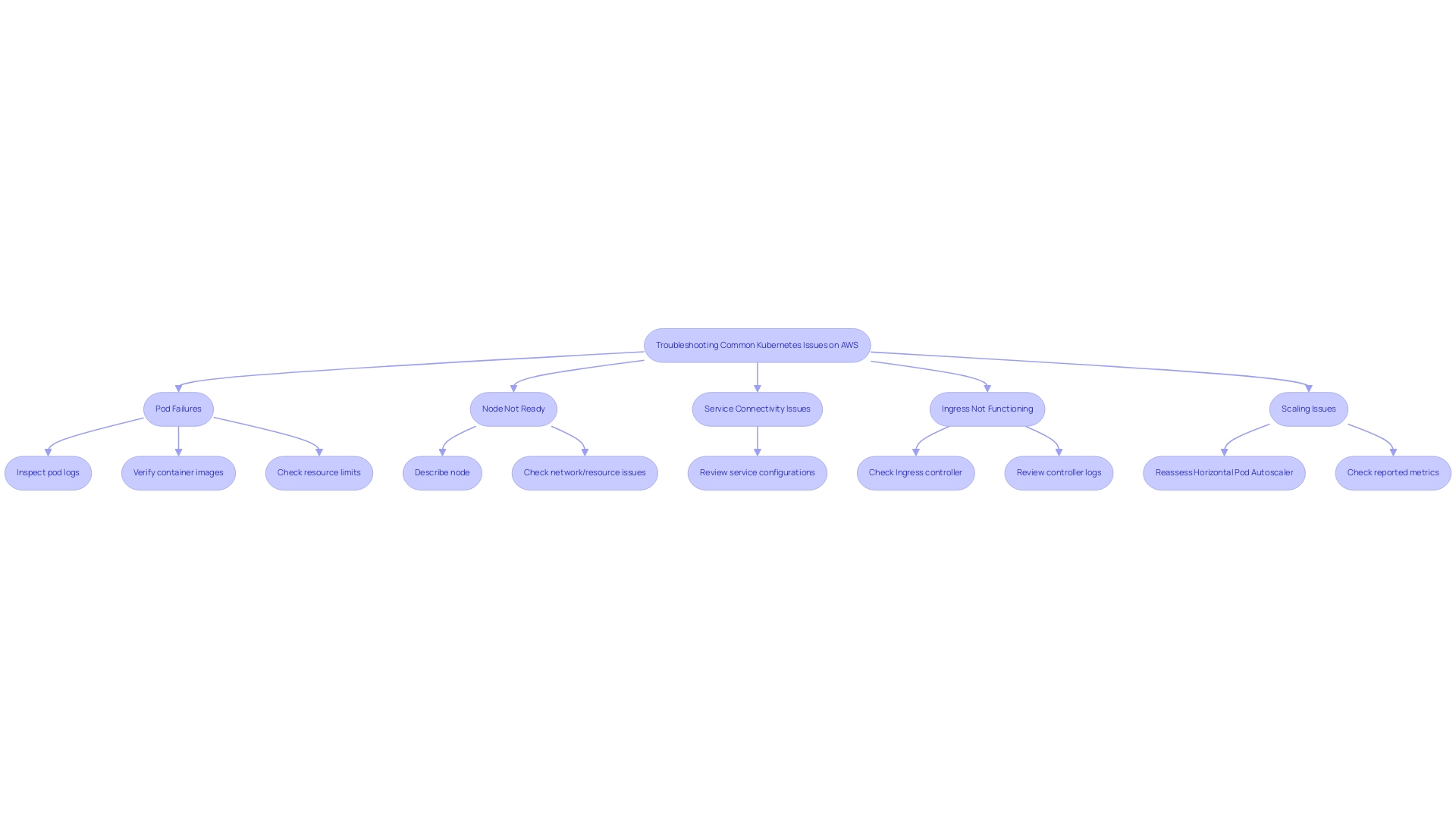

Deploying and managing Kubernetes on AWS presents a variety of challenges that require systematic troubleshooting to ensure optimal performance. Below are key issues frequently encountered, along with effective resolutions:

- Pod Failures: Begin by inspecting pod logs with `kubectl logs <pod-name>` to pinpoint errors. Verify that container images are accessible and that resource limits, such as CPU and memory, are not exceeded. With 54% of organizations recognizing security as a primary challenge, strong access control and image scanning are essential. As noted by Veeam, "Cloud computing is a modern data protection strategy," emphasizing the need for secure practices in cloud environments.

- Node Not Ready: If a node is reported as `NotReady`, use `kubectl describe node <node-name>` to investigate further. Check for underlying network or resource allocation issues that may be affecting node health. Regular monitoring and alerts can preempt potential downtime, reinforcing the importance of a proactive approach in cloud environments.

- Service Connectivity Issues: Confirm that your services are accurately defined and that no network policies are obstructing traffic flow. Use `kubectl get svc` to review service configurations. Communication between services must be seamless, as connectivity issues can severely impact application performance.

- Ingress Not Functioning: If Ingress traffic routing fails, check that the Ingress controller is operational and properly configured. Review the controller's logs for any anomalies that could hinder traffic management. According to industry insights, effective data protection strategies depend on the reliability of such components, particularly as organizations increasingly view cloud computing as essential for disaster recovery.

- Scaling Issues: If pods are not scaling as anticipated, reassess the Horizontal Pod Autoscaler settings and ensure that relevant metrics are being reported accurately. Understanding pod failure rates in deployments is essential for optimizing scaling strategies and maintaining application resilience. The investment in advanced protection platforms shows how enterprises are proactively tackling these challenges and maintaining strong safeguards in their cloud environments.
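The checks above can be gathered into a few helper functions so the same commands run in the same order each time. This is a minimal sketch: the function names and the default namespace are hypothetical, and `kubectl top` only returns data when a metrics source such as metrics-server is installed.

```shell
#!/bin/sh
# Sketch: first-pass triage helpers built on standard kubectl commands.
NAMESPACE="${NAMESPACE:-default}"

# Pod failures: events and status, then recent logs, then the logs of the
# previously crashed container if there was one. $1 = pod name.
pod_triage() {
  kubectl describe pod "$1" -n "$NAMESPACE"
  kubectl logs "$1" -n "$NAMESPACE" --tail=50
  kubectl logs "$1" -n "$NAMESPACE" --previous --tail=50
}

# Node not ready: overall node status, then conditions and events for one
# node. $1 = node name.
node_triage() {
  kubectl get nodes -o wide
  kubectl describe node "$1"
}

# Service connectivity: the service definition, the endpoints behind it,
# and any network policies in the namespace. $1 = service name.
service_triage() {
  kubectl get svc "$1" -n "$NAMESPACE" -o wide
  kubectl get endpoints "$1" -n "$NAMESPACE"
  kubectl get networkpolicy -n "$NAMESPACE"
}

# Ingress and scaling: ingress rules, HPA status, and current pod usage.
ingress_and_scaling_triage() {
  kubectl get ingress -n "$NAMESPACE"
  kubectl get hpa -n "$NAMESPACE"
  kubectl top pods -n "$NAMESPACE"
}
```

For example, `NAMESPACE=shop pod_triage <pod-name>` walks through the pod checks for a pod in a hypothetical `shop` namespace. A service with an empty endpoints list usually points to a selector that matches no ready pods.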

By systematically following these troubleshooting steps, organizations can swiftly address common Kubernetes issues on AWS, thereby safeguarding the health and security of their environments. This approach aligns with the finding that half of surveyed businesses view cloud computing as crucial for their data protection strategy, particularly in the context of disaster recovery.

Conclusion

Kubernetes stands as a transformative force within the AWS ecosystem, enabling organizations to effectively navigate the complexities of cloud-native architectures. By automating the deployment, scaling, and management of containerized applications, Kubernetes not only enhances operational efficiency but also provides the necessary resilience and scalability demanded by modern business environments.

Establishing a Kubernetes cluster on AWS involves several foundational steps, from creating an AWS account to configuring IAM roles and deploying EC2 instances. Organizations must carefully consider their deployment options, weighing the benefits of Amazon EKS as a managed service against the control afforded by a manual setup. Each choice presents unique advantages and challenges, underscoring the importance of aligning decisions with organizational capabilities and requirements.

Implementing best practices for managing Kubernetes on AWS is crucial for optimizing performance and security. Monitoring cluster health, employing auto-scaling strategies, and maintaining robust security measures are essential components of effective management. Additionally, proactive troubleshooting of common issues can significantly enhance operational reliability, ensuring that organizations can swiftly address challenges as they arise.

In summary, as organizations look to leverage the full potential of cloud technologies, understanding Kubernetes within the AWS framework is indispensable. By adopting the strategies outlined in this guide, businesses can position themselves for success in an increasingly competitive landscape, driving innovation while maintaining a secure and efficient cloud infrastructure. Ultimately, embracing Kubernetes is not just a technical choice; it is a strategic imperative that will shape the future of cloud computing and the way organizations operate within it.