Introduction

In the rapidly evolving landscape of software development, mastering containerization and orchestration technologies is no longer optional but essential for organizations striving for agility and resilience. Docker and Kubernetes stand at the forefront of this transformation, offering developers the tools needed to automate application deployment and manage containerized environments effectively.

Docker allows for the creation of lightweight, portable containers that encapsulate applications and their dependencies, while Kubernetes orchestrates these containers at scale, providing features such as load balancing and self-healing capabilities. As industries increasingly adopt these technologies, understanding their intricacies is crucial for harnessing their full potential.

This article delves into the fundamental concepts of Docker and Kubernetes, guiding readers through the processes of:

- Preparing Docker images

- Deploying them within Kubernetes clusters

- Addressing common challenges that arise in the deployment journey

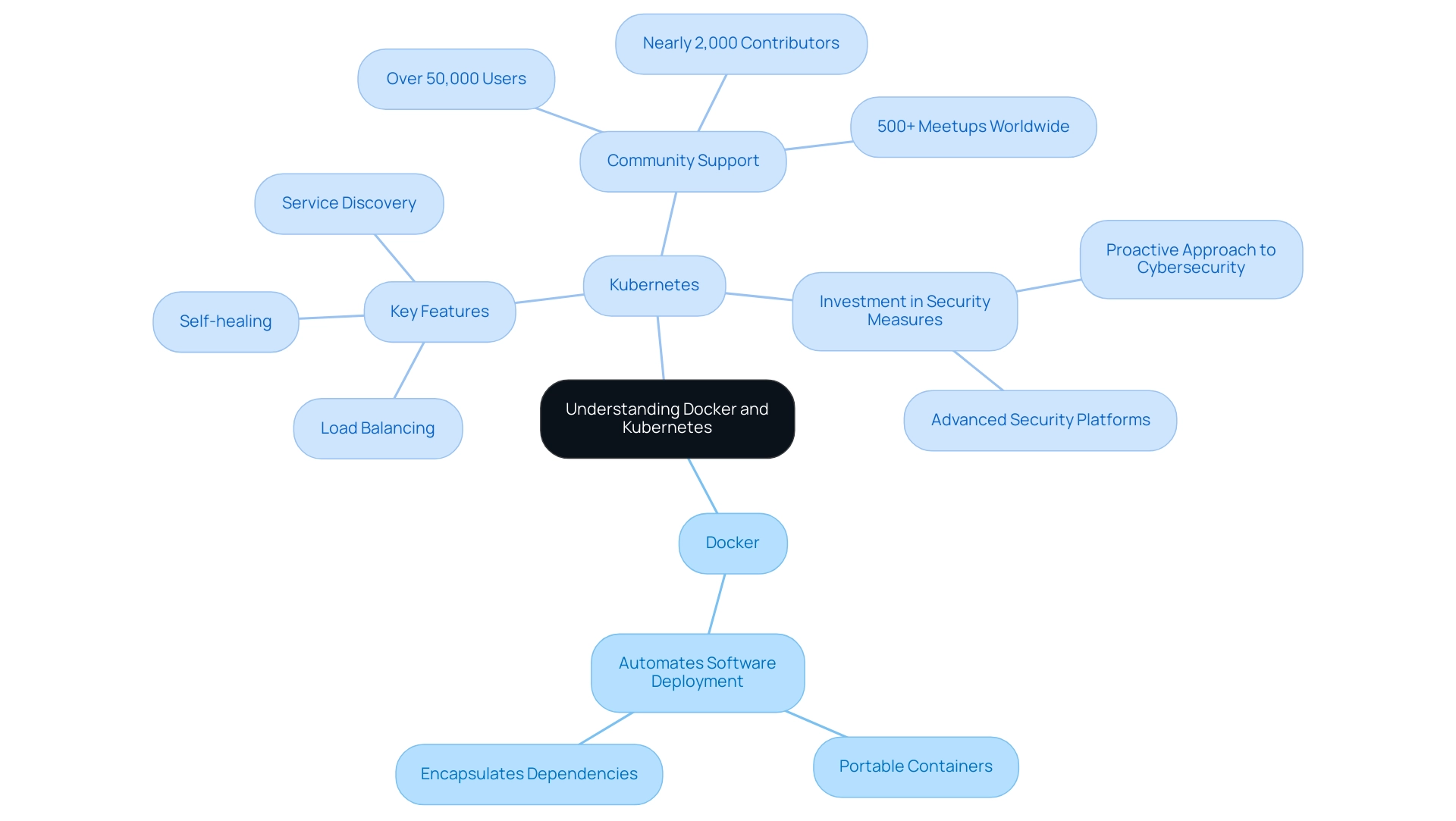

Understanding Docker and Kubernetes: The Basics

Docker serves as a robust platform that empowers developers to automate the deployment of software through lightweight, portable containers. These containers encapsulate not only the software itself but also all of its dependencies, ensuring consistency and reliability across environments. Kubernetes, by contrast, is an orchestration system designed for managing containerized applications at scale.

With over 50,000 users and nearly 2,000 contributors, Kubernetes shows significant community support and widespread adoption across diverse industries. It simplifies deploying, scaling, and managing applications while offering essential features such as:

- Load balancing

- Self-healing capabilities

- Service discovery

Furthermore, many organizations are investing in advanced security measures to protect critical systems and data in cloud-native environments, reflecting a proactive approach to addressing the sophisticated nature of cyberattacks.

Mastering these technologies is imperative for deploying a Docker image to Kubernetes and managing software effectively in environments where agility and resilience are paramount.

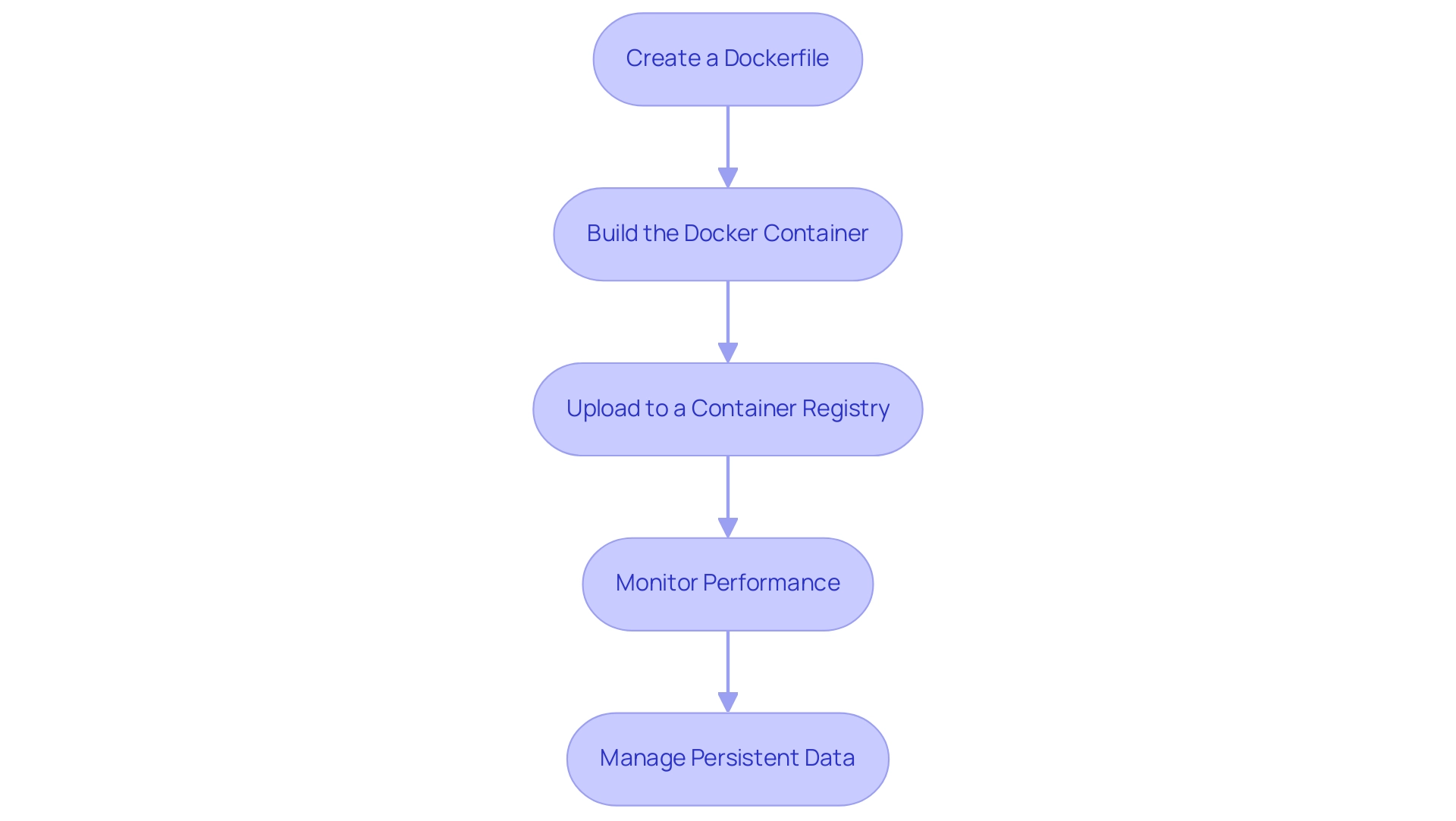

Preparing Your Docker Image for Deployment

Preparing your Docker image is a vital step in ensuring that your software is ready for deployment. Follow these steps to create an efficient Docker image:

- Create a Dockerfile: The Dockerfile serves as the blueprint for your image, detailing how it should be built. Start with a base image appropriate to your use case. For example, if you are using Node.js, your Dockerfile might look like this:

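```dockerfile
# Base image. Node.js 14 is end-of-life, so prefer a currently supported tag in production.
FROM node:14

# All following instructions run relative to /app
WORKDIR /app

# Copy the dependency manifests first so the install layer is cached between builds
COPY package*.json ./
RUN npm install

# Copy the rest of the application source
COPY . .

# Default command when the container starts
CMD ["npm", "start"]
```

This configuration specifies the base image, sets the working directory, installs the necessary dependencies, copies your application code into the image, and defines the command that runs your application.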

- Build the Docker Image: Once your Dockerfile is ready, use the Docker CLI to build your image. Execute the following command in the directory that contains the Dockerfile:

```bash
docker build -t your-image-name:tag .
```

This command packages your code and dependencies into a single image, tagged for easy identification.

- Upload to a Container Registry: After building your image, make it available for deployment by pushing it to a container registry, such as Docker Hub or Google Container Registry. The image name must include the registry path (for Docker Hub, your account namespace), and you need to authenticate with docker login first. Then push the image:

```bash
docker push your-image-name:tag
```

This step ensures that your image is readily available for the Kubernetes deployment process.
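The build and push steps above can be chained in a small script. The following is a minimal sketch, not a definitive workflow: the function names `image_ref` and `build_and_push` and the example registry, namespace, and tag values are hypothetical, and it assumes `docker login` has already been run for the target registry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compose a fully qualified image reference.
# Usage: image_ref REGISTRY NAMESPACE NAME TAG
image_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Build the image from the Dockerfile in the current directory, then push it.
# The push only runs if the build succeeds.
# Usage: build_and_push REGISTRY NAMESPACE NAME TAG
build_and_push() {
  local ref
  ref="$(image_ref "$@")"
  docker build -t "$ref" . && docker push "$ref"
}
```

For example, `build_and_push docker.io your-username your-image-name 1.0.0` would build and push `docker.io/your-username/your-image-name:1.0.0`. The same fully qualified reference is what the Kubernetes manifest later needs in its `image` field.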

Incorporating best practices into your Dockerfile configurations not only streamlines the deployment process but also optimizes performance. cAdvisor, an open-source tool developed by Google, has been described as "a basic tool that provides the ability to create real-time metrics for container performance optimization and troubleshooting." Monitoring your containers is crucial for maintaining optimal performance and security, and running containers as non-root users mitigates risks and helps prevent unauthorized access.
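As an illustration of the non-root recommendation, the sketch below prints a Dockerfile variant that switches to an unprivileged user before the application starts. It assumes the official Node.js base image, which ships with a built-in `node` user; the function name `nonroot_dockerfile` is hypothetical.

```shell
#!/usr/bin/env bash
# Print a Dockerfile that installs dependencies as root, then drops privileges.
# Assumption: the base image provides a "node" user, as the official Node.js images do.
nonroot_dockerfile() {
  cat <<'EOF'
FROM node:14
WORKDIR /app
COPY . .
RUN npm install
# Everything from here on, including the running app, uses the unprivileged user
USER node
CMD ["npm", "start"]
EOF
}
```

Running `nonroot_dockerfile > Dockerfile` writes the file to the current directory. Dependencies are installed before the `USER` instruction because `/app` is created by root and the unprivileged user cannot write to it.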

Additionally, consider the performance metrics of your containers. For instance, a container with ID a3f78cb32a8e might report CPU usage of 0.00% and memory usage of 1.228 MiB against a limit of 16.455 GiB. Tracking figures like these shows how much headroom a container has and whether its limits are set sensibly.
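Figures like these resemble what the `docker stats` command reports. A minimal sketch for taking a one-off snapshot follows; the wrapper name `container_usage` is hypothetical, while `--no-stream` and the `--format` placeholders are standard Docker CLI options.

```shell
#!/usr/bin/env bash
# Print a single snapshot of CPU and memory usage instead of a live stream.
# Optional arguments are container names or IDs; with none, all running containers are shown.
container_usage() {
  docker stats --no-stream \
    --format 'table {{.ID}}\t{{.CPUPerc}}\t{{.MemUsage}}' "$@"
}
```

Calling `container_usage` with no arguments lists every running container, and `container_usage a3f78cb32a8e` restricts the output to one.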

Furthermore, managing persistent data in containerized systems is essential. A practical approach is to use Docker volumes, which allow you to define a volume and mount it to a directory inside your container. This ensures that your data persists even if the container is stopped or removed, enhancing the reliability of your applications.
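The volume approach described above can be sketched as follows. The function name `run_with_volume` and the example volume name and mount path are hypothetical; `docker volume create` and the `-v` flag are standard Docker CLI features.

```shell
#!/usr/bin/env bash
# Create a named volume (a no-op if it already exists) and start a
# detached container with the volume mounted at the given path.
# Usage: run_with_volume VOLUME MOUNT_PATH IMAGE
run_with_volume() {
  docker volume create "$1" >/dev/null &&
    docker run -d -v "$1:$2" "$3"
}
```

For example, `run_with_volume app-data /app/data your-image-name:tag` keeps everything written under `/app/data` in the `app-data` volume, which survives `docker rm` of the container.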

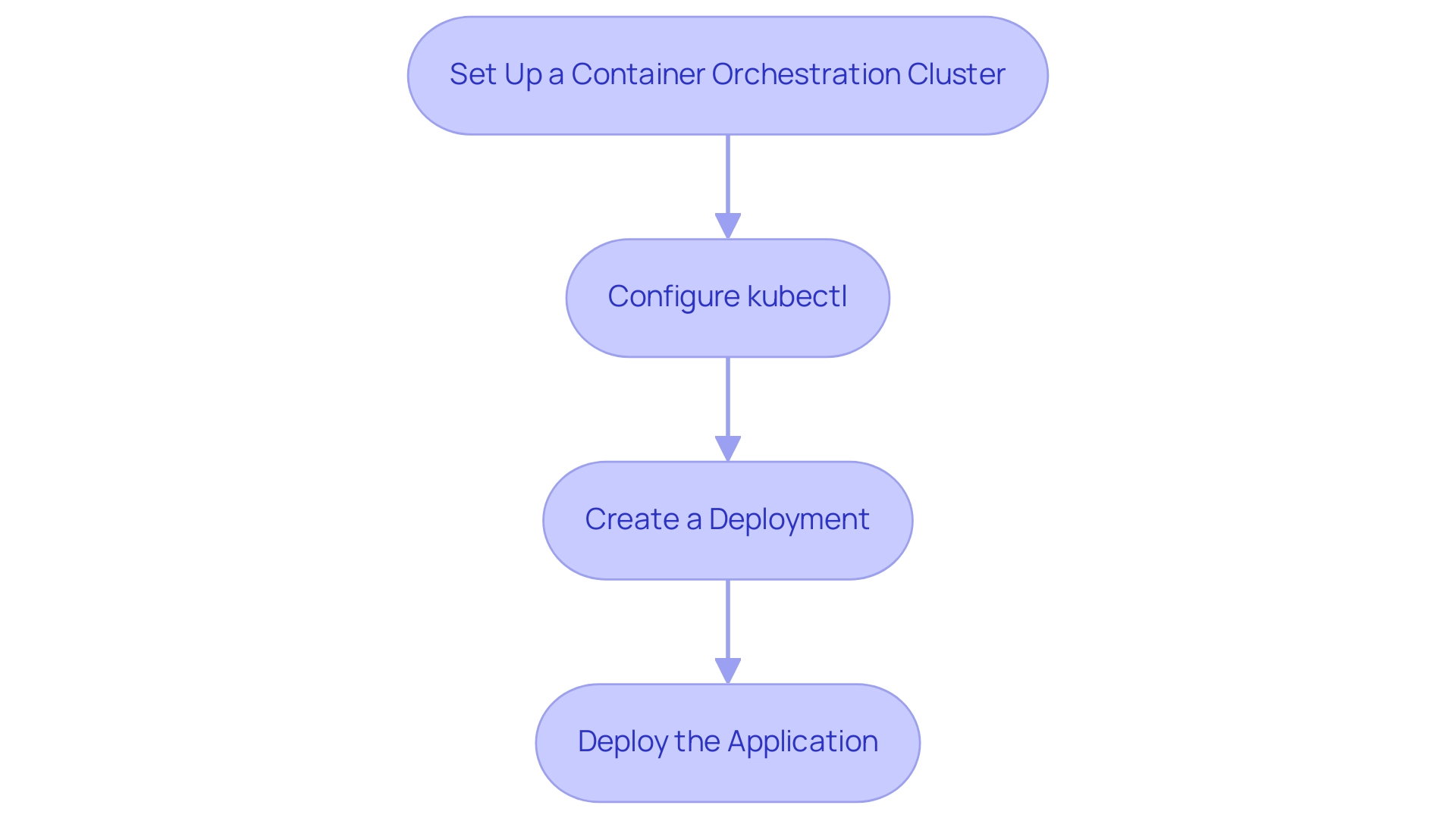

Creating a Kubernetes Cluster and Deploying Your Image

To establish a Kubernetes cluster and deploy your Docker image, adhere to the following structured steps:

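- Set Up a Kubernetes Cluster: Use your preferred cloud provider's console or command-line tool to create a cluster. For instance, in Google Kubernetes Engine (GKE), execute the following (depending on your gcloud configuration, you may also need to pass a location flag such as --zone or --region):

```bash
gcloud container clusters create your-cluster-name
```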

- Configure kubectl: Verify that `kubectl` is installed and correctly set up to interact with your cluster. You can configure it using:

```bash
gcloud container clusters get-credentials your-cluster-name
```

- Create a Deployment: Draft a deployment YAML file (e.g., `deployment.yaml`) to specify the deployment configuration:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: your-deployment-name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: your-app
  template:
    metadata:
      labels:
        app: your-app
    spec:
      containers:
        - name: your-container-name
          image: your-image-name:tag
```

- Deploy the Application: Execute the following command to create your deployment based on the YAML configuration:

```bash
kubectl apply -f deployment.yaml
```

This command creates the number of replicas specified in the manifest (two in this example) within the cluster. Kubernetes is also increasingly recognized for its ability to facilitate polyglot programming, as highlighted by Dynatrace's Chief Product Officer, Florian Ortner. The number of Kubernetes clusters deployed globally was projected to reach unprecedented levels in 2024, and observations from 2023 indicate nearly one billion unique containers, underscoring the scale at which the platform is used.
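Because `kubectl apply` returns as soon as the API server accepts the manifest, it helps to confirm that the replicas actually became ready. A minimal sketch, assuming the deployment name and `app` label from the manifest above; the function name `verify_rollout` and the 120-second default timeout are illustrative choices.

```shell
#!/usr/bin/env bash
# Block until the Deployment finishes rolling out (or the timeout expires),
# then list the pods that carry the matching app label.
# Usage: verify_rollout DEPLOYMENT APP_LABEL [TIMEOUT]
verify_rollout() {
  kubectl rollout status "deployment/$1" --timeout="${3:-120s}" &&
    kubectl get pods -l "app=$2" -o wide
}
```

For the example manifest this would be `verify_rollout your-deployment-name your-app`. A non-zero exit status means the rollout did not complete within the timeout.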

However, CTOs must also consider common security issues such as writable file systems, privilege escalation, and image vulnerabilities when deploying applications. Adopting container orchestration not only simplifies deployment but also improves operational efficiency across various programming environments. As CloudBees states, "This collaboration demonstrates CloudBees' confidence in the platform's potential and its essential role in container orchestration going forward."
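Two of the issues named above, writable file systems and privilege escalation, can be addressed in the manifest through the container's `securityContext`. The sketch below prints a hardened variant of the earlier deployment; the function name `hardened_deployment` is hypothetical. Note that `runAsNonRoot: true` assumes the image already runs as a non-root user, and `readOnlyRootFilesystem: true` assumes the application does not write to its root file system.

```shell
#!/usr/bin/env bash
# Print a Deployment manifest with a restrictive container securityContext.
# Usage: hardened_deployment DEPLOYMENT_NAME APP_LABEL IMAGE
hardened_deployment() {
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $1
spec:
  replicas: 2
  selector:
    matchLabels:
      app: $2
  template:
    metadata:
      labels:
        app: $2
    spec:
      containers:
        - name: $1
          image: $3
          securityContext:
            runAsNonRoot: true              # refuse to start if the image runs as root
            allowPrivilegeEscalation: false # block setuid-style privilege gains
            readOnlyRootFilesystem: true    # make the container's root file system read-only
EOF
}
```

The output can be piped straight to the cluster with `hardened_deployment your-deployment-name your-app your-image-name:tag | kubectl apply -f -`.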

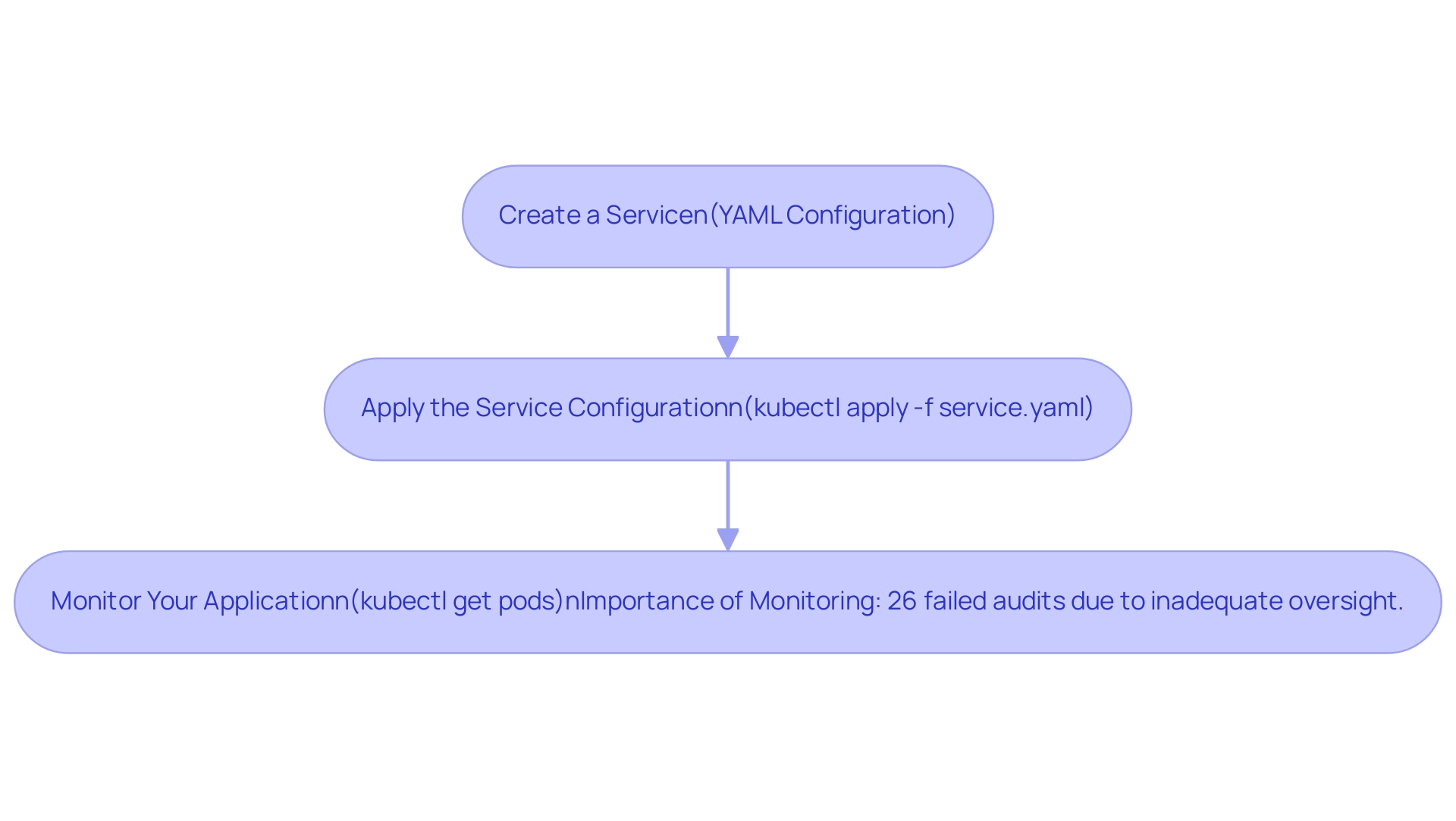

Configuring Kubernetes Resources for Your Application

After deploying your Docker image to Kubernetes, it is critical to configure the resources that expose and monitor it for optimal performance and security. Here's a structured approach:

- Create a Service: To facilitate external access to your software, begin by defining a service in a YAML configuration file (for example, `service.yaml`). This file should include the following structure:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: your-service-name
spec:
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app: your-app
```

This configuration specifies that your application will be accessible via port 80, directing traffic to port 8080 of your application.

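- Apply the Service Configuration: Once the YAML file is prepared, deploy the service by executing the following command:

```bash
kubectl apply -f service.yaml
```

This command registers your service with the Kubernetes API. With the LoadBalancer type, the cloud provider may take a minute or more to provision the external address before the service is reachable from outside the cluster.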

- Monitor Your Application: To ensure your application pods are functioning as intended, use the command:

```bash
kubectl get pods
```

Regular monitoring is critical: according to one report, 26% of organizations have failed audits due to inadequate oversight. Effective monitoring maintains performance and availability and supports the growing emphasis on robust security in the face of evolving cyber threats. As highlighted in a case study on 'Investment in Advanced Security Platforms,' many IT organizations are investing in advanced security protocols to protect critical systems and data against sophisticated cyberattacks.

In a landscape where 1,573 Kubernetes users in Brazil are actively navigating these challenges, adopting best practices for monitoring and security is imperative for organizational success in 2024. The growth of cloud-native technologies is also changing how applications are developed and deployed, making it crucial to incorporate these advancements into your management strategy.
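One practical check after applying the service is to wait for its external address. The following is a rough sketch under stated assumptions: the function names and the `POLL_SECONDS` variable are hypothetical, and the JSONPath reads the `ip` field, whereas some providers publish a `hostname` there instead.

```shell
#!/usr/bin/env bash
# Print the external IP of a LoadBalancer Service (empty until one is assigned).
service_external_ip() {
  kubectl get service "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll until an address appears or the attempts run out.
# Usage: wait_for_external_ip SERVICE [ATTEMPTS]
wait_for_external_ip() {
  local attempts="${2:-30}"
  local ip=""
  while [ "$attempts" -gt 0 ]; do
    ip="$(service_external_ip "$1")"
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "${POLL_SECONDS:-5}"
  done
  return 1
}
```

With the example service, `wait_for_external_ip your-service-name` prints the address once it is available, after which the application should answer on port 80.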

Troubleshooting Common Deployment Issues

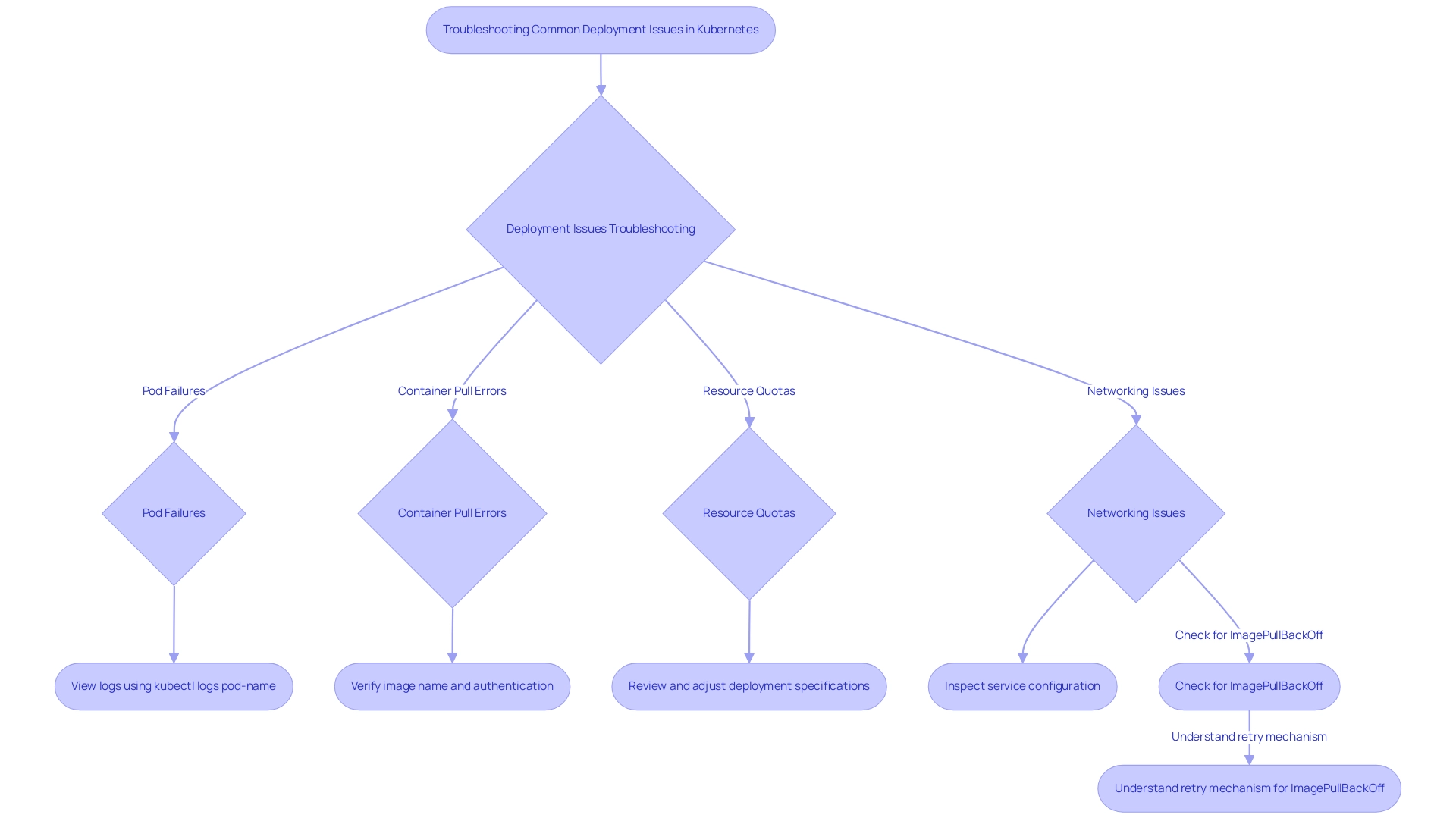

In the realm of Kubernetes implementations, addressing common issues is crucial for maintaining operational efficiency and ensuring robust security measures. Here are some prevalent deployment challenges and their corresponding troubleshooting strategies:

- Pod Failures: A common problem is pods that fail to start. To diagnose the issue, view the pod's logs:

```bash
kubectl logs pod-name
```

Analyzing the logs helps identify errors in your application code or configuration that may be preventing the pod from starting. If the container never started and no logs exist, kubectl describe pod pod-name shows the scheduling and image-pull events instead.

- Image Pull Errors: Kubernetes depends on successfully pulling Docker images to create containers. If a pull fails, verify that the image name and tag are correct and that the cluster is properly authenticated to your container registry. Keep in mind that 39% of organizations are still in the early stages of DevSecOps adoption, highlighting the importance of robust security practices during this process. Frequent security concerns such as image vulnerabilities and writable file systems can worsen deployment challenges, making it crucial to enforce strict security protocols.

- Resource Quotas: Pods may be rejected if they would exceed a resource quota set within your cluster, or remain unscheduled if no node has enough free CPU or memory for their requests. Review and adjust your deployment specifications to align with the defined resource quotas, ensuring that sufficient resources are available for your pods.

- Networking Issues: When services are unreachable, inspect the service configuration. Confirm that the necessary ports are exposed, that the service selector matches your pod labels, and that any network policies applied do not inadvertently restrict access.
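The checks in the list above map onto a handful of standard `kubectl` commands. The sketch below groups them into helper functions whose names are hypothetical; the commands and flags themselves are part of the regular kubectl CLI.

```shell
#!/usr/bin/env bash
# Pod failures: show the pod's events, its logs, and related cluster events.
# Tries the previous (crashed) container's logs first, then the current one.
diagnose_pod() {
  kubectl describe pod "$1"
  kubectl logs "$1" --previous --tail=50 || kubectl logs "$1" --tail=50
  kubectl get events --field-selector "involvedObject.name=$1" \
    --sort-by=.metadata.creationTimestamp
}

# Resource quotas: show used versus hard limits in a namespace.
check_quota() {
  kubectl describe resourcequota --namespace "${1:-default}"
}

# Networking: an empty endpoints list usually means the Service selector
# does not match any ready pod.
check_service() {
  kubectl get service "$1" -o wide
  kubectl get endpoints "$1"
}
```

For example, `diagnose_pod pod-name` covers the first item in the list, while `check_service your-service-name` is a quick first step when a service is unreachable.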

Additionally, understanding the ImagePullBackOff status is vital.

This status indicates that Kubernetes cannot pull a container image, typically because of an invalid image name or tag, missing registry credentials, or network issues. Kubernetes retries the pull with an increasing delay between attempts, capped at five minutes, and the pod shows ImagePullBackOff while it waits for the next retry. Familiarity with this status is essential for ensuring successful container setup.
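To confirm that a pod is stuck on an image pull and to fix the common credentials case, something like the following may help. This is a sketch under assumptions: the function names, the `REGISTRY_PASSWORD` environment variable, and the choice to attach the secret to the `default` service account are illustrative, not the only way to supply pull credentials.

```shell
#!/usr/bin/env bash
# Print why a pod's containers are waiting, e.g. ErrImagePull or ImagePullBackOff.
waiting_reason() {
  kubectl get pod "$1" \
    -o jsonpath='{.status.containerStatuses[*].state.waiting.reason}'
}

# Create a registry credential secret and attach it to the default service
# account so new pods in the namespace can pull private images.
# Usage: REGISTRY_PASSWORD=... create_pull_secret SECRET_NAME REGISTRY_SERVER USERNAME
create_pull_secret() {
  kubectl create secret docker-registry "$1" \
    --docker-server="$2" \
    --docker-username="$3" \
    --docker-password="${REGISTRY_PASSWORD:?set REGISTRY_PASSWORD first}" &&
    kubectl patch serviceaccount default \
      -p "{\"imagePullSecrets\": [{\"name\": \"$1\"}]}"
}
```

After the secret is in place, deleting the affected pod lets its Deployment recreate it with the new credentials rather than waiting out the current back-off delay.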

With the platform's market value projected to reach USD 9.69 billion by 2031, prioritizing security measures and compliance with regulatory standards in hybrid cloud environments becomes increasingly critical. By proactively addressing these common deployment issues, you can significantly improve the experience of deploying a Docker image to Kubernetes.

Conclusion

The journey through Docker and Kubernetes reveals their indispensable roles in modern software development. Docker's capability to create portable containers ensures that applications, along with their dependencies, are consistent and reliable across different environments. This foundational step is crucial for any deployment strategy, as it sets the stage for seamless integration into orchestration systems like Kubernetes.

Kubernetes amplifies the power of Docker by managing these containers at scale, providing essential features such as load balancing and self-healing mechanisms. The structured approach to preparing Docker images, deploying them within Kubernetes clusters, and configuring resources not only enhances operational efficiency but also fortifies security measures in an increasingly complex cyber landscape. Organizations must prioritize monitoring and troubleshooting to address common deployment challenges effectively, ensuring that their applications remain resilient and performant.

In conclusion, as the adoption of containerization and orchestration technologies accelerates, mastering Docker and Kubernetes becomes paramount for organizations aiming to thrive in a competitive environment. By understanding and implementing best practices in deployment and management, businesses can harness the full potential of these tools, driving innovation and agility while safeguarding their applications against evolving threats. Embracing this paradigm shift is essential for success in the future of software development.