Introduction

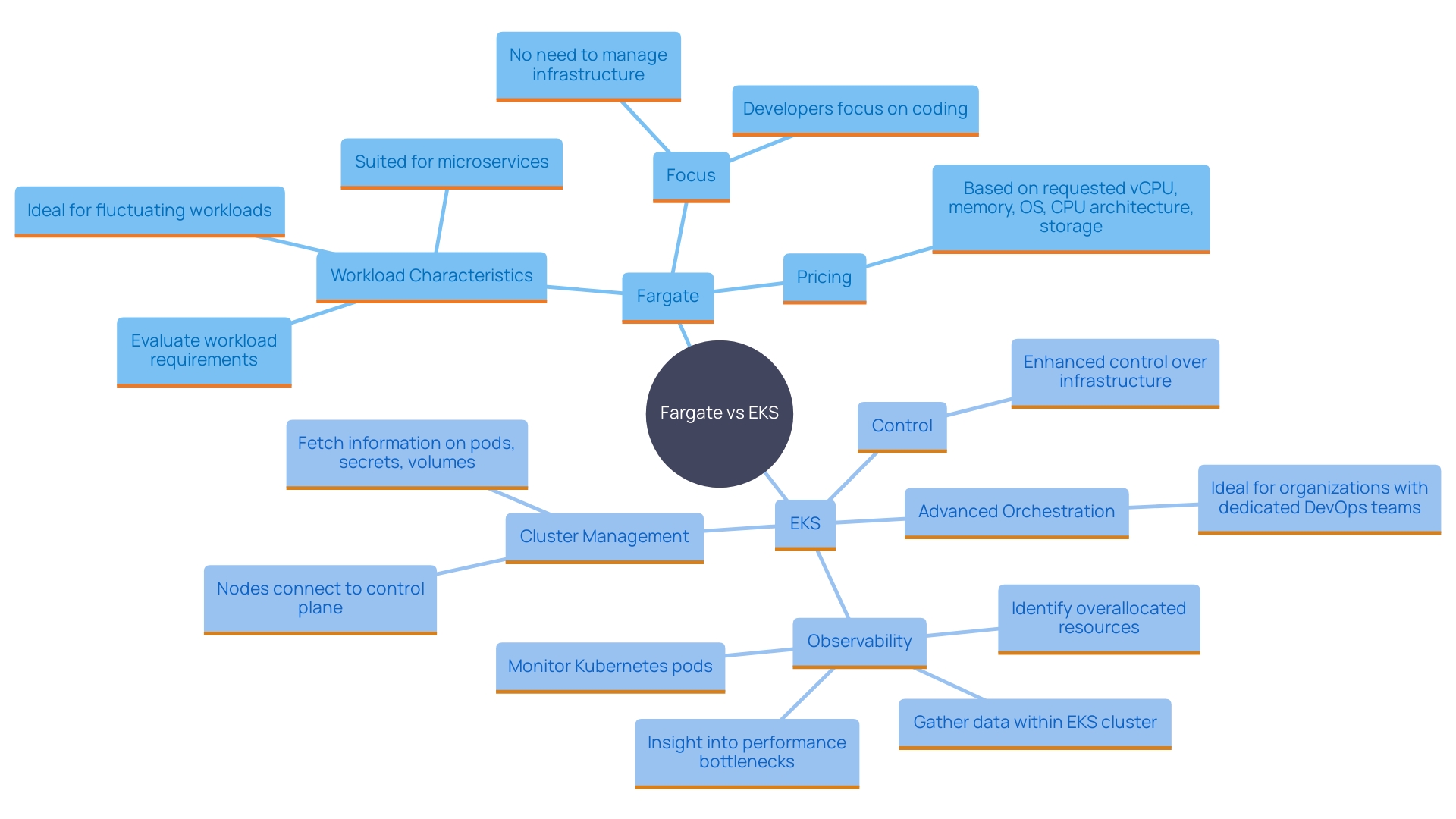

In the rapidly evolving landscape of cloud computing, selecting the right container management solution is crucial for optimizing resource allocation, cost efficiency, and operational scalability. AWS Fargate and Amazon Elastic Kubernetes Service (EKS), two prominent services from Amazon Web Services (AWS), both address these needs but differ significantly in their approach and use cases. Fargate, a serverless compute engine, abstracts away the underlying infrastructure so users can deploy containers without managing servers.

This model simplifies operations and scales automatically based on demand. Conversely, EKS, a managed Kubernetes service, provides a comprehensive environment for running Kubernetes applications, offering greater control and customization through its robust orchestration capabilities. This article delves into the nuances of Fargate and EKS, comparing their deployment models, pricing structures, scalability, performance, and security considerations to guide organizations in making informed decisions based on their specific requirements and workloads.

Overview of Fargate and EKS

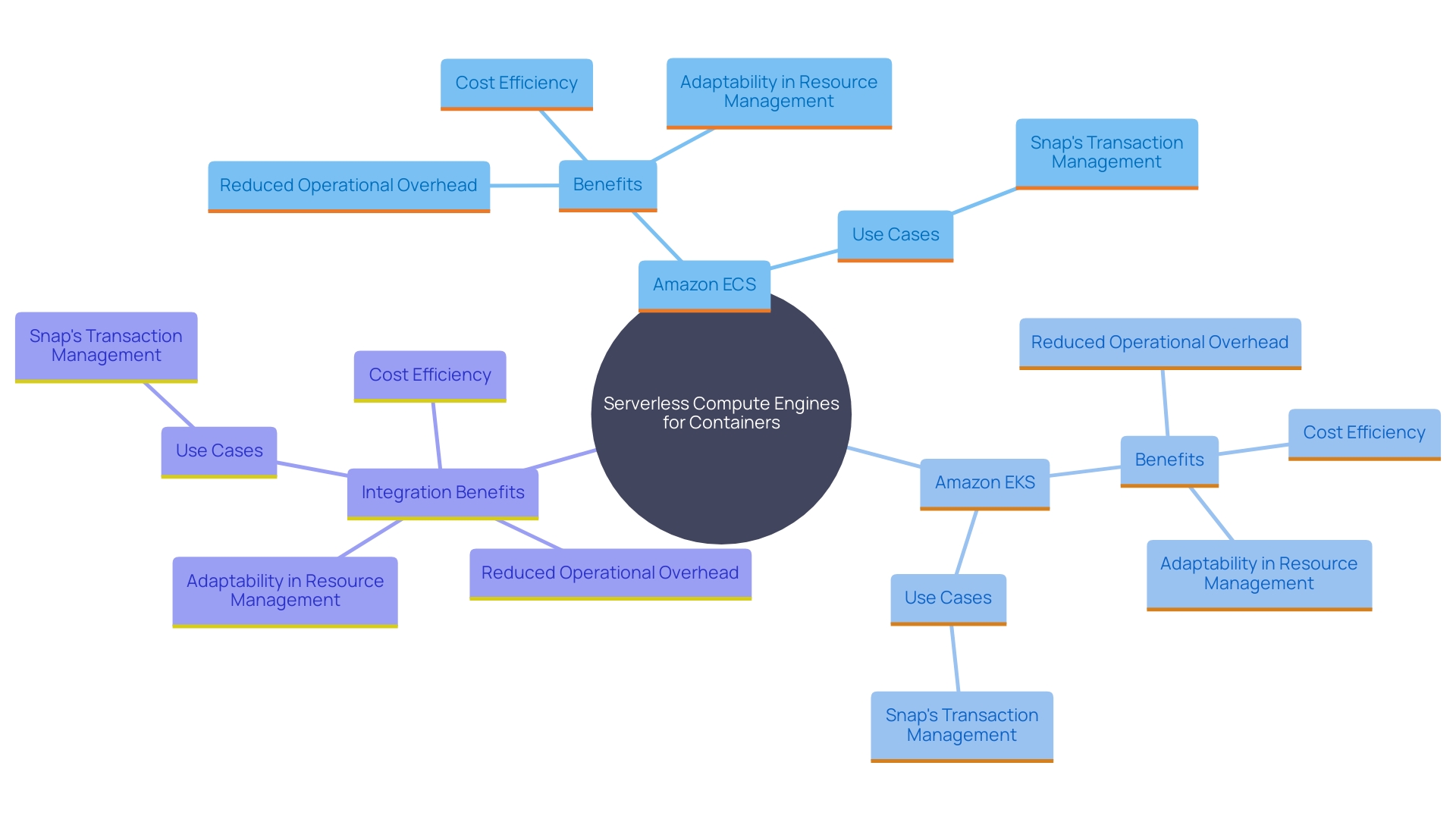

AWS Fargate, the serverless compute engine for containers, works with both Amazon ECS and Amazon EKS and lets users run containers without managing the underlying infrastructure. This abstraction simplifies deployment and scaling, so organizations can focus on application development rather than on the complexities of server management. With Fargate, users pay only for the vCPU and memory their containers request while they run, which eliminates upfront expenses and can reduce overall costs.

Amazon EKS, a managed Kubernetes service, runs the Kubernetes control plane for you, removing the operational overhead of managing it. EKS builds on the Kubernetes ecosystem, offering powerful orchestration features and broad support for containerized workloads. For instance, Snap uses EKS to handle over 2 million transactions per second and reports a 77% reduction in developer effort for launching new microservices, which illustrates how EKS can streamline operations and improve productivity.

Both Fargate and EKS offer flexibility in managing compute resources. An EKS cluster can run its pods on EC2 instances, on Fargate, or on a mix of the two, depending on specific needs. This hybrid approach lets organizations allocate resources deliberately and control costs: for instance, a cluster can run some pods on EC2 nodes while others run on Fargate, keeping resource utilization efficient.
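On EKS, the split between EC2 and Fargate is governed by Fargate profiles: each profile holds selectors made of a namespace and optional labels, and a pod is scheduled onto Fargate when it matches all the conditions of any selector in any profile. The Python sketch below models only that matching rule; the `Selector`, `Pod`, `selector_matches`, and `runs_on_fargate` names and the `batch`/`web` profiles are hypothetical, not part of any AWS SDK. Namespace wildcards are approximated with `fnmatchcase`, which also accepts `[...]` patterns that Fargate profiles may not, and label values are compared literally.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass
class Selector:
    """Hypothetical model of one Fargate profile selector."""
    namespace: str  # may use * and ? wildcards
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class Pod:
    """Hypothetical, minimal view of a pod: its namespace and labels."""
    namespace: str
    labels: dict[str, str] = field(default_factory=dict)


def selector_matches(sel: Selector, pod: Pod) -> bool:
    # The namespace must match, and every selector label must be present on
    # the pod with the same value; extra pod labels do not matter.
    if not fnmatchcase(pod.namespace, sel.namespace):
        return False
    return all(pod.labels.get(key) == value for key, value in sel.labels.items())


def runs_on_fargate(pod: Pod, profiles: dict[str, list[Selector]]) -> bool:
    # True if any selector in any profile matches; otherwise the pod is
    # left for the cluster's EC2 nodes.
    return any(selector_matches(sel, pod)
               for selectors in profiles.values()
               for sel in selectors)


if __name__ == "__main__":
    profiles = {
        "batch": [Selector(namespace="batch-*")],
        "web": [Selector(namespace="prod", labels={"compute": "fargate"})],
    }
    print(runs_on_fargate(Pod("batch-nightly"), profiles))                               # True
    print(runs_on_fargate(Pod("prod", {"compute": "fargate", "app": "api"}), profiles))  # True
    print(runs_on_fargate(Pod("prod", {"app": "api"}), profiles))                        # False
```

In this setup, every pod in a `batch-*` namespace lands on Fargate, while pods in `prod` stay on EC2 unless they opt in with the `compute: fargate` label.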

By combining Fargate's serverless compute with EKS's managed Kubernetes service, organizations can build a scalable, cost-effective container platform. Monitoring and observability can be added with services such as Amazon Managed Service for Prometheus and Amazon Managed Grafana, rounding out a comprehensive and efficient container management strategy.

Key Differences: Fargate vs EKS

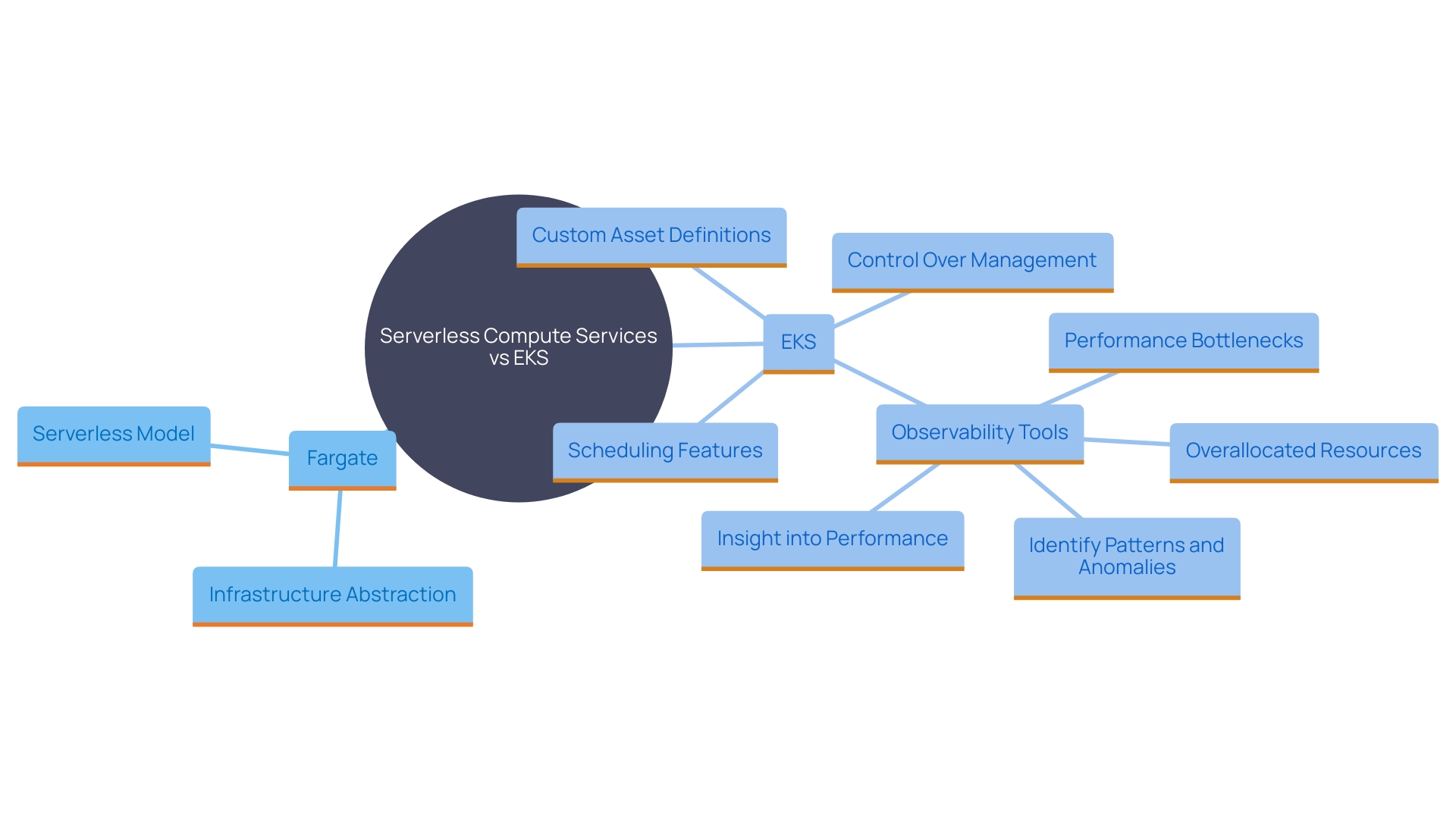

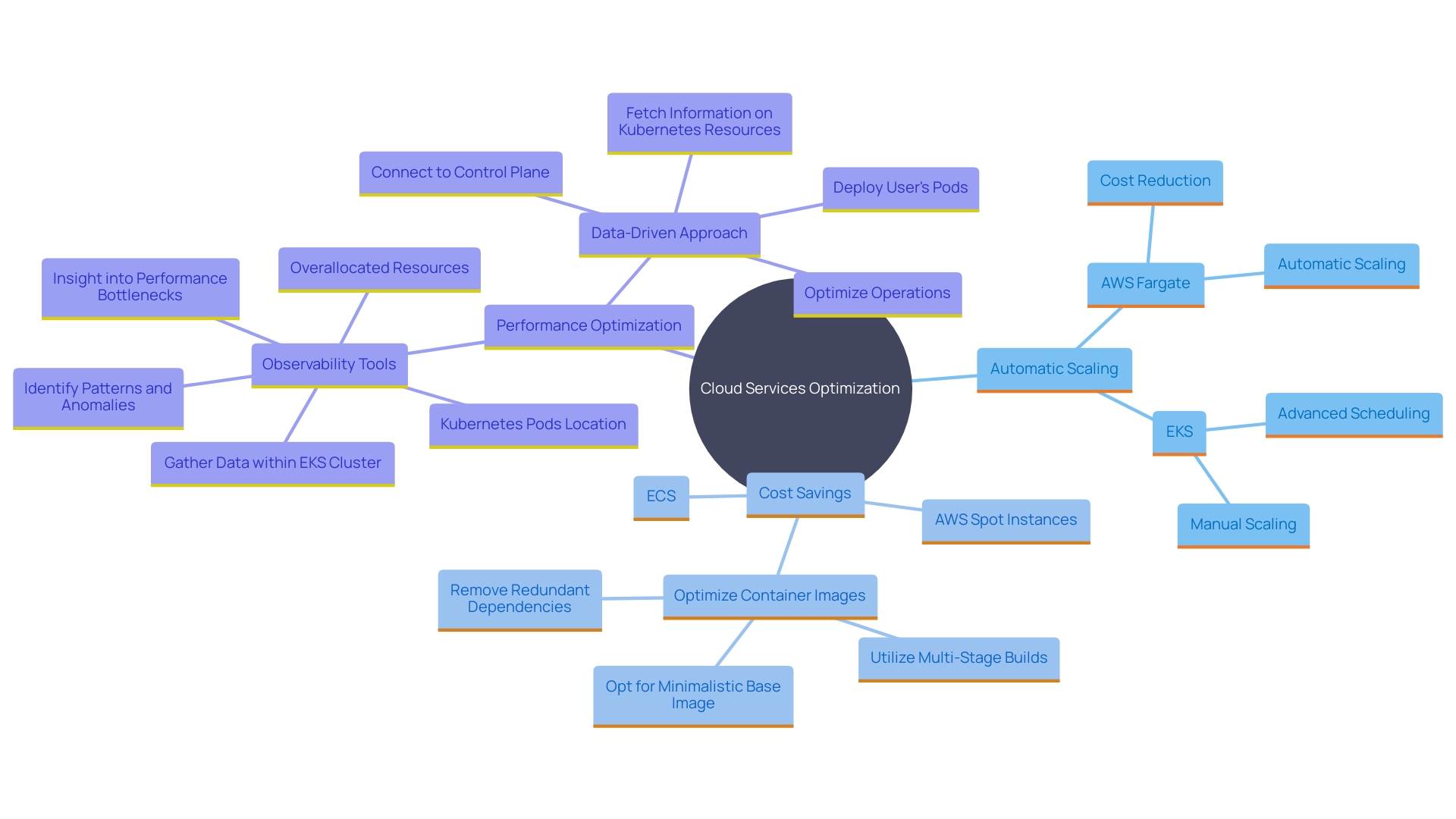

The main difference between Fargate and EKS lies in their operational models. Strictly speaking, Fargate is a compute engine rather than an orchestrator: it runs tasks scheduled by ECS or pods scheduled by EKS, and it abstracts infrastructure management so users can concentrate on container configuration and application logic. The result is a serverless operational model with no clusters of servers to manage. EKS, on the other hand, provides a full Kubernetes orchestration environment in which users have more control and can draw on the broad Kubernetes ecosystem. This includes custom resource definitions (CRDs), advanced scheduling features, and observability tools that gather data from the EKS cluster and surrounding AWS infrastructure to surface performance bottlenecks and overallocated resources. Each node in an EKS cluster communicates with the control plane to retrieve the Kubernetes objects it needs, such as pods, secrets, and volumes. In short, Fargate simplifies operations by removing the need to manage underlying infrastructure, while EKS offers a robust platform for users seeking more control and flexibility in their container management strategy.

Deployment Models

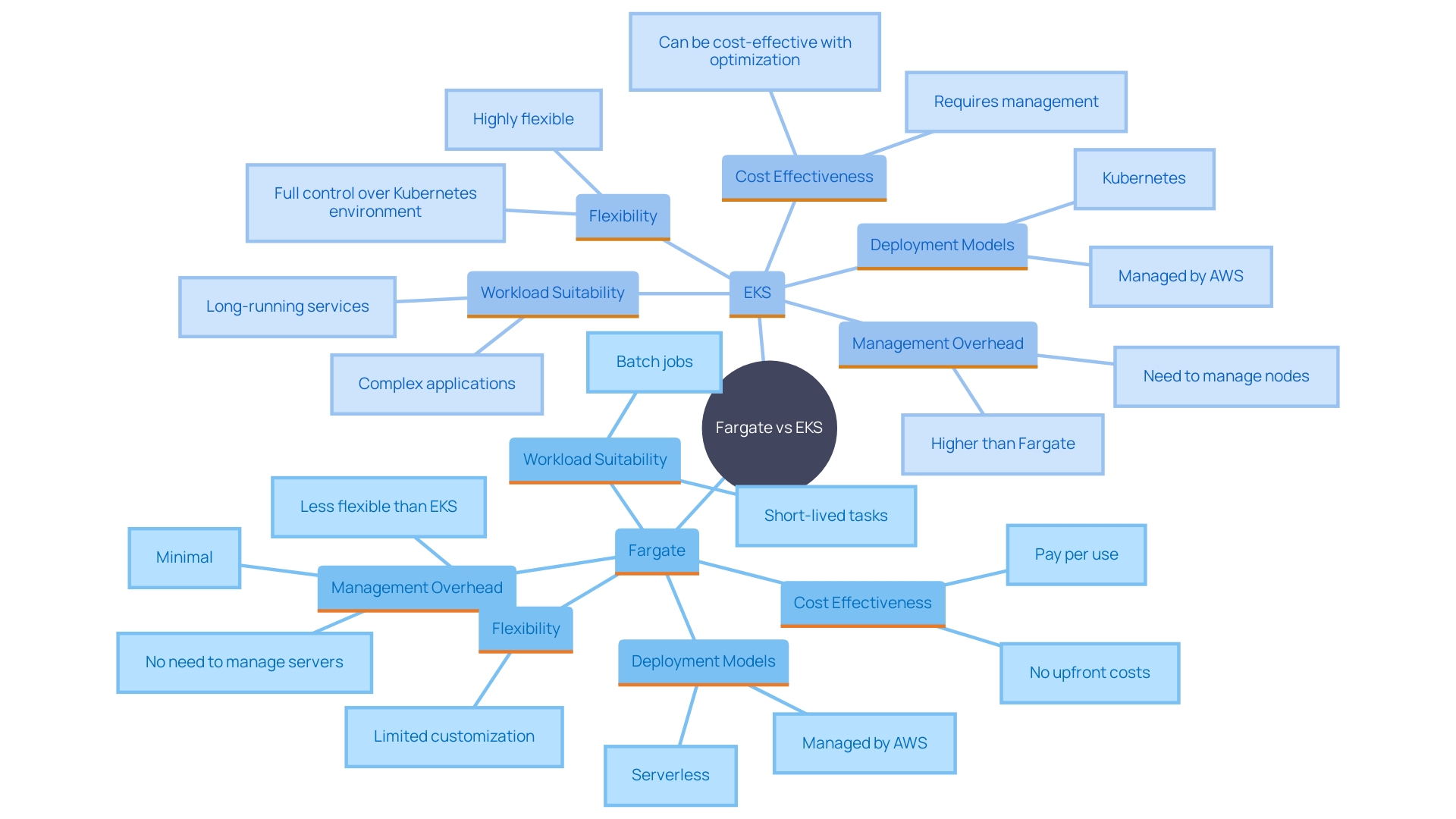

Fargate follows a serverless deployment model: it provisions compute for each task or pod on demand, which makes it a good fit for applications with unpredictable workloads. Because users pay only for the resources their running tasks request, this model can be cost-effective, particularly when demand is uneven. EKS, by contrast, uses a cluster-based deployment model in which users run worker nodes (self-managed or in managed node groups) to host their pods, unless those pods are placed on Fargate. This approach provides greater flexibility and control but adds management overhead, and it is better suited to stable, predictable workloads where performance optimization is paramount.

Pricing and Cost Comparison

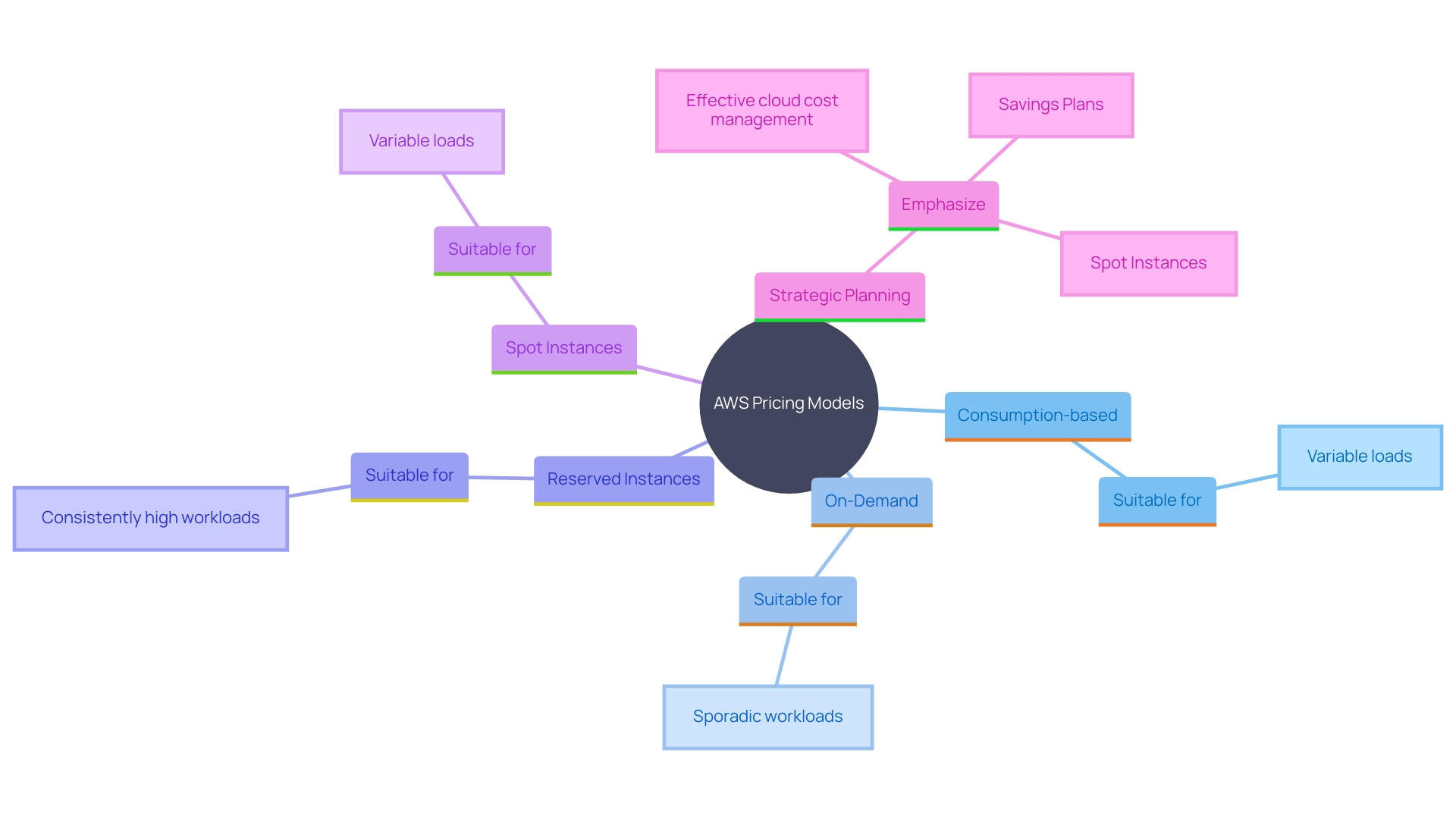

Fargate's consumption-based pricing charges for the vCPU and memory that each task or pod requests while it runs, offering potential savings for applications with variable load. This aligns well with workloads that experience fluctuating demand, since organizations pay only for what they run. Amazon EKS, in contrast, charges an hourly fee for each cluster's managed control plane, plus the cost of the EC2 instances that serve as Kubernetes worker nodes. For consistently high workloads, this two-part cost can be reduced through instance right-sizing and commitment discounts such as Reserved Instances, potentially making EKS on EC2 the more cost-effective option. For sporadic workloads, however, Fargate's pay-per-use pricing can lead to better economic outcomes, so costs should always be compared against the specific use case.
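To make the trade-off concrete, the sketch below compares a month of Fargate usage with an EKS cluster on EC2 for one hypothetical workload: 16 tasks of 1 vCPU / 4 GB, assumed to pack onto four 4 vCPU / 16 GB nodes. Every price constant is an illustrative placeholder, not a current AWS rate. The model ignores node autoscaling, Spot, commitment discounts, and per-node system overhead, and the Fargate figures assume ECS, which has no cluster fee; Fargate pods under EKS would also pay the per-cluster fee.

```python
HOURS_PER_MONTH = 730

# Placeholder prices for illustration only; look up current regional rates.
FARGATE_VCPU_HOUR = 0.04    # USD per vCPU-hour (assumed)
FARGATE_GB_HOUR = 0.0045    # USD per GB-hour (assumed)
EKS_CLUSTER_HOUR = 0.10     # USD per cluster-hour (assumed)
EC2_NODE_HOUR = 0.17        # USD per hour for a 4 vCPU / 16 GB node (assumed)


def fargate_monthly(task_hours: float, vcpu: float, memory_gb: float) -> float:
    """Fargate bills each task for its requested vCPU and memory only while it runs."""
    return task_hours * (vcpu * FARGATE_VCPU_HOUR + memory_gb * FARGATE_GB_HOUR)


def eks_ec2_monthly(nodes: int) -> float:
    """EKS on EC2 pays the cluster fee plus every node all month, busy or idle."""
    return HOURS_PER_MONTH * (EKS_CLUSTER_HOUR + nodes * EC2_NODE_HOUR)


if __name__ == "__main__":
    # Steady: all 16 tasks run around the clock.
    steady_task_hours = 16 * HOURS_PER_MONTH
    # Spiky: 16 tasks for 25% of the month, 2 tasks the rest of the time.
    spiky_task_hours = 16 * HOURS_PER_MONTH * 0.25 + 2 * HOURS_PER_MONTH * 0.75
    print(f"Fargate, steady: ${fargate_monthly(steady_task_hours, 1, 4):.2f}")
    print(f"Fargate, spiky:  ${fargate_monthly(spiky_task_hours, 1, 4):.2f}")
    print(f"EKS on EC2, 4 nodes sized for peak: ${eks_ec2_monthly(4):.2f}")
```

With these placeholder numbers, the always-busy workload is cheaper on EC2 ($569.40 versus $677.44 on Fargate), while the spiky one is cheaper on Fargate ($232.87), because the EC2 nodes are paid for around the clock whether or not tasks are running.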

To improve cost efficiency further, organizations can combine AWS's pricing models, such as On-Demand, Reserved Instances, and Spot Instances. For instance, using Spot Instances with ECS can yield substantial savings for workloads with flexible start and end times, provided the workload can tolerate Spot interruptions. According to recent reports, organizations are increasingly exploring these cost-saving opportunities, with a noticeable shift toward Savings Plans and Spot Instances following changes to AWS's policies on discounted Reserved Instances. This trend highlights the need for strategic planning in cloud cost management to achieve good resource utilization and cost savings.
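The effect of mixing On-Demand and Spot capacity can be estimated with a simple weighted average. The sketch below assumes a flat Spot discount, which is a simplification: real Spot prices vary by instance type, Availability Zone, and time, and Spot capacity can be reclaimed with a two-minute warning. The $0.20/hour rate and 70% discount in the example are hypothetical values.

```python
def blended_hourly_rate(on_demand_rate: float, spot_fraction: float,
                        spot_discount: float) -> float:
    """Average price per node-hour when part of the fleet runs on Spot.

    spot_fraction: share of node-hours served by Spot (0 to 1).
    spot_discount: assumed Spot discount versus On-Demand (0 to 1).
    """
    for name, value in (("spot_fraction", spot_fraction), ("spot_discount", spot_discount)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    spot_rate = on_demand_rate * (1.0 - spot_discount)
    return (1.0 - spot_fraction) * on_demand_rate + spot_fraction * spot_rate


if __name__ == "__main__":
    # Hypothetical node: $0.20/hour On-Demand, half the fleet on Spot at an assumed 70% discount.
    rate = blended_hourly_rate(0.20, spot_fraction=0.5, spot_discount=0.7)
    print(f"blended: ${rate:.3f}/hour")  # blended: $0.130/hour
```

The same arithmetic applies to Savings Plans or Reserved Instances by substituting their discount for the Spot discount, minus the interruption risk.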

Scalability and Performance

Fargate provisions capacity per task or pod, so total compute grows and shrinks with the number of running tasks. Paired with ECS Service Auto Scaling or the Kubernetes Horizontal Pod Autoscaler, this is especially beneficial for applications that must scale quickly to meet changing demand, such as services that deliver real-time updates and must stay highly responsive. Because clients pay only for the resources their tasks request, the savings can be considerable: one reported case attributes a 99.9% cost reduction to moving from fixed server setups to AWS Fargate, although results depend heavily on how underutilized the original servers were.
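Paying only for what is used has a nuance on EKS: Fargate bills for the configuration it provisions for a pod, which is the pod's request rounded up. The sketch below models that rounding under stated assumptions: a subset of the vCPU/memory combinations listed in the EKS documentation at the time of writing, the 256 MB that Fargate adds to each pod's memory for kubelet, kube-proxy, and containerd, and a simplified "smallest listed configuration that fits" rule. It is not AWS's exact algorithm, and `pod_request` and `fargate_size` are hypothetical helper names.

```python
from collections.abc import Sequence

# Subset of the vCPU/memory combinations Fargate offers EKS pods (memory in GB),
# assumed from the EKS documentation at the time of writing; check current docs.
FARGATE_CONFIGS = [
    (0.25, [0.5, 1, 2]),
    (0.5, [1, 2, 3, 4]),
    (1, list(range(2, 9))),    # 2-8 GB in 1 GB steps
    (2, list(range(4, 17))),   # 4-16 GB in 1 GB steps
    (4, list(range(8, 31))),   # 8-30 GB in 1 GB steps
]
K8S_OVERHEAD_GB = 0.25  # 256 MB added for kubelet, kube-proxy, and containerd


def pod_request(containers: Sequence[tuple[float, float]],
                init_containers: Sequence[tuple[float, float]] = ()) -> tuple[float, float]:
    """Effective (vcpu, memory_gb) for a pod, per resource: the larger of the
    summed app-container requests and the largest single init-container request."""
    vcpu = max(sum(c[0] for c in containers),
               max((c[0] for c in init_containers), default=0))
    mem = max(sum(c[1] for c in containers),
              max((c[1] for c in init_containers), default=0))
    return vcpu, mem


def fargate_size(vcpu: float, memory_gb: float) -> tuple[float, float]:
    """Round a pod request up to the smallest listed configuration that fits."""
    needed_mem = memory_gb + K8S_OVERHEAD_GB
    for tier_vcpu, memories in FARGATE_CONFIGS:
        if tier_vcpu < vcpu:
            continue  # not enough vCPU in this tier
        for mem in memories:
            if mem >= needed_mem:
                return tier_vcpu, mem
    raise ValueError("request exceeds the configurations listed here")


if __name__ == "__main__":
    print(fargate_size(1, 2))     # (1, 3): 2 GB + 256 MB rounds up to 3 GB
    print(fargate_size(0.25, 3))  # (0.5, 4): the 0.25 vCPU tier tops out at 2 GB
    print(fargate_size(*pod_request([(0.5, 1), (0.25, 0.5)], [(1, 0.5)])))  # (1, 2)
```

The practical takeaway is that a pod requesting exactly 2 GB is billed as a 3 GB pod here, so right-sizing requests just under a tier boundary can matter as much as the scaling behavior itself.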

EKS can also scale, but it may require manual intervention or custom configuration to perform well, such as tuning the Horizontal Pod Autoscaler and adding a node autoscaler like Cluster Autoscaler or Karpenter. In return, EKS exposes Kubernetes' sophisticated scheduling and resource allocation features, which can deliver better performance for complex applications that need precise resource management. Observability tools within an EKS cluster can identify performance bottlenecks and overallocated resources, helping to optimize cost and efficiency. Running EKS worker nodes on AWS Spot Instances can further reduce expenses and increase operational efficiency.
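On EKS, pod-level scaling is usually handed to the Kubernetes Horizontal Pod Autoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio falls within a tolerance (0.1 by default). The Python function below is a simplified sketch of that rule only: it ignores the stabilization windows, scaling policies, and not-yet-ready pods that the real controller accounts for, and its default `min_replicas`/`max_replicas` bounds are arbitrary example values.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA rule: desired = ceil(current * currentMetric / targetMetric).

    current_metric is the average across pods (for example, CPU utilization
    as a percentage of requests). Ratios within `tolerance` of 1.0 leave the
    replica count unchanged; the result is clamped to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))  # 6: 90% CPU against a 60% target
    print(desired_replicas(4, 63, 60))  # 4: within the 10% tolerance
```

New replicas still need somewhere to run: on EC2-backed clusters a node autoscaler has to add capacity, whereas pods scheduled onto Fargate get compute provisioned per pod.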

At GoDaddy, a data-driven approach has been instrumental in optimizing batch processing jobs, demonstrating how structured methodologies improve operational efficiency. This focus on efficiency and customer satisfaction highlights the value of technologies such as container orchestration and serverless computing for building scalable, economical solutions.

Security Considerations

Fargate isolates each task or pod at the infrastructure level, which improves security by minimizing the attack surface, and because users do not manage the underlying servers, there is less room for configuration errors. Identity and Access Management remains crucial in either model: internal applications typically sync identities from an identity provider (IdP), while external ones rely on authentication standards such as SAML, OAuth, or OpenID Connect. EKS relies on Kubernetes' strong security features, such as role-based access control (RBAC) and network policies, but these must be implemented at both the cluster and node levels, which increases complexity. That complexity shows in practice: by one report, only 9% of clusters use network policies to separate traffic, which makes Kubernetes a prime target for security risks. The choice between Fargate and EKS therefore depends on an organization's security posture: Fargate offers simplicity and a lighter management burden, whereas EKS requires more active security work but provides finer control.
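Given how few clusters use network policies, a common starting point is a default-deny ingress policy per namespace, after which specific allow rules are added. The sketch below builds the standard Kubernetes `NetworkPolicy` manifest as a Python dict and prints it as JSON, a format `kubectl apply -f -` accepts. The `payments` namespace and the `default_deny_ingress` helper are hypothetical, and the policy only takes effect if the cluster runs a network policy implementation (for example, the Amazon VPC CNI's network policy support or Calico).

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that blocks all inbound traffic to every pod in `namespace`.

    An empty podSelector selects all pods in the namespace; listing "Ingress"
    in policyTypes without any ingress rules allows no inbound traffic.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


if __name__ == "__main__":
    # Pipe this output to `kubectl apply -f -` to create the policy.
    print(json.dumps(default_deny_ingress("payments"), indent=2))
```

Starting from deny-all and then allowing specific flows keeps the traffic separation explicit, which is the practice the statistic above suggests most clusters skip.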

Use Cases: When to Use Fargate vs EKS

Fargate is well suited to applications with fluctuating workloads, microservices architectures, and teams aiming to reduce operational overhead. This serverless solution lets developers concentrate on programming instead of overseeing infrastructure, much like flying on autopilot while the service handles server and cluster management. Dunelm's case illustrates how Fargate can simplify scaling and reduce the complexity of ownership and communication across multiple teams, ultimately shortening lead time for changes.

EKS, conversely, suits organizations that need sophisticated orchestration, customized Kubernetes configurations, and greater control over their environments. It is especially beneficial for businesses with dedicated DevOps teams that can operate and improve Kubernetes clusters. Like a Swiss Army knife, EKS offers the flexibility and extensibility complex applications need, with a wide range of tools and plugins that appeal to Kubernetes enthusiasts. Observability tools within an EKS cluster can reveal performance bottlenecks and overallocated resources, helping pods be placed efficiently. This level of control and customization is essential for businesses that need to adapt quickly and optimize their infrastructure proactively.

Conclusion

In cloud computing, the choice between Fargate and EKS is a pivotal decision for organizations seeking efficient container management. Fargate's serverless model simplifies deployment and removes infrastructure complexity, making it particularly advantageous for applications with variable workloads. Because users pay only for the resources their tasks consume, Fargate can deliver significant cost savings and operational efficiency. This makes it an ideal choice for businesses focused on reducing overhead while keeping their development processes agile.

Conversely, EKS provides a robust Kubernetes environment that offers greater control and customization. Organizations that require advanced orchestration capabilities and have dedicated DevOps teams will find EKS beneficial for managing complex applications. The ability to leverage Kubernetes' extensive ecosystem, coupled with observability tools for performance monitoring, positions EKS as a powerful solution for enterprises that prioritize optimization and resource management.

Ultimately, the decision to utilize Fargate or EKS should be guided by specific use cases and organizational needs. Fargate excels in scenarios demanding rapid scaling and minimal management, while EKS caters to those seeking in-depth control and flexibility. By understanding the unique strengths of each service, organizations can make informed choices that align with their operational goals and resource strategies, ensuring they harness the full potential of cloud computing in their container management endeavors.