Introduction

In the fast-paced world of software development, DevOps has emerged as a crucial element of modern IT architecture. It goes beyond just a set of tools and encompasses a multidisciplinary approach that integrates development and operations. By embracing key components such as version control, continuous integration and delivery, microservices architecture, infrastructure as code, monitoring and logging, and security, businesses can achieve agility and efficiency in their software delivery processes.

DevOps practices enable organizations to innovate faster, adapt to changing markets, and drive better business results. The integration of AI-powered tools further enhances these practices. This article explores the core components of DevOps architecture and highlights their benefits in reshaping software development strategies to meet the demands of today's digital world.

It also delves into the planning and tracking phase, development phase, testing phase, deployment phase, and management phase, offering insights into each stage of the DevOps lifecycle. By following best practices and selecting the right tools and platforms, organizations can effectively implement DevOps architecture and achieve operational excellence.

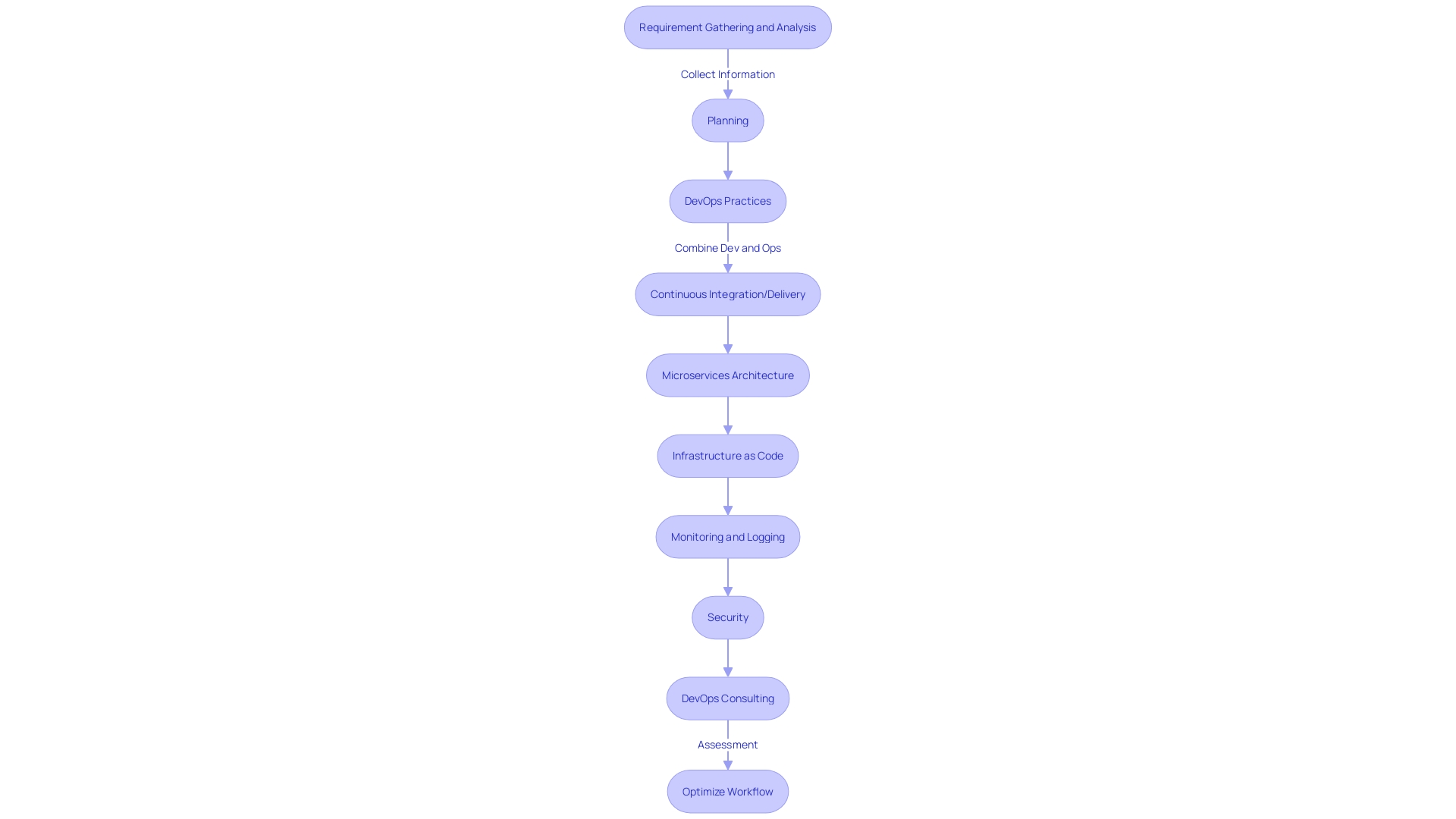

Key Components of DevOps Architecture

As software development continues to evolve, DevOps has established itself as a cornerstone of modern IT architecture. The seamless integration of development and operations is pivotal for businesses seeking agility and efficiency in their software delivery processes. DevOps is not simply a collection of tools; it is a multidisciplinary approach that demands a strategic understanding of its foundational elements.

The core components of an effective DevOps architecture include:

- Version Control: Central to any DevOps strategy is a robust version control system. Adaptations of well-known workflows such as Git-Flow, GitHub Flow, or a customized combination enable teams to manage changes to the codebase systematically, ensuring that the content of the development branch is always synchronized with the testing environment.
- Continuous Integration and Delivery (CI/CD): These practices are the core of modern software development. Continuous Integration ensures that code changes are automatically tested and merged into a shared repository, while Continuous Delivery enables the automated deployment of applications to selected infrastructure environments. The infinity loop concept promotes a culture of ongoing improvement and frequent updates, crucial for maintaining high application quality.
- Microservices Architecture: By decomposing a large application into smaller, independently deployable services, a microservices architecture enhances fault tolerance and scalability. Languages and frameworks such as C#, TypeScript, Django, .NET, and Spring play significant roles in building robust microservices for tasks like user registration and authentication.
- Infrastructure as Code (IaC): This enables teams to automatically manage and provision infrastructure through code rather than manual processes, ensuring that environments are consistent and changes are traceable.
- Monitoring and Logging: Continuous monitoring of applications and infrastructure helps to promptly identify and address issues. Logging provides the necessary insights into system performance and user activities, thereby enhancing operational intelligence.
- Security: Known as DevSecOps when tightly integrated with the development process, security is a priority at every stage of the application lifecycle. It involves practices to safeguard applications and data both at rest and in transit, and to manage access control.

Embracing these components not only accelerates the development lifecycle but also supports continuous delivery with high software quality. According to industry statistics, DevOps practices enable businesses to innovate faster, adapt to changing markets more effectively, and drive better business results through increased velocity. Furthermore, AI-driven tools are becoming increasingly prominent, offering new opportunities to enhance software development practices.

By leveraging the potential of modern software development practices, organizations can transform their strategies to meet the requirements of today's rapidly evolving digital landscape, as demonstrated by the case studies of companies implementing these principles to achieve impressive results.

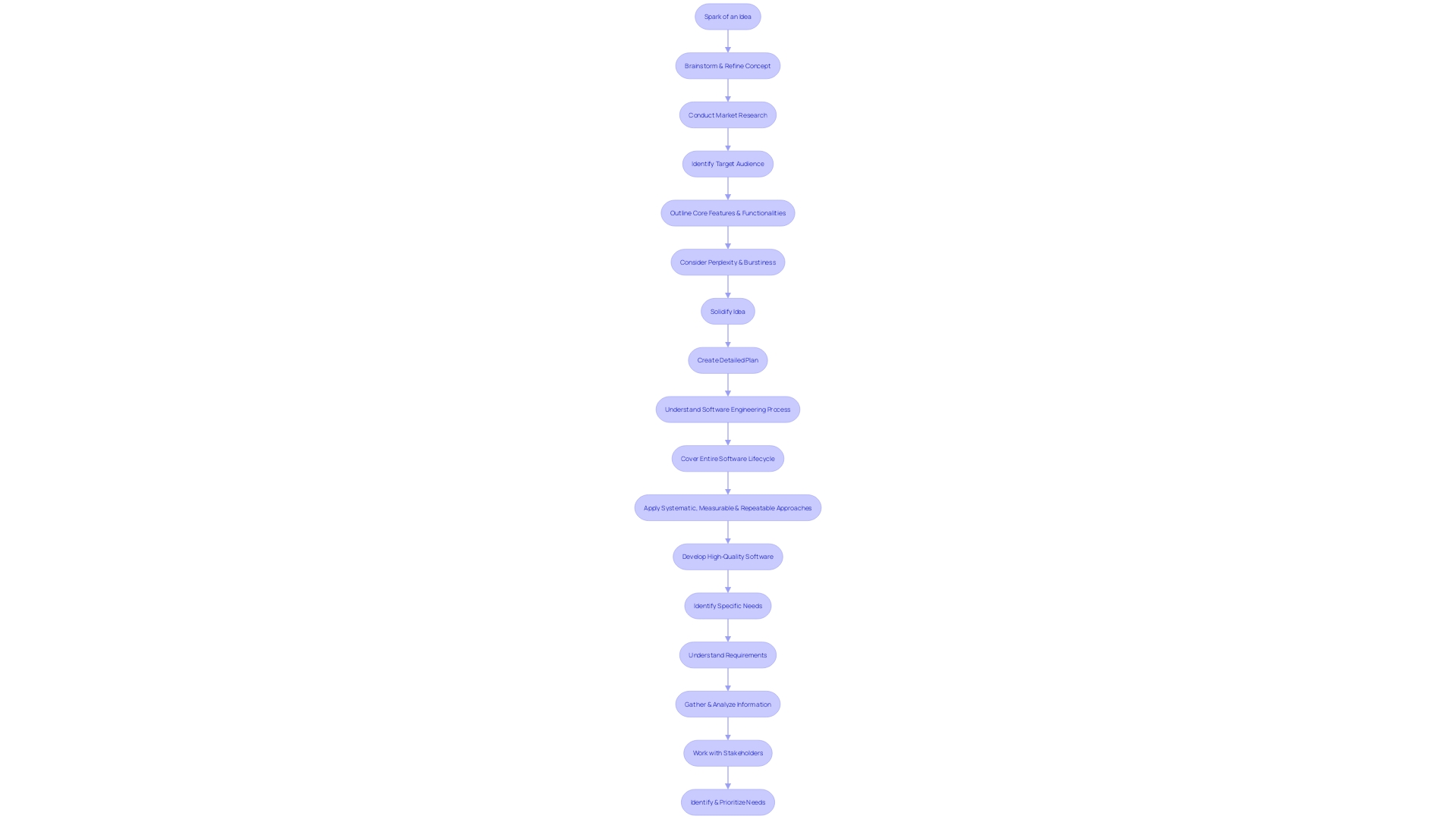

Planning and Tracking

A collaborative and efficient approach to software development and operations begins with meticulous planning and tracking, a fundamental phase that lays the groundwork for the entire pipeline. During this stage, teams articulate their vision by setting clear objectives and benchmarks for the project. By identifying crucial tasks and activities, they can create a comprehensive roadmap through the software development and operations lifecycle.

In practice, platforms such as Jira Cloud and GitLab have become instrumental in this phase, aiding in the planning of product milestones and releases. However, even with advanced integrations, certain processes like application releases still encounter manual bottlenecks. By leveraging automation, teams can refine these workflows, exemplified by the streamlined release processes achieved through custom integration solutions.

Understanding the importance of metrics and measurement at this early stage is crucial. Consider the insights from the DORA metrics analysis of OpenStreetMap, which provided a granular look at deployment frequency and lead time for changes, two key indicators of a team's DevOps health. These metrics serve as a benchmark for teams aspiring to optimize their software delivery and operations.
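To make the two metrics named above concrete, here is a minimal sketch of how deployment frequency and lead time for changes might be computed. The deployment records, the seven-day window, and the function names are hypothetical and chosen only for illustration; they are not taken from the OpenStreetMap analysis.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical records: (time a change was committed, time it reached production).
DEPLOYMENTS = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 15, 0)),   # 6 hours
    (datetime(2024, 1, 2, 10, 0), datetime(2024, 1, 3, 10, 0)),  # 24 hours
    (datetime(2024, 1, 4, 8, 0), datetime(2024, 1, 4, 20, 0)),   # 12 hours
    (datetime(2024, 1, 7, 9, 0), datetime(2024, 1, 7, 11, 0)),   # 2 hours
]


def deployment_frequency(deployments, window_days):
    """Average number of production deployments per day over the window."""
    return len(deployments) / window_days


def median_lead_time(deployments):
    """Median time between committing a change and deploying it."""
    return median(deployed - committed for committed, deployed in deployments)


if __name__ == "__main__":
    print(deployment_frequency(DEPLOYMENTS, window_days=7))
    print(median_lead_time(DEPLOYMENTS))
```

The median is used here rather than the mean so that a single unusually slow change does not dominate the figure; teams may reasonably choose a different aggregate.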

Furthermore, DevOps is more than technology adoption; it is a cultural movement focused on continuous improvement. Continuous integration (CI) practices, in which developers run tests locally before committing code, embody this philosophy. Such practices not only enhance the quality and speed of development but also foster a culture of shared responsibility and transparency.

The DevOps field is constantly changing, with configuration management tools emerging as a crucial element for project managers and software developers. These tools, distinguished by their user-friendly interfaces and ease of use, are essential for managing and automating configurations, a testament to the ongoing innovation in the field.

As the DevOps ecosystem continues to expand, the integration of AI tools is also gaining traction. Although it is still in its early stages, AI adoption is poised to transform performance measures and outcomes, representing the next wave of change in IT operations. This blend of culture, practice, and technology paves the way for organizations to not only meet but exceed their strategic objectives, making the planning and tracking phase a cornerstone of DevOps success.

Development Phase

The development phase is more than just the act of writing code; it is the creation and integration of multiple modules and components that form the foundation of the desired solution. This phase turns the initial concept into working software, following a comprehensive plan built on thorough market research, identification of the target audience, and an outline of the required features and functionality. It is a methodical approach grounded in the principles of software engineering, a discipline that emerged in the 1960s to tackle the complexities and challenges of creating efficient, reliable, and cost-effective systems. The development phase embraces these standards to produce high-quality applications whose functionality, reliability, and usability align with user expectations and project objectives. By applying systematic, measurable, and repeatable approaches, this phase marks the pivotal moment where the theoretical meets the practical, shaping the journey from concept to reality.

Testing Phase

In the realm of application development, rigorous testing is not merely a step but a cornerstone for delivering superior software products. It encompasses an array of procedures including unit, integration, and performance testing, each designed to unearth and rectify potential defects. The core of testing lies in its systematic approach to validate that applications meet the intended specifications, behave as expected, and can sustain a variety of operating conditions.
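As a small illustration of unit testing, the sketch below checks one function against its intended behaviour, including an invalid input. The `apply_discount` function and its rules are hypothetical and exist only to give the tests something to verify.

```python
import unittest


def apply_discount(price, percent):
    """Return price reduced by percent; reject percentages outside 0-100."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_tests():
    """Run the test case programmatically and return the unittest result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Integration and performance tests follow the same idea at a larger scope: they state an expectation about the system and fail loudly when it is not met.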

Reflecting on the evolution of technology over the last decade, applications have significantly increased in complexity, demanding testing to be more comprehensive. Users today expect flawless functionality and will swiftly abandon applications that fall short of their expectations. Indeed, a Forbes statistic highlights the gravity of this, revealing that 88% of users are likely to turn away from apps after encountering subpar performance.

From court cases scrutinizing software quality to the gardening analogy of weeding out flaky tests, the importance of meticulous testing is clear. It is the bulwark against the risks and costs associated with software failure, aiming to bolster customer satisfaction and uphold the market reputation of the product. As we traverse a technological landscape that advances at breakneck speed, applications must be robust and reliable to stave off obsolescence.

The World Quality Report's 15-year journey underscores the transformation within the field of quality engineering and testing. It highlights a shift from considering testing as a mere cost center to acknowledging it as a crucial element that adds to the overall worth and durability of products.

Given these observations, it's clear that efficient testing strategies are essential. Organizations like SoftoBiz Technologies exemplify the industry's commitment to this endeavor, offering a suite of testing services that underscore the effectiveness, reliability, and quality of digital products across various sectors.

In the end, the goal of testing is to deliver a product that not only fulfills user requirements but also surpasses them, providing a seamless, bug-free experience that aligns with the rapid pace of innovation in today's technological domain.

Deployment Phase

Deployment is a crucial stage in the development lifecycle, marking the shift from development to production. This step is not merely about transferring the application to live servers but ensuring that it integrates flawlessly into the existing ecosystem, adhering to the highest security standards and compliance requirements. As seen with industry leaders like M&T Bank, deploying software that meets stringent quality standards is paramount to prevent costly and damaging repercussions such as security breaches and financial losses.

For organizations like California State University, tools like Postman are essential for navigating complex systems and ensuring seamless operations. Nevertheless, the full capability of these tools often goes unrecognized because of a lack of exploration and understanding, underscoring the need for comprehensive learning resources such as the Postman Academy.

Deployment strategies have evolved, with continuous deployment emerging as a standard that minimizes the risk of bugs and defects. This approach requires rigorous engineering practices including continuous integration, monitoring, and automated rollback mechanisms to confidently automate deployments. According to experts, continuous deployment is only possible with continuous delivery, emphasizing the need for changes to be production-ready at all times.
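The automated rollback mentioned above can be sketched as follows. This is a simplified illustration, not a production deployment tool: the `deploy` and `is_healthy` callables are hypothetical placeholders that a team would supply for its own platform, and the number of health checks is an arbitrary assumption.

```python
def deploy_with_rollback(new_version, current_version, deploy, is_healthy, checks=3):
    """Deploy new_version and roll back to current_version if a health check fails.

    deploy and is_healthy are caller-supplied callables (hypothetical here).
    Returns the version that is live when the function finishes.
    """
    deploy(new_version)
    for _ in range(checks):
        if not is_healthy(new_version):
            deploy(current_version)  # automated rollback to the last good version
            return current_version
    return new_version


if __name__ == "__main__":
    history = []
    live = deploy_with_rollback("v2", "v1", history.append, lambda version: False)
    print(live, history)
```

Passing the deployment and health-check steps in as functions keeps the rollback logic independent of any particular platform and makes it easy to exercise with fakes.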

Containerization technologies, like Docker, have revolutionized deployment by encapsulating applications with all their dependencies, ensuring consistency across different environments. This innovation aligns with the sentiment in the tech community that we are only in the infancy of AI and its integration into deployment processes. As stated by Eric Siegel, Ph.D., the deployment of machine learning systems is an art that must be mastered for organizations to leverage AI effectively.

In conclusion, deployment is a complex process that requires a careful balance of speed, stability, and adherence to industry standards, as emphasized by the IEEE and reflected in the diverse deployment strategies and tools available to engineers today.

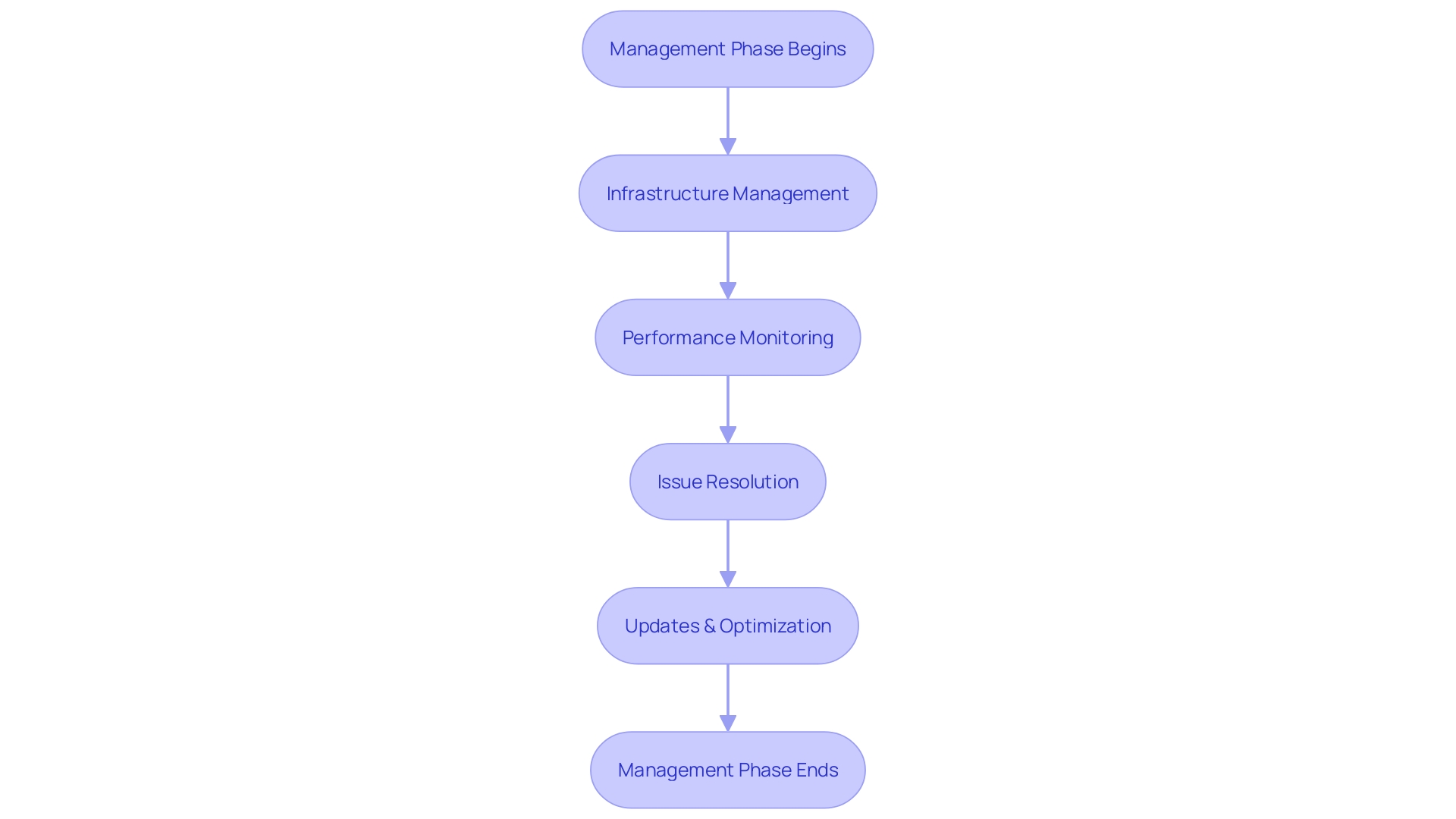

Management Phase

After the deployment, it is crucial to enter the management phase with a clear focus on maintenance, as it ensures the optimization and longevity of the solution. This phase encompasses diligent management of the infrastructure, proactive performance monitoring, and timely responses to emerging issues or necessary updates. The IEEE emphasizes the importance of maintenance, which starts after system deployment and usually represents over half of the total lifecycle costs. It is a process aimed at rectifying defects, enhancing performance or other attributes, and adapting the program to an evolving environment.

The ever-changing nature of software requires continuous vigilance. Recent technological advancements, including AI, cloud computing, and large language models, have not only raised user expectations but also highlighted the importance of maintaining and updating legacy systems. For instance, property management systems (PMSs), although fundamentally unchanged since the 1980s, must now evolve to meet modern standards of efficiency and user experience.

Furthermore, the National Institute of Standards and Technology (NIST) maintains the National Vulnerability Database (NVD), whose growing backlog of vulnerabilities awaiting analysis is a testament to the increasing complexity of software and the associated security risks. The importance of managing these vulnerabilities cannot be overstated, as they are critical to the nation’s cybersecurity infrastructure.

In this context, the management phase is not just a routine procedure; it is a strategic operation that addresses high-priority cyber threats, maintains the integrity of critical infrastructure, and ensures that the application continues to meet the evolving needs of users and the market. It is a testament to the continuous effort required to sustain and advance technology for the benefit of humanity, aligning with the broader goals of the IEEE and the tech community at large.

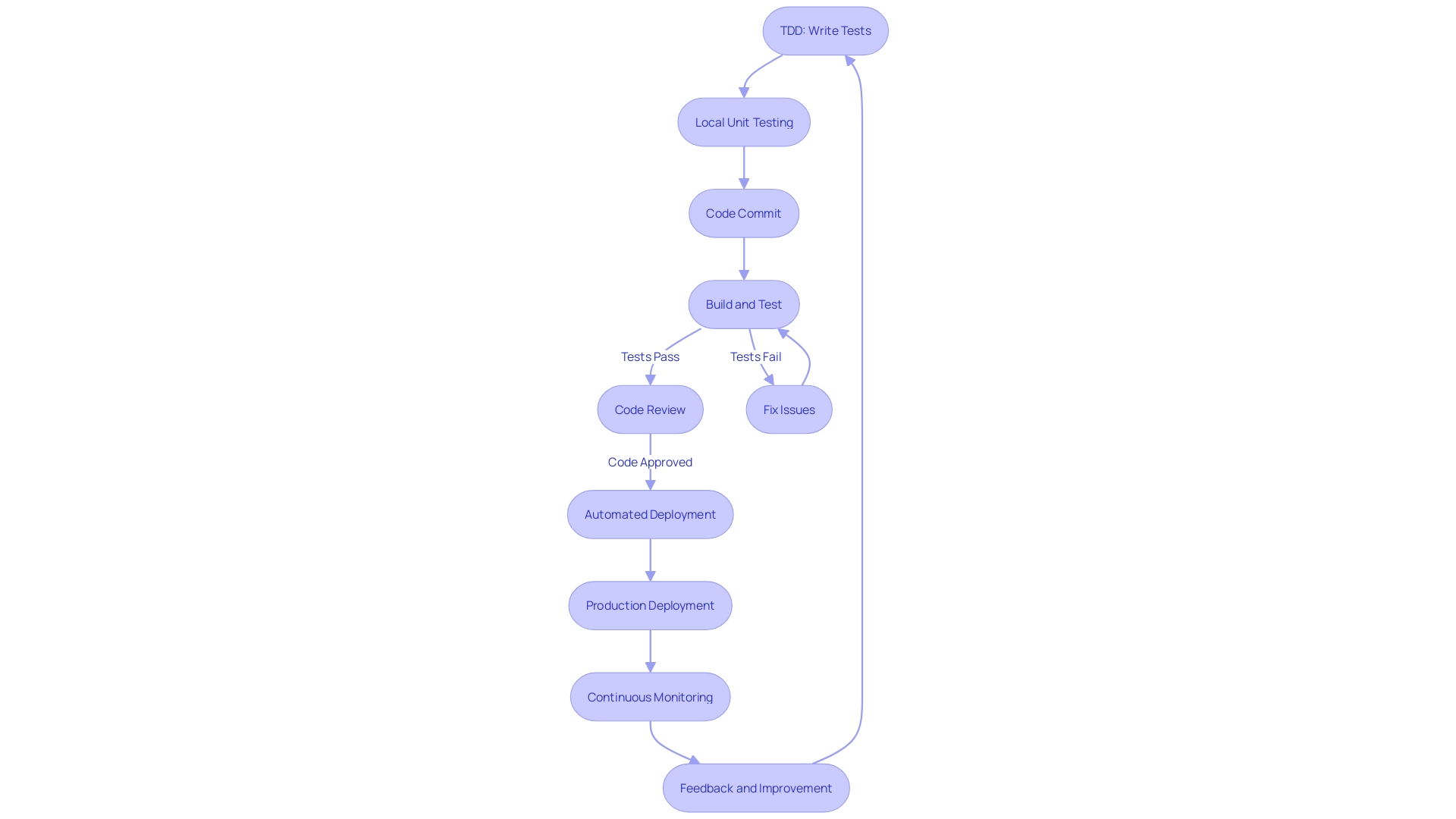

Continuous Integration and Continuous Delivery (CI/CD)

Embracing Continuous Integration (CI) and Continuous Delivery (CD) is more than adopting a set of practices; it is a transformative approach that fosters agility and efficiency across the software development life cycle. CI is not merely about committing code. It embodies a workflow that can begin with Test-Driven Development (TDD), where developers write unit tests to validate their code locally before integrating it into a central repository. This practice ensures that integration occurs smoothly and that any conflicts are identified and resolved promptly, enhancing the quality of the final product.

The CD pipeline extends this quality assurance by automating the path to production, ensuring that software can be released reliably and at a moment's notice. It's essential for teams not just to automate the process but to define a structured test plan that outlines the scope and objectives aligned with development goals. By prioritizing and executing critical tests frequently, organizations ensure that the primary functionalities of their applications remain intact and robust.
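One way to picture such a pipeline is as an ordered list of stages that stops at the first failure, with the most critical tests placed first. The sketch below is a toy model under that assumption; the stage names and the `run_pipeline` function are hypothetical and do not correspond to any specific CI/CD product.

```python
from typing import Callable, List, Tuple

# A stage is a name paired with a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (succeeded, names of the stages that were executed).
    """
    executed = []
    for name, step in stages:
        executed.append(name)
        if not step():
            return False, executed
    return True, executed


if __name__ == "__main__":
    stages = [
        ("critical smoke tests", lambda: True),
        ("unit tests", lambda: True),
        ("deploy to staging", lambda: True),
    ]
    print(run_pipeline(stages))
```

Failing fast means a broken critical test prevents later, more expensive stages such as deployment from running at all.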

The benefits of a well-oiled CI/CD pipeline are evident. According to recent DORA research, the adoption of AI solutions as part of CI/CD processes is increasing. This trend underscores the industry's shift towards tools that offer continuous improvement and contribute to higher organizational performance. As teams incorporate these methodologies, they can expect to spend significantly less time on development and deployment processes, allowing developers to concentrate on more strategic tasks.

Moreover, the application of CI/CD is not limited by the scale of the operations. Whether it's configuring a handful of servers or scaling up to hundreds, automation and integration are key to maintaining agility and speed in delivering applications and web solutions. By following established methods and utilizing the appropriate tools, such as Jenkins for orchestration, teams can effectively manage the increasing requirements of modern deployment.

In essence, CI/CD is a critical component in the evolution of software development, setting the foundation for teams to deliver high-quality software swiftly and with greater precision. This article not only explores the theoretical underpinnings of CI and CD but also provides practical insights that can be applied to achieve a competitive edge in the fast-paced world of technology.

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) has evolved from a niche concept into an integral part of DevOps, facilitating a shift away from manual provisioning towards automated, repeatable infrastructure management. By treating infrastructure setup and configuration as code, IaC enables teams to automate the deployment of servers, databases, and other resources with predefined configurations. This method not only reduces the likelihood of human error but also improves cost efficiency, disaster recovery, and version control. The declarative approach to IaC, in particular, allows teams to define the end state of their infrastructure, further simplifying the provisioning process and ensuring consistent outcomes.
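As a rough illustration of the declarative approach, the sketch below compares a desired end state with the current state and derives the actions needed to converge. The resource names, the dictionary layout, and the `plan` function are hypothetical; this is a toy model and does not reflect how any particular IaC tool is implemented.

```python
def plan(desired, current):
    """Compare desired and current state and return the actions needed to converge."""
    actions = []
    for name, config in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != config:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {"web": {"size": "small", "count": 2}, "db": {"size": "large"}}
    current = {"web": {"size": "small", "count": 1}, "cache": {"size": "small"}}
    for action, resource in plan(desired, current):
        print(action, resource)
```

Because the team describes only the end state, running the same plan against an already converged environment yields no actions, which is what makes the process repeatable.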

The adoption of IaC has been transformative for many organizations, including those that initially managed infrastructure manually. For instance, the adoption of tools like Terraform marked a noteworthy milestone, offering a scalable solution for growing infrastructure requirements. The benefits of IaC were further highlighted at significant events such as the World Economic Forum in Davos, where discussions on technology's future touched upon the importance of automation and AI in infrastructure management.

The combination of IaC with generative AI is poised to revolutionize the industry by addressing long-standing challenges and fostering innovation. As companies continue to prioritize rapid development and deployment, IaC offers a competitive edge. Recent surveys indicate a surge in the integration of AI tools within IaC, suggesting a trend towards more intelligent automation and an emphasis on continuous improvement in infrastructure management.

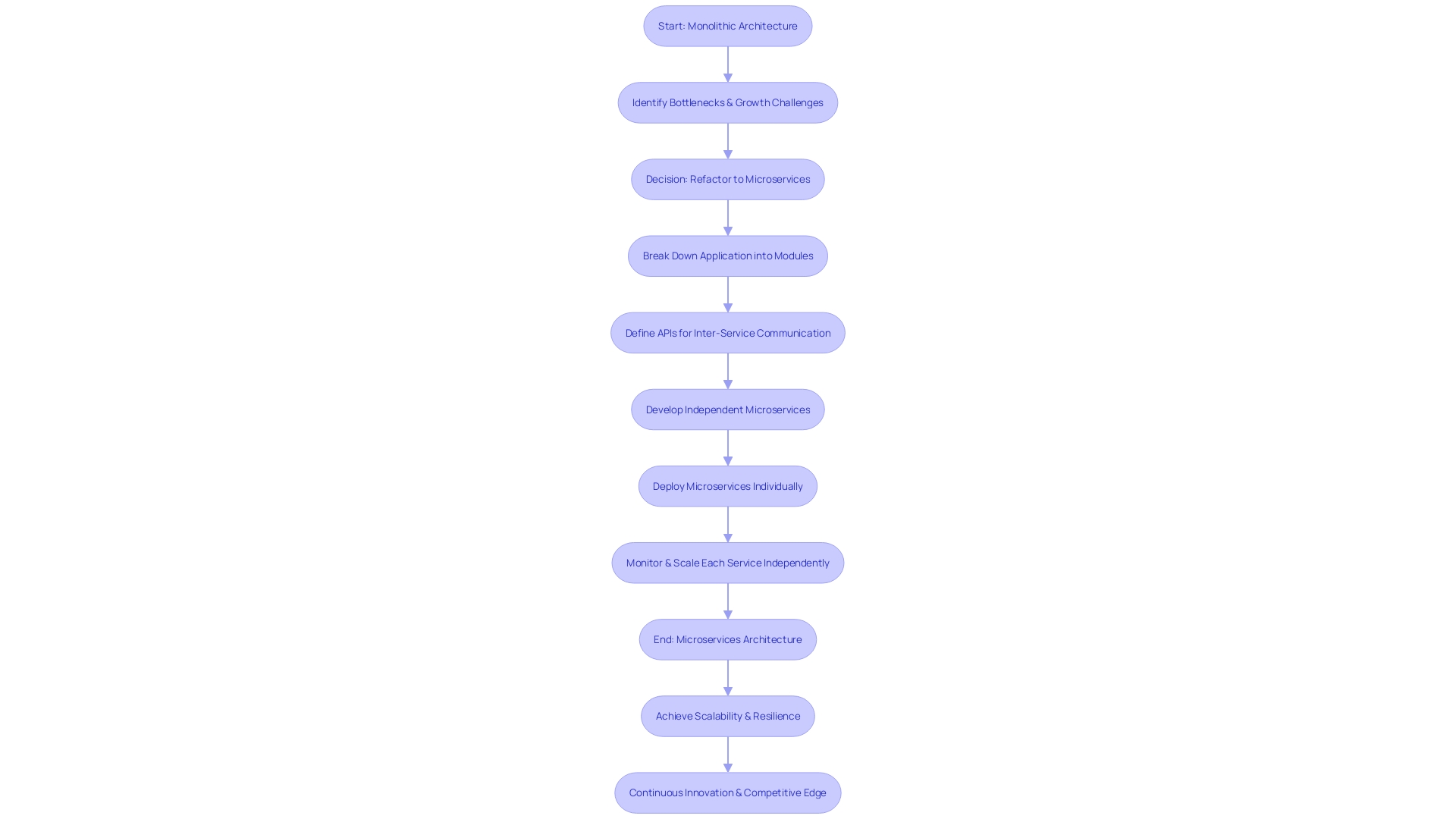

Microservices Architecture

Microservices architecture, a modular approach to building applications, breaks down intricate systems into small, autonomous services. Each microservice serves a discrete business purpose and interacts with others via APIs, much like individual books in a vast library that can be reorganized or expanded without disturbing the entire collection. This modularity fosters agility, giving teams the freedom to develop, deploy, and scale services independently.

As an example, Allegro, a significant e-commerce player, and Dunelm, a leading homewares retailer, have used microservices to overcome growth-induced bottlenecks and scale their digital infrastructure. The transition from a monolithic to a microservices architecture is not just a technological upgrade but a strategic realignment to meet evolving requirements for scalability and resilience.

In the ongoing discussion on architecture, the adoption of microservices has become a pivotal consideration for organizations like IBM Global Business Services as they navigate the complexities of modernizing large, unwieldy codebases. By facilitating targeted refactoring and mitigating the challenges associated with monolithic structures, microservices stand out as a compelling choice for enterprises seeking to maintain a competitive edge through continuous innovation.
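To show what a service with a single business purpose and an API boundary can look like, here is a minimal sketch of a user-registration service written against Python's standard WSGI interface. The endpoint path, the in-memory store, and the `call` helper are assumptions made for illustration; a real service would add persistence, authentication, and input validation.

```python
import io
import json

# In-memory store for illustration; a real service would own its own database.
_USERS = {}

JSON_HEADERS = [("Content-Type", "application/json")]


def registration_app(environ, start_response):
    """WSGI app exposing a single endpoint: POST /users registers an email."""
    if environ["REQUEST_METHOD"] != "POST" or environ["PATH_INFO"] != "/users":
        # Any other method or path is treated as not found in this sketch.
        start_response("404 Not Found", JSON_HEADERS)
        return [json.dumps({"error": "not found"}).encode()]

    length = int(environ.get("CONTENT_LENGTH") or 0)
    payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
    email = payload.get("email")
    if not email or email in _USERS:
        start_response("400 Bad Request", JSON_HEADERS)
        return [json.dumps({"error": "invalid or duplicate email"}).encode()]

    _USERS[email] = {"email": email}
    start_response("201 Created", JSON_HEADERS)
    return [json.dumps(_USERS[email]).encode()]


def call(app, method, path, body=b""):
    """Invoke a WSGI app in-process and return (status, decoded JSON body)."""
    captured = {}
    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    }

    def start_response(status, headers):
        captured["status"] = status

    chunks = app(environ, start_response)
    return captured["status"], json.loads(b"".join(chunks))
```

Other services would depend only on the HTTP contract (`POST /users` with a JSON body), not on how registration is implemented, which is what allows the service to be deployed and scaled on its own. For local experiments, the app could be served with `wsgiref.simple_server.make_server` from the standard library.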

Monitoring and Feedback

The merging of development and operations in the DevOps model emphasizes the crucial role that monitoring and feedback mechanisms play in modern software architecture. Observability, an essential aspect of this paradigm, empowers teams to understand and diagnose the inner workings of their applications and infrastructure. It enables the proactive identification of problems by analyzing the data that systems generate, leading to swift and precise issue resolution.
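A common starting point for generating such data is structured logging. The sketch below wraps an operation in a context manager that emits one JSON record with its duration and outcome. The `traced` helper, the field names, and the logger name are hypothetical choices for illustration rather than any standard.

```python
import json
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("observability_sketch")


@contextmanager
def traced(operation, emit=None, **fields):
    """Emit one JSON line per operation with its duration and outcome.

    emit defaults to logger.info; any callable accepting a string works.
    """
    emit = emit or logger.info
    start = time.perf_counter()
    outcome = "ok"
    try:
        yield
    except Exception:
        outcome = "error"
        raise
    finally:
        record = {
            "operation": operation,
            "outcome": outcome,
            "duration_ms": round((time.perf_counter() - start) * 1000, 3),
            **fields,
        }
        emit(json.dumps(record))


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    with traced("checkout", order_id=42):
        time.sleep(0.01)
```

Because every record is machine-readable and carries the same fields, a log aggregation system can filter by operation, count errors, or chart durations without custom parsing.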

Case studies, such as Digma's discovery of a code concurrency issue using their Continuous Feedback tool, highlight the performance enhancements that can be achieved through effective monitoring. This real-world success story illustrates the impact of observability in optimizing complex systems. Similarly, the use of DORA metrics in evaluating OpenStreetMap's approach to operations and development provides valuable insights into the frequency of deployments, the lead time for changes, and the driving factors behind these metrics.

These examples mirror the sentiments expressed by industry experts who advocate for a culture of continuous improvement and learning within the field. As design patterns guide developers in structuring code, observability guides them in understanding system outputs, ensuring that the solution is not only resilient and scalable but also continuously evolving to meet the demands of a dynamic market.

Benefits of DevOps Architecture

The combination of development and operations, known as DevOps architecture, is not just a trend but a strategic approach that is reshaping the landscape of IT development and operations. By promoting improved collaboration and communication, the methodology supports a more synchronized and efficient workflow, allowing teams to deliver software solutions rapidly and reliably. With an emphasis on agility and scalability, it aligns closely with business objectives, ensuring that technological progress marches in lockstep with an organization's goals.

Consider an e-commerce portal designed to serve customers in Africa, with requirements for low-latency, secure, and fast retrieval of relational user data. Such a portal would greatly benefit from the principles of continuous integration and continuous deployment. Moreover, the architecture's emphasis on fault tolerance and high availability, alongside security for data at rest and in transit, is critical for businesses operating on a tight budget.

By incorporating modern configuration management tools, organizations can manage their software environments more intuitively and effectively. Tools with an intuitive interface, as emphasized in recent industry guides, simplify the complexity involved in configuration management, a key component of modern software development. This, in turn, allows for more streamlined project management and growth processes.

The DevOps landscape in 2023 presents promising opportunities, with trends indicating significant shifts towards more resilient, efficient, and responsive software development and IT operations. The combination of continuous integration and delivery, infrastructure as code, and AI-assisted development methods is crucial in this transformation. The incorporation of AI into operational practices is gaining momentum, promising to further enhance the performance measures and outcomes that matter to organizations.

Essentially, DevOps combines technologies and tools with a cultural shift that catalyzes better collaboration between developers and IT professionals. This change not only decreases the time from development to production but also supports the delivery of high-quality applications, meeting the needs of customers and stakeholders alike.

Implementing DevOps Architecture

To implement a DevOps architecture efficiently, it is crucial to integrate various strategic elements that promote a culture of collaboration, agility, and continuous improvement. This involves not only adopting agile methodologies but also integrating solutions that facilitate automation, which is pivotal in streamlining operations and enhancing the quality and speed of software delivery.

For instance, consider the scenario of crafting an architectural design for an e-commerce portal aimed at African customers. The architecture must ensure low-latency access, secure and rapid retrieval of relational user data, and accommodate image storage for product display. Despite budget constraints, the system must also be fault-tolerant, highly available, and secure both at rest and during data transit.

A microservice-based architecture, utilizing frameworks such as Django, .NET, or Spring, is recommended to handle user registration, authentication, and authorization efficiently. Moreover, the implementation language—whether C#, TypeScript, or another—must align with the platform's needs without compromising functionality.

Continuous monitoring is an essential aspect of software development that ensures performance, availability, and security are maintained at optimal levels in production. By utilizing sophisticated monitoring technology, teams can promptly identify issues, collect actionable data, and accomplish the software's performance goals.

With the swift growth of DevOps practices across industries, professionals with expertise in this domain can effectively fulfill both development and operational roles, leveraging contemporary technologies and tools. DevOps has a significant effect on organizational infrastructure, enabling operational streamlining, task automation, application deployment, and monitoring.

In our ever-changing work environment, the transition to DevOps is crucial for achieving rapid delivery and reducing post-production errors. By embracing DevOps principles, organizations can create a more stable work environment and produce superior outputs, in contrast to the limitations of traditional development lifecycles.

As mentioned by Julia Romanenkova, a Project Manager and Consultant, working together with knowledgeable professionals like Roopesh in PHP and tailor-made programming yields outstanding dependability and promptness, emphasizing the importance of such expertise in team environments.

Ultimately, the essence of DevOps lies in its ability to dissolve the traditional silos between development and operations, fostering enhanced collaboration, faster delivery, and improved software quality, as emphasized in industry insights and expert commentary.

Choosing the Right Tools and Platforms

The importance of choosing suitable tools and platforms for software development cannot be overstated. These choices must not only align with an organization's unique needs and objectives but also enhance collaboration, enable automation, and integrate flawlessly with the existing infrastructure and workflows.

For financial services companies such as TBC Bank and M&T Bank, the choice of tools is crucial. The digital transformation sweeping through the banking industry, driven by shifting consumer habits and technological progress, demands a high level of security and compliance. The tools must support these standards in order to prevent expensive security breaches and damage to reputation. For example, the transition to a fully digital customer experience in banking requires software solutions that can meet security and regulatory compliance requirements.

Similarly, in industries tackling climate change, such as Bosch with its focus on green energy, efficient data platforms and pipelines are essential. Technologies that facilitate the development of a streamlined, modern data infrastructure are vital for such intricate operations.

The importance of DevOps is evident in its widespread implementation across different industries, with the goal of improving the software development process through automation. This integration streamlines operations, allowing for continuous improvement and faster value delivery. A tool like Git exemplifies this by enabling developers to manage changes in their codebase efficiently, thus facilitating better collaboration and productivity.

Additionally, according to Chef's report, configuration management tools should offer a user-friendly interface for ease of use. This aligns with the wider DevOps ecosystem, which includes practices like CI/CD, IaC, and monitoring, all designed to automate core SDLC stages.

Abid Ali Awan, KDnuggets Assistant Editor, emphasizes the extensive job opportunities awaiting those proficient in DevOps methodologies, which are vital for deploying, maintaining, and monitoring applications. Applying the periodic table concept to DevOps tools also helps teams identify and classify resources for efficient workflow automation, minimizing human error and speeding up software delivery.

Statistics reveal that adopting DevOps practices leads to tangible benefits: 65% report improved quality of deliverables, 52% experience quicker recovery times, and there is a notable decrease in the failure rate of new releases. As the technology landscape evolves, the significance of these tools and the efficiencies they bring become even more pronounced, underscoring the importance of careful tool selection in achieving operational excellence and driving innovation.

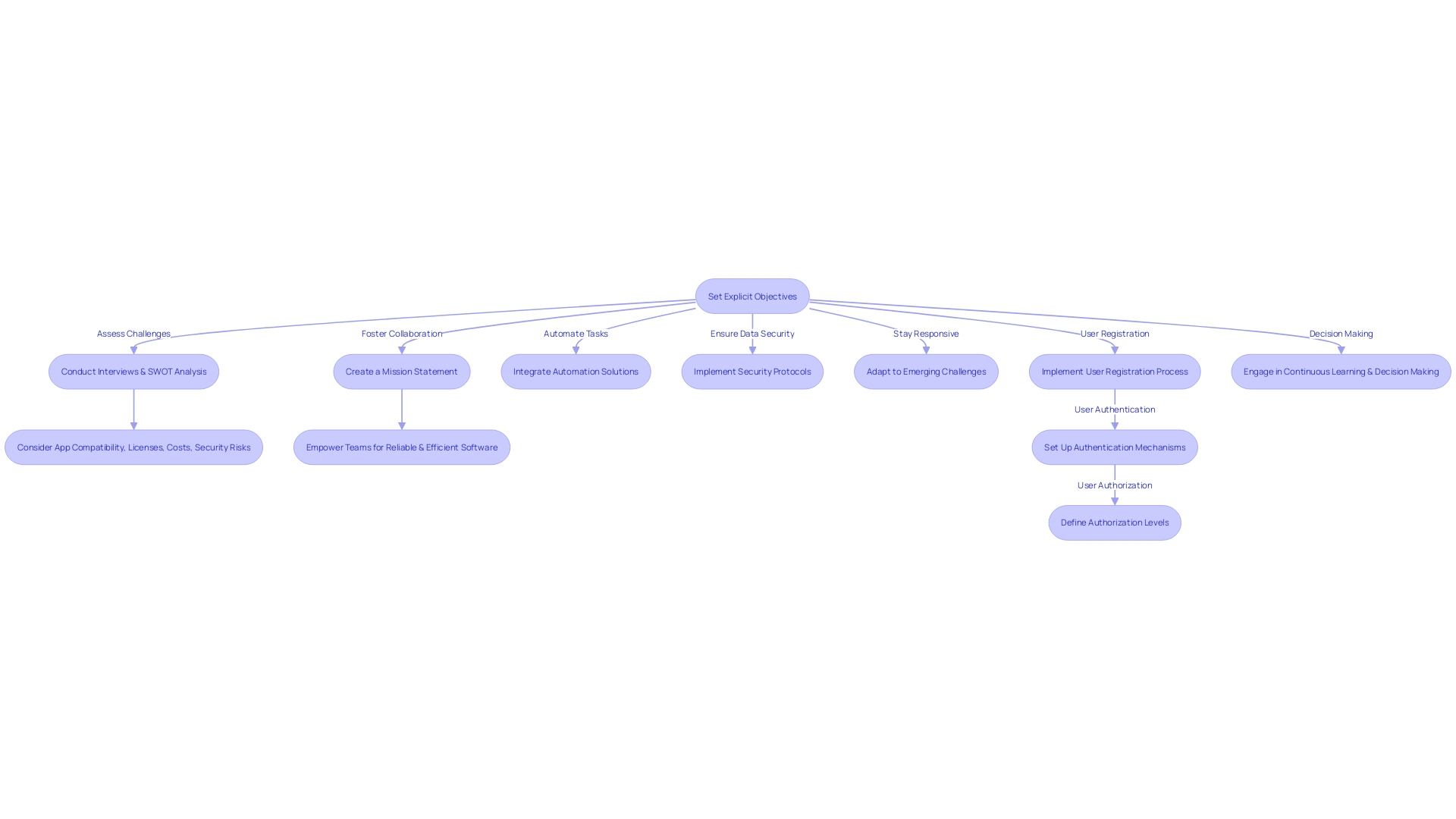

Best Practices for DevOps Architecture

Implementing a DevOps architecture requires following specific guidelines to fully harness its potential. Setting explicit objectives is the cornerstone of adoption, as it aligns the technology with the business's strategic goals. Collaboration and continuous learning are the cultural bedrocks that allow development and operations teams to work as one. Automation is not merely a convenience but a strategic imperative, particularly for repetitive tasks that impede efficiency when performed manually. Protecting data both at rest and in transit from unauthorized access is of utmost importance and should be built into development and operations processes. A proactive approach involves regularly reviewing and refining the strategy to stay responsive to emerging challenges and technological advancements.

Case studies such as the e-commerce portal designed for the African market, with stringent requirements for low latency and high security on a tight budget, showcase the practical application of these principles. The architecture, possibly using frameworks like Django, .NET, or Spring, must prioritize user registration, authentication, and authorization while respecting the continuous nature of the software development process symbolized by the infinity loop.

Insights from industry experts like Rafal, who has contributed to scalable and resilient commerce platforms, underscore the importance of an ecosystem of automation solutions for CI, CD, IaC, configuration management, and monitoring. In addition, collaborative decision-making that avoids the pitfalls of the 'HiPPO' syndrome ensures that diverse perspectives contribute to a more robust and inclusive architecture.

Current trends highlight the significance of developer experience (DevEx) over sheer productivity, advocating for sustainable practices that prevent burnout and foster innovation. The integration of AI tools, as indicated by recent surveys, points towards a future where AI's role in enhancing DevOps practices could become a game-changer, marking a continuous evolution in line with the DevOps philosophy of perpetual improvement.

Conclusion

In conclusion, DevOps architecture integrates development and operations, enabling businesses to innovate faster and drive better results. By embracing key components like version control, continuous integration, microservices architecture, infrastructure as code, monitoring, and security, organizations achieve agility and efficiency in software delivery.

The planning and tracking phase sets objectives and creates a roadmap, while subsequent stages emphasize collaboration, automation, and continuous improvement. Effective software testing ensures high-quality products, and the management phase focuses on maintenance and adaptation.

Continuous integration and delivery (CI/CD) streamline the development lifecycle, while infrastructure as code (IaC) enables automation and repeatability. Microservices architecture enhances scalability and agility, and monitoring and feedback mechanisms drive performance optimization.

Choosing the right tools and platforms is crucial for successful implementation, aligning with needs and enhancing collaboration, automation, and integration. Following best practices and refining strategies drive operational excellence and innovation.

In summary, DevOps architecture reshapes software development strategies, enabling organizations to meet the demands of the digital world. By implementing core components and best practices, businesses can achieve operational excellence and drive innovation.