Introduction

Amazon Web Services (AWS) has revolutionized the world of cloud computing with its extensive range of compute services designed to meet the diverse needs of modern workloads. From running complex simulations in engineering to facilitating innovations in machine learning and genomics, AWS provides the computational horsepower necessary for intensive tasks. At the core of AWS's compute offerings is Amazon EC2 (Elastic Compute Cloud), a service that delivers scalable computing capacity in the cloud.

This article explores the different types of AWS compute services, including EC2, AWS Lambda, and AWS Batch, and highlights real-world use cases that demonstrate the flexibility and efficiency of these services. Whether it's managing industrial equipment in harsh conditions, training and deploying AI models, or handling batch computing workloads, AWS compute services offer the scalability, reliability, and cost-effectiveness that businesses need to thrive in the digital age. So, let's delve into the world of AWS compute services and discover how they can empower organizations to focus on innovation and growth.

Types of AWS Compute Services

AWS compute services span virtual servers, containers, serverless functions, and managed batch scheduling. The central offering is Amazon EC2 (Elastic Compute Cloud), which delivers scalable computing capacity and lets businesses run applications on virtual servers sized to their requirements. EC2 covers workloads that range from standard applications to high performance computing (HPC) and machine learning, each demanding a different mix of compute, memory, and storage.

The significance of EC2 can be seen in use cases like the ones at ICL, a multinational manufacturing and mining corporation, where the monitoring of industrial equipment in harsh conditions is crucial for operational efficiency and environmental safety. With AWS, ICL can leverage cloud-based compute resources that enable them to scale their monitoring capabilities without the high costs and limitations of on-premises hardware.

Furthermore, the expansive growth in the size of large language models (LLMs), which now boast hundreds of billions of parameters, underscores the increasing demand for substantial computing power. Training and deploying these AI models requires not just powerful CPUs but also GPUs capable of handling immense data and computation loads.

AWS addresses these needs with a suite of compute services that includes not only EC2 but also AWS Lambda for on-demand serverless compute operations. This diversity allows for a tailored approach to computing resources, ensuring that whether a company is a startup or an established research lab, there's an AWS service that fits their workload.

As the technology landscape continues to evolve, AWS's commitment to providing scalable, efficient, and cost-effective compute services remains a top priority, ensuring that customers can focus on innovation and growth, rather than the complexities of infrastructure management.

Amazon Elastic Compute Cloud (EC2)

Amazon EC2 (Elastic Compute Cloud) serves as a pivotal component in the realm of cloud computing, offering scalable virtual servers to cater to diverse operational demands. This service empowers users with the flexibility to configure and provision computation environments tailored to their specific requirements. Through EC2, businesses gain the autonomy to manage their computing resources adeptly, enabling them to adjust capacity with ease in response to changing workloads.

Amazon Web Services (AWS) is acclaimed for its expansive range of services, which include a variety of compute solutions like EC2, as well as big data processing, machine learning, data warehousing, and security tools. These offerings facilitate the deployment, development, and management of intricate applications, delivering on-demand and scalable IT resources via the internet — a boon for businesses seeking to eschew the hefty investments associated with maintaining physical infrastructure.

EC2's adaptability and scalability have been instrumental for teams such as TR Labs, which experienced growth both in team size and task complexity, necessitating enhanced model development and training processes. Similarly, Vertex Pharmaceuticals leveraged machine learning on EC2 for efficient and accurate analysis of massive experimental data sets, showcasing the platform's robustness for sophisticated computational tasks.

Recent developments in AWS include the launch of EC2 Capacity Blocks, a feature that lets customers reserve GPU instances for a predefined duration, much like booking a hotel room. Launched in the AWS US East (Ohio) Region, Capacity Blocks offer clear cost visibility and certainty, so users can match resource allocation to their budget and needs. The offering includes access to NVIDIA H100 Tensor Core GPU instances.

AWS's position as the largest cloud provider, coupled with its comprehensive services and robust documentation, renders it an invaluable asset for professionals seeking to harness cloud computing's potential. Its influence extends to job markets, where expertise in AWS is highly sought after. The extensive support and resources offered by AWS ensure that both novices and seasoned users can seamlessly navigate and expand their cloud computing prowess.

Amazon EC2 Auto Scaling

EC2 Auto Scaling helps keep cloud applications available and performant by adjusting compute capacity in line with demand. It maintains the number of instances needed to absorb traffic fluctuations, replaces unhealthy instances, and lets you define detailed scaling policies that describe when capacity should be added or removed.
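As an illustration of what a scaling policy can look like, the sketch below builds the request for a target tracking policy that keeps average CPU utilization near a chosen value. The field names follow the Auto Scaling `PutScalingPolicy` API as exposed by boto3; the group name `web-asg`, the policy naming scheme, and the 50% target are assumptions made up for this example. The code only builds the request dictionary, so it runs without AWS credentials.

```python
def target_tracking_policy(group_name: str, target_cpu_percent: float) -> dict:
    """Build keyword arguments for the Auto Scaling PutScalingPolicy API."""
    if not 0 < target_cpu_percent <= 100:
        raise ValueError("target_cpu_percent must be in the range (0, 100]")
    return {
        "AutoScalingGroupName": group_name,
        # Hypothetical naming scheme, chosen only for this example.
        "PolicyName": f"{group_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": float(target_cpu_percent),
        },
    }


# "web-asg" is an assumed Auto Scaling group name.
policy = target_tracking_policy("web-asg", 50)
print(policy["PolicyName"], policy["TargetTrackingConfiguration"]["TargetValue"])

# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   boto3.client("autoscaling").put_scaling_policy(**policy)
```

Target tracking is only one policy type. Step scaling and scheduled scaling may suit workloads with sharper or more predictable traffic patterns.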

The significance of adopting an application scaling mindset is highlighted by case studies such as Chess.com, which hosts over ten million daily chess games. The platform's growth to serve a community of over 150 million users underscores the need for scalable infrastructure. AWS has proven to be instrumental in supporting the company's mission to connect chess enthusiasts globally, showcasing the capability of EC2 Auto Scaling to handle substantial and variable workloads with ease.

Recent advancements in AWS, like the introduction of Amazon S3 Express One Zone for high-demand data access and the continuous evolution of Graviton processors, further demonstrate AWS's commitment to innovation and cost-efficiency in cloud computing. These developments come in response to customer feedback and a vision for the future of cloud infrastructure, one where hardware costs are reduced and performance is accelerated.

Reflecting on the words of Steve Jobs, "The only way to do great work is to love what you do," embracing AWS's cloud solutions is about more than following trends: it takes a passion for innovation and the willingness to adopt new technologies. AWS's suite of over 200 services gives businesses of all sizes the tools to scale their operations and optimize costs effectively.

Amazon Lightsail

Amazon Web Services (AWS) Lightsail is crafted with developers and small businesses in mind, offering a streamlined and wallet-friendly approach to cloud computing. The service simplifies the process of deploying virtual private servers (VPS), providing an accessible interface that caters to those who may not require the vast array of options and scalability that larger AWS services offer. Users can swiftly create a Lightsail instance by logging into their account, choosing the desired instance location, operating system, and plan, culminating in a single click to launch the instance. This ease of use is paramount for businesses that need to stay agile and responsive without the complexity of more involved cloud services.
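The same three choices (location, operating system, and plan) map onto the parameters of the Lightsail `CreateInstances` API. The following is a minimal sketch that only assembles the request. The instance name, Availability Zone, blueprint ID, and bundle ID shown are illustrative assumptions and may not match the current Lightsail catalog; the `get_blueprints` and `get_bundles` calls return the valid identifiers.

```python
def lightsail_instance_request(name: str, availability_zone: str,
                               blueprint_id: str, bundle_id: str) -> dict:
    """Build keyword arguments for the Lightsail CreateInstances API."""
    fields = {
        "name": name,
        "availability_zone": availability_zone,
        "blueprint_id": blueprint_id,
        "bundle_id": bundle_id,
    }
    for label, value in fields.items():
        if not value:
            raise ValueError(f"{label} must not be empty")
    return {
        "instanceNames": [name],                 # one or more instance names
        "availabilityZone": availability_zone,   # instance location
        "blueprintId": blueprint_id,             # operating system or app image
        "bundleId": bundle_id,                   # plan (CPU, memory, transfer)
    }


# All four values below are assumptions for illustration.
request = lightsail_instance_request(
    "blog-server", "us-east-1a", "ubuntu_22_04", "nano_3_0"
)
print(request)

# With boto3 installed and credentials configured:
#   import boto3
#   boto3.client("lightsail").create_instances(**request)
```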

AWS's flexibility and affordability have cemented its position as a leading cloud platform, allowing businesses to rent online resources and computing power and scale as needed. The platform's significance is such that AWS outages can disrupt daily operations for countless organizations. While generative AI models and high-performance computing (HPC) applications drive demand for large-scale compute, many workloads are far more modest. Lightsail serves those workloads by simplifying VPS management for websites, small applications, and development environments. Starting small and focusing on solving business problems effectively is a widely shared recommendation, and Lightsail's straightforward approach aligns with this philosophy.

In the context of cloud computing, Lightsail's role is increasingly important. Cloudflare's 2023 Year in Review illustrates the surging internet traffic, highlighting the ever-growing reliance on internet connectivity for commerce, communication, and more. Furthermore, the competitive landscape among cloud providers is intensifying, with AWS, Google Cloud, and Azure vying for market share. Insights from Vantage's cloud cost management platform reveal shifting spends in cloud services, with AWS's EC2 instances seeing a decrease in percentage spend while Azure gains traction. Amidst this backdrop, AWS Lightsail offers a cost-effective, user-friendly solution that empowers small businesses and developers to leverage cloud computing without the need to navigate the complexities of large-scale services.

Container Services: ECS, EKS, and Fargate

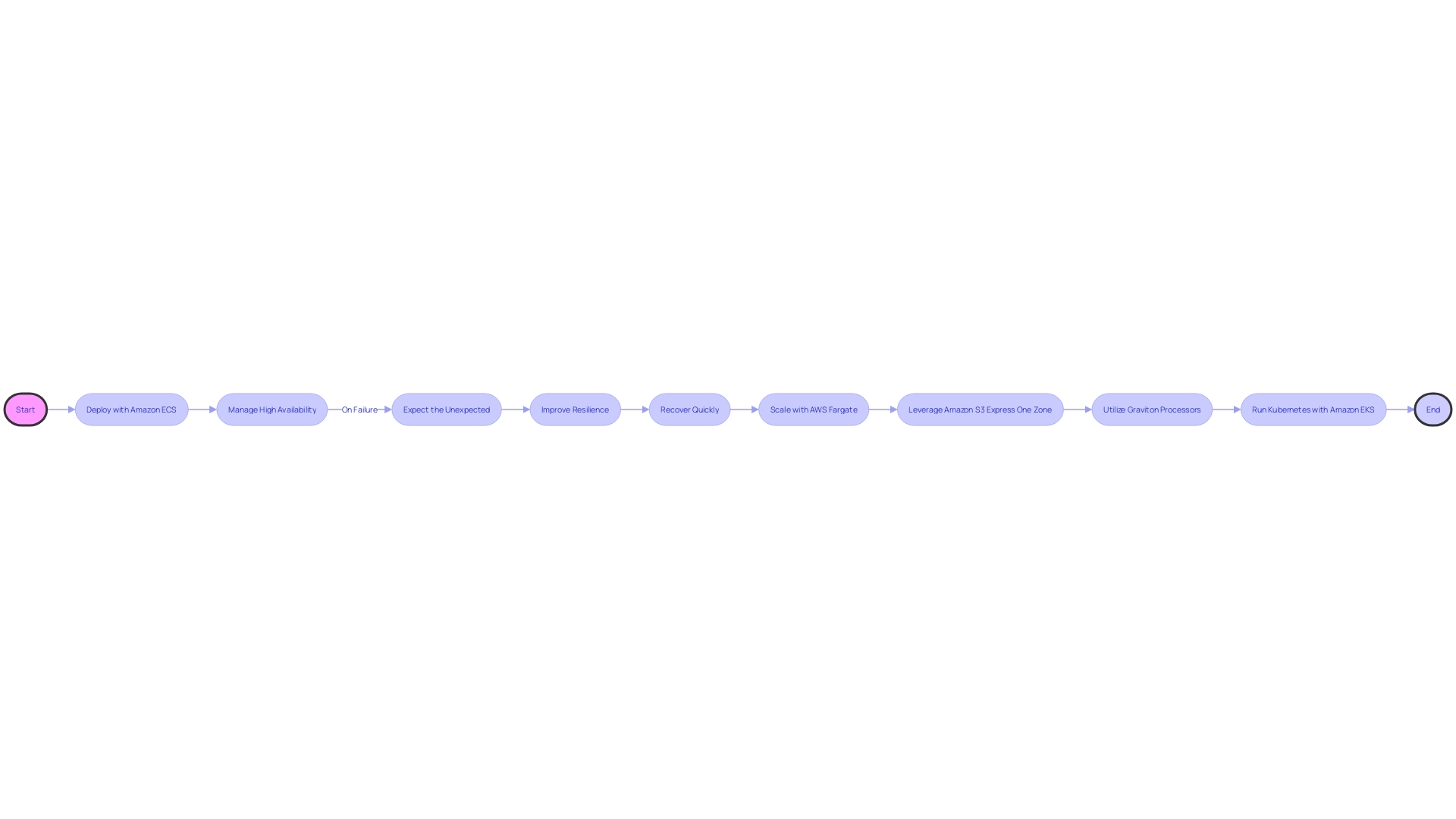

Amazon Web Services (AWS) provides a suite of container services that streamline the deployment, management, and scaling of containerized applications. These services are designed with high availability and resilience in mind, acknowledging the inevitability of system failures and the importance of quick recovery.

Elastic Container Service (ECS) is AWS's fully managed container orchestration service, built on the same high-availability architecture AWS uses for its own services. The design assumes failure at scale: in a fleet of one million servers running at 99.99% availability, around one hundred servers can be expected to be unavailable at any given time, and the service is built to anticipate and manage that. ECS lets users build highly available applications with confidence that the underlying service handles unexpected failures.
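The figure of roughly one hundred servers follows directly from the availability percentage. A short sketch of the arithmetic, treating availability as the fraction of servers that are up at any moment (a simplifying assumption):

```python
def expected_unavailable(server_count: int, availability: float) -> float:
    """Expected number of servers down at a given moment."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return server_count * (1.0 - availability)


# One million servers at 99.99% availability, as in the text above.
down = expected_unavailable(1_000_000, 0.9999)
print(round(down))  # 100
```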

For those seeking a managed Kubernetes service, AWS offers Elastic Kubernetes Service (EKS), which simplifies running Kubernetes on AWS without needing to install and operate your own Kubernetes control plane or nodes. EKS is architected for resilience, empowering businesses to focus on innovation rather than infrastructure management.

Furthermore, AWS Fargate provides a serverless compute engine for containers, eliminating the need to provision and manage servers. This service aligns with the needs of businesses like Dunelm Group plc, a leading UK homewares retailer, which requires a robust and scalable solution to handle over 400 million website sessions annually. Fargate allows companies to focus on delivering value to customers by ensuring their web platforms remain available and responsive, just as Dunelm must ensure their customers can purchase products anytime without interruption.

Recent advancements such as Amazon S3 Express One Zone and the Graviton series processors underscore AWS's commitment to providing innovative solutions that cater to the evolving needs of their users. With these services, AWS continues to push the envelope, offering faster access to frequently requested data and enhanced general-purpose computing power to meet the demands of modern applications.

The combination of ECS, EKS, and Fargate demonstrates AWS's dedication to offering a diverse range of solutions that address availability and resilience, critical concerns for organizations aiming to maintain robust and efficient digital operations.

Serverless Computing: AWS Lambda

AWS Lambda represents a paradigm shift in computing, allowing developers to concentrate on code rather than server infrastructure. As a serverless compute service, Lambda automatically scales, handling the execution of code in response to various events such as modifications in an Amazon S3 bucket or a DynamoDB table. This pivotal technology ensures efficient operation without the need for server provisioning or management.
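To make the event-driven model concrete, here is a minimal sketch of a Python Lambda handler that reacts to S3 object notifications. The record layout (`Records`, `s3.bucket.name`, `s3.object.key`) follows the S3 event notification format; the bucket name, object key, and the shape of the return value are assumptions chosen for this example. Object keys arrive URL-encoded, so the handler decodes them.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect the bucket and key of every S3 object named in the event."""
    changed = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if s3 is None:
            continue  # skip records that are not S3 notifications
        changed.append({
            "event": record.get("eventName", "unknown"),
            "bucket": s3["bucket"]["name"],
            "key": unquote_plus(s3["object"]["key"]),  # keys are URL-encoded
        })
    # Hypothetical return shape; real handlers would process each object here.
    return {"processed": len(changed), "objects": changed}


# Local invocation with a hand-written sample event (values are made up).
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "reports/2024+summary.csv"},
            },
        }
    ]
}
result = lambda_handler(sample_event, None)
print(result["objects"][0]["key"])  # reports/2024 summary.csv
```

Because the handler is a plain function, it can be exercised locally with sample events before it is deployed.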

Chime, a fintech company, leverages AWS Lambda for safeguarding member accounts from unauthorized transactions by analyzing patterns in data to detect fraud. Similarly, the TR Labs team, which has grown significantly to over 100 members, uses Lambda to manage complex model development and training processes in their ML innovation endeavors. These real-world applications underscore Lambda's scalability and the reduced operational overhead it offers, which are transformative for applications requiring a high level of efficiency and productivity.

Recent advancements in Amazon's storage solutions, like the introduction of Amazon S3 Express One Zone, demonstrate the ongoing evolution of cloud services that AWS Lambda is a part of. This new storage tier offers significant performance improvements, catering to the most accessed data with support for millions of requests per minute. The ability to operate with such efficiency and scalability is indicative of the serverless environment that AWS Lambda thrives in.

The serverless architecture promised by AWS Lambda, which includes automatic scaling, cost-efficiency, and reduced operational overhead, is not just theoretical but is proven by the experiences of those who have migrated to such systems. Despite the challenges that may arise, the transformative potential for a variety of applications is evident. AWS Lambda enables seamless integration with other AWS services like API Gateway, providing secure and scalable serverless interactions, and is a key player in the narrative of cloud computing's future.

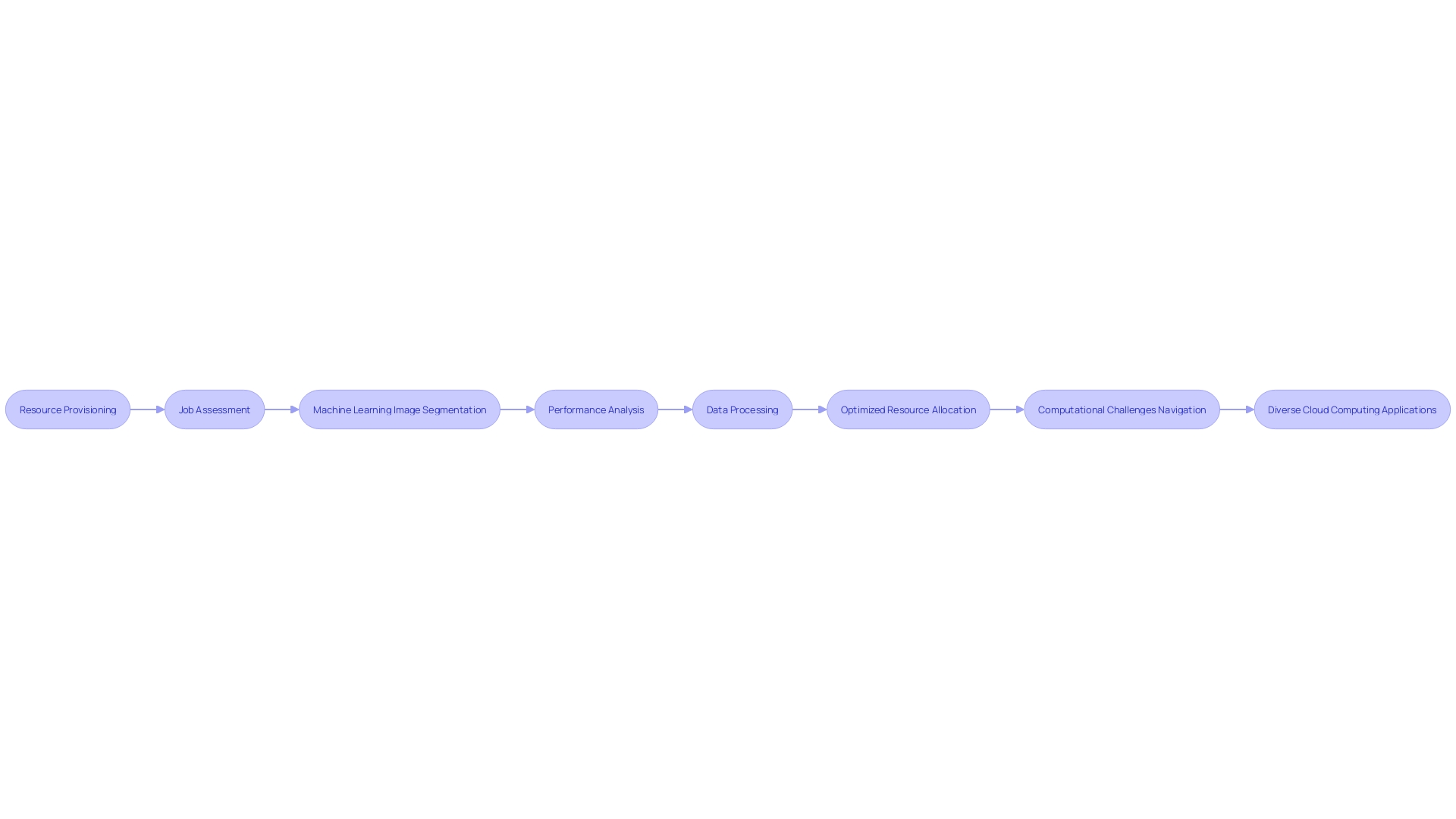

Batch Computing: AWS Batch

Amazon Web Services (AWS) Batch is a robust service designed to facilitate batch computing across various industries, handling workloads of any magnitude by dynamically allocating the necessary compute resources. This service optimizes resource provisioning by meticulously assessing the volume and specific resource needs of batch jobs. Vertex Pharmaceuticals utilized AWS Batch to expedite their drug discovery process, which demands the analysis of vast datasets including high-resolution microscope images. By leveraging machine learning for image segmentation, they were able to train models that could process these images en masse, significantly enhancing the speed and accuracy of their analyses.

Similarly, AWS Batch has been instrumental for Swimming Australia's Performance Insights team. To maintain their competitive edge, they implemented a sophisticated performance analysis system that scrutinizes training footage to glean actionable insights. This real-time data empowers coaches to provide immediate, targeted feedback to swimmers, a process made possible by the scalable and efficient computing power AWS Batch provides.

The service's ability to handle asynchronous data processing—where data events are managed as they occur without the need for timed coordination—is crucial for applications like these. It supports various scenarios, from microservices requiring immediate responses to distributed systems managing substantial data volumes. AWS Batch's flexibility and power enable organizations to navigate the computational and resource challenges presented by state-of-the-art applications, such as high-performance computing and large language models (LLMs). With LLMs growing exponentially in size and complexity, AWS Batch's scalable solutions provide the necessary computing power, memory, and storage to train and deploy these advanced models.
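One common way to spread a large set of files, such as the microscope images mentioned earlier in this section, across many workers is an AWS Batch array job. Each child job receives its position in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable and can use it to select its share of the input. The sketch below shows that selection step only. The file names and the array size of 4 are assumptions for illustration, and this is not a description of how any company named above implemented its pipeline.

```python
import os


def items_for_child(items: list, array_size: int, index: int) -> list:
    """Return the slice of items handled by one child of an array job."""
    if array_size <= 0:
        raise ValueError("array_size must be positive")
    if not 0 <= index < array_size:
        raise ValueError("index must be in the range [0, array_size)")
    # Round-robin assignment: child i takes items i, i + size, i + 2*size, ...
    return items[index::array_size]


def current_index(environ=os.environ) -> int:
    """Read the child index set by AWS Batch; default to 0 outside Batch."""
    return int(environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))


# Hypothetical input: ten image files split across an array job of size 4.
images = [f"image-{n:03d}.tif" for n in range(10)]
mine = items_for_child(images, array_size=4, index=1)
print(mine)  # ['image-001.tif', 'image-005.tif', 'image-009.tif']
```

Inside a real child job, `current_index()` would supply the `index` argument.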

As cloud computing continues to redefine software operation, enabling the rental of virtual resources on a pay-per-use basis, services like AWS Batch play a pivotal role. They offer a reliable infrastructure that underpins container-as-a-service and function-as-a-service platforms, all while ensuring optimal VM performance, crucial for the quality of hosted applications. Through comprehensive benchmarks and performance variability analyses, AWS Batch demonstrates its capacity to predict and adapt to future performance demands, underscoring its value across diverse cloud computing applications.

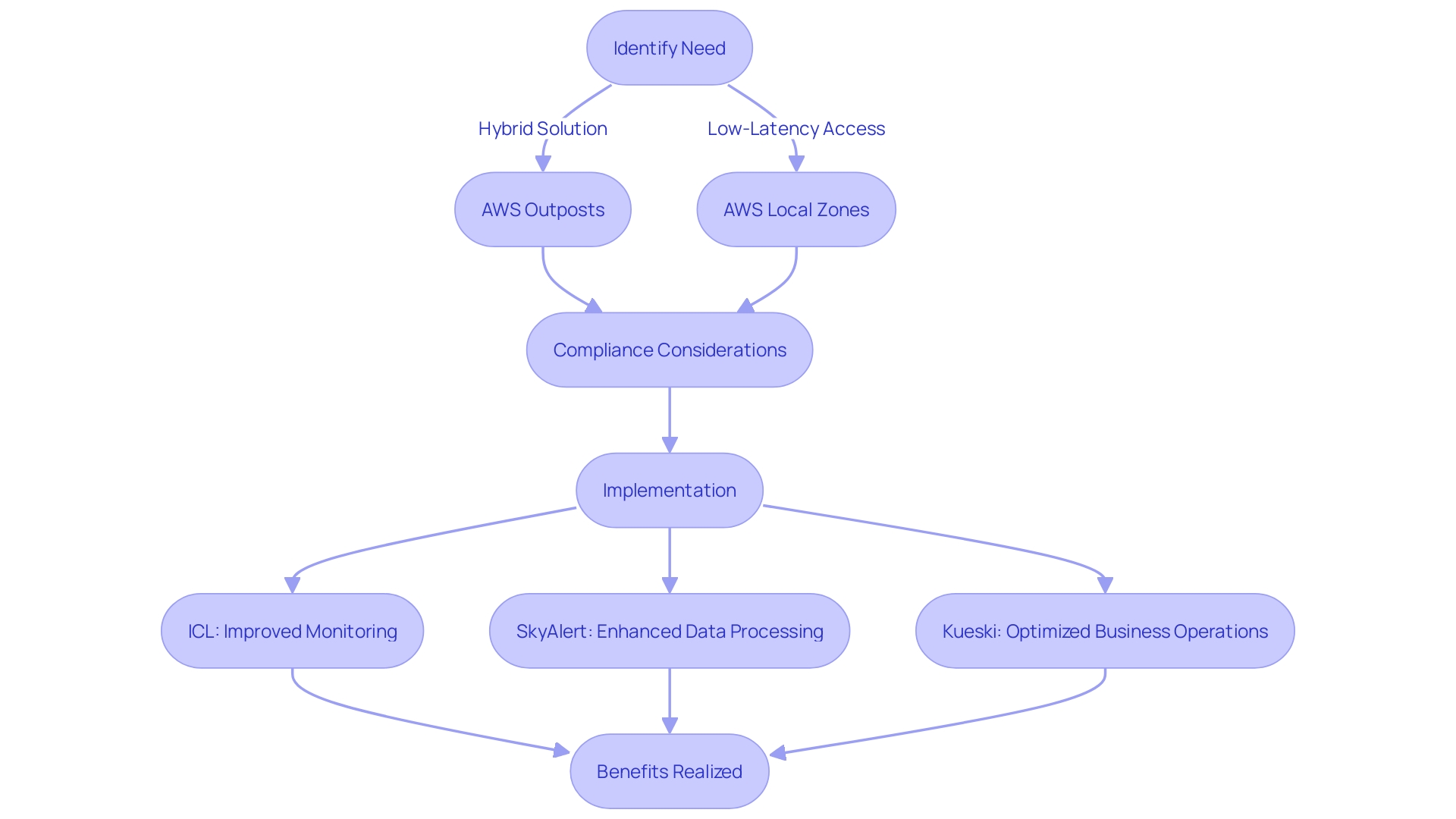

Hybrid and Edge Computing: AWS Outposts and Local Zones

Amazon Web Services (AWS) relentlessly innovates to support a digitally progressive future, contributing significantly to economic development by creating high-quality jobs and investing in infrastructure. AWS Outposts and Local Zones exemplify this commitment by bridging the gap between cloud services and local data processing needs, ensuring compliance with regional data regulations and enabling businesses to operate with agility and confidence in their data sovereignty.

Outposts offers a seamless hybrid experience, allowing businesses to run AWS services on-premises, which is vital for companies like ICL, a multinational manufacturing corporation. These services provide a resilient and scalable solution to ICL's challenge of monitoring industrial equipment under harsh conditions. By adopting Outposts, organizations can reduce the need for continuous manual monitoring, minimize downtime, and protect against revenue loss and environmental damages, as seen with ICL's deployment.

Meanwhile, AWS Local Zones cater to the demand for low-latency access to cloud services, facilitating swift and efficient data processing. This is crucial for applications such as SkyAlert's IoT solution, which sends timely alerts to millions in earthquake-prone areas, and Kueski's online lending platform, which leverages big data analytics to deliver rapid financial services.

The European Data Act, which becomes applicable in September 2025, underscores the importance of data mobility and cloud service flexibility. AWS's European cloud initiative, in alignment with the Act, empowers organizations to meet stringent regulations while maintaining digital sovereignty. As countries like Germany recognize the need for local computing power and digital independence, AWS's infrastructure enhancements, including Local Zones, are essential for organizations to thrive in a competitive global market.

Cost and Capacity Management

AWS's commitment to cost optimization is embodied in the Well-Architected Framework, which emphasizes financial management, resource provisioning, data management, and cost monitoring. The IDP case study makes it evident that managing cloud expenses is an ongoing journey of continuous refinement throughout a workload's lifecycle. AWS Cost Explorer, a vital tool for cost analysis, is not activated by default. Users must enable it in the AWS Management Console, after which they can view and manage expenses at up to hourly and resource-level granularity.
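Once Cost Explorer is enabled, the same data is available programmatically through the `GetCostAndUsage` API. The sketch below builds a query grouped by service and sums a response of the documented shape. The dates, service names, and amounts in the sample response are invented for illustration, and the helper names are hypothetical.

```python
from collections import defaultdict
from decimal import Decimal


def cost_query(start: str, end: str, granularity: str = "DAILY") -> dict:
    """Build keyword arguments for Cost Explorer's GetCostAndUsage API."""
    if granularity not in {"HOURLY", "DAILY", "MONTHLY"}:
        raise ValueError(f"unsupported granularity: {granularity}")
    return {
        "TimePeriod": {"Start": start, "End": end},  # End date is exclusive
        "Granularity": granularity,
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "DIMENSION", "Key": "SERVICE"}],
    }


def total_by_service(response: dict) -> dict:
    """Sum UnblendedCost per service across all returned time periods."""
    totals = defaultdict(Decimal)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            amount = group["Metrics"]["UnblendedCost"]["Amount"]
            totals[group["Keys"][0]] += Decimal(amount)
    return dict(totals)


query = cost_query("2024-01-01", "2024-01-03")

# Invented sample response; a real one would come from:
#   import boto3
#   boto3.client("ce").get_cost_and_usage(**query)
sample_response = {
    "ResultsByTime": [
        {"Groups": [
            {"Keys": ["Amazon Elastic Compute Cloud - Compute"],
             "Metrics": {"UnblendedCost": {"Amount": "10.50", "Unit": "USD"}}},
            {"Keys": ["AWS Lambda"],
             "Metrics": {"UnblendedCost": {"Amount": "0.25", "Unit": "USD"}}},
        ]},
        {"Groups": [
            {"Keys": ["Amazon Elastic Compute Cloud - Compute"],
             "Metrics": {"UnblendedCost": {"Amount": "5.25", "Unit": "USD"}}},
        ]},
    ]
}
totals = total_by_service(sample_response)
for service, amount in sorted(totals.items()):
    print(f"{service}: {amount} USD")
```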

The synergy between finance and technology teams is essential for effective cost management. Financial experts, such as CFOs and financial planners, must understand the nuances of cloud consumption and collaborate with technology leaders to link technology expenditures to business outcomes. This partnership is critical in establishing a culture of cost-awareness that supports the business's strategic objectives.

GoDaddy's approach to optimizing their batch processing jobs demonstrates the practical application of AWS's cost management tools. By adopting a data-driven strategy and a structured methodology referred to as the seven layers of improvement opportunities, GoDaddy has been able to enhance operational efficiency and customer satisfaction.

To encapsulate the essence of cost management, it's crucial to consider both short-term operational costs and long-term architectural and organizational challenges. Embracing a culture of cost management without sacrificing value delivery or team motivation is paramount. As AWS pricing follows a pay-as-you-go model, users must diligently monitor and manage their usage to prevent unforeseen expenses, particularly with EC2 instances and storage services, which are priced based on various factors such as type, size, region, and data access frequency.
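Under a pay-as-you-go model, the cost of a fleet is roughly the hourly rate multiplied by the number of instances and the hours they run. The sketch below compares an always-on fleet with one that runs only during working hours. The rate of 0.10 USD per hour is a hypothetical figure, not an AWS price; actual rates depend on instance type, size, and Region.

```python
from decimal import Decimal


def monthly_instance_cost(hourly_rate_usd: str, instance_count: int,
                          hours_per_day: int, days: int = 30) -> Decimal:
    """Estimate on-demand cost: rate x instances x hours per day x days."""
    if not 0 <= hours_per_day <= 24:
        raise ValueError("hours_per_day must be between 0 and 24")
    return Decimal(hourly_rate_usd) * instance_count * hours_per_day * days


# Hypothetical rate of 0.10 USD per instance-hour for a fleet of 4 instances.
always_on = monthly_instance_cost("0.10", 4, hours_per_day=24)
working_hours = monthly_instance_cost("0.10", 4, hours_per_day=10)
print(always_on, working_hours, always_on - working_hours)
```

The estimate leaves out storage, data transfer, and any discounts, so it is a starting point for monitoring rather than a billing forecast.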

In summary, AWS provides a comprehensive suite of tools and practices to assist in cost management and optimization. By fostering collaboration between finance and technology, enabling detailed cost analysis tools like AWS Cost Explorer, and learning from real-world examples like GoDaddy, organizations can effectively manage their AWS costs, leading to significant savings and a maximized return on investment.

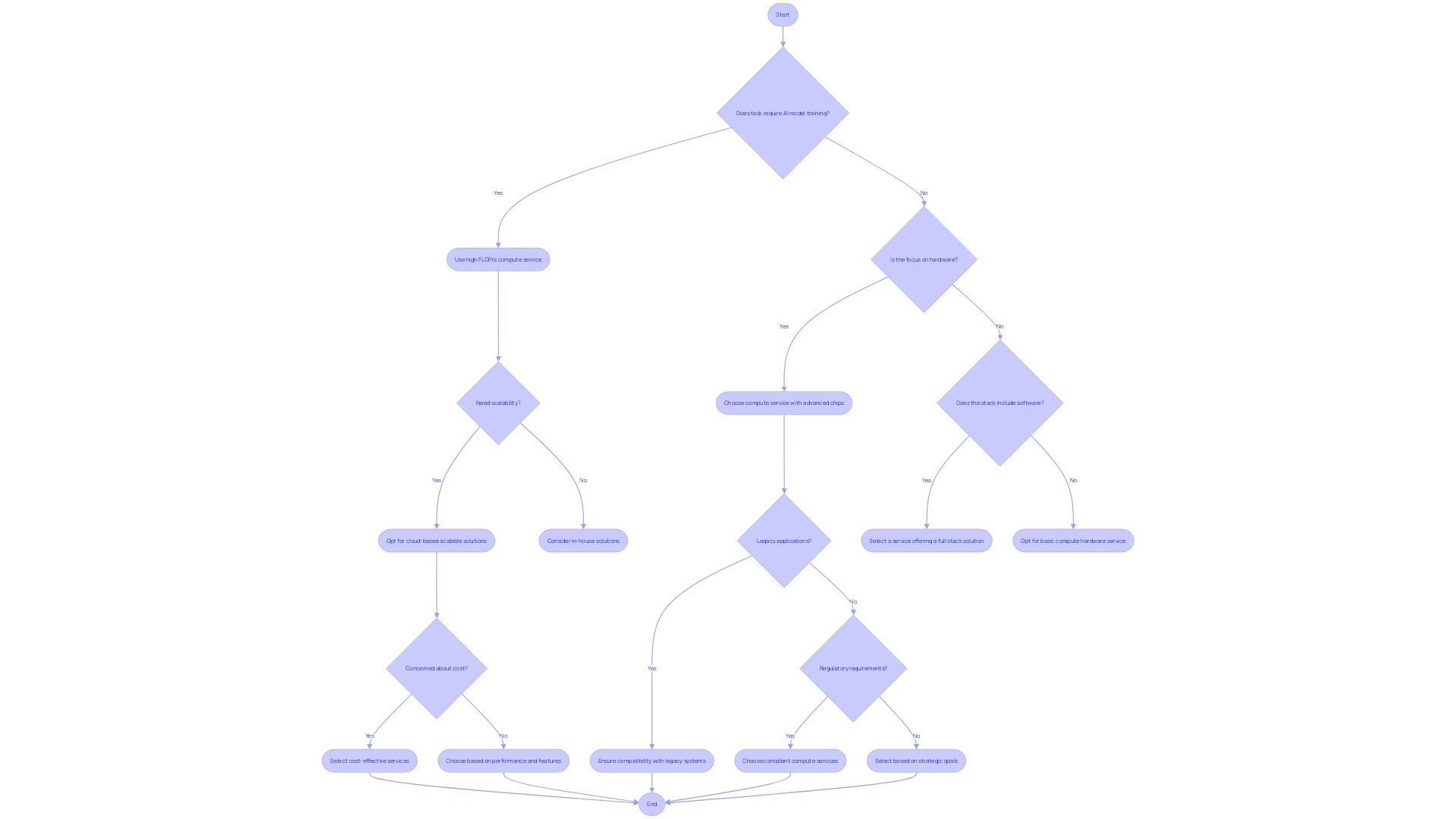

Choosing the Right AWS Compute Service for Your Needs

Choosing an AWS compute service that aligns with your company's needs requires a nuanced understanding of the specific demands of your workloads. For example, industrial giants like ICL must monitor machinery under extreme conditions, where deploying sensors for remote monitoring is impractical. They've relied on manual inspections to prevent revenue loss and environmental harm, but this method does not scale well and is cost-intensive. On the other hand, organizations like the TR Labs team, which started small and blossomed to over 100 employees, face the challenge of managing complex and diverse AI/ML models as they scale, necessitating robust compute options that can handle such growth seamlessly.

With generative AI models necessitating formidable compute resources due to their billions of parameters, companies are seeking efficient ways to harness computing power that pairs with their expansion. AWS offers a range of services, including Amazon EC2, to cater to these evolving needs. EC2 exemplifies the scalability and elasticity required for modern workloads, enabling businesses to utilize cutting-edge hardware with a flexible pay-as-you-go model.

However, the decision to build in-house solutions or to buy services like SaaS applications and managed services hinges on an organization's capabilities and strategic goals. For those with mature development teams, creating custom solutions might be viable, while others may find value in purchasing services that offload the responsibilities of scale and maintenance. This trade-off is particularly pertinent for those handling legacy applications or facing stringent regulatory or data residency requirements, where on-premise solutions might be more appropriate.

When it comes to compute services, the choices range from Lambda for on-demand operations to more traditional server-based options. The key is to assess the specific requirements of your project, be it AI/ML workloads or resource-intensive applications, and to consider the implications on cost, performance, sustainability, and ease of use. As one industry expert noted, selecting the right infrastructure for generative AI workloads is a delicate balance that can significantly affect an organization's overall efficiency and return on investment.
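The assessment described above can be written down as a rough decision helper. The rules below are a simplified rule of thumb assumed for illustration, not official AWS guidance. The one hard limit encoded is Lambda's maximum function timeout of 15 minutes; real decisions would also weigh cost, performance, sustainability, and team experience.

```python
LAMBDA_MAX_TIMEOUT_SECONDS = 900  # Lambda functions can run for at most 15 minutes


def suggest_compute_service(*, needs_os_control: bool, queued_batch_jobs: bool,
                            event_driven: bool, containerized: bool,
                            max_runtime_seconds: int) -> str:
    """Return a first-guess AWS compute service for a workload profile."""
    if needs_os_control:
        return "Amazon EC2"  # full control over the server and its OS
    if queued_batch_jobs:
        return "AWS Batch"  # many jobs that run to completion from a queue
    if event_driven and max_runtime_seconds <= LAMBDA_MAX_TIMEOUT_SECONDS:
        return "AWS Lambda"  # short, event-triggered functions
    if containerized:
        return "AWS Fargate with Amazon ECS or Amazon EKS"
    return "Amazon EC2"  # general-purpose fallback


choice = suggest_compute_service(
    needs_os_control=False, queued_batch_jobs=False,
    event_driven=True, containerized=False, max_runtime_seconds=30,
)
print(choice)  # AWS Lambda
```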

As AWS continues to evolve, offering various compute services to accommodate different scenarios, it is vital to stay informed and choose wisely to ensure that the technology serves the business effectively and positions it for future growth.

Getting Started with AWS Compute Services

Embarking on the journey with AWS compute services equips you with a formidable toolset for tackling a variety of computational tasks, from machine learning to high-performance computing. AWS, or Amazon Web Services, is a robust suite offering over 200 cloud services, including but not limited to computing, storage, and databases. These services cater to both businesses and individuals, providing on-demand, scalable resources over the internet for developing, deploying, and managing complex applications.

Among the vast offerings, AWS EC2 (Elastic Compute Cloud) stands out as a pivotal service, offering scalable computing in the cloud. Compute resources are the brain of cloud operations: they do the processing work that CPUs and memory do in a physical machine, but within cloud infrastructure. AWS compute services are versatile, encompassing virtual machines, serverless computing options like AWS Lambda, and container orchestration services such as Amazon ECS and Amazon EKS, AWS's managed Kubernetes offering.

To begin leveraging these services, create an AWS free tier account. This account allows you to explore and use certain services without cost, providing a practical starting point for newcomers. Register by providing your email and account name, and upon email verification, you can access the AWS Management Console, a centralized platform for managing AWS services.

Real-world applications of AWS compute services are extensive and transformative. For instance, ICL, a multinational manufacturing and mining corporation, faced challenges in monitoring industrial equipment under harsh conditions. By adopting AWS technologies, they could significantly reduce manual monitoring, mitigate potential revenue losses, and prevent environmental damages through enhanced remote monitoring capabilities.

The demand for computational power has surged with the advent of generative AI models and large language models (LLMs), which have seen exponential growth in parameters over the past five years. These advancements necessitate robust computing resources, which AWS compute services are well-equipped to provide, ensuring that customers can continue to push technological boundaries and deliver high-fidelity products and experiences across various industries.

Best Practices and Use Cases

Optimizing AWS compute services means improving performance, security, and cost-efficiency, all of which are critical to an organization's cloud infrastructure. GoDaddy, a global leader with over 20 million customers, exemplifies this focus. By adopting a structured approach called the seven layers of improvement opportunities, they have homed in on optimizing batch processing jobs to drive efficiency and customer satisfaction.

Financial prudence in cloud computing is equally important. Companies like Reyki AI, founded by cloud computing and AI experts, have emerged to help businesses manage cloud expenses. By leveraging software intelligence, automation, and pricing optimizations, Reyki AI clients have seen savings of up to 65% on cloud compute costs within months.

When it comes to making decisions between buying services or building in-house solutions, organizations need to weigh time, effort, and cost. With AWS's scalable and elastic infrastructure, companies are able to modernize workloads efficiently. However, the transition to the cloud can be complex, especially for organizations with legacy applications or specific regulatory requirements.

Best practices are critical for performance efficiency and cost optimization within AWS environments. Organizations should conduct a Well-Architected Framework review to ensure that their cloud infrastructure is aligned with industry-leading design principles and technical best practices. This approach is essential for building and operating cost-aware workloads that not only minimize expenses but also maximize return on investment, allowing organizations to focus on their core business objectives.

References

From pharmaceuticals to online gaming, organizations are harnessing the power of AWS Compute Services to transform their operations. Vertex Pharmaceuticals, for example, has revolutionized drug discovery by employing machine learning techniques for analyzing microscopic images. They've trained ML models to measure the effects of drug candidates on biological cells, a process that once required laborious manual analysis. This innovation illustrates the critical role of high-performance computing in handling large datasets and complex computations.

Chess.com, the world's premier online chess platform with over 150 million users, leverages AWS to maintain and scale its IT infrastructure, ensuring a seamless experience for chess enthusiasts globally. The platform's dedication to providing a digital chess environment has united players from diverse regions, underscoring the importance of reliable and scalable cloud services.

The demand for compute resources is not limited to specific industries. Cutting-edge generative AI models and high-performance computing applications across various sectors require unprecedented levels of computing power. The exponential growth of large language models—expanding from billions to hundreds of billions of parameters within a few years—has improved their natural language processing capabilities. However, this also poses significant computational and resource challenges, necessitating potent infrastructure like GPUs for training and deploying these models.

Selecting the right infrastructure for these intensive tasks is crucial. It impacts cost, performance, sustainability, and user experience. As the digital economy grows, data centers—valued at approximately $250 billion—serve as the backbone, providing the necessary compute power, connectivity, and security. This infrastructure supports the demanding workloads of AI, revealing the symbiotic relationship between technological advancements and the evolution of cloud computing services.

Conclusion

In conclusion, AWS provides a comprehensive range of compute services like Amazon EC2, AWS Lambda, AWS Batch, and more. These services offer scalability, reliability, and cost-effectiveness, empowering businesses to thrive in the digital age.

Amazon EC2 delivers scalable virtual servers tailored to specific requirements, allowing businesses to manage computing resources effectively. AWS Lambda revolutionizes computing by focusing on code rather than server infrastructure, offering scalability and reduced operational overhead. AWS Batch optimizes resource provisioning for batch computing, handling workloads of any size.

Other services like Amazon Lightsail, ECS, EKS, and Fargate streamline deployment and management of applications, while Outposts and Local Zones bridge the gap between cloud services and local data processing needs.

AWS provides tools and best practices for cost and capacity management, ensuring optimized expenses and resources. Choosing the right compute service requires considering scalability, cost, performance, sustainability, and ease of use. With AWS, organizations have a comprehensive suite of compute services to scale operations, optimize costs, and focus on core objectives.

Taken together, these services let organizations spend less effort on infrastructure management and more on innovation and growth.