Introduction

Docker Compose and Kubernetes are two essential tools in the realm of container orchestration, each offering distinct capabilities to meet various development and deployment needs. Docker Compose simplifies the creation and management of multi-container applications on a single host, making it ideal for development and testing scenarios. On the other hand, Kubernetes excels in orchestrating containerized applications across clusters of machines, providing scalability and resilience for production environments.

In this article, we will explore the key differences between Docker Compose and Kubernetes, their use cases, and how they can be used together. We will delve into topics such as scalability and orchestration, multi-node clusters, service discovery and load balancing, as well as the ecosystem and community support for both tools. By understanding the strengths and nuances of Docker Compose and Kubernetes, developers can make informed decisions when choosing the right tool for their specific needs.

So let's dive in and explore these powerful tools that are shaping the landscape of modern software development and deployment.

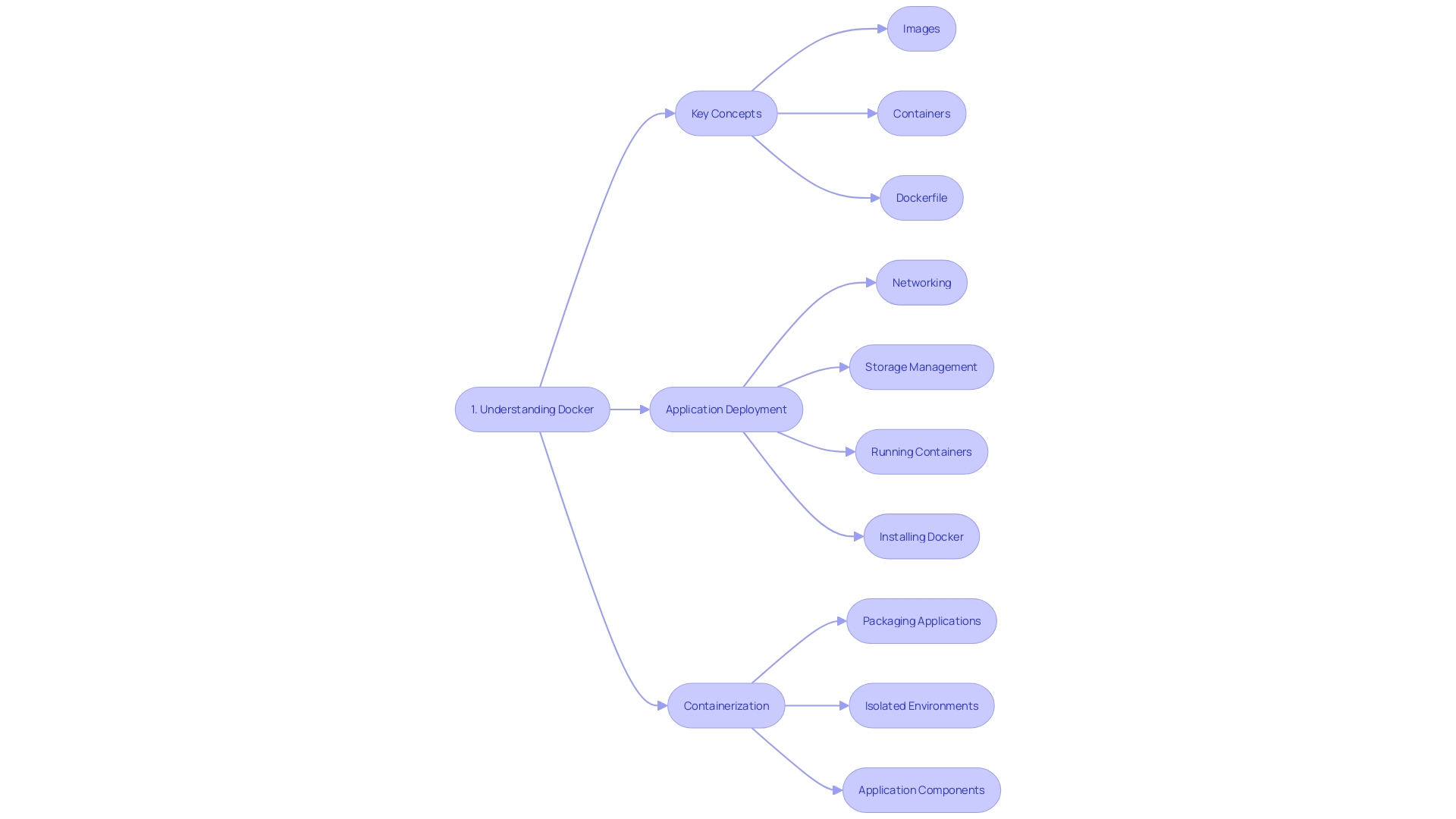

What is Docker Compose?

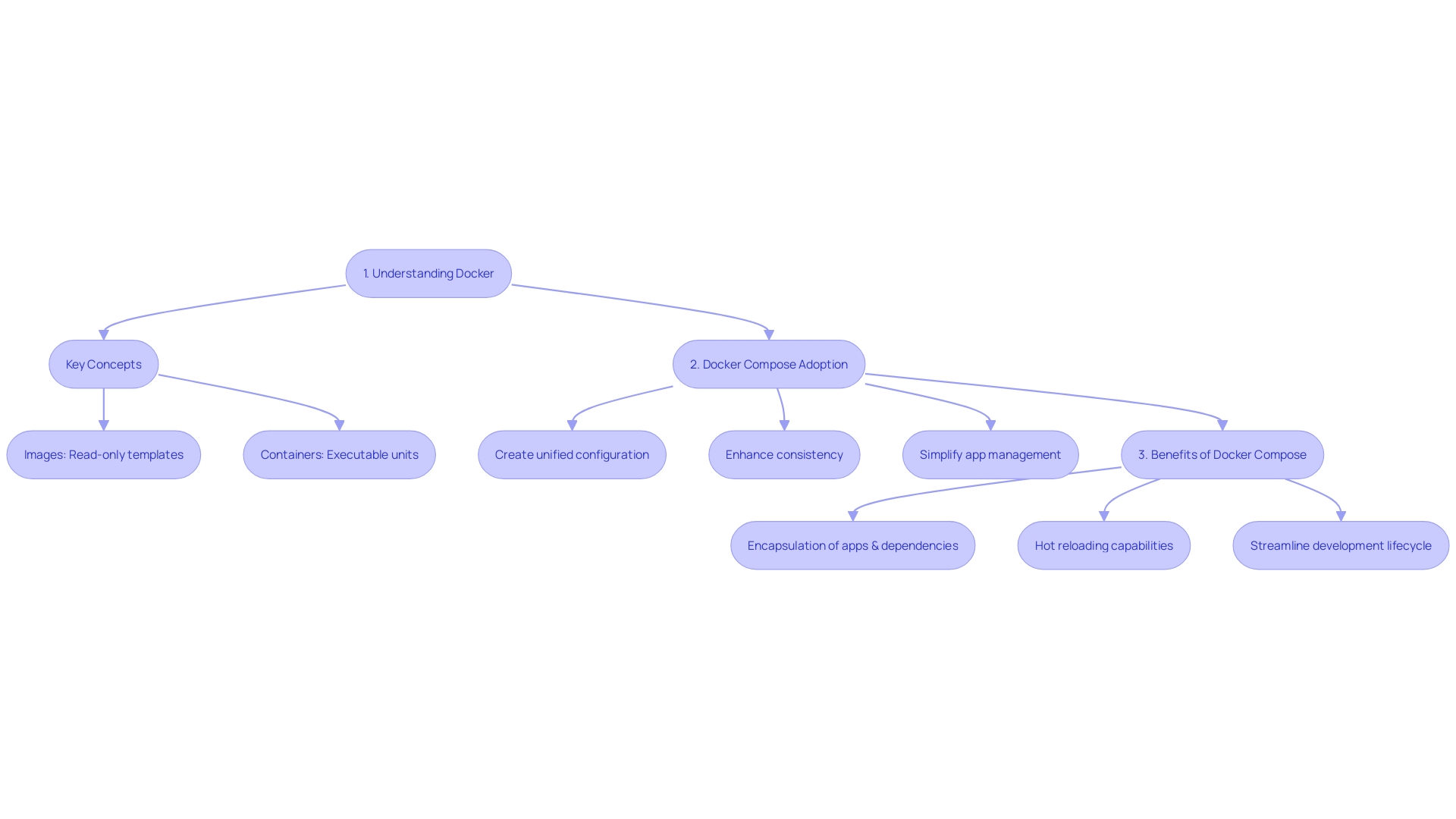

Docker Compose stands as a pivotal tool for developers, particularly in complex environments where multiple containerized applications must interoperate flawlessly. By utilizing a straightforward YAML file, Docker Compose orchestrates the creation and management of services, networks, and volumes, which are essential components of any robust application ecosystem.
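To make the shape of such a file concrete, here is a minimal Python sketch that models a small Compose file as a dictionary and checks that every network and named volume a service uses is declared at the top level. The top-level keys `services`, `networks`, and `volumes` follow the Compose file format; the service names, images, and the `check_references` helper are hypothetical and only illustrate the idea.

```python
# Hypothetical two-service application; names and images are illustrative only.
compose = {
    "services": {
        "web": {
            "image": "nginx:alpine",
            "ports": ["8080:80"],
            "networks": ["backend"],
            "depends_on": ["db"],
        },
        "db": {
            "image": "postgres:16",
            "networks": ["backend"],
            "volumes": ["db-data:/var/lib/postgresql/data"],
        },
    },
    "networks": {"backend": {}},
    "volumes": {"db-data": {}},
}


def check_references(doc):
    """Return a list of problems: networks or named volumes used but not declared."""
    problems = []
    declared_networks = set(doc.get("networks", {}))
    declared_volumes = set(doc.get("volumes", {}))
    for name, service in doc["services"].items():
        for network in service.get("networks", []):
            if network not in declared_networks:
                problems.append(f"{name}: undeclared network {network!r}")
        for mount in service.get("volumes", []):
            source = mount.split(":", 1)[0]
            # Sources starting with "." or "/" are treated as bind mounts,
            # not named volumes (a simplification for this sketch).
            if not source.startswith((".", "/")) and source not in declared_volumes:
                problems.append(f"{name}: undeclared volume {source!r}")
    return problems


print(check_references(compose))
```

The real tool performs far more validation than this; the sketch only shows how services, networks, and volumes relate to one another within one file.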

Consider the example of Tabcorp, Australia's premier wagering and gaming company. Its many teams deployed services written in Java, Scala, Node.js, and .NET directly to hardware, which created a tangled set of development and deployment challenges. Adopting Docker Compose gave them a unified configuration file, docker-compose.yml, which improved consistency across environments and simplified the management of their multifaceted applications.

This approach matters because it addresses a familiar problem. As one industry expert put it, Docker Compose helps overcome the notorious situation where 'code runs on my machine but nowhere else.' Because each container encapsulates the application and its dependencies, the application behaves consistently whether it runs on a developer's local machine or a production server.

Recent releases have also added hot reloading to Docker Compose through the docker compose watch command, which syncs source changes into running containers or rebuilds them automatically. Developers no longer need to rebuild and recreate containers by hand after each edit, which is a significant improvement to the development workflow.

This evolution aligns with Docker's overarching aim, as articulated during DockerCon, to streamline the development lifecycle and obviate repetitive configuration tasks. Docker's innovative direction, with the integration of AI and an emphasis on security and supply chain management, promises to further simplify and enhance the developer experience.

The 2024 Docker State of Application Development Report underscores Docker's growing impact, with findings from a comprehensive survey of over 1,300 respondents. These insights reveal Docker's role in standardizing development practices and addressing prevalent industry challenges, highlighting its significance as a cornerstone in the realm of software development.

What is Kubernetes?

Kubernetes, also referred to as K8s, has revolutionized the orchestration of containerized applications by providing an open-source framework to automate their deployment, scaling, and operational management. This platform facilitates a declarative configuration for managing your application's containers along with their networking and storage needs, thereby creating an ecosystem that is both highly scalable and resilient.

As an illustration of Kubernetes' impact, consider the case of Tabcorp, Australia's leading provider of wagering and gaming services. The company faced a complex development environment in which teams deployed services written in Java, Scala, Node.js, and .NET directly onto hardware. This approach led to inconsistencies that were compounded by a manual, Ansible-based setup process. By adopting Kubernetes, organizations like Tabcorp can streamline their deployment processes and achieve more consistent outcomes.

Another example is Chess.com, the world's premier chess platform with over ten million daily games and a community of more than 150 million users. The company's commitment to nurturing the game's growth and fostering connections among chess enthusiasts worldwide has necessitated a robust and stable IT infrastructure. Kubernetes plays a pivotal role in enabling Chess.com to deliver a seamless digital chess experience to its global audience.

Over the past decade, Kubernetes has been a driving force in cloud-native computing. As Docker simplified running applications in containers, Kubernetes emerged as the solution for managing those containers at scale. The result is a paradigm shift: applications are no longer confined to the servers or clouds they were originally built on but are highly portable, allowing businesses to operate more efficiently and at reduced cost.

These real-world use cases underscore the transformative power of Kubernetes in today's technological landscape, as it continues to influence new directions in computing and remains an essential tool for companies aiming to innovate and stay competitive in the ever-evolving market.

Key Differences: Docker Compose vs. Kubernetes

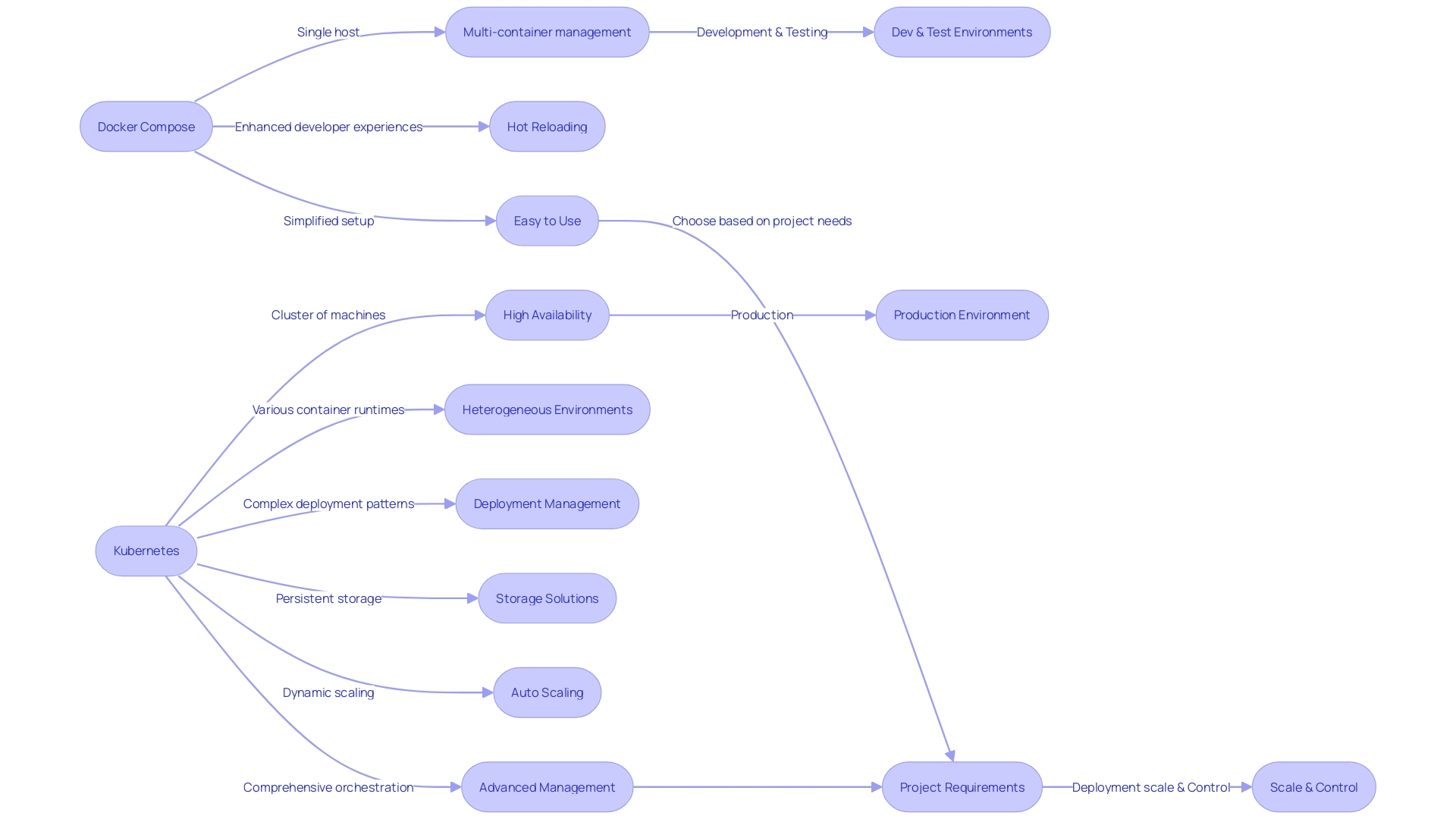

Docker and Kubernetes are pivotal in the orchestration of modern software applications, each with distinct capabilities tailored to different development and deployment needs. Docker Compose, a tool within the Docker ecosystem, excels in creating and managing multi-container applications on a single host. This makes it ideal for development and testing scenarios where simplicity and speed are desired. In contrast, Kubernetes is architected to orchestrate containerized applications across a cluster of machines, providing the resilience and scalability necessary for production environments.

While Docker Compose streamlines the workflow for Docker containers, Kubernetes is not limited to Docker and can manage a variety of container runtimes. This flexibility is crucial in heterogeneous environments where a mix of container technologies coexists. Kubernetes' complexity can be daunting, but it offers a comprehensive set of features to handle intricate deployment patterns, persistent storage, and dynamic scaling.

The evolution of Docker, as showcased at DockerCon, continues to enhance developer experiences with features like hot reloading in Docker Compose, which simplifies the iterative development process. Meanwhile, companies like Tabcorp navigate the complexities of deployment across diverse teams and services by leveraging container orchestration to achieve consistency and efficiency.

As the industry progresses, the Docker State of Application Development Report illuminates ongoing trends, including the adoption of cloud services, AI/ML integration, and the microservices architecture. The insights from over 1,300 developers emphasize the community's drive towards more agile, secure, and innovative application development practices.

Overall, the choice between Docker Compose and Kubernetes hinges on the specific requirements of the project, the scale of deployment, and the desired level of control over container management. Both tools play a crucial role in the containerization ecosystem, empowering developers to build and deploy applications with confidence and precision.

Scalability and Orchestration

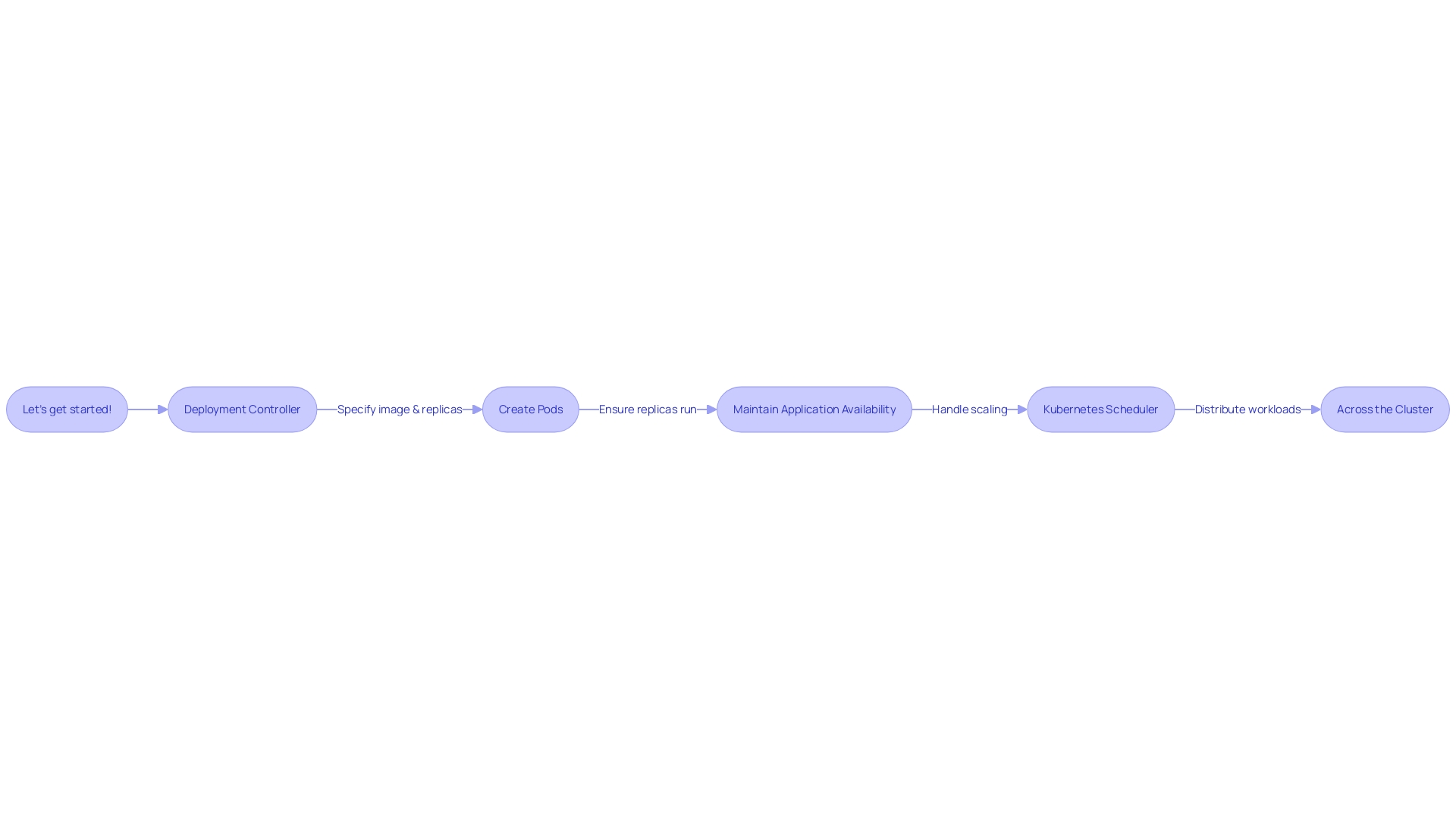

Kubernetes stands out as a robust solution for managing containerized applications, thanks to its advanced orchestration capabilities. It is designed to maintain application availability and handle scaling through its pod-based architecture. Each pod, a group of one or more containers, is the smallest deployable unit within a Kubernetes cluster. Controllers such as Deployments and ReplicaSets continuously compare the number of running pods with the desired count and automatically replace failed pods, so the application keeps running without manual intervention. The Kubernetes Scheduler complements this by assigning each new pod to a suitable node based on available resources, which helps keep utilization balanced across the cluster.
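The self-healing behavior described here can be pictured as a control loop that repeatedly compares desired state with observed state. The following Python sketch is a deliberately simplified model of that idea, not the Kubernetes API: the `reconcile` function, the pod dictionaries, and the `web-` naming scheme are all hypothetical.

```python
import itertools

# Hypothetical counter used only to give replacement pods unique names.
_pod_ids = itertools.count(1)


def reconcile(desired_replicas, pods):
    """One pass of a control loop: drop failed pods, then add or remove pods
    until the number of running pods matches the desired count."""
    running = [p for p in pods if p["status"] == "Running"]
    while len(running) < desired_replicas:
        running.append({"name": f"web-{next(_pod_ids)}", "status": "Running"})
    # Scale down by keeping only the first desired_replicas pods.
    return running[:desired_replicas]


observed = [
    {"name": "web-a", "status": "Running"},
    {"name": "web-b", "status": "Failed"},
    {"name": "web-c", "status": "Running"},
]
healed = reconcile(3, observed)
print([p["name"] for p in healed])
```

In a real cluster this comparison runs continuously, and the replacement pods are handed to the scheduler for placement rather than created in place.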

In contrast, Docker Compose has no automated scaling. A service can be run as several replicas on one host (for example with the --scale option of docker compose up), but the replica count must be changed by hand, and reacting to load requires external tools or manual configuration. This becomes cumbersome in environments that demand high scalability and rapid adjustment to load variations.

The importance of Kubernetes' self-healing and scaling capabilities is exemplified by platforms like Chess.com, which hosts millions of chess games daily for a global community. The platform's infrastructure, led by James Kelty, utilizes Kubernetes to manage its vast, ever-growing user base, ensuring a stable and scalable environment that delivers a seamless experience to chess enthusiasts around the world.

Moreover, Kubernetes' persistent storage model addresses various data access needs through volume access modes, whether that means giving many pods simultaneous read-write access (ReadWriteMany) or restricting a volume to a single pod (ReadWriteOncePod). Updates to Kubernetes, such as the shift from cgroups v1 to cgroups v2, further reflect its focus on reducing complexity and maintaining the desired state for workloads.

In the evolution of application deployment, organizations once ran several applications on the same physical servers with no way to define resource boundaries, which led to resource allocation issues. Kubernetes addresses this by letting each container declare resource requests and limits. It also offers the flexibility to scale applications with simple commands or automatically based on CPU usage through the Horizontal Pod Autoscaler, which makes it an agile and cost-effective platform for modern application deployment.
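Scaling on CPU usage is handled by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The Python sketch below applies that rule; the function name, parameter names, and the replica bounds are assumptions chosen for illustration, and the 10% tolerance mirrors the documented default rather than any particular cluster's setting.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the autoscaling ratio rule, skipping changes within the tolerance
    band and clamping the result to the configured replica bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Four pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

With four pods at 90% average CPU and a 60% target, the ratio is 1.5, so the sketch asks for six pods. The real autoscaler adds further safeguards, such as stabilization windows, that are left out here.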

Multi-Node Clusters

Kubernetes has emerged as a powerful orchestrator for complex, multi-node cluster environments, distributing application workloads across the available machines. It automatically manages critical aspects such as load balancing, fault tolerance, and rescheduling pods when they fail. In contrast, Docker Compose is tailored for simpler, single-host deployments and lacks native capabilities for managing multi-node clusters.
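Placing workloads across nodes follows a filter-then-score pattern: rule out nodes that cannot fit the pod, then rank the remainder. The Python sketch below illustrates that pattern under simplifying assumptions. The node and pod dictionaries, the millicore and MiB units, and the "most free CPU wins" scoring rule are hypothetical; the real scheduler weighs many more factors.

```python
def schedule(pod, nodes):
    """Pick a node for the pod: filter out nodes that cannot fit it,
    then choose the node with the most CPU left after placement."""
    feasible = [
        n for n in nodes
        if n["free_cpu"] >= pod["cpu"] and n["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None  # nothing fits; the pod would remain unscheduled
    best = max(feasible, key=lambda n: n["free_cpu"] - pod["cpu"])
    # Reserve the resources so later placements see the updated capacity.
    best["free_cpu"] -= pod["cpu"]
    best["free_mem"] -= pod["mem"]
    return best["name"]


# CPU in millicores, memory in MiB (illustrative values).
nodes = [
    {"name": "node-a", "free_cpu": 2000, "free_mem": 4096},
    {"name": "node-b", "free_cpu": 4000, "free_mem": 2048},
]
print(schedule({"cpu": 1000, "mem": 1024}, nodes))
```

Docker Compose has no equivalent step, because every container it starts runs on the single host where the command is executed.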

As applications evolve and expand, running multiple Kubernetes clusters offers a way around the bottlenecks of single-cluster setups, such as limited resources and the need for fault isolation. Deploying clusters across several data centers or cloud regions improves resource allocation, provides geographical redundancy, and caters to specific workload requirements. Combined with global traffic routing in front of the clusters, this approach supports high availability and reduces latency by directing users to the nearest cluster, which improves the user experience.

Considering the geographic distribution of users, Kubernetes multi-cluster deployment stands out by ensuring that service remains uninterrupted, even in the face of regional outages or disasters. This strategic placement of clusters near user bases enables quick failover and recovery, ensuring that your application's reach is unaffected by localized incidents.

Over the past decade, Kubernetes has become a pivotal force in cloud-native computing, transforming the way applications are orchestrated. It built on Docker's innovation of containerization, which revolutionized application deployment by allowing for greater efficiency and portability across platforms. The combination of Docker's containerization with Kubernetes' orchestration capabilities creates a powerful ecosystem for modern software development.

In the landscape of hybrid and multi-cloud environments, Kubernetes facilitates a flexible approach, allowing organizations to leverage both public and private clouds. This adaptability ensures that sensitive workloads can remain on-premise while taking advantage of public cloud resources for other tasks, effectively managing costs without compromising on functionality.

In summary, while Docker Compose serves as an excellent starting point for single-host applications, Kubernetes offers a scalable, resilient infrastructure for larger, more complex deployments, ensuring your applications remain robust and responsive, regardless of scale or global distribution.

Service Discovery and Load Balancing

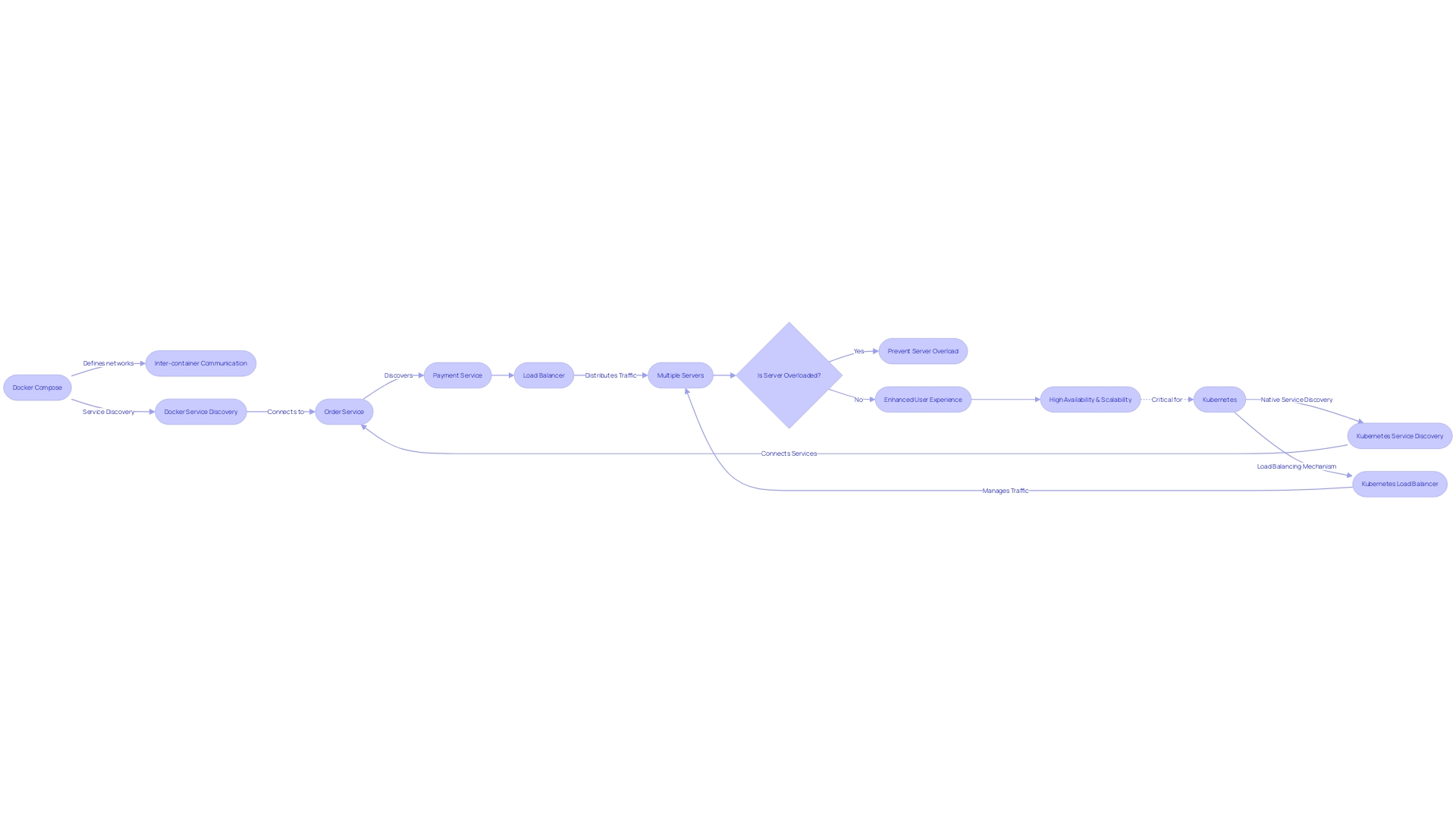

Docker Compose and Kubernetes both support microservices, but they differ in how they handle service discovery and load balancing. Docker Compose lets containers reach one another by service name over user-defined networks, yet it does not include a dedicated load balancer. Kubernetes, by contrast, provides native service discovery and load balancing. You define a Service that exposes a set of pods to other pods within the cluster under a stable name and address, and traffic is distributed among the pods behind it.

The Kubernetes service abstraction is critical for handling dynamic pod IP assignment, a common occurrence as pods are routinely destroyed and recreated with deployments. This abstraction allows for seamless communication between services, such as in an online shopping platform, where the order service dynamically discovers and connects with the payment service without manual IP tracking.
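The idea of a stable name in front of changing pod addresses can be sketched in a few lines of Python. This is a conceptual model only, not the Kubernetes API: the `Service` class, the `payments` name, and the IP addresses are hypothetical, and round-robin selection is an assumption made for simplicity (a real cluster may pick backends differently).

```python
class Service:
    """A stable name in front of a changing set of pod addresses."""

    def __init__(self, name):
        self.name = name
        self._endpoints = []
        self._next = 0

    def set_endpoints(self, addresses):
        """Called whenever pods behind the service are created or destroyed."""
        self._endpoints = list(addresses)
        self._next = 0

    def pick(self):
        """Return the next backend address in round-robin order."""
        if not self._endpoints:
            raise LookupError(f"no ready endpoints for service {self.name!r}")
        address = self._endpoints[self._next % len(self._endpoints)]
        self._next += 1
        return address


payments = Service("payments")
payments.set_endpoints(["10.0.0.4:8080", "10.0.0.7:8080"])
print([payments.pick() for _ in range(3)])

# A rollout replaces the pods; callers keep using the same service object.
payments.set_endpoints(["10.0.0.9:8080"])
print(payments.pick())
```

The order service in the shopping example would only ever hold a reference to `payments`, never to an individual pod address, which is why pod churn does not break it.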

Recent releases elsewhere in the industry, such as Matter 1.2, which enhances device interoperability and security, and CloudCasa for Velero, which offers versatile container backup solutions, reflect the same broad demand for dependable infrastructure around modern, scalable applications.

Moreover, industry movements such as Arm Holdings' successful IPO signal strong market confidence in companies that are integral to AI and microservices transitions, and they reflect the growing importance of service orchestration tools like Kubernetes in the tech ecosystem. With these trends, the ability to dynamically manage service instances and ensure high availability becomes paramount, echoing guidance from AWS about designing systems that expect failures and recover from them.

According to Docker's State of Application Development Report, developers increasingly rely on containerization for consistent and reproducible deployments. This reality places a spotlight on Kubernetes' service discovery as an indispensable feature for maintaining the flexibility and scalability of services that operate in a distributed system. In conclusion, Kubernetes' built-in service discovery and load balancing capabilities are essential for modern applications that demand dynamic scaling and robust interaction between microservices.

Ecosystem and Community Support

Docker Compose and Kubernetes are pivotal in the modern landscape of software development, each with robust ecosystems and strong community support. Docker Compose, a part of the Docker suite, simplifies the process of defining and sharing multi-container applications. With the Docker platform's extensive library of pre-built images, developers can streamline the creation and deployment of applications. Meanwhile, Kubernetes has emerged as a powerful orchestration tool, enjoying broad support from industry giants like Google, Microsoft, and Amazon. Its ecosystem is replete with a wide array of extensions and integrations, which enhances its usefulness across various platforms and tools.

The 2024 Docker State of Application Development Report, detailing responses from over 1,300 professionals, highlights the growth and influence of Docker within the development community. The report reflects the evolving nature of development practices, emphasizing Docker's role in supporting developers with a plethora of tools that foster innovation and agility. Docker's commitment to the 'shift-left' paradigm, as revealed during DockerCon 2023, further underscores its dedication to enhancing security and developer experience.

Kubernetes is not left behind in this progressive wave, as showcased by the introduction of Upbound's Spaces, which leverages Crossplane to offer a more accessible Kubernetes control plane. Such initiatives demonstrate Kubernetes' adaptability and its drive to simplify cloud-native operations for developers.

Both Docker and Kubernetes are shaping the development ecosystem, each with its particular strengths. Docker's utility in building, sharing, and running applications across various environments is mirrored by Kubernetes' capabilities in managing complex, large-scale deployments. Together, they provide a comprehensive toolkit for developers navigating the ever-changing landscape of application development and deployment.

Use Cases for Docker Compose

Utilizing Docker Compose, developers can orchestrate multiple containers that interconnect seamlessly, replicating complex development and testing environments with ease. This utility is particularly advantageous for constructing consistent local development settings that mirror production ecosystems, allowing for streamlined testing and debugging.

Docker Compose serves as a pivotal tool for development teams, such as those at Tabcorp, that face the challenge of deploying a diverse array of services written in Java, Scala, Node.js, and .NET directly onto hardware. By letting teams define service interdependencies and automating container deployment, Docker Compose helps avoid the inconsistencies that previously plagued their development lifecycle.
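Declared interdependencies translate into a start order. The Python sketch below derives one from `depends_on` entries using a topological sort from the standard library; the service names and the `start_order` helper are hypothetical. Note that in Compose, `depends_on` by default governs start order only, not whether a dependency is ready to accept connections.

```python
from graphlib import TopologicalSorter

# Hypothetical services; only the dependency edges matter for this sketch.
services = {
    "web": {"depends_on": ["api"]},
    "api": {"depends_on": ["db", "cache"]},
    "db": {},
    "cache": {},
}


def start_order(services):
    """Return service names ordered so each one follows its dependencies."""
    graph = {
        name: set(config.get("depends_on", []))
        for name, config in services.items()
    }
    # TopologicalSorter expects a mapping of node -> predecessors and
    # raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


order = start_order(services)
print(order)
```

The relative order of independent services such as `db` and `cache` is not significant; only the constraint that dependencies come first is.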

In the realm of artificial intelligence development, where setting up an environment can be daunting, Docker Compose proves invaluable. A comprehensive AI development setup, including a machine learning manager, a distributed file server, and a robust database, can be initialized in under a minute. Developers and data scientists benefit from an expedited setup process, allowing them to focus on innovation rather than configuration.

Moreover, the adoption of Docker Compose extends to document management platforms like Alfresco, where the high number of pulls from Docker Hub indicates a strong reliance on containerization for content management services. Docker Compose's ability to streamline the setup of such services underscores its role in facilitating efficient workflows across various technological domains.

The Compose Specification has become a cornerstone in defining computing components as services within an application, thereby standardizing the deployment process. The transformative impact of Docker Compose is echoed by industry reports, highlighting Docker's significant influence on application development trends and the growing importance of cloud and AI/ML in software development. Developers are now able to manage infrastructure with the same agility as their applications, marking a paradigm shift in how software is developed and delivered.

Use Cases for Kubernetes

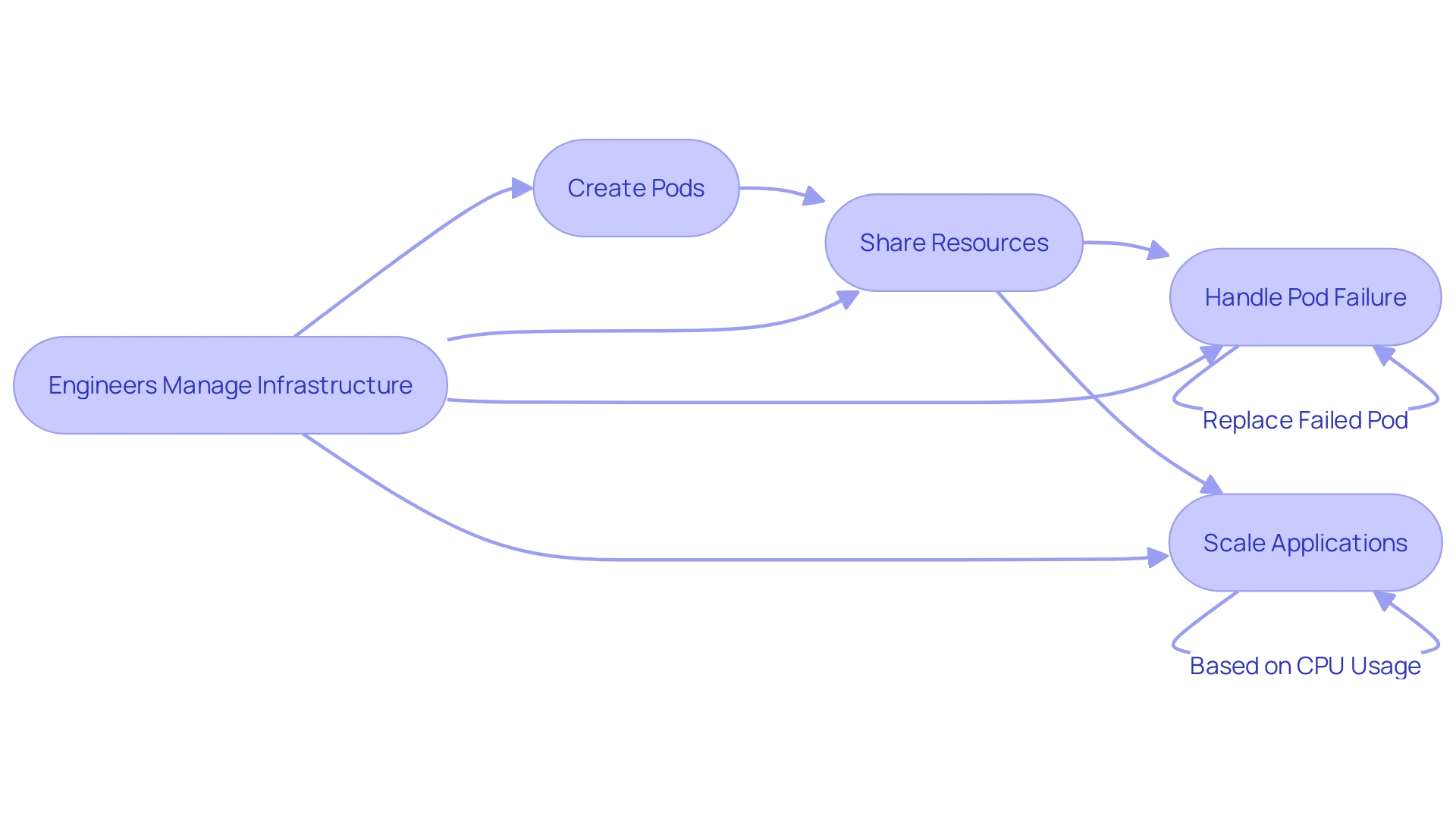

Kubernetes excels in production environments by ensuring high availability, scalability, and resilience, which are integral to complex microservices architectures and dynamic application scaling. This container orchestration tool, adopted by top enterprises globally, manages containerized applications across multiple servers, or nodes. The smallest deployable units, known as pods, can contain one or more containers that share resources. If a pod fails, Kubernetes automatically replaces it to maintain application uptime. This is the work of control loops run by Kubernetes controllers, while the Kubernetes Scheduler decides which node each replacement pod runs on.

For example, Chess.com, which facilitates over ten million daily chess games and caters to a community of 150 million users, leverages Kubernetes to maintain a robust IT infrastructure that spans public cloud and on-premises solutions, essential for worldwide user engagement. Similarly, PlanetScale deploys databases on Kubernetes, harnessing pods for resilience.

Moreover, Kubernetes streamlines scaling applications up or down with simple commands, UIs, or automatically based on CPU usage, a feature particularly beneficial for machine learning platforms. Microsoft engineers and the book 'Kubernetes Best Practices, Second Edition' likewise describe Kubernetes as enabling rapid iteration on client demands. However, Kubernetes' complexity typically calls for dedicated engineers to manage its infrastructure and ensure optimal use and deployment.

Can Docker Compose and Kubernetes be Used Together?

Docker Compose and Kubernetes can effectively complement each other in software development and deployment. Docker Compose simplifies the definition and management of multi-container applications through a clear YAML configuration file. Each service in that file can reference a Dockerfile, which encapsulates the application code, runtime, libraries, and dependencies. These Dockerfiles are used to build custom service images, which are needed for both the frontend and backend services of a project.

Kubernetes, on the other hand, excels in scaling and orchestrating complex applications. With cgroups v1 support moving into maintenance mode in favor of cgroups v2, Kubernetes is simplifying its codebase and focusing on the desired state of applications, as noted by Angelos Kolaitis from Canonical. This shift underscores Kubernetes' commitment to workload reliability and the seamless operation of production services.

The integration of Docker Compose within a Kubernetes environment allows developers to harness the simplicity of defining services with Docker Compose alongside the robust, scalable nature of Kubernetes. The Compose Specification defines computing components of an application as services, which, when implemented on platforms like Kubernetes, run efficiently and communicate through networks.
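One way to picture this mapping is to translate a single Compose service into a Kubernetes Deployment manifest. The Python sketch below does this for the simplest case only. The `to_deployment` helper and the sample service are hypothetical, the port handling assumes the short "HOST:CONTAINER" syntax, and it is not how any existing conversion tool (such as the Kompose project) is implemented.

```python
def to_deployment(name, service, replicas=1):
    """Build a minimal apps/v1 Deployment manifest from a Compose-style service."""
    labels = {"app": name}
    ports = [
        # Keep only the container side of a "HOST:CONTAINER" port mapping.
        {"containerPort": int(str(mapping).split(":")[-1])}
        for mapping in service.get("ports", [])
    ]
    container = {"name": name, "image": service["image"]}
    if ports:
        container["ports"] = ports
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


web = {"image": "nginx:alpine", "ports": ["8080:80"]}
manifest = to_deployment("web", web, replicas=3)
print(manifest["spec"]["template"]["spec"]["containers"])
```

A complete translation would also need a Service object for networking and persistent volume claims for storage, which is where the two tools' models diverge most.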

The significance of this integration is reflected in the real-world scenario faced by Tabcorp, Australia's largest provider of wagering and gaming products. Tabcorp tackled a complex web of development and deployment challenges by transitioning from a fragmented approach with diverse teams to a more unified solution, highlighting the importance of consistent and reliable development environments.

Moreover, the 2024 Docker State of Application Development Report, based on a comprehensive survey conducted by Docker’s User Research Team, emphasizes the growing adoption of tools like Docker and Kubernetes. This report provides insights into current industry trends, such as the rise of microservices and the shift-left approach to security, affirming the critical roles these technologies play in contemporary software development.

Choosing the Right Tool for Your Needs

Navigating the world of container orchestration can be daunting, yet understanding the differences between Docker Compose and Kubernetes is critical for modern application deployment. Docker Compose serves as a straightforward and efficient tool for defining and running multi-container Docker applications, making it ideal for smaller-scale projects or simple development environments. Its simplicity streamlines the process of configuring and initiating applications, but it lacks the robustness required for larger, production-grade deployments.

On the other hand, Kubernetes emerges as the superior solution when it comes to large-scale, complex applications that demand advanced orchestration, scalability, and high availability. Originating from the lessons learned by Google while managing containerized applications at immense scale, Kubernetes has evolved into a comprehensive platform that excels in managing and automating container operations. This includes provisioning, deploying, scaling up or down, and managing the lifecycle of containerized applications across clusters of hosts.

The evolution of application deployment from physical servers to cloud-based solutions underscores the importance of resource allocation and management. Kubernetes not only facilitates efficient resource use but also ensures that applications perform optimally by avoiding resource contention that was typical in earlier computing environments.

Moreover, the recent DockerCon announcements have demonstrated Docker's commitment to streamlining the development lifecycle. Features like hot reloading in Docker Compose applications, first introduced at DockerCon, have transformed the way developers build and iterate on their applications, pushing the boundaries of what container tools can offer in terms of productivity and collaboration.

As we consider the deployment of applications in today's fast-paced environment, it is crucial to select a tool that aligns with the project's scale and complexity. For small-scale ventures or individual developers, Docker Compose might suffice. However, for enterprises aiming for a resilient, scalable, and automated production environment, Kubernetes is the go-to choice. As highlighted by industry experts and reinforced by the latest market trends, Kubernetes not only addresses common pain points but also propels organizations towards efficiency and innovation.

Conclusion

In summary, Docker Compose and Kubernetes are crucial tools in container orchestration. Docker Compose simplifies multi-container application management on a single host, perfect for development and testing. Kubernetes excels in orchestrating containerized applications across clusters, providing scalability and resilience for production environments.

The key differences lie in scalability and orchestration. Kubernetes offers advanced self-healing and automated scaling, while Docker Compose requires additional effort for scaling. Kubernetes also shines in multi-node clusters, efficiently distributing workloads and ensuring geographical redundancy.

For service discovery and load balancing, Kubernetes has native mechanisms that ensure seamless communication and traffic distribution between services. Docker Compose facilitates inter-container communication but lacks built-in load balancing.

Both tools have robust ecosystems and strong community support. Docker Compose simplifies defining and sharing multi-container applications, while Kubernetes enjoys support from industry giants and offers a wide range of extensions and integrations.

Choosing between Docker Compose and Kubernetes depends on project requirements, deployment scale, and desired control over container management. Docker Compose suits single-host applications, while Kubernetes is ideal for larger, complex deployments. Together, they provide developers with a comprehensive toolkit for application development and deployment.