Introduction

The rapid advancement of artificial intelligence (AI) has unlocked new possibilities for innovation across diverse industries. Building generative AI applications on Amazon Web Services (AWS) requires a strategic approach: identifying specific use cases, securing access to high-quality data, and leveraging AWS services such as Amazon SageMaker for scalable model development and deployment. This article covers the key considerations and best practices for developing AI applications, highlighting real-world examples like Cepsa Química's approach to regulatory compliance through dynamic model updates.

It also addresses the ethical implications of AI, including algorithmic bias and data privacy, emphasizing the importance of responsible AI deployment.

Furthermore, the article explores best practices for modern application development, advocating for microservices architecture and serverless computing to enhance scalability and reduce costs. Security and compliance are critical, with AWS tools like IAM and KMS playing a vital role in safeguarding data. Finally, cost optimization strategies, such as utilizing AWS Cost Explorer and adopting pre-built models, are discussed to ensure efficient resource use.

Through these insights, organizations can successfully harness AI's transformative power while maintaining ethical standards and operational efficiency.

Key Considerations for Building Generative AI Applications on AWS

When building generative AI solutions on AWS, start by pinpointing the specific use cases that stand to benefit from AI capabilities. Assessing data needs and ensuring access to high-quality datasets are equally important steps. AWS services such as Amazon SageMaker support building, training, and deploying machine learning models at scale.

A noteworthy example comes from Cepsa Química's Safety, Sustainability & Energy Transition team, which employs a Retrieval Augmented Generation (RAG) approach to manage regulatory compliance. RAG supplements large language models (LLMs) with up-to-date information retrieved at query time, which addresses the static nature of pre-trained models and enables rapid adaptation to new regulations. The approach is especially useful where regulatory information changes often, and it lends itself to rapid prototyping in document search use cases.
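The retrieval step of a RAG workflow can be illustrated with a minimal sketch. This is not Cepsa Química's implementation: the document snippets, the identifiers, and the bag-of-words cosine similarity are all assumptions chosen to keep the example self-contained. A production system would more likely use an embedding model and a vector store, then send the assembled prompt to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical regulatory snippets; a real system would load these from a document store.
DOCUMENTS = {
    "reg-001": "Storage of flammable solvents requires ventilation and spill containment.",
    "reg-002": "Safety data sheets must be updated when a substance classification changes.",
    "reg-003": "Annual emissions reports are submitted to the environmental authority.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=2):
    """Return the ids of the top_k documents that share vocabulary with the question."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(text))), doc_id) for doc_id, text in documents.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_prompt(question, documents, top_k=2):
    """Assemble the prompt that would be sent to the LLM, grounded in retrieved text."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in retrieve(question, documents, top_k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("When must safety data sheets be updated?", DOCUMENTS))
```

Because the regulatory text lives in `DOCUMENTS` rather than in the model's weights, adding a new regulation only requires adding a new entry; the model itself is not retrained.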

Additionally, the ethical implications of AI, including algorithmic bias and data privacy, must be considered to maintain compliance and build trust with users. As AI becomes more integrated into daily operations, its role in driving innovation across business functions cannot be overstated. According to recent statistics, generative AI has the highest adoption in marketing and sales (34%) and in product or service development (23%).

Building responsible AI involves managing these ethical considerations diligently. As highlighted by industry experts, AI's pervasive impact on society raises important questions about its responsible deployment. By addressing these challenges, organizations can harness the transformative power of AI while ensuring ethical standards and user trust.

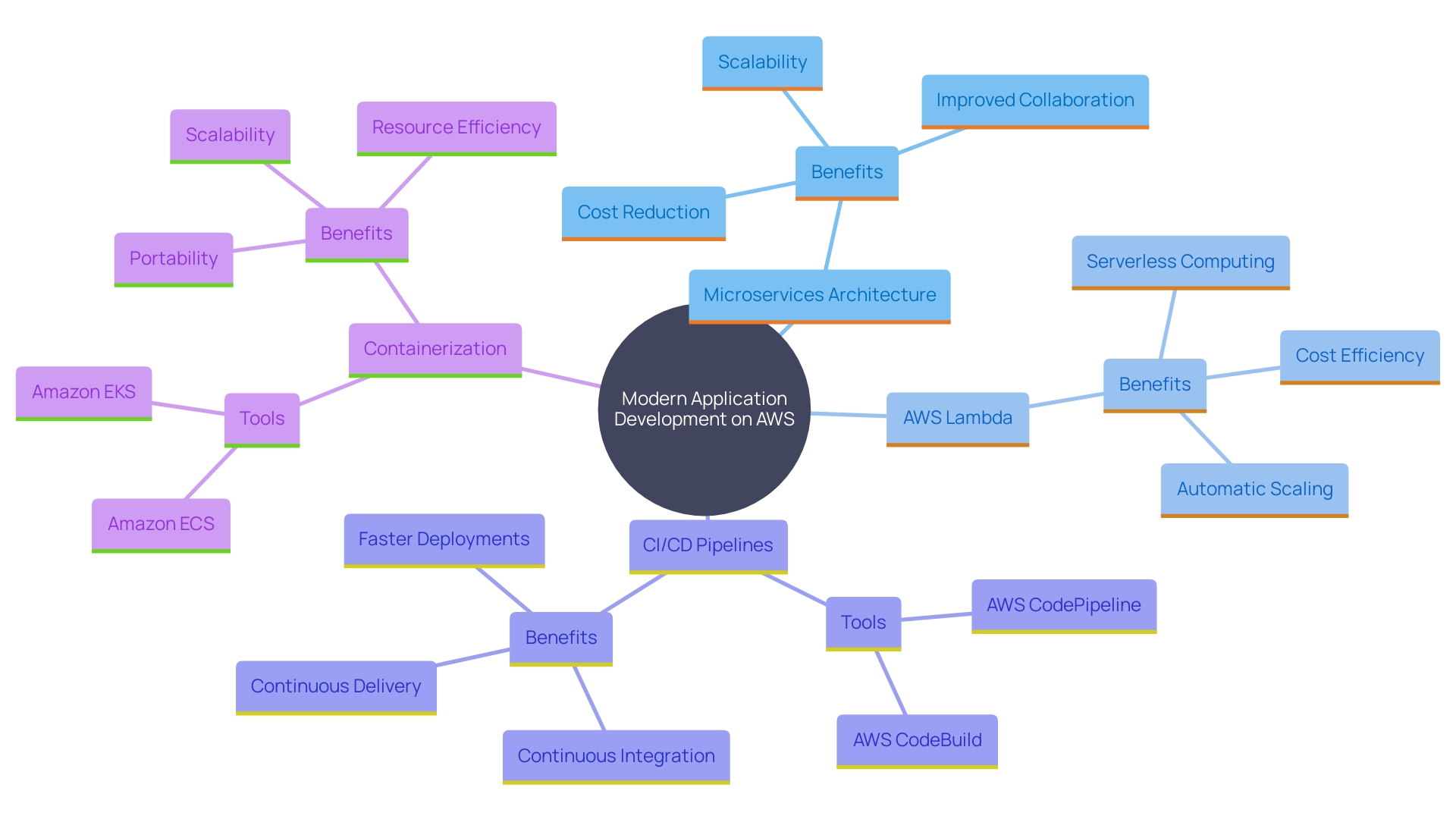

Best Practices for Modern Application Development

Modern application development on AWS should embrace microservices architecture to amplify scalability and flexibility. By utilizing AWS Lambda for serverless computing, organizations can significantly lower operational overhead and expenses. For instance, Dunelm Group plc transitioned to a serverless microservices architecture, reaping benefits such as decreased overall expenditure and enhanced business agility.
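As a rough illustration of what a single serverless microservice can look like, the sketch below shows a Python handler in the shape AWS Lambda expects, assuming it sits behind an API Gateway proxy integration. The stock-reservation use case and the `sku` and `quantity` fields are hypothetical and are not taken from Dunelm's architecture.

```python
import json


def _response(status, payload):
    """Build a response in the API Gateway proxy format (body must be a string)."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    """Entry point invoked by Lambda; `event` is assumed to carry a JSON string in `body`."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    if not isinstance(body, dict):
        return _response(400, {"error": "body must be a JSON object"})

    sku = body.get("sku")            # hypothetical field
    quantity = body.get("quantity")  # hypothetical field
    valid_quantity = isinstance(quantity, int) and not isinstance(quantity, bool) and quantity > 0
    if not isinstance(sku, str) or not valid_quantity:
        return _response(400, {"error": "sku (string) and quantity (positive integer) are required"})

    # A real service would write to a data store here; the sketch only echoes the request.
    return _response(200, {"sku": sku, "reserved": quantity})
```

Keeping the handler free of infrastructure concerns means it can be exercised locally by passing a plain dictionary as `event`, for example `lambda_handler({"body": '{"sku": "A1", "quantity": 2}'}, None)`.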

To streamline testing and deployment, implement CI/CD pipelines using AWS CodePipeline and AWS CodeBuild. This approach supports rapid iteration and improves collaboration among teams. An alternative is to run a CI/CD pipeline on Amazon EC2 instances with tools like Jenkins, which has also proven effective in automating build, test, and deployment processes. Either way, automation makes deployments consistent and reliable, enhancing the overall development workflow.

Moreover, adopting containerization with Amazon ECS or Amazon EKS improves resource management and deployment consistency. With ECS, it is crucial to analyze the resource requirements of the software and select appropriate instance types to optimize costs. ECS Service Auto Scaling can dynamically adjust the number of tasks based on demand, further optimizing resource utilization and cost-efficiency.
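To make the idea of demand-based task scaling concrete, the sketch below estimates a task count from an observed metric and a target value. It is a simplified proportional model for illustration only, not the exact algorithm the AWS scaling service applies, and the function name and parameters are assumptions.

```python
import math


def desired_task_count(current_tasks, observed_metric, target_metric, min_tasks, max_tasks):
    """Estimate how many tasks keep the average metric near its target.

    Simplified proportional model: if the tasks are running at 90% average CPU
    against a 60% target, roughly 90/60 times as many tasks are needed.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    proportional = math.ceil(current_tasks * observed_metric / target_metric)
    # Clamp to the configured capacity limits of the service.
    return max(min_tasks, min(max_tasks, proportional))


if __name__ == "__main__":
    # 4 tasks at 90% average CPU with a 60% target, limits of 2 to 10 tasks.
    print(desired_task_count(4, 90.0, 60.0, min_tasks=2, max_tasks=10))
```

The minimum and maximum limits matter as much as the target: the minimum protects availability during quiet periods, while the maximum caps spend during traffic spikes.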

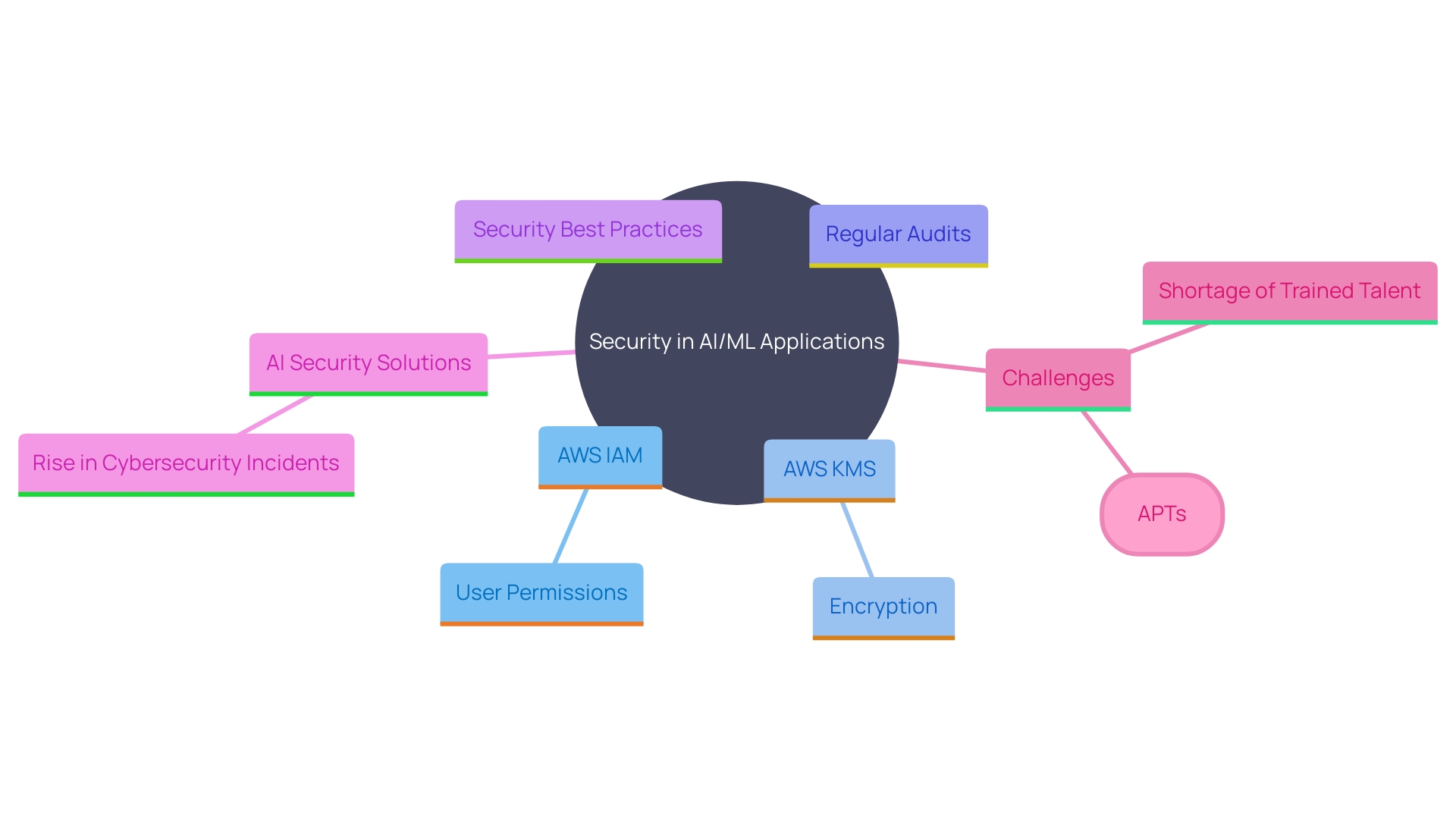

Security and Compliance in AI/ML Applications

Security is critical in AI/ML applications. Implement AWS Identity and Access Management (IAM) to control user permissions and enforce least-privilege access. Use AWS Key Management Service (KMS) to manage the keys that encrypt data at rest, and protect data in transit with TLS. Regular audits using AWS CloudTrail and AWS Config help maintain compliance with industry standards. Incorporate security best practices into the development lifecycle to mitigate vulnerabilities early.
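A least-privilege policy can be sketched as a small function that emits an IAM policy document. The bucket name, prefix, account number, and key identifier below are placeholders, and the choice of exactly two permissions (read objects, decrypt with one key) is an assumption about what a hypothetical training job needs.

```python
import json


def least_privilege_policy(bucket, prefix, kms_key_arn):
    """Return an IAM policy document that allows reading one S3 prefix and
    decrypting with one KMS key, and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadTrainingData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                "Sid": "DecryptWithDataKey",
                "Effect": "Allow",
                "Action": ["kms:Decrypt"],
                "Resource": kms_key_arn,
            },
        ],
    }


if __name__ == "__main__":
    policy = least_privilege_policy(
        bucket="example-ml-data",  # placeholder bucket name
        prefix="training",         # placeholder prefix
        kms_key_arn="arn:aws:kms:eu-west-1:111122223333:key/EXAMPLE-KEY-ID",  # placeholder ARN
    )
    print(json.dumps(policy, indent=2))
```

Scoping each statement to a specific resource, rather than using `*`, is what makes the policy least-privilege: a compromised job can read only its own training data and cannot use other keys.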

AI security solutions identify cyber threats faster and more reliably, which makes them crucial as cybersecurity incidents continue to rise. AI systems, such as chatbots and recommendation engines, thrive on large volumes of data, which underscores the need for robust protection of that data. Data protection has become more important than ever as organizations leverage AI's transformative potential.

An advanced persistent threat (APT) is a network attack in which an unauthorized user gains access to a network and remains undetected for an extended period. Threats of this kind cannot be countered with outdated methods, which highlights the importance of AI in cybersecurity. At the same time, the shortage of trained talent remains a significant challenge to advancing AI projects.

AI in cybersecurity is anticipated to grow with the increased demand for cloud-based security solutions among small and medium-sized organizations. This growth underscores the need for comprehensive security measures in AI/ML applications to protect data and maintain operational integrity.

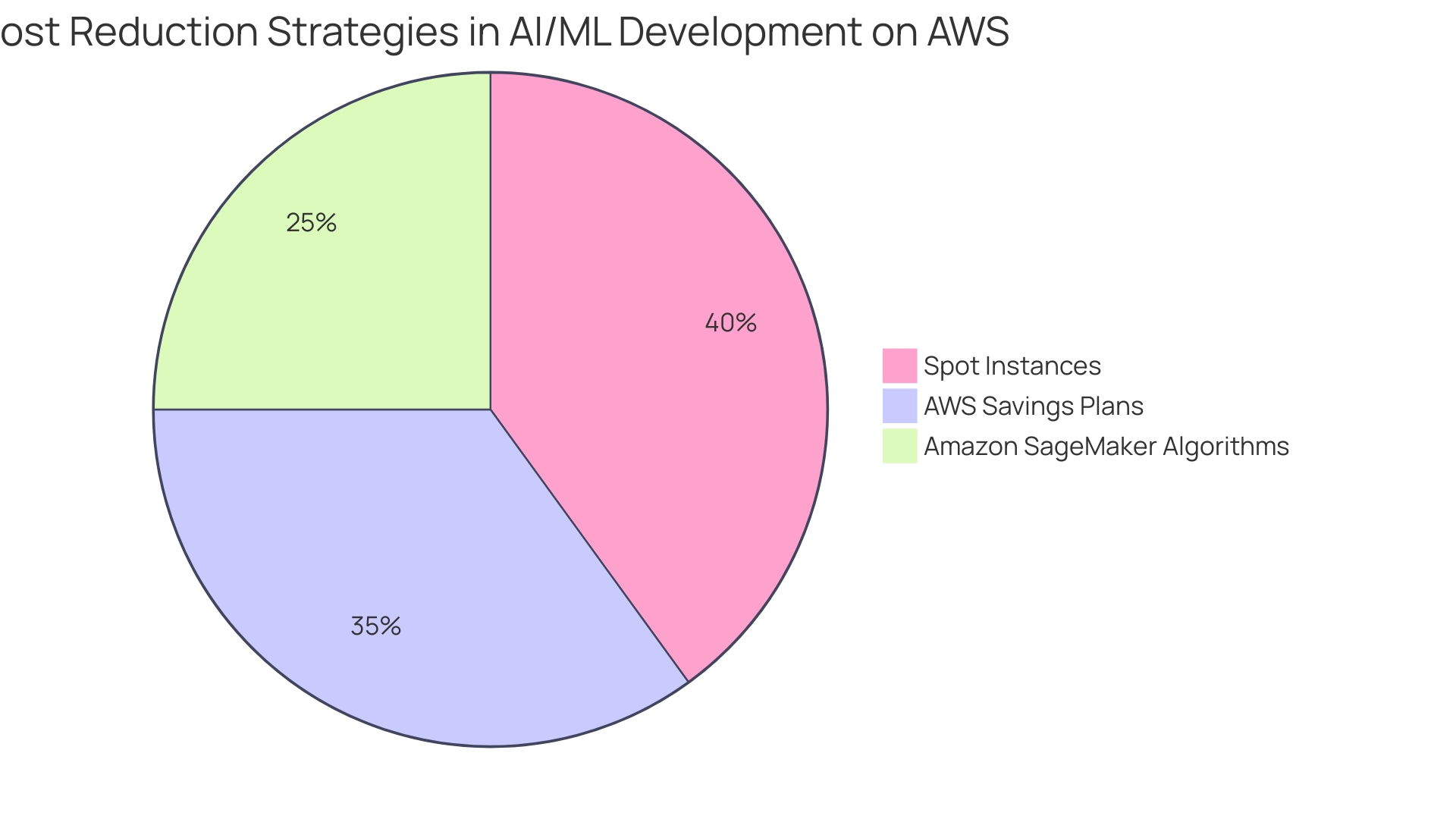

Cost Optimization in AI/ML Development

To optimize costs in AI/ML development on AWS, use AWS Cost Explorer and AWS Budgets to track usage closely and identify spending trends. This approach ensures that expenditures align with project needs. Choosing the appropriate instance types for each workload is essential; for example, Amazon EC2 Spot Instances can greatly lower expenses because they offer spare EC2 capacity at a discount. A customer example shows that incorporating Spot Instances effectively reduced expenses for AI model training.
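The trade-off behind Spot Instances can be worked through with simple arithmetic. The hourly rates and the interruption overhead below are hypothetical figures chosen for illustration, not AWS prices; the overhead stands for extra hours spent resuming from checkpoints after Spot capacity is reclaimed.

```python
def training_cost(hours, hourly_rate, instances=1):
    """Total cost of running `instances` machines for `hours` at `hourly_rate`."""
    return hours * hourly_rate * instances


def spot_training_cost(hours, spot_rate, instances=1, interruption_overhead=0.0):
    """Spot cost, with extra hours added for work repeated after interruptions.

    interruption_overhead is a fraction, e.g. 0.10 for 10% additional hours.
    """
    return training_cost(hours * (1 + interruption_overhead), spot_rate, instances)


def savings_percent(on_demand_cost, spot_cost):
    """Percentage saved by using Spot instead of On-Demand."""
    return (on_demand_cost - spot_cost) / on_demand_cost * 100


if __name__ == "__main__":
    # Hypothetical figures: 50 hours on 2 instances, 4.00 per hour On-Demand vs 1.40 on Spot.
    on_demand = training_cost(50, 4.00, instances=2)
    spot = spot_training_cost(50, 1.40, instances=2, interruption_overhead=0.10)
    print(f"On-Demand: {on_demand:.2f}, Spot: {spot:.2f}, saved: {savings_percent(on_demand, spot):.1f}%")
```

Under these assumptions the job still costs far less on Spot even after paying for repeated work, which is why checkpointing the training state is usually worth the effort for interruptible workloads.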

Use Amazon SageMaker's built-in algorithms and pre-built models to expedite training, saving both time and resources. Additionally, AWS Savings Plans suit predictable workloads and can provide substantial cost savings. ICL, a multinational manufacturing and mining corporation, has effectively integrated these AWS services to monitor its industrial equipment, achieving operational efficiency despite harsh conditions.

BMW Group's experience highlights the importance of modernizing IT landscapes with state-of-the-art services, advocating for cost-effective and easily maintainable cloud solutions. This aligns with the broader industry trend where AWS continues to expand its model offerings, providing diverse and flexible options tailored to various use cases and budgetary constraints.

Conclusion

The development of generative AI applications on AWS presents significant opportunities for organizations to enhance operational efficiency. Key steps include identifying relevant use cases and ensuring access to high-quality data. Advanced services like Amazon SageMaker facilitate effective model development and deployment, as demonstrated by Cepsa Química's approach to regulatory compliance through dynamic model updates.

Addressing ethical considerations, such as algorithmic bias and data privacy, is crucial for maintaining user trust and ensuring responsible AI deployment. As generative AI becomes increasingly prevalent in marketing and product development, organizations must navigate these challenges to fully leverage its potential.

Modern development practices, including microservices architecture and serverless computing, enhance scalability and reduce costs. Implementing CI/CD pipelines allows for rapid iterations and improved collaboration, while robust security measures like AWS Identity and Access Management (IAM) and Key Management Service (KMS) safeguard sensitive data.

Cost optimization strategies, such as utilizing AWS Cost Explorer and pre-built models, are essential for maximizing resources. Success stories from organizations like ICL and BMW Group highlight how these strategies can lead to operational efficiency while managing the complexities of AI/ML development.

In summary, by focusing on use case identification, ethical implications, modern practices, security, and cost optimization, organizations can effectively harness AI on AWS. This comprehensive approach not only drives innovation but also ensures ethical standards and operational efficiency, positioning organizations for long-term success in a competitive landscape.