Introduction

As organizations increasingly embrace the cloud, serverless computing has emerged as an approach that lets developers focus on application logic rather than infrastructure management. AWS Fargate is a prominent example: it runs containers without requiring teams to provision, patch, or maintain servers. While Fargate offers significant advantages, such as automatic scaling and reduced operational overhead, it also presents challenges that must be navigated carefully.

From cost considerations to security implications, understanding both the benefits and limitations of Fargate is crucial for organizations looking to optimize their serverless strategies. This article examines Fargate's role in serverless computing, covering its capabilities, its drawbacks, and best practices for implementing it effectively in modern cloud environments.

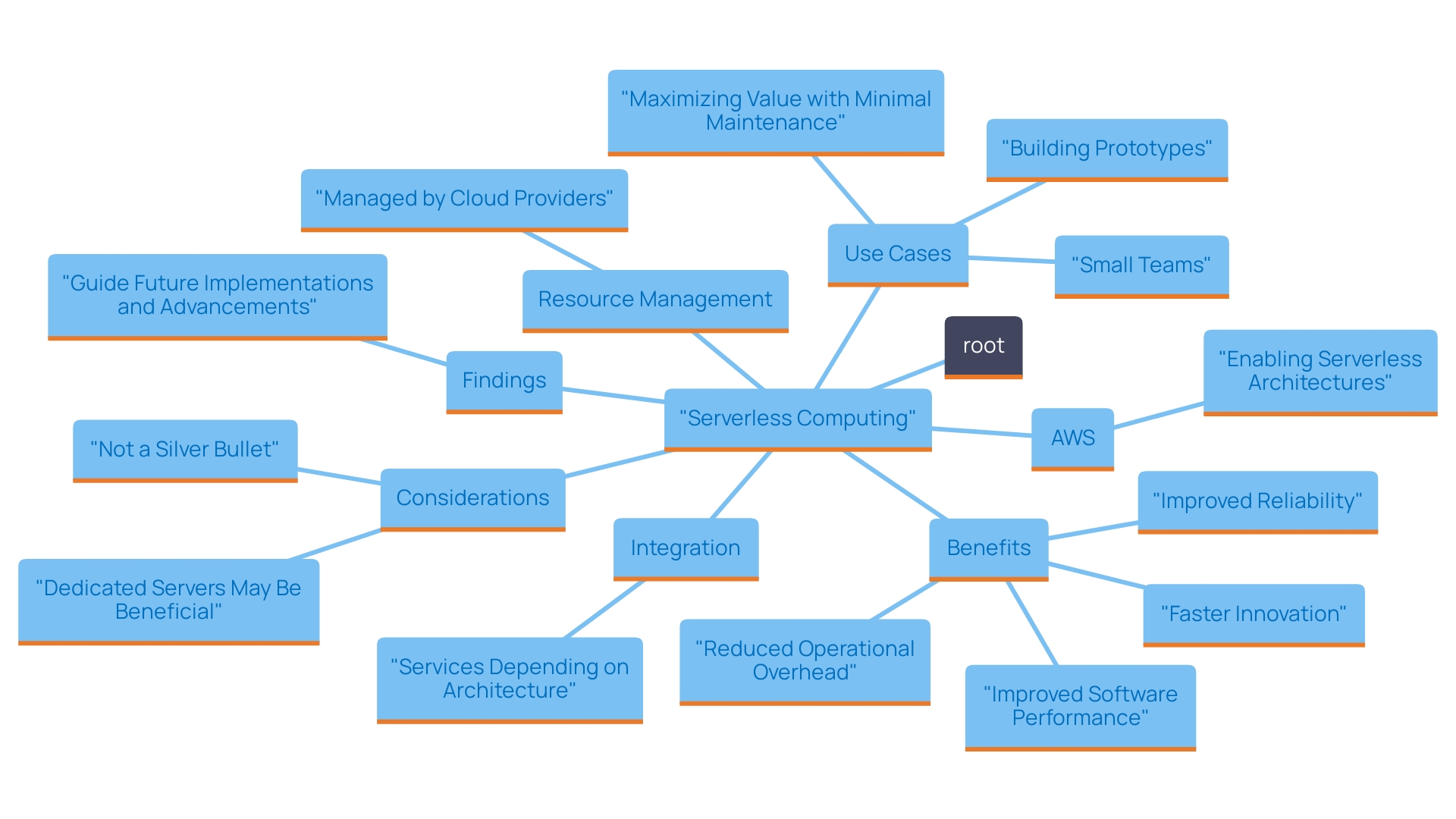

Understanding Serverless Computing and Fargate's Role

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of resources. This allows developers to focus on writing code without concern for the underlying infrastructure.

AWS Fargate lets users run containers without managing servers, which makes it a key player in the serverless paradigm. It abstracts away the server layer: teams specify the CPU and memory each task needs, and Fargate supplies the compute. Combined with the scaling features of the orchestrator it runs under, such as Amazon ECS or Amazon EKS, applications can scale automatically with demand.

This shift reduces operational overhead and can accelerate innovation, because developers can iterate faster and respond to market needs with agility. Because compute is sized per task rather than per server, organizations can also match resources more closely to what each workload needs, and offloading host management to AWS can simplify running reliable services.

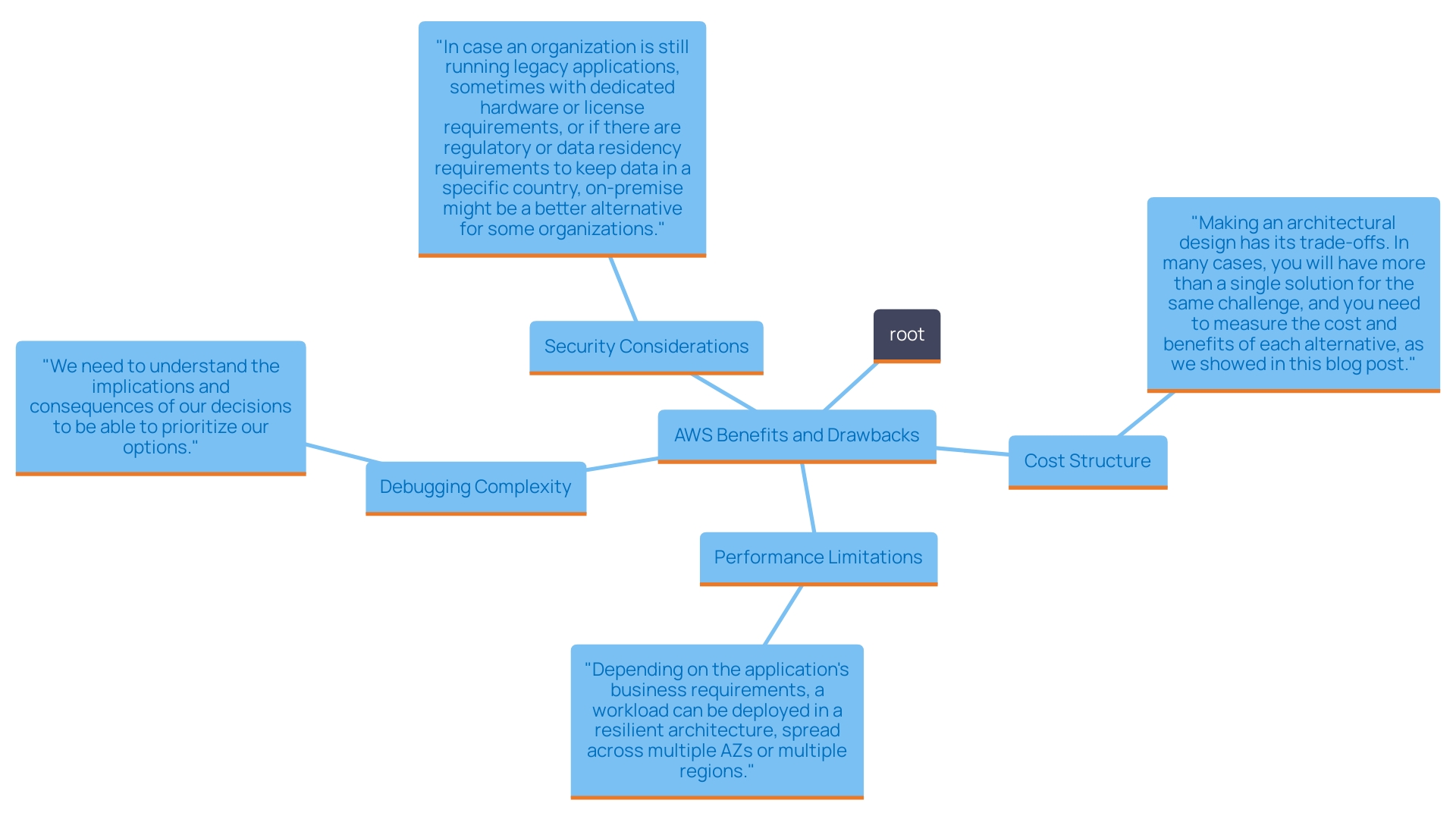

Evaluating the Drawbacks of Fargate in Serverless Architecture

Despite its many advantages, Fargate presents several challenges that organizations must consider. One notable drawback is its cost structure. Fargate bills for the vCPU and memory each task requests for as long as the task runs, and its per-unit price is generally higher than that of comparable self-managed EC2 capacity. Costs can therefore climb well above those of conventional server management, especially for programs with irregular or unpredictable workloads: sporadic usage spikes that trigger scale-out can substantially inflate monthly bills. Case studies underscore this challenge; some companies have had to carefully manage and optimize their use of Fargate to avoid spiraling expenses, which in some scenarios exceeded traditional server costs by as much as 30%.
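To see how billing for requested resources adds up, here is a minimal sketch that estimates monthly Fargate compute cost for a steady baseline plus a burst of extra tasks. The rates are placeholders, not real AWS prices, and the model ignores storage, data transfer, and billing minimums; the names `TaskSize` and `fargate_compute_cost` are hypothetical helpers for illustration.

```python
from dataclasses import dataclass

# PLACEHOLDER rates, not actual AWS prices. Look up current Fargate
# pricing for your Region, OS, and CPU architecture before using this.
HYPOTHETICAL_VCPU_HOUR_USD = 0.04
HYPOTHETICAL_GB_HOUR_USD = 0.004


@dataclass(frozen=True)
class TaskSize:
    vcpu: float       # vCPUs requested in the task definition
    memory_gb: float  # memory requested in the task definition, in GB


def fargate_compute_cost(size: TaskSize, task_count: int, hours: float,
                         vcpu_rate: float = HYPOTHETICAL_VCPU_HOUR_USD,
                         gb_rate: float = HYPOTHETICAL_GB_HOUR_USD) -> float:
    """Estimate compute cost from *requested* vCPU and memory.

    Fargate bills for what each task requests while it runs, not for
    what the application actually consumes, so an oversized task costs
    the same whether it is busy or idle.
    """
    if task_count < 0 or hours < 0:
        raise ValueError("task_count and hours must be non-negative")
    per_task_hour = size.vcpu * vcpu_rate + size.memory_gb * gb_rate
    return per_task_hour * task_count * hours


size = TaskSize(vcpu=1, memory_gb=2)
# Steady baseline: 4 tasks running for a ~730-hour month.
baseline = fargate_compute_cost(size, task_count=4, hours=730)
# Spiky month: the same baseline plus 20 extra tasks for 60 hours in total.
spiky = baseline + fargate_compute_cost(size, task_count=20, hours=60)
print(f"baseline: ${baseline:.2f}  with spikes: ${spiky:.2f}")
```

Substituting real rates, and running the same calculation for equivalent EC2 capacity, is how a figure like the 30% mentioned above could be checked for a specific workload.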

Performance limitations can also emerge, particularly for systems that require fine-grained control over the underlying infrastructure. In a serverless model, that control is abstracted away by design: on Fargate you cannot choose the instance type or tune the host, and privileged containers are not supported. Applications that need specific host configurations or consistently low-latency responses may struggle with these constraints, and each new task must be provisioned and pull its container image before it can serve traffic, which adds startup latency during scale-out. Debugging and monitoring can also be more complex. With no host to log in to, developers have fewer tools and less visibility into the containerized environment and must rely on logs, metrics, and features such as ECS Exec, which can make it harder to identify and resolve issues quickly.

From a security perspective, it is crucial to collect and analyze logs and network traffic to detect and respond to threats effectively. As Josh Davies, Principal Technical Product Marketing Manager at Alert Logic, points out, 'Following the best practices outlined above will mitigate against compromise; however, they are not standalone solutions.' In other words, adopting the platform is not a security strategy in itself; it needs to be backed by a thorough approach that includes continuous detection and response.

Organizations must weigh these factors against the advantages of the serverless model and confirm that it aligns with their specific operational needs and business objectives. A thorough understanding of both the advantages and limitations, supported by concrete examples and statistics, enables more informed decisions about adopting Fargate.

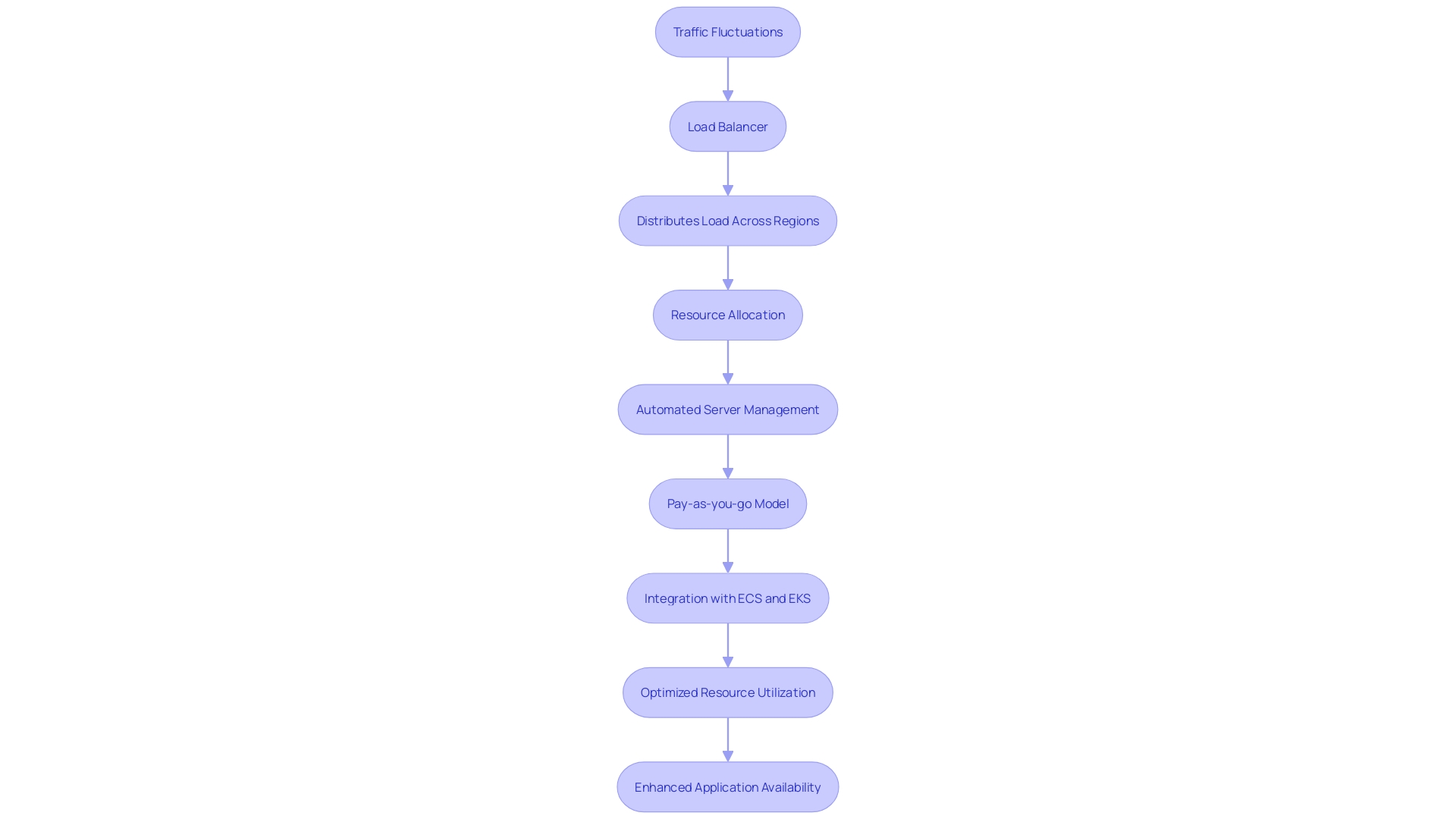

Scaling and Flexibility with Fargate's Serverless Model

A notable aspect of Fargate is that capacity can follow demand without manual server management. Fargate provisions compute for each task, and when it is paired with ECS Service Auto Scaling (or a Kubernetes autoscaler such as the Horizontal Pod Autoscaler on EKS), the number of tasks can grow and shrink with traffic, with no servers to provision or manage as load fluctuates. This flexibility is especially advantageous for companies experiencing rapid growth or seasonal surges in usage, and it helps keep applications available and responsive for customers.
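Fargate itself only supplies the compute; for an ECS service, the scaling decisions come from Application Auto Scaling. Below is a minimal sketch that builds the request parameters for a target-tracking policy keeping average service CPU near a target. The cluster and service names are hypothetical, and the capacity bounds, 60% target, and cooldowns are illustrative assumptions rather than recommendations.

```python
from typing import Any, Dict

# Hypothetical names for illustration only.
CLUSTER = "demo-cluster"
SERVICE = "web"


def cpu_target_tracking(cluster: str, service: str, min_tasks: int,
                        max_tasks: int, target_cpu_percent: float = 60.0
                        ) -> Dict[str, Dict[str, Any]]:
    """Build kwargs for Application Auto Scaling's register_scalable_target
    and put_scaling_policy calls on an ECS service's desired task count."""
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("require 0 <= min_tasks <= max_tasks")
    target = {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
    }
    return {
        "scalable_target": {**target, "MinCapacity": min_tasks,
                            "MaxCapacity": max_tasks},
        "scaling_policy": {
            **target,
            "PolicyName": f"{service}-cpu-target-tracking",
            "PolicyType": "TargetTrackingScaling",
            "TargetTrackingScalingPolicyConfiguration": {
                "TargetValue": target_cpu_percent,
                "PredefinedMetricSpecification": {
                    "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
                },
                "ScaleOutCooldown": 60,   # seconds
                "ScaleInCooldown": 300,   # seconds; scale in more cautiously
            },
        },
    }


def apply_scaling(autoscaling_client, requests: Dict[str, Dict[str, Any]]) -> None:
    """Send the requests, e.g. with boto3.client("application-autoscaling")."""
    autoscaling_client.register_scalable_target(**requests["scalable_target"])
    autoscaling_client.put_scaling_policy(**requests["scaling_policy"])


requests = cpu_target_tracking(CLUSTER, SERVICE, min_tasks=2, max_tasks=20)
# apply_scaling(boto3.client("application-autoscaling"), requests)  # needs AWS credentials
```

Building the parameters separately from the API calls keeps the policy easy to review and test before anything touches a live account.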

The practical value of this flexibility is illustrated by case studies such as one involving Spot by NetApp, a solution that optimizes container infrastructure on AWS so that clusters have the resources they need while minimizing costs. By automating resource allocation, it lets CloudOps teams concentrate on workloads instead of overseeing container infrastructure, which is the same practical benefit the serverless model aims to deliver.

Moreover, Fargate uses a pay-as-you-go model in which you pay for the resources your tasks request while they run, which, as AWS states, eliminates idle instances and reduces operational costs. This model matters for businesses looking to optimize resource utilization and avoid the costs of over-provisioning. Scale is still bounded, however: a hard limit of 65,535 pods is sometimes cited, and in practice the number of tasks or pods an account can run is governed by its Fargate service quotas, which should be checked (and raised if necessary) before relying on large bursts.

Fargate does not run on its own: it is used through Amazon ECS, Amazon EKS, or AWS Batch, acting as a serverless compute layer beneath these orchestrators. This places Fargate within the larger AWS container ecosystem: the orchestrator schedules the work, and Fargate provides the compute without any servers for the customer to manage. By relying on it, organizations can shift their focus from managing infrastructure to driving innovation and delivering value, strengthening their competitive position in the market.
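Because Fargate is used through an orchestrator, running a container on it with ECS is an ECS API call that names Fargate as the launch type. The sketch below builds parameters for the ECS `RunTask` API with `launchType="FARGATE"`; Fargate tasks use `awsvpc` networking, so each call needs subnets and security groups. The cluster, task definition, subnet, and security group IDs are hypothetical placeholders, and `fargate_run_task_params` is an illustrative helper, not an AWS function.

```python
from typing import Any, Dict, List


def fargate_run_task_params(cluster: str, task_definition: str,
                            subnets: List[str], security_groups: List[str],
                            count: int = 1,
                            public_ip: bool = False) -> Dict[str, Any]:
    """Build kwargs for the ECS RunTask API using the Fargate launch type."""
    if not 1 <= count <= 10:
        # RunTask accepts at most 10 tasks per call.
        raise ValueError("count must be between 1 and 10")
    if not subnets:
        raise ValueError("awsvpc networking requires at least one subnet")
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "count": count,
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                "assignPublicIp": "ENABLED" if public_ip else "DISABLED",
            }
        },
    }


params = fargate_run_task_params(
    cluster="demo-cluster",                  # hypothetical
    task_definition="demo-task:1",           # hypothetical family:revision
    subnets=["subnet-0123456789abcdef0"],    # hypothetical
    security_groups=["sg-0123456789abcdef0"],
)
# boto3.client("ecs").run_task(**params)  # needs AWS credentials
```

On EKS, the equivalent step is a Fargate profile that selects which pods run on Fargate, and AWS Batch uses Fargate compute environments.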

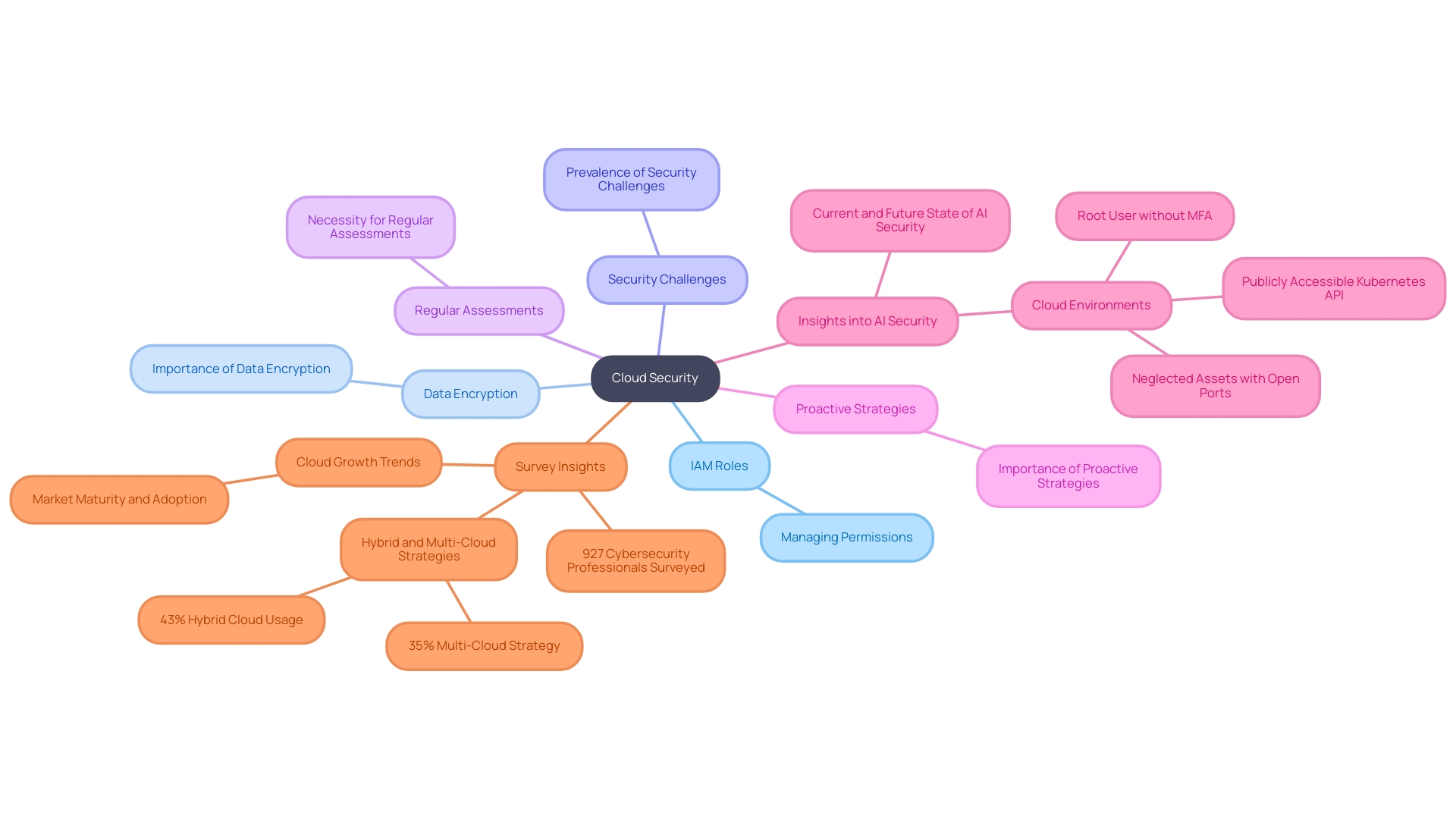

Security Considerations in Serverless Deployments

In serverless architectures such as AWS Fargate, security must be a top priority to protect systems and data. Under the shared responsibility model, AWS secures the underlying infrastructure, but developers remain responsible for how their applications, data, and permissions are configured. This includes strategic use of IAM roles for precise permission management, as highlighted by Senior AWS Cloud Engineer Danny Steenman, who remarked, 'Roles are the most common way to manage these interactions and are the only option in many cases.'

To ensure comprehensive security, sensitive data should be encrypted both in transit and at rest. Configuring IAM roles correctly is equally important, and ECS distinguishes two of them: the task execution role, which ECS uses to start containers (for example, pulling images from Amazon ECR and sending logs to CloudWatch), and the task role, which the application code assumes to access other AWS services. Recent statistics indicate that 80% of organizations face security challenges with cloud-based architectures, underscoring the need for stringent measures. A case study delineating the distinction between these roles illustrates how each is needed in practice, highlighting the practical implications of IAM role design.
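The split between the two roles shows up directly in an ECS task definition. Here is a minimal sketch of a Fargate task definition in which `executionRoleArn` is the role ECS uses to pull the image and ship logs, and `taskRoleArn` is the role the application assumes. Both roles trust the `ecs-tasks.amazonaws.com` service principal. The family name, image URI, account ID, Region, and role names are hypothetical; the execution role would typically carry the AWS managed policy `AmazonECSTaskExecutionRolePolicy`, while the task role should get only the permissions the application needs.

```python
import json
from typing import Any, Dict

# Trust policy allowing ECS tasks to assume a role; used for both roles.
ECS_TASKS_TRUST_POLICY: Dict[str, Any] = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ecs-tasks.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}


def fargate_task_definition(family: str, image: str, execution_role_arn: str,
                            task_role_arn: str, region: str,
                            cpu: str = "256", memory: str = "512"
                            ) -> Dict[str, Any]:
    """Build kwargs for ECS register_task_definition targeting Fargate."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",          # required for Fargate tasks
        "cpu": cpu,                       # task-level CPU units (1024 = 1 vCPU)
        "memory": memory,                 # task-level memory in MiB
        # Used by ECS/Fargate to pull the image and write logs.
        "executionRoleArn": execution_role_arn,
        # Assumed by the application code to call other AWS services.
        "taskRoleArn": task_role_arn,
        "containerDefinitions": [{
            "name": family,
            "image": image,
            "essential": True,
            "logConfiguration": {
                "logDriver": "awslogs",   # ships stdout/stderr to CloudWatch Logs
                "options": {
                    "awslogs-group": f"/ecs/{family}",
                    "awslogs-region": region,
                    "awslogs-stream-prefix": family,
                },
            },
        }],
    }


ACCOUNT = "123456789012"  # placeholder account ID
task_def = fargate_task_definition(
    family="demo-app",
    image=f"{ACCOUNT}.dkr.ecr.us-east-1.amazonaws.com/demo-app:latest",
    execution_role_arn=f"arn:aws:iam::{ACCOUNT}:role/demo-app-execution",
    task_role_arn=f"arn:aws:iam::{ACCOUNT}:role/demo-app-task",
    region="us-east-1",
)
trust_policy_json = json.dumps(ECS_TASKS_TRUST_POLICY)
# boto3.client("iam").create_role(RoleName=..., AssumeRolePolicyDocument=trust_policy_json)
# boto3.client("ecs").register_task_definition(**task_def)
```

Keeping the two ARNs distinct means a compromised application can use only the task role's permissions, not the broader ones needed to pull images and write logs.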

Organizations must also conduct regular security assessments and adopt proactive vulnerability management strategies. Building security best practices into the development lifecycle reduces risk and improves the resilience of cloud-based services. This proactive approach includes continuously monitoring logs for unusual activity and staying informed about emerging security challenges in cloud-based architectures, which have reportedly grown by 30% year over year.
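Monitoring logs for unusual activity can start as simply as periodically querying CloudWatch Logs for suspicious terms. The sketch below pages through recent events with the boto3 `filter_log_events` paginator and tallies keyword hits. The log group name, the keywords, and the 15-minute window are hypothetical choices; a production setup would more likely use metric filters with CloudWatch alarms or a managed threat-detection service such as Amazon GuardDuty.

```python
import time
from collections import Counter
from typing import Any, Dict, Iterable, Iterator, List

# Hypothetical keywords; tune them to what your application actually logs.
SUSPICIOUS_KEYWORDS = ["AccessDenied", "Unauthorized", "authentication failed"]


def recent_events(logs_client, log_group: str, filter_pattern: str = "",
                  lookback_minutes: int = 15) -> Iterator[Dict[str, Any]]:
    """Yield CloudWatch Logs events from the last `lookback_minutes`.

    `logs_client` is expected to be boto3.client("logs").
    """
    end_ms = int(time.time() * 1000)
    start_ms = end_ms - lookback_minutes * 60 * 1000
    kwargs: Dict[str, Any] = {"logGroupName": log_group,
                              "startTime": start_ms, "endTime": end_ms}
    if filter_pattern:  # omitted entirely to match every event
        kwargs["filterPattern"] = filter_pattern
    paginator = logs_client.get_paginator("filter_log_events")
    for page in paginator.paginate(**kwargs):
        yield from page.get("events", [])


def tally_keywords(events: Iterable[Dict[str, Any]],
                   keywords: List[str]) -> Counter:
    """Count how many events mention each keyword (case-insensitive)."""
    counts: Counter = Counter()
    lowered = [(kw, kw.lower()) for kw in keywords]
    for event in events:
        message = event.get("message", "").lower()
        for original, kw in lowered:
            if kw in message:
                counts[original] += 1
    return counts


sample = [
    {"message": "GET /admin 403 AccessDenied"},
    {"message": "user login: authentication failed"},
    {"message": "GET /health 200"},
]
print(tally_keywords(sample, SUSPICIOUS_KEYWORDS))
# Live use (needs AWS credentials; the log group name is hypothetical):
# events = recent_events(boto3.client("logs"), "/ecs/demo-app")
# print(tally_keywords(events, SUSPICIOUS_KEYWORDS))
```

Separating retrieval from analysis lets the tallying logic be tested offline, as with the `sample` list above, before it is pointed at real log groups.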

In summary, IAM roles are central to securing Fargate applications. By using these roles effectively and following current best practices, organizations can harden their serverless deployments against potential threats and maintain a secure, reliable cloud environment.

Best Practices for Implementing Fargate in Your Organization

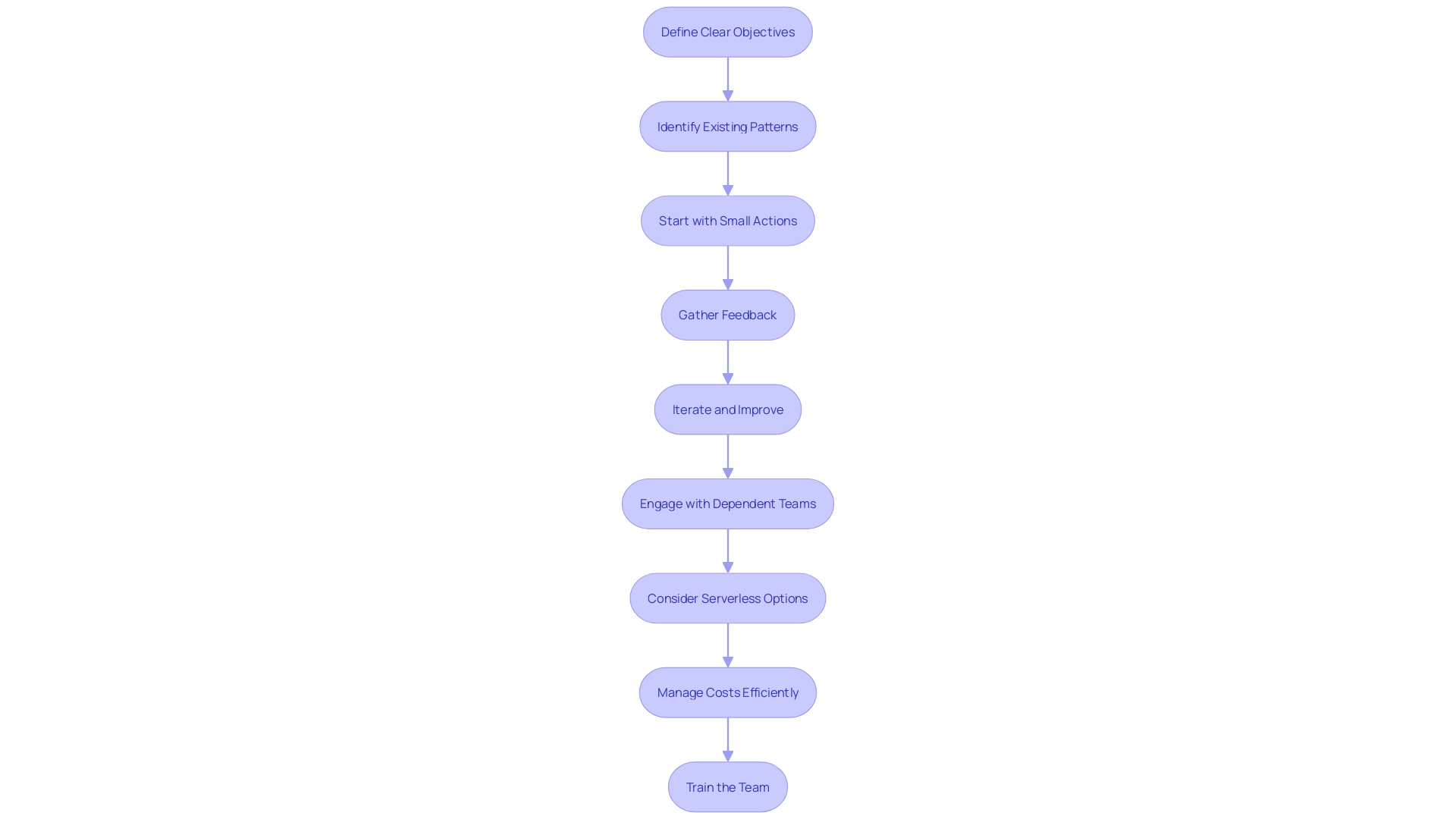

To implement Fargate successfully within your organization, consider the following best practices:

- Define Clear Objectives: Identify the specific business goals you aim to achieve with Fargate, ensuring alignment with your overall technology strategy.

- Optimize Resource Allocation: Monitor resource usage and right-size each task's CPU and memory to avoid unnecessary costs while maintaining performance.

- Automate Testing and Deployment: Leverage CI/CD pipelines to streamline testing and deployment processes, enhancing agility and reliability.

- Implement Robust Monitoring: Use monitoring tools such as Amazon CloudWatch (including Container Insights) to gain insight into application performance and detect issues proactively.

- Train Your Team: Ensure your team is equipped with the necessary skills and knowledge to manage serverless deployments effectively.
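For the resource-allocation practice, one concrete step is picking the smallest Fargate task size that covers observed peak usage plus headroom. Fargate accepts only certain CPU/memory pairings; the table in this sketch reflects the Linux combinations listed in the Amazon ECS documentation as we understand them and should be checked against the current docs. The 20% headroom and the tie-breaking rule (fewest CPU units first, then least memory) are assumptions for illustration, and `smallest_fit` is a hypothetical helper.

```python
from typing import Dict, List, Optional, Tuple


def _gib_steps(low_gib: int, high_gib: int, step_gib: int) -> List[int]:
    """Memory options in MiB from low_gib to high_gib inclusive."""
    return [g * 1024 for g in range(low_gib, high_gib + 1, step_gib)]


# CPU units (1024 = 1 vCPU) -> allowed memory in MiB for Linux tasks.
# Verify against the current ECS documentation before relying on it.
FARGATE_SIZES: Dict[int, List[int]] = {
    256: [512, 1024, 2048],
    512: _gib_steps(1, 4, 1),
    1024: _gib_steps(2, 8, 1),
    2048: _gib_steps(4, 16, 1),
    4096: _gib_steps(8, 30, 1),
    8192: _gib_steps(16, 60, 4),
    16384: _gib_steps(32, 120, 8),
}


def smallest_fit(peak_cpu_units: float, peak_memory_mib: float,
                 headroom: float = 0.2) -> Optional[Tuple[int, int]]:
    """Return the smallest (cpu, memory) pair covering peak usage plus
    headroom, or None if no single Fargate task size is large enough."""
    need_cpu = peak_cpu_units * (1 + headroom)
    need_mem = peak_memory_mib * (1 + headroom)
    for cpu in sorted(FARGATE_SIZES):          # fewest CPU units first
        if cpu < need_cpu:
            continue
        for mem in FARGATE_SIZES[cpu]:         # each list is ascending
            if mem >= need_mem:
                return cpu, mem
    return None


print(smallest_fit(200, 900))    # (256, 2048)
print(smallest_fit(700, 3000))   # (1024, 4096)
```

Peak CPU and memory figures would come from monitoring data such as CloudWatch metrics; feeding in averages instead of peaks would undersize tasks.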

By following these practices, organizations can maximize the benefits of Fargate while minimizing potential pitfalls.

Conclusion

AWS Fargate represents a significant advance in serverless computing, enabling organizations to deploy containerized applications without managing servers. By abstracting the server management layer, Fargate lets developers concentrate on writing code and driving innovation, while automatic scaling through its orchestrators and a pay-as-you-go pricing model can improve resource utilization.

However, it is imperative to recognize the challenges that accompany this transformative service. The potential for increased costs, performance limitations, and heightened security responsibilities necessitate a careful evaluation of Fargate's fit within an organization's overall strategy. By understanding both the advantages and drawbacks, businesses can make informed decisions that align with their operational needs and budgetary constraints.

Implementing best practices, such as defining clear objectives and optimizing resource allocation, can further enhance the value derived from AWS Fargate. Organizations that invest in comprehensive security strategies and proactive monitoring will be better positioned to mitigate risks and maximize the benefits of this serverless model.

In conclusion, AWS Fargate is a powerful tool for modern cloud environments, offering the promise of greater agility and efficiency. By approaching its complexities strategically, organizations can realize the benefits of serverless computing and position themselves for success in a rapidly evolving digital landscape.